✏️ 编者按:

在数据量指数型增长、数据类型日益丰富的今天,标量数据存储已不能满足日新月异的数据场景。我们要如何存储和管理图像、视频、文本等非结构化数据?推荐系统、语义理解、新药发现、股票市场分析……向量数据库又是如何应对这些复杂场景的?

🤵♂️ 作者简介:

Frank Liu,Zilliz AI 技术运营与产品专家,拥有斯坦福大学电子电气工程硕士学位,曾就职于美国雅虎公司,后回国创业,在人工智能算法研究和应用方面拥有多年经验。Frank 目前在 Zilliz 负责 Towhee 项目的技术与产品运营,围绕社区构建开源技术生态体系,全面推进用户侧的转化落地与场景实现。

Relational is not Enough

X2vec: A New Way to Understand Data

* Early computer vision and image processing relied on local feature descriptors to turn an image into a “bag” of embedding vectors – one vector for each detected keypoint. SIFT, SURF, and ORB are three well-known feature descriptors you may have heard of. These feature descriptors, while useful for matching images with one another, proved to be a fairly poor way to represent audio (via spectrograms) and images.

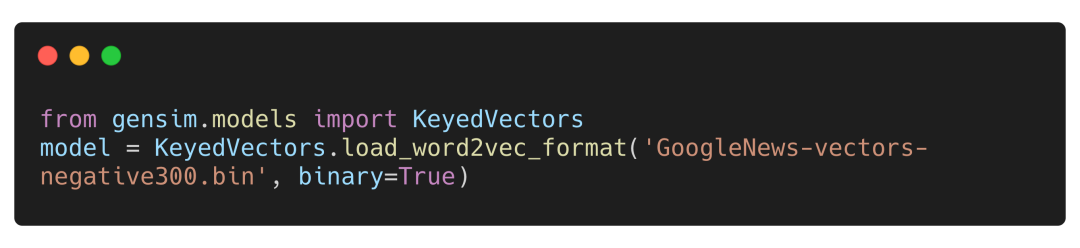

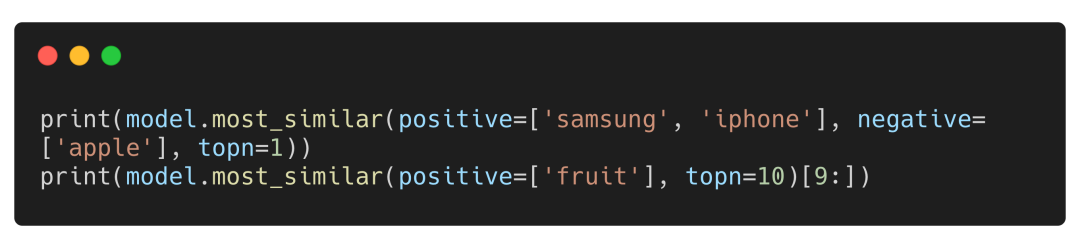

Example: Apple, the company, the fruit, ... or both?

The word "apple" can refer to both the company as well as the delicious red fruit. In this example, we can see that Word2Vec retains both meanings.

[('droid_x', 0.6324754953384399)]

[('apple', 0.6410146951675415)]

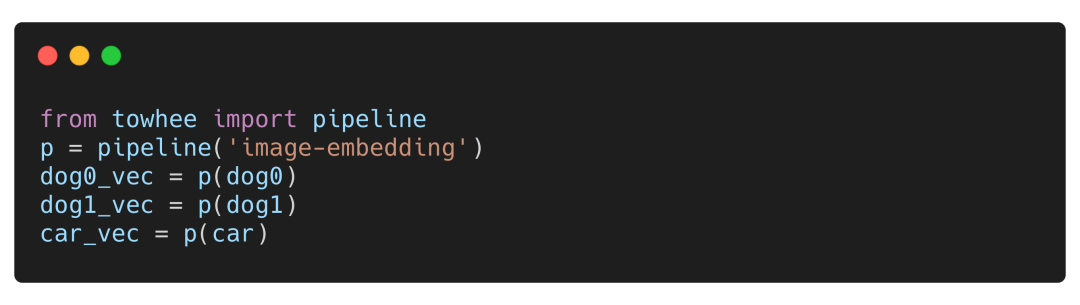

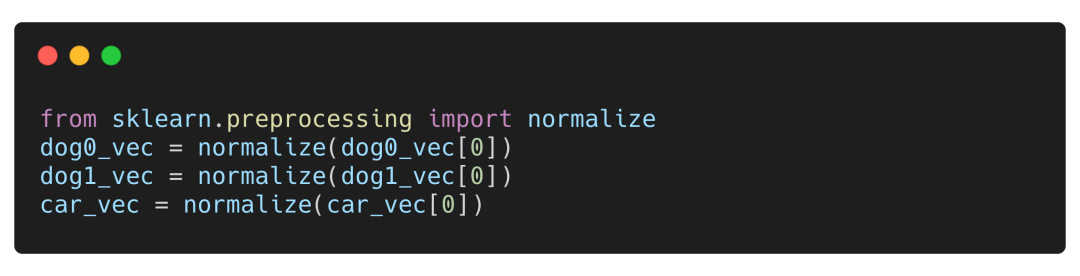

Generating embeddings

Now let's use towhee to generate embeddings for our images.

Now let's compute distances

dog0 to dog1 distance: 0.59794164

dog0 to car distance: 1.1380062

Searching Across Vectors

Putting It All Together

Scalable: Embedding vectors are fairly small in terms of absolute memory, but to facilitate read and write speeds, they are usually stored in-memory (disk-based NN/ANN search is a topic for another blog post). When scaling to billions of embedding vectors and beyond, storage and compute quickly become unmanageable for a single machine. Sharding can solve this problem, but this requires splitting the indexes across multiple machines as well. Reliable: Modern relational databases are fault-tolerant. Replication allows cloud native enterprise databases to avoid having single points of failure, enabling graceful startup and shutdown. Vector databases are no different, and should be able to handle internal faults without data loss and with minimal operational impact. Fast: Yes, query and write speeds are important, even for vector databases. An increasingly common use case is processing and indexing database inputs in realtime. For platforms such as Snapchat and Instagram, which can have hundreds or thousands of new photos (a type of unstructured data) uploaded per second, speed becomes an incredibly important factor.

The World’s Most Advanced Vector Database

文章转载自ZILLIZ,如果涉嫌侵权,请发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。