Manually Installing a TiDB Cluster: Deploying the PD, TiKV, and TiDB Server Nodes

【TiDB Overview】

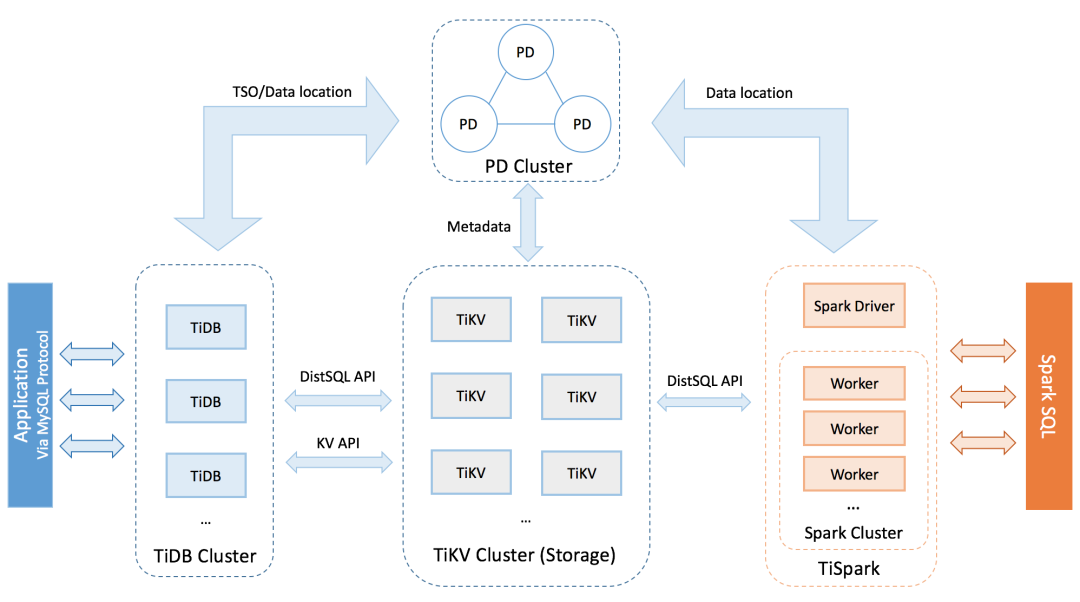

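TiDB is a NewSQL database. Its upper layer speaks the MySQL protocol, so applications in any language can access TiDB with standard MySQL drivers and clients. The storage layer can be scaled out dynamically and uses RocksDB as the underlying storage engine. TiDB aims to provide a one-stop solution for both OLTP and OLAP workloads.

TiDB cluster architecture:

A TiDB cluster consists of three types of nodes: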

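TiDB Server: TiDB Server receives SQL requests and handles the SQL-related logic. It asks PD for the addresses of the TiKV nodes that hold the data needed for the computation, exchanges data with TiKV, and returns the final result. TiDB Server is stateless: it stores no data itself and is responsible only for computation. It can be scaled out horizontally and can expose a single access address through a load balancer such as LVS, HAProxy, or F5.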

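PD Server: Placement Driver (PD) is the management module of the whole cluster. It has three main jobs: first, storing the cluster metadata (which TiKV node holds a given key); second, scheduling and load balancing the TiKV cluster (for example data migration and Raft group leader transfer); third, allocating globally unique, monotonically increasing transaction IDs.

PD uses the Raft protocol to keep its data safe. The Raft leader handles all operations, while the remaining PD servers exist only to provide high availability. Deploying an odd number of PD nodes is recommended.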

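TiKV Server: TiKV Server stores the data. Seen from outside, TiKV is a distributed, transactional key-value storage engine. The basic unit of storage is the Region. Each Region holds the data for one key range (a left-closed, right-open interval from StartKey to EndKey), and each TiKV node serves many Regions. TiKV replicates data with the Raft protocol to keep it consistent and to survive failures. Replicas are managed per Region: the copies of a Region on different nodes form a Raft group and are replicas of one another. PD schedules the load balancing of data across TiKV nodes, again with the Region as the unit.

When installing a TiDB cluster for the first time, install the node types in this order: PD nodes first, then TiKV nodes, then TiDB server nodes. TiKV and TiDB server both need a running PD cluster to join.

(Source: https://pingcap.com/docs-cn/stable/architecture/)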

【Installation Environment】

1. OS version: CentOS 7.3

2. Disk: SSD

3. Raise the open-file limit. Run vim /etc/security/limits.d/limits.conf and add:

* soft nofile 829400

* hard nofile 829400
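
The limits above only apply to sessions started after the change, so it is worth confirming them from a fresh login shell. The following is a minimal sketch, assuming the target value 829400 from the settings above; the function name check_nofile is hypothetical.

```shell
#!/bin/sh
# check_nofile REQUIRED
# Succeeds if the soft open-file limit of the current shell is at least
# REQUIRED, or if the limit is reported as "unlimited".
check_nofile() {
    required=$1
    current=$(ulimit -n)
    if [ "$current" = "unlimited" ]; then
        return 0
    fi
    [ "$current" -ge "$required" ]
}

if check_nofile 829400; then
    echo "nofile limit OK: $(ulimit -n)"
else
    echo "nofile limit too low: $(ulimit -n) (want >= 829400)"
fi
```

If the check reports a low value, log out and back in before starting any TiDB process.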

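Directory conventions:

Each instance is identified by its port, written here as PORT, for example 5001.

Install directory: /usr/local/tidb_5001

Data directory: /data/tidb_5001

Log directory: /data/tidblog_5001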
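
Because every path is derived from the port, the directory names can be generated instead of typed by hand. This is a small sketch of that convention; the function name tidb_dirs is hypothetical, and the mkdir command is only printed for review rather than executed.

```shell
#!/bin/sh
# tidb_dirs PORT
# Prints the install, data, and log directories for the instance on PORT,
# one per line, following the directory convention above.
tidb_dirs() {
    port=$1
    echo "/usr/local/tidb_${port}"
    echo "/data/tidb_${port}"
    echo "/data/tidblog_${port}"
}

# Show the command that would create the directories for port 5001.
echo "mkdir -p $(tidb_dirs 5001 | tr '\n' ' ')"
```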

【TiDB Installation Media】

1. Use git and tidb-ansible to download the installation packages into the downloads directory:

git clone https://github.com/pingcap/tidb-ansible.git

cd tidb-ansible

ansible-playbook local_prepare.yml

cd downloads

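Note: alternatively, download the packages directly from the official website.

2. The downloads directory now contains the TiDB-related binaries listed below. Copy them into /usr/local/tidb_5001/bin: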

binlogctl drainer etcdctl loader mydumper mysql mysqladmin mysqldump mysqlshow pd-ctl pd-recover pd-server pump reparo tidb-ctl tidb-server tikv-ctl tikv-server
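
Before going further, it can help to confirm that the binaries needed by the later steps actually landed in the bin directory. The sketch below assumes the target directory from step 2; the function name missing_binaries is hypothetical.

```shell
#!/bin/sh
# missing_binaries DIR NAME...
# Prints each NAME that is not an executable file inside DIR.
# Prints nothing when every binary is present.
missing_binaries() {
    dir=$1
    shift
    for name in "$@"; do
        [ -x "$dir/$name" ] || echo "$name"
    done
}

# Binaries used in the installation steps that follow.
missing_binaries /usr/local/tidb_5001/bin pd-server pd-ctl tikv-server tidb-server mysql
```

An empty result means all five binaries are in place.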

3. Install the dependency package with yum:

yum install libcgroup

【Installing the TiDB Cluster】

Prepare three machines. Assume the following deployment plan:

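| Machine IP | PD | TiKV | TiDB Server |
| --- | --- | --- | --- |
| 192.168.1.11 | Install directory: /usr/local/tidb_5101; data directory: /data/tidb_5101; log directory: /data/tidblog_5101; config file: /data/tidb_5101/tidb.conf | Install directory: /usr/local/tidb_5301; data directory: /data/tidb_5301; log directory: /data/tidblog_5301; config file: /data/tidb_5301/tidb.conf | Install directory: /usr/local/tidb_5501; data directory: /data/tidb_5501; log directory: /data/tidblog_5501; config file: /data/tidb_5501/tidb.conf |
| 192.168.1.12 | Same as above | Same as above | Same as above |
| 192.168.1.13 | Same as above | Same as above | Same as above |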

【Installing the PD Nodes】

Configuration file (main parameters):

vim /data/tidb_5101/tidb.conf

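client-urls: the address PD listens on for client requests. The port here is 5101.

peer-urls: the address PD nodes use to communicate with each other. The port here is the client port plus 100, that is 5101 + 100 = 5201.

name: the name of this PD node.

data-dir: the data directory.

initial-cluster: the PD nodes used to bootstrap the cluster, in the format name=peer-urls. List all three nodes here so the cluster metadata is initialized with them.

Note: in the configuration file of every TiDB node type, the leading part that is not under a [] header holds the top-level settings. Settings under a bracketed header, for example [log], apply only to that module.

(For more configuration options, see: https://github.com/pingcap/pd/blob/master/conf/config.toml)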

peer-urls = "http://192.168.1.11:5201"
data-dir = "/data/tidb_5101"
name = "192.168.1.11:5101"
client-urls = "http://192.168.1.11:5101"
initial-cluster = "192.168.1.11:5101=http://192.168.1.11:5201,192.168.1.12:5101=http://192.168.1.12:5201,192.168.1.13:5101=http://192.168.1.13:5201"

[log]
level = "info"
format = "text"

[log.file]
max-backups = 7
filename = "/data/tidblog_5101/run.log"
log-rotate = true

[metric]
interval = "15s"

[schedule]
max-merge-region-size = 20
max-merge-region-keys = 200000
split-merge-interval = "1h"
max-snapshot-count = 3
max-pending-peer-count = 16
max-store-down-time = "30m"
leader-schedule-limit = 4
region-schedule-limit = 64
replica-schedule-limit = 64
merge-schedule-limit = 8
hot-region-schedule-limit = 4

[replication]
max-replicas = 3
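
The initial-cluster value is long and easy to mistype, yet it follows a regular pattern: each entry is name=peer-urls, the name is host:client-port, and the peer port is the client port plus 100. The sketch below builds the string from a host list under exactly those conventions; the function name initial_cluster is hypothetical.

```shell
#!/bin/sh
# initial_cluster CLIENT_PORT HOST...
# Prints the initial-cluster value for the given hosts, using
# HOST:CLIENT_PORT as the node name and CLIENT_PORT + 100 as the peer port.
initial_cluster() {
    client_port=$1
    shift
    peer_port=$((client_port + 100))
    result=""
    for host in "$@"; do
        entry="${host}:${client_port}=http://${host}:${peer_port}"
        if [ -z "$result" ]; then
            result=$entry
        else
            result="${result},${entry}"
        fi
    done
    echo "$result"
}

initial_cluster 5101 192.168.1.11 192.168.1.12 192.168.1.13
```

The printed value must be identical in the configuration file of all three PD nodes.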

Start the PD node:

/usr/local/tidb_5101/bin/pd-server --config=/data/tidb_5101/tidb.conf &

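(Install the other two PD nodes the same way, changing the IP address in name, client-urls, and peer-urls to that of each machine.)

Verification:

Connect to one node of the PD cluster with pd-ctl and check that the cluster is healthy: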

/usr/local/tidb_5101/bin/pd-ctl -u http://192.168.1.11:5101 -i

>> health

>> member

【Installing the TiKV Nodes】

Configuration file (main parameters):

vim /data/tidb_5301/tidb.conf

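addr = "192.168.1.11:5301": the address TiKV listens on for client connections, that is, the address used to communicate with pd-server and tidb-server.

status-addr = "192.168.1.11:5401": the address used to collect the node's performance metrics and status. The port here is the addr port plus 100.

endpoints = ["192.168.1.11:5101", "192.168.1.12:5101", "192.168.1.13:5101"]: the PD nodes that manage the cluster. TiKV needs them in order to join the cluster.

(For more parameters, see: https://github.com/tikv/tikv/blob/master/etc/config-template.toml)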

log-level = "warning"
log-file = "/data/tidblog_5301/run.log"
log-rotation-timespan = "24h"

[server]
addr = "192.168.1.11:5301"
status-addr = "192.168.1.11:5401"
grpc-concurrency = 6

[readpool.storage]
normal-concurrency = 10

[storage]
data-dir = "/data/tidb_5301"
scheduler-concurrency = 1024000
scheduler-worker-pool-size = 4

[pd]
endpoints = ["192.168.1.11:5101", "192.168.1.12:5101", "192.168.1.13:5101"]

[metric]
interval = "5s"

[raftstore]
sync-log = false
store-pool-size = 3
apply-pool-size = 3

[rocksdb]
max-background-jobs = 2
max-open-files = 409600
max-manifest-file-size = "20MB"
compaction-readahead-size = "20MB"

[rocksdb.defaultcf]
block-size = "64KB"
compression-per-level = ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]
write-buffer-size = "128MB"
max-write-buffer-number = 5
max-bytes-for-level-base = "512MB"
target-file-size-base = "8MB"

[rocksdb.writecf]
compression-per-level = ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]
write-buffer-size = "128MB"
max-write-buffer-number = 5
min-write-buffer-number-to-merge = 1
max-bytes-for-level-base = "512MB"
target-file-size-base = "8MB"

[raftdb]
max-open-files = 409600
compaction-readahead-size = "20MB"

[raftdb.defaultcf]
compression-per-level = ["no", "no", "lz4", "lz4", "lz4", "zstd", "zstd"]
write-buffer-size = "128MB"
max-write-buffer-number = 5
min-write-buffer-number-to-merge = 1
max-bytes-for-level-base = "512MB"
target-file-size-base = "32MB"

[rocksdb.lockcf]

[storage.block-cache]
capacity = "4GB"

[security]

[import]
import-dir = "/data/tidb_5301/import"
num-threads = 2
stream-channel-window = 128
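
Across the three TiKV machines, only the host part of addr and status-addr differs. As an illustration of the port convention (status port = addr port + 100), the following sketch prints the two lines that need to change per host; the function name tikv_addrs is hypothetical.

```shell
#!/bin/sh
# tikv_addrs HOST PORT
# Prints the [server] addr and status-addr lines for one TiKV node,
# with the status port set to PORT + 100.
tikv_addrs() {
    host=$1
    port=$2
    echo "addr = \"${host}:${port}\""
    echo "status-addr = \"${host}:$((port + 100))\""
}

for host in 192.168.1.11 192.168.1.12 192.168.1.13; do
    echo "# ${host}"
    tikv_addrs "$host" 5301
done
```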

Start the TiKV node:

/usr/local/tidb_5301/bin/tikv-server --config=/data/tidb_5301/tidb.conf &

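(Install the other two TiKV nodes the same way, changing the IP address in addr and status-addr to that of each machine.)

Verification:

Log in to a PD node and check the status of the storage nodes in the cluster: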

/usr/local/tidb_5101/bin/pd-ctl -u http://192.168.1.11:5101 -i

>> store
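
With three TiKV nodes, the store output should list three stores in the Up state. The sketch below counts them from JSON read on standard input. It assumes each store entry carries a "state_name" field, as in the store output, and that the same JSON is available from PD's HTTP API under /pd/api/v1/stores; the function name count_up_stores is hypothetical.

```shell
#!/bin/sh
# count_up_stores
# Reads store JSON on stdin and prints how many stores report
# "state_name": "Up" (assumed field name, see the note above).
count_up_stores() {
    grep -o '"state_name": *"Up"' | wc -l | tr -d ' '
}

# Example usage against a running PD node (not executed here):
#   curl -s http://192.168.1.11:5101/pd/api/v1/stores | count_up_stores
```

A result lower than 3 suggests that a TiKV node failed to start or could not reach PD.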

【Installing the TiDB Server Nodes】

Configuration file (main parameters):

vim /data/tidb_5501/tidb.conf

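port = 5501: the port that listens for client connections.

status-port = 5601: the port used to collect the node's performance metrics and status. It is the client port plus 100.

path = "192.168.1.11:5101,192.168.1.12:5101,192.168.1.13:5101": the nodes of the PD cluster. TiDB server can join the TiDB cluster only if the PD cluster is specified.

(For more parameters, see: https://github.com/pingcap/tidb/blob/master/config/config.toml.example)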

compatible-kill-query = false
lower-case-table-names = 2
enable-streaming = false
oom-action = "log"
token-limit = 1000
split-table = true
lease = "45s"
run-ddl = true
path = "192.168.1.11:5101,192.168.1.12:5101,192.168.1.13:5101"
store = "tikv"
port = 5501
host = "0.0.0.0"

[log]
level = "warn"
format = "text"
disable-timestamp = false
slow-query-file = "/data/tidblog_5501/slow.log"
slow-threshold = 300
expensive-threshold = 10000
query-log-max-len = 2048

[log.file]
filename = "/data/tidblog_5501/run.log"
max-size = 300
max-days = 0
max-backups = 0
log-rotate = true

[security]

[status]
report-status = true
status-port = 5601
metrics-interval = 15

[performance]
max-procs = 0
stmt-count-limit = 5000
tcp-keep-alive = true
cross-join = true
stats-lease = "3s"
run-auto-analyze = true
feedback-probability = 0.05
query-feedback-limit = 0
pseudo-estimate-ratio = 0.8
force-priority = "NO_PRIORITY"

[proxy-protocol]
header-timeout = 5

[opentracing]
enable = false
rpc-metrics = false

[opentracing.reporter]
queue-size = 0
buffer-flush-interval = 0
log-spans = false

[opentracing.sampler]
type = "const"
param = 1.0
max-operations = 0
sampling-refresh-interval = 0

[tikv-client]
grpc-connection-count = 16
commit-timeout = "41s"
grpc-keepalive-time = 10
grpc-keepalive-timeout = 3
max-batch-wait-time = 2000000

[txn-local-latches]
enabled = false
capacity = 1024000

[binlog]
enable = false
ignore-error = false
write-timeout = "15s"

Start the TiDB server node:

/usr/local/tidb_5501/bin/tidb-server --config=/data/tidb_5501/tidb.conf &

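(Install the other two TiDB server nodes the same way.)

Verification:

Log in with the mysql client. On a freshly initialized TiDB cluster, the root account has an empty password by default.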

/usr/local/tidb_5501/bin/mysql -h 127.0.0.1 -uroot -P5501

mysql> show databases;

The installation is now complete.