DB最近在做迁移的事情,逻辑迁移过程中发现将数据从A集群迁移到B集群时,偶发B集群中slave实例异常crash的问题。

整个问题的处理思路

查看故障表象

故障复现

直接问题分析

真正的问题定位

问题修复

故障表象

错误日志中记录了crash时故障线程函数调用信息,如下(下面截图):

As this is a crash and something is definitely wrong, the information

collection process might fail.

key_buffer_size=33554432

read_buffer_size=16777216

max_used_connections=3

max_threads=5000

thread_count=6

connection_count=3

It is possible that mysqld could use up to

key_buffer_size + (read_buffer_size + sort_buffer_size)*max_threads = 122979486 K bytes of memory

Hope that's ok; if not, decrease some variables in the equation.

Thread pointer: 0x7ff6b414d9a0

Attempting backtrace. You can use the following information to find out

where mysqld died. If you see no messages after this, something went

terribly wrong...

stack_bottom = 7ff7721bae28 thread_stack 0x40000

/data/mysql/base/5.7.18/bin/mysqld(my_print_stacktrace+0x35)[0xf23815]

/data/mysql/base/5.7.18/bin/mysqld(handle_fatal_signal+0x4a4)[0x79ea64]

/lib64/libpthread.so.0[0x3ca9c0f7e0]

/data/mysql/base/5.7.18/bin/mysqld[0x1313a8a]

/data/mysql/base/5.7.18/bin/mysqld(_Z23well_formed_copy_ncharsPK15charset_info_stPcmS1_PKcmmPS4_S5_S5_+0xba)[0xdc7b1a]

/data/mysql/base/5.7.18/bin/mysqld(_Z29field_well_formed_copy_ncharsPK15charset_info_stPcmS1_PKcmmPS4_S5_S5_+0x54)[0x7c5f34]

/data/mysql/base/5.7.18/bin/mysqld(_ZN10Field_blob14store_internalEPKcmPK15charset_info_st+0x19d)[0x7cdf0d]

/data/mysql/base/5.7.18/bin/mysqld(_ZN17Fill_process_listclEP3THD+0x46b)[0xd5487b]

/data/mysql/base/5.7.18/bin/mysqld(_ZN18Global_THD_manager19do_for_all_thd_copyEP11Do_THD_Impl+0x25d)[0x79d50d]

/data/mysql/base/5.7.18/bin/mysqld(_Z23fill_schema_processlistP3THDP10TABLE_LISTP4Item+0x43)[0xd3e583]

/data/mysql/base/5.7.18/bin/mysqld[0xd3d1a8]

/data/mysql/base/5.7.18/bin/mysqld(_Z24get_schema_tables_resultP4JOIN23enum_schema_table_state+0x1cc)[0xd3d42c]

/data/mysql/base/5.7.18/bin/mysqld(_ZN4JOIN14prepare_resultEv+0x6d)[0xd32bad]

/data/mysql/base/5.7.18/bin/mysqld(_ZN4JOIN4execEv+0xc0)[0xcc9fe0]

/data/mysql/base/5.7.18/bin/mysqld(_Z12handle_queryP3THDP3LEXP12Query_resultyy+0x250)[0xd33f40]

/data/mysql/base/5.7.18/bin/mysqld[0xcf50e3]

/data/mysql/base/5.7.18/bin/mysqld(_Z21mysql_execute_commandP3THDb+0x3571)[0xcf8921]

/data/mysql/base/5.7.18/bin/mysqld(_Z11mysql_parseP3THDP12Parser_state+0x3a5)[0xcfa725]

/data/mysql/base/5.7.18/bin/mysqld(_Z16dispatch_commandP3THDPK8COM_DATA19enum_server_command+0x11b8)[0xcfb948]

/data/mysql/base/5.7.18/bin/mysqld(_Z10do_commandP3THD+0x194)[0xcfc834]

/data/mysql/base/5.7.18/bin/mysqld(handle_connection+0x29c)[0xdc9b9c]

/data/mysql/base/5.7.18/bin/mysqld(pfs_spawn_thread+0x174)[0xf46844]

/lib64/libpthread.so.0[0x3ca9c07aa1]

/lib64/libc.so.6(clone+0x6d)[0x3ca98e8aad]

截图:

根据操作人员的反馈,只有slave实例可能会出现这个问题,master没有出现过。从日志内容可以看出,触发crash的线程不是IO或者SQL线程,而是外部的某个请求,因为复制线程有相对特殊的函数调用。mysql通过信号捕获机制让它在出现某些异常时,可以将相应的触发crash的线程栈记录到错误日志中,实现机制可以阅读函数void my_init_signals()

以及extern "C" void handle_fatal_signal(int sig)。

由于线上没有开启mysqld的core dump,只通过仅有的错误日志很难定位到问题的根本原因,所以需要尽可能在线下环境进行稳定复现。并且就算定位到问题,反馈给官方以及提交patch,也同样要提供可稳定复现的场景,而不能指着线上这堆日志说事儿。

故障复现

在上一篇文章 before_dml hook 引发的drop table阻塞事件,就提到过,任何不一致的条件都有可能无法准确的复现问题,所以这次我直接拷贝了生产环境的配置文件,搭建和生产相同的复制环境,并且使用了出现问题点的生产数据,进行数据导入。但一开始并没有成功复现问题(其实也是我期待的结果),印证了一开始的猜想:外部的某个查询是触发bug的导火索。所以只在复制环境中进行相同的数据导入是不会触发这个问题的。

通过错误日志猜测崩溃的线程当时执行的SQL语句:

/data/mysql/base/5.7.18/bin/mysqld(_ZN17Fill_process_listclEP3THD+0x46b)[0xd5487b]

/data/mysql/base/5.7.18/bin/mysqld(_ZN18Global_THD_manager19do_for_all_thd_copyEP11Do_THD_Impl+0x25d)[0x79d50d]

/data/mysql/base/5.7.18/bin/mysqld(_Z23fill_schema_processlistP3THDP10TABLE_LISTP4Item+0x43)[0xd3e583]

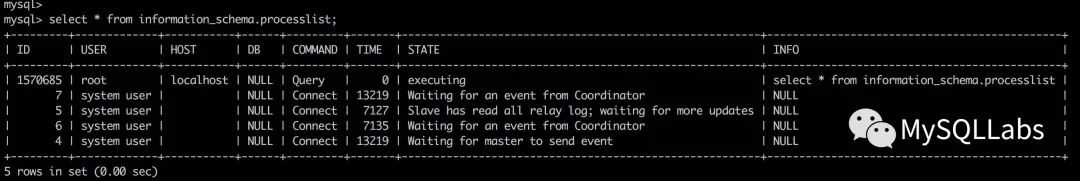

这几层栈帧基本可以确定,出问题的线程正在访问information_schema.processlist表。根据生产环境的实际情况,slave上发生这种类似查询大概率是监控程序发起的。明确这点后,开始在master数据导入的同时,slave上循环执行监控SQL,具体的SQL内容不太方便展示,可以简单认为是

select * from information_schema.processlist;

至此问题相对顺利的可以稳定复现,我们也得到了初步的原因,或者是引发crash的导火索:数据导入以及监控SQL。

根因挖掘

一旦问题可以稳定复现,就可以方便的在测试环境拿到core dump文件,有了core dump后,分析问题也方便了很多,可以看到崩溃点堆栈信息和错误日志是吻合的:

#0 0x0000003ca9889cc8 in _wordcopy_fwd_aligned () from lib64/libc.so.6

#1 0x0000003ca9883b22 in memmove () from lib64/libc.so.6

#2 0x0000000000dc7b2d in well_formed_copy_nchars (to_cs=0x1e5ac80, to=0x7ff6790f29e0 "I"...,

to_length=196605, from_cs=0x1dec460, from=0x7ff668847550 <Address 0x7ff668847550 out of bounds>,

from_length=65535, nchars=65535, well_formed_error_pos=0x7ff76e1f8f88,

cannot_convert_error_pos=0x7ff76e1f8f80, from_end_pos=0x7ff76e1f8f78)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql-common/sql_string.cc:1049

#3 0x00000000007c5f34 in field_well_formed_copy_nchars (to_cs=0x1e5ac80, to=<value optimized out>,

to_length=<value optimized out>, from_cs=0x1dec460,

from=0x7ff668847550 <Address 0x7ff668847550 out of bounds>, from_length=65535, nchars=65535,

well_formed_error_pos=0x7ff76e1f8f88, cannot_convert_error_pos=0x7ff76e1f8f80,

from_end_pos=0x7ff76e1f8f78)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/field.cc:1294

#4 0x00000000007cdf0d in Field_blob::store_internal (this=0x7ff6780150b0,

from=0x7ff668847550 <Address 0x7ff668847550 out of bounds>, length=65535, cs=0x1dec460)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/field.cc:8240

#5 0x0000000000d5487b in Fill_process_list::operator() (this=0x7ff76e1fa0d0,

inspect_thd=0x7ff6900008c0)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_show.cc:2507

#6 0x000000000079d50d in operator() (this=0x1ffcea0, func=0x7ff76e1fa0d0)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/mysqld_thd_manager.cc:46

#7 for_each<THD**, Do_THD> (this=0x1ffcea0, func=0x7ff76e1fa0d0)

at usr/lib/gcc/x86_64-redhat-linux6E/4.4.4/../../../../include/c++/4.4.4/bits/stl_algo.h:4200

#8 Global_THD_manager::do_for_all_thd_copy (this=0x1ffcea0, func=0x7ff76e1fa0d0)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/mysqld_thd_manager.cc:262

#9 0x0000000000d3e583 in fill_schema_processlist (thd=<value optimized out>,

tables=<value optimized out>, cond=<value optimized out>)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_show.cc:2531

#10 0x0000000000d3d1a8 in do_fill_table (thd=<value optimized out>, table_list=0x7ff6780090d8,

qep_tab=0x7ff6780f0170)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_show.cc:8126

#11 0x0000000000d3d42c in get_schema_tables_result (join=0x7ff6780eb0c8,

executed_place=PROCESSED_BY_JOIN_EXEC)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_show.cc:8256

#12 0x0000000000d32bad in JOIN::prepare_result (this=0x7ff6780eb0c8)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_select.cc:908

#13 0x0000000000cc9fe0 in JOIN::exec (this=0x7ff6780eb0c8)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_executor.cc:124

#14 0x0000000000d33f40 in handle_query (thd=0x7ff67802e950, lex=0x7ff678030a98,

result=0x7ff6780eab28, added_options=1, removed_options=0)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_select.cc:184

#15 0x0000000000cf50e3 in execute_sqlcom_select (thd=0x7ff67802e950, all_tables=<value optimized out>)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_parse.cc:5196

#16 0x0000000000cf8921 in mysql_execute_command (thd=0x7ff67802e950, first_level=true)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_parse.cc:2795

#17 0x0000000000cfa725 in mysql_parse (thd=0x7ff67802e950, parser_state=<value optimized out>)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_parse.cc:5612

#18 0x0000000000cfb948 in dispatch_command (thd=0x7ff67802e950, com_data=0x7ff76e1fbd60,

command=COM_QUERY)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/sql_parse.cc:1461

#19 0x0000000000cfc834 in do_command (thd=0x7ff67802e950)

这里开始要结合源码来进行问题的分析,先理下崩溃线程的函数调用过程:

handle_query(THD*, LEX*, Query_result*, unsigned long long, unsigned long long)

JOIN::exec()

JOIN::prepare_result()

get_schema_tables_result(JOIN*, enum_schema_table_state)

do_fill_table(THD*, TABLE_LIST*, QEP_TAB*)

fill_schema_processlist(THD*, TABLE_LIST*, Item*)//填充is库processlist表入口函数

Global_THD_manager::do_for_all_thd_copy(Do_THD_Impl*) //对每个线程循环调用

Do_THD std::__1::for_each<THD**, Do_THD>(THD**, THD**, Do_THD)

Do_THD::operator()(THD*)

Fill_process_list::operator()(THD*)

Field_blob::store(char const*, unsigned long, charset_info_st const*)//填充INFO(longtext)字段信息

Field_blob::store_internal(char const*, unsigned long, charset_info_st const*)

field_well_formed_copy_nchars(charset_info_st const*, char*, unsigned long, charset_info_st const*, char const*, unsigned long, unsigned long, char const**, char const**, char const**)

well_formed_copy_nchars(charset_info_st const*, char*, unsigned long, charset_info_st const*, char const*, unsigned long, unsigned long, char const**, char const**, char const**)

崩溃时是在获取目标线程的info信息,比如这样:

core dump显示函数well_formed_copy_nchars在执行到memmove时,出现了对于from内存地址的非法访问造成crash,well_formed_copy_nchars部分代码如下:

set_if_smaller(from_length, to_length);

res= to_cs->cset->well_formed_len(to_cs, from, from + from_length,

nchars, &well_formed_error);

memmove(to, from, res);

解这样的问题,需要从内向外一层层剥离,了解from指向的内存是谁分配,后面对它做了什么。造成内存非法访问一般会有两种情况,一是越界,二是内存被释放。但无论是这两种情况的任何一种,都要先定位到from指针的初始化是在哪儿(事实是对这部分代码不是很熟悉,不然早就知道了)。这个过程需要详细的阅读相关代码,这里不做展开。直接给出from指针初始化的地方,在函数Fill_process_list::operator()(THD*)

,如下 :

/* INFO */

mysql_mutex_lock(&inspect_thd->LOCK_thd_query);

{

const char *query_str= inspect_thd->query().str; //获取目标线程当前执行的SQL

size_t query_length= inspect_thd->query().length;

#ifndef EMBEDDED_LIBRARY

String buf;

if (inspect_thd->is_a_srv_session())

{

buf.append(query_length? "PLUGIN: ":"PLUGIN");

if (query_length)

buf.append(query_str, query_length);

query_str= buf.c_ptr();

query_length= buf.length();

}

/* No else. We need fall-through */

#endif

if (query_str)

{

const size_t width= min<size_t>(PROCESS_LIST_INFO_WIDTH, query_length);

table->field[7]->store(query_str, width, inspect_thd->charset());

table->field[7]->set_notnull();

}

}

mysql_mutex_unlock(&inspect_thd->LOCK_thd_query);

这段代码展示了函数well_formed_copy_nchars

中的实参from指向的是THD中m_query_string的str。并且赋值以后的相关操作都是在目标线程的LOCK_thd_query下进行,讲道理,目标线程应该在写入m_query_string时也加锁互斥。所以不应该出现锁保护下访问,反而中途被其他线程释放。

互斥锁 LOCK_thd_query

/**

Protects THD::m_query_string. No other mutexes should be locked

while having this mutex locked.

*/

mysql_mutex_t LOCK_thd_query;

THD内部更新m_query_string以及监控线程在获取这部分信息时,都是在锁保护下进行的。举个写m_query_string的例子

void THD::set_query(const LEX_CSTRING& query_arg)

{

DBUG_ASSERT(this == current_thd);

mysql_mutex_lock(&LOCK_thd_query);

m_query_string= query_arg;

mysql_mutex_unlock(&LOCK_thd_query);

#ifdef HAVE_PSI_THREAD_INTERFACE

PSI_THREAD_CALL(set_thread_info)(query_arg.str, query_arg.length);

#endif

}

读取时,参照上面提到过的函数Fill_process_list::operator()(THD*),同样加锁保护。

下一步要定位的就是,当前监控线程正在处理的目标线程是谁,以及目标线程当前所做的操作,是否有可能在不加锁的情况下释放了对应的内存。这部分信息也可以通过core dump文件拿到。通过crash线程拿到正在处理的目标线程id号,或者直接看是不是复制线程,操作:

(gdb) p inspect_thd->slave_thread$6 = true

寻找监控线程正在处理的目标线程(运气比较好,slave上的线程不多),并且切换过去,查看目标线程在mysqld crash时的堆栈信息

(gdb) bt

#0 0x0000003ca98e54b7 in munmap () from lib64/libc.so.6

#1 0x0000003ca9878aa7 in _int_free () from lib64/libc.so.6

#2 0x0000000000eb7a0c in Rows_query_log_event::~Rows_query_log_event() ()

#3 0x0000000000ef6b17 in Relay_log_info::cleanup_context (this=0x7ff68c0233f0, thd=0x7ff6900008c0,

error=false)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/rpl_rli.cc:1720

#4 0x0000000000eac990 in rows_event_stmt_cleanup (this=0x7ff68ddf02a0, rli=0x7ff68c0233f0)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/log_event.cc:11204

#5 Rows_log_event::do_apply_event (this=0x7ff68ddf02a0, rli=0x7ff68c0233f0)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/log_event.cc:11082

#6 0x0000000000f03565 in slave_worker_exec_job_group (worker=0x7ff68c0233f0, rli=0x387ddb50)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/rpl_rli_pdb.cc:2667

#7 0x0000000000ef1b73 in handle_slave_worker (arg=0x7ff68c0233f0)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/sql/rpl_slave.cc:6132

#8 0x0000000000f46844 in pfs_spawn_thread (arg=0x7ff68c027370)

at export/home/pb2/build/sb_0-22759043-1489826356.96/mysql-5.7.18/storage/perfschema/pfs.cc:2188

#9 0x0000003ca9c07aa1 in start_thread () from lib64/libpthread.so.0

#10 0x0000003ca98e8aad in clone () from lib64/libc.so.6

这部分代码看起来亲切了许多,Rows_query_log_event其实就是在开启binlog_rows_query_log_events后,ROW格式的binlog同时也会记录原始SQL,并且sql线程回放到对应的event时,可以通过show processlist可以看到当前正在执行的SQL明文。

其实到这里,问题开始清晰起来,监控线程正在处理的目标线程是SQL回放线程,而SQL回放线程中的m_query_string的内存在释放时,可能不需要加锁处理。

这个得从SQL线程分配m_query_string说起: SQL线程处理Rows_query_log_event时,通过set_query函数初始化m_query_string,m_query_string中的str指针指向m_rows_query

#if defined(MYSQL_SERVER) && defined(HAVE_REPLICATION)

int Rows_query_log_event::do_apply_event(Relay_log_info const *rli)

{

DBUG_ENTER("Rows_query_log_event::do_apply_event");

DBUG_ASSERT(rli->info_thd == thd);

/* Set query for writing Rows_query log event into binlog later.*/

thd->set_query(m_rows_query, strlen(m_rows_query)); m_query_string指向m_rows_query

DBUG_ASSERT(rli->rows_query_ev == NULL);

const_cast<Relay_log_info*>(rli)->rows_query_ev= this;

/* Tell worker not to free the event */

worker= NULL;

DBUG_RETURN(0);

}

#endif

而m_rows_query分配则在构造Rows_query_log_event时,代码如下,

class Rows_query_log_event : public Ignorable_log_event,

public binary_log::Rows_query_event{

public:

#ifndef MYSQL_CLIENT

Rows_query_log_event(THD *thd_arg, const char * query, size_t query_len)

: Ignorable_log_event(thd_arg)

{

DBUG_ENTER("Rows_query_log_event::Rows_query_log_event");

common_header->type_code= binary_log::ROWS_QUERY_LOG_EVENT;

if (!(m_rows_query= (char*) my_malloc(key_memory_Rows_query_log_event_rows_query,

query_len + 1, MYF(MY_WME))))

return;

my_snprintf(m_rows_query, query_len + 1, "%s", query);

DBUG_PRINT("enter", ("%s", m_rows_query));

DBUG_VOID_RETURN;

}

#endif

这跟其他的外部线程进行m_query_string的构造完全不同,参照函数alloc_query,通过mem_root进行内存分配和释放。

char *query= static_cast<char*>(thd->alloc(packet_length + 1));if (!query)return TRUE;memcpy(query, packet, packet_length);query[packet_length]= '\0';thd->set_query(query, packet_length);

再来看SQL线程释放 m_rows_query的过程(参照coredump):SQL线程在回放完成后,通过Rows_query_log_event的析构函数,释放 m_rows_query分配的内存,这个过程不在LOCK_thd_query mutex锁保护下进行, 释放的逻辑在函数void Relay_log_info::cleanup_context(THD *thd, bool error)

中,

if (rows_query_ev)

{

delete rows_query_ev;

rows_query_ev= NULL;

info_thd->reset_query();

}

delete Rows_query_log_event对象rows_query_ev,调用析构函数,释放m_rows_query内存,如下:

virtual ~Rows_query_log_event()

{

if (m_rows_query)

my_free(m_rows_query);

m_rows_query= NULL;

}

所以也就造成了监控线程对于非法内存的访问(from指针初始化时指向合法的内存,使用过程中被SQL线程通过另外的指针释放掉了)。到这里问题基本上查清楚了,下面来看怎么修复。

bug修复/解决方案

方案1 在函数 void Relay_log_info::cleanup_context(THD *thd, bool error)中,执行

delete rows_query_ev

在LOCK_thd_query锁保护下进行方案2 调整delete rows_query_ev和info_thd->reset_query()的顺序,如下 :现有代码

if (error)

{

trans_rollback_stmt(thd); // if a "statement transaction"

trans_rollback(thd); // if a "real transaction"

}

if (rows_query_ev)

{

delete rows_query_ev;

rows_query_ev= NULL;

info_thd->reset_query();

}

修改为

if (error)

{

trans_rollback_stmt(thd); // if a "statement transaction"

trans_rollback(thd); // if a "real transaction"

}

if (rows_query_ev)

{

info_thd->reset_query();

delete rows_query_ev;

rows_query_ev= NULL;

}

方案1固然可行,但是可能会加重复加锁和释放锁,当然也可以加一次,需要侵入THD代码。方案2通过调整reset_query和析构rows_query_ev的顺序,来避免其他线程在访问THD中的m_query_string时,进行内存释放。

本来想给官方提个bug,但当我把代码切到5.7.28,发现已经修复了,往前看了几个版本,这个bug是在5.7.21中被修复,后续又进行了相应的优化处理。官方采用了第二种方案,并且添加了注释。

if (error)

{

trans_rollback_stmt(thd); // if a "statement transaction"

trans_rollback(thd); // if a "real transaction"

}

if (rows_query_ev)

{

/*

In order to avoid invalid memory access, THD::reset_query() should be

called before deleting the rows_query event.

*/

info_thd->reset_query();

delete rows_query_ev;

rows_query_ev= NULL;

DBUG_EXECUTE_IF("after_deleting_the_rows_query_ev",

{

const char action[]="now SIGNAL deleted_rows_query_ev WAIT_FOR go_ahead";

DBUG_ASSERT(!debug_sync_set_action(info_thd,

STRING_WITH_LEN(action)));

};);

}

但是大概看了下 5.7.21的Release Notes,也没看到和这个bug修复相关的介绍,不然这种crash p0级别的bug在我们进行bug追踪的过程中应该早就注意到了。对于生产环境而言,不可能立刻进行版本的升级来避免此问题的发生,所以还需要给出一个临时解决方案: 进行逻辑数据迁移导入到目标集群前,把目标集群的master实例中的binlog_rows_query_log_events设置为off即可。

对于大多数人可能不会遇到这个bug,因为出现的条件相对还是比较苛刻的。

好了,结束。