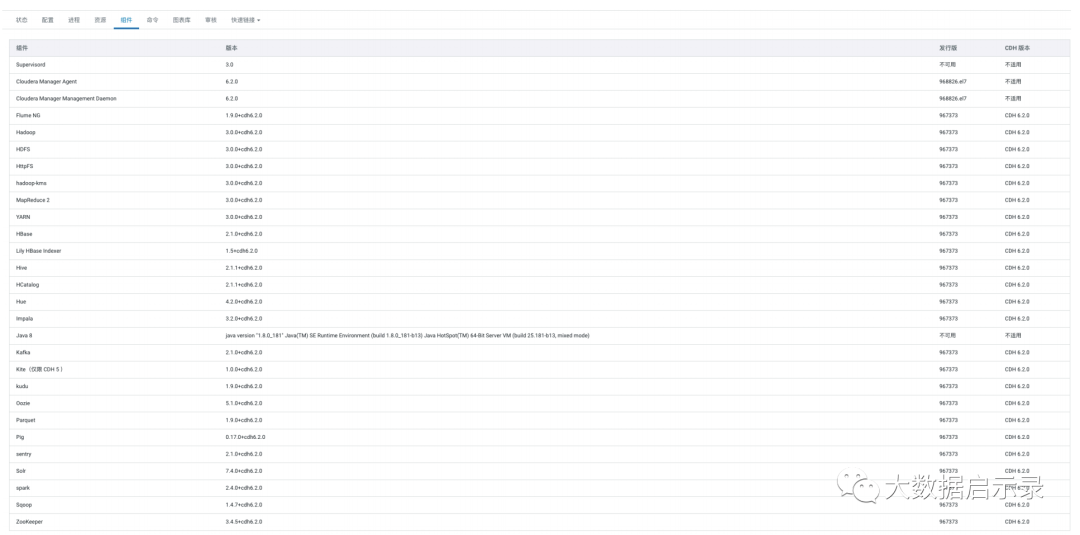

Versions

1: CDH component versions

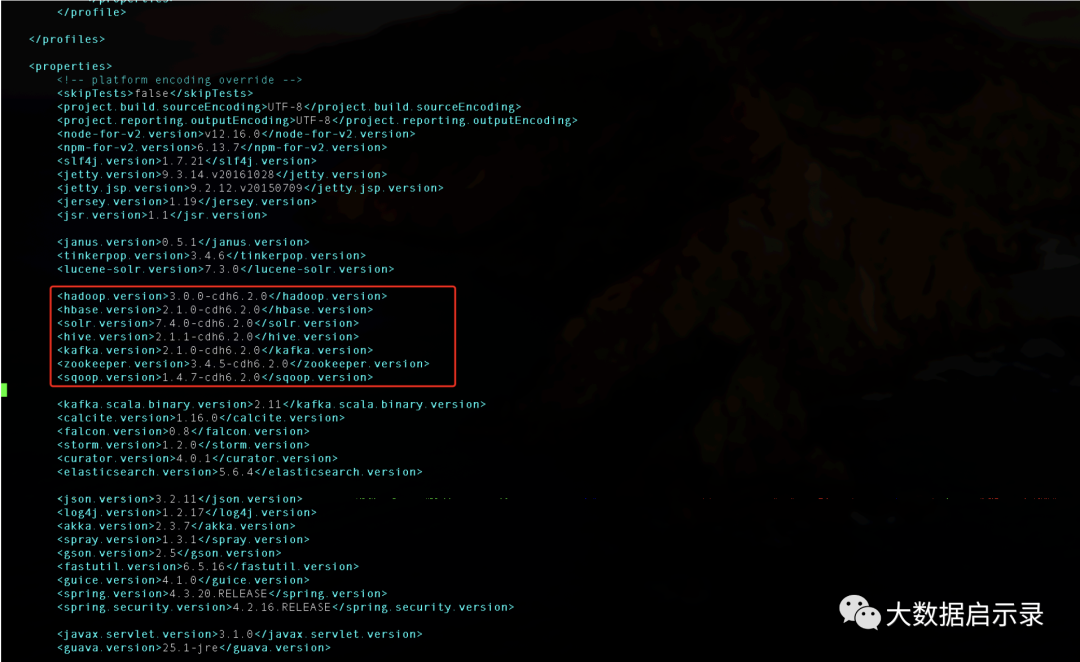

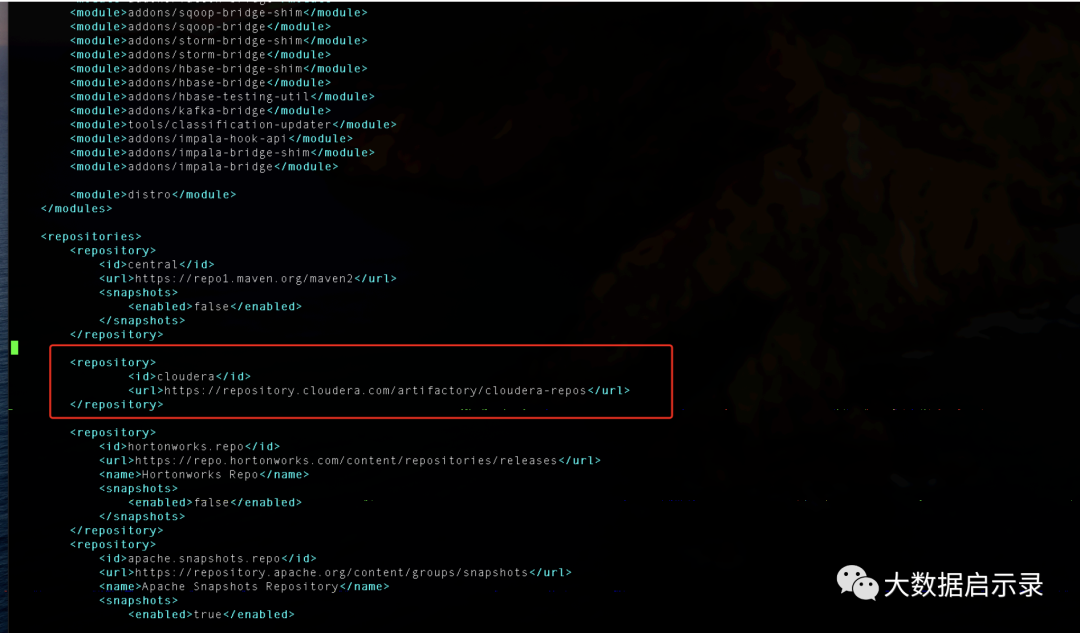

2: Configuration

    # Download the source
    wget https://dlcdn.apache.org/atlas/2.1.0/apache-atlas-2.1.0-sources.tar.gz --no-check-certificate

    # pom.xml: set the component versions
    <hadoop.version>3.0.0-cdh6.2.0</hadoop.version>
    <hbase.version>2.1.0-cdh6.2.0</hbase.version>
    <solr.version>7.4.0-cdh6.2.0</solr.version>
    <hive.version>2.1.1-cdh6.2.0</hive.version>
    <kafka.version>2.1.0-cdh6.2.0</kafka.version>
    <zookeeper.version>3.4.5-cdh6.2.0</zookeeper.version>
    <sqoop.version>1.4.7-cdh6.2.0</sqoop.version>

    # pom.xml: add the Cloudera repository
    <repository>
        <id>cloudera</id>
        <url>https://repository.cloudera.com/artifactory/cloudera-repos</url>
    </repository>

Note: the versions you set in pom.xml must match the component versions actually running on your CDH cluster.

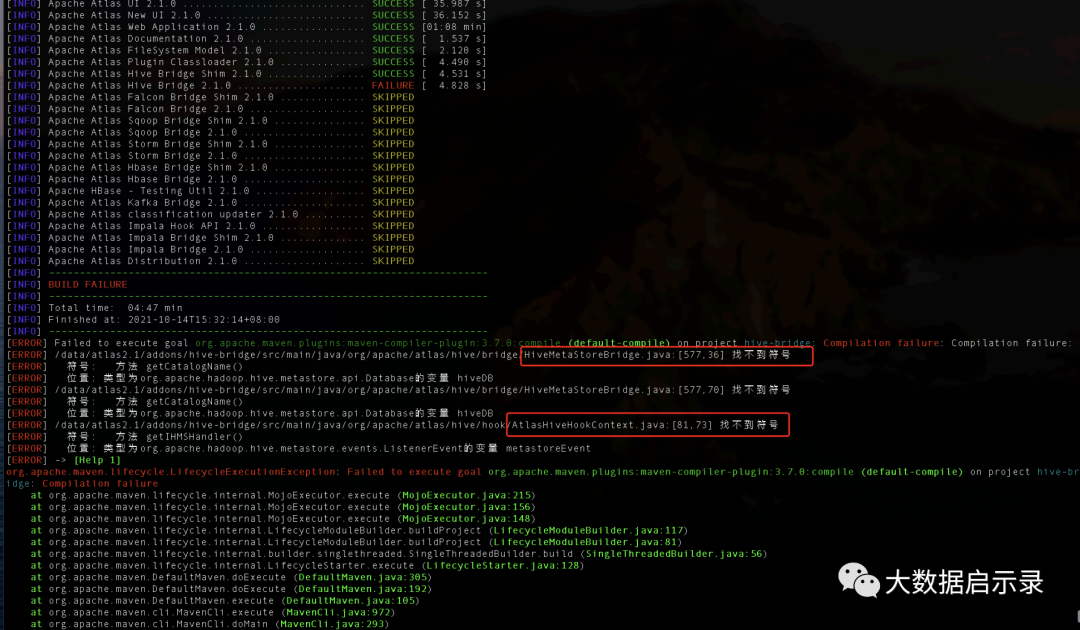

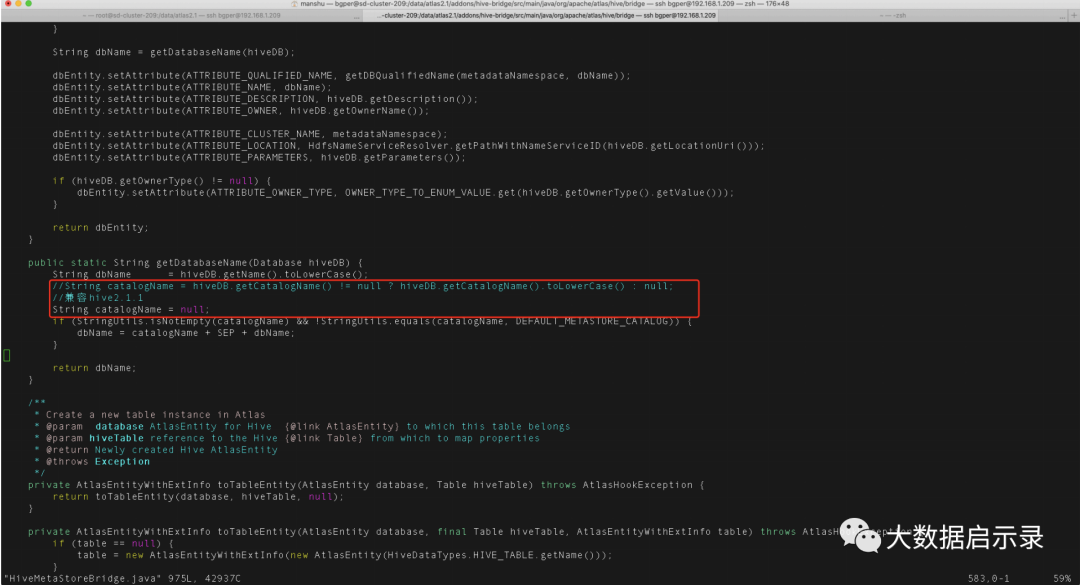

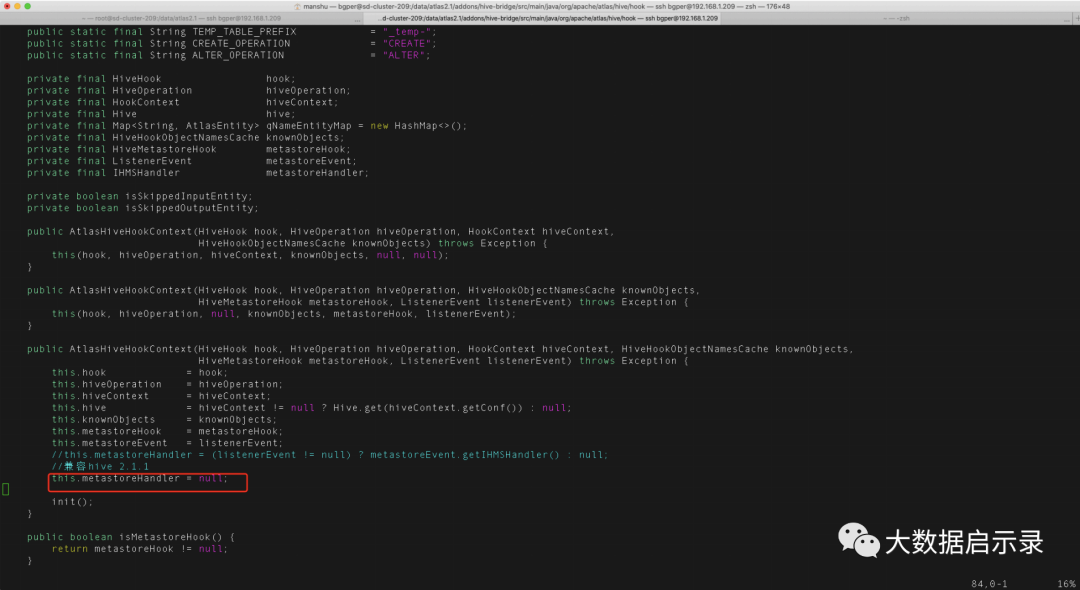
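Editing the seven version properties by hand is easy to get wrong when rebuilding. The sketch below is one way to script it, assuming bash and a POSIX sed; patch_pom_versions is a hypothetical helper, and it only rewrites properties that already exist in pom.xml (the Cloudera repository block still has to be added by hand). It keeps a one-time backup as pom.xml.orig.

```shell
#!/usr/bin/env bash
# patch_pom_versions POM_FILE
# Hypothetical helper: rewrites the <*.version> properties listed above to
# the CDH 6.2.0 builds. Keeps a one-time backup at POM_FILE.orig.
patch_pom_versions() {
  local pom="$1" pair name value
  local args=()
  for pair in \
    hadoop.version=3.0.0-cdh6.2.0 \
    hbase.version=2.1.0-cdh6.2.0 \
    solr.version=7.4.0-cdh6.2.0 \
    hive.version=2.1.1-cdh6.2.0 \
    kafka.version=2.1.0-cdh6.2.0 \
    zookeeper.version=3.4.5-cdh6.2.0 \
    sqoop.version=1.4.7-cdh6.2.0; do
    name="${pair%%=*}"
    value="${pair#*=}"
    # "[.]" makes the dot in the property name match only a literal dot.
    args+=(-e "s|<${name//./[.]}>[^<]*</${name//./[.]}>|<${name}>${value}</${name}>|")
  done
  [ -f "$pom.orig" ] || cp "$pom" "$pom.orig"
  sed "${args[@]}" "$pom" > "$pom.tmp" && mv "$pom.tmp" "$pom"
}
```

Run it from the extracted source tree, e.g. `patch_pom_versions apache-atlas-sources-2.1.0/pom.xml`, then diff the result against pom.xml.orig before building.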

3: Patch the source for compatibility with Hive 2.1.1-cdh6.2.0

Note: without these compatibility changes, the build will fail.

In the sub-module apache-atlas-sources-2.1.0\addons\hive-bridge, edit org/apache/atlas/hive/bridge/HiveMetaStoreBridge.java and change

    String catalogName = hiveDB.getCatalogName() != null ? hiveDB.getCatalogName().toLowerCase() : null;

to:

    String catalogName = null;

In org/apache/atlas/hive/hook/AtlasHiveHookContext.java, change

    this.metastoreHandler = (listenerEvent != null) ? metastoreEvent.getIHMSHandler() : null;

to:

    this.metastoreHandler = null;

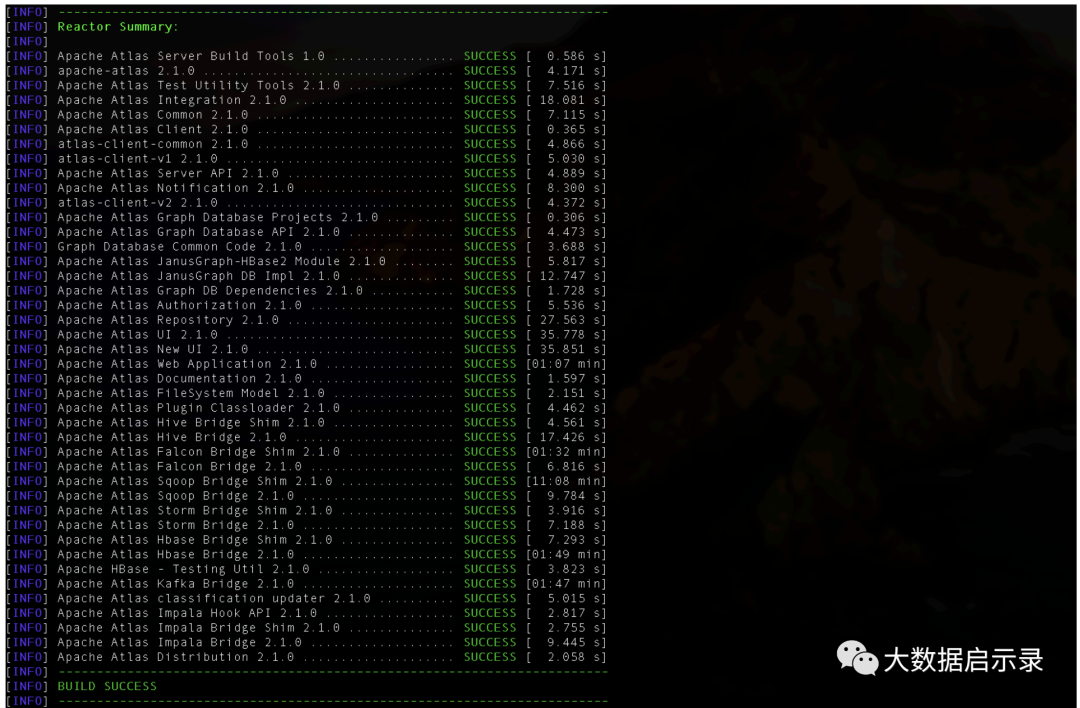

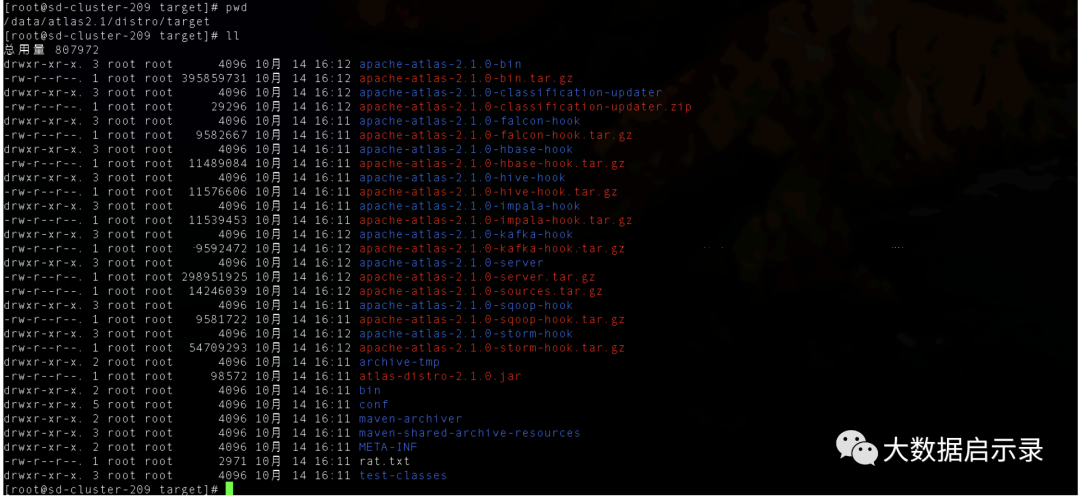
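The two source changes can also be applied with sed. A minimal sketch, assuming the standard Maven layout addons/hive-bridge/src/main/java; patch_hive2_compat is a hypothetical helper. It matches each assignment loosely, so small spacing differences in the source do not matter, and then greps to confirm both edits took effect.

```shell
#!/usr/bin/env bash
# patch_hive2_compat SRC_ROOT
# SRC_ROOT: Java source root of the hive-bridge module, assumed to be
# addons/hive-bridge/src/main/java (standard Maven layout).
patch_hive2_compat() {
  local src="$1"
  local bridge="$src/org/apache/atlas/hive/bridge/HiveMetaStoreBridge.java"
  local ctx="$src/org/apache/atlas/hive/hook/AtlasHiveHookContext.java"
  local f
  for f in "$bridge" "$ctx"; do
    [ -f "$f" ] || { echo "not found: $f" >&2; return 1; }
  done
  # Replace the whole right-hand side of each assignment with null.
  sed 's|String catalogName *= *hiveDB\.getCatalogName().*;|String catalogName = null;|' \
    "$bridge" > "$bridge.tmp" && mv "$bridge.tmp" "$bridge" || return 1
  sed 's|this\.metastoreHandler *= *.*getIHMSHandler().*;|this.metastoreHandler = null;|' \
    "$ctx" > "$ctx.tmp" && mv "$ctx.tmp" "$ctx" || return 1
  # Confirm both edits landed.
  grep -q 'String catalogName = null;' "$bridge" &&
    grep -q 'this.metastoreHandler = null;' "$ctx"
}
```

For example: `patch_hive2_compat apache-atlas-sources-2.1.0/addons/hive-bridge/src/main/java`.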

4: Build and package

    export MAVEN_OPTS="-Xms2g -Xmx2g"
    # Append -X to print debug output
    sudo mvn clean -DskipTests install
    sudo mvn clean -DskipTests package -Pdist

5: Install Atlas

    # Copy the install package to /data/app and rename it to atlas2.1
    cp -r apache-atlas-2.1.0/ /data/app/
    mv /data/app/apache-atlas-2.1.0 /data/app/atlas2.1

    # Config file
    vim /data/app/atlas2.1/conf/atlas-application.properties

    # Settings
    ######### Server Properties #########
    atlas.rest.address=http://192.168.1.209:21000
    ## Server port configuration
    atlas.server.http.port=21000
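Instead of editing atlas-application.properties in vim, the same keys can be set non-interactively, which helps when the install is repeated on another machine. A minimal sketch with a hypothetical set_prop helper; it assumes plain key=value lines (no spaces around =) and values without backslashes, since awk -v would interpret them.

```shell
#!/usr/bin/env bash
# set_prop KEY VALUE FILE
# Hypothetical helper: rewrites the first "KEY=..." line (or a commented-out
# "#KEY=..." line) to "KEY=VALUE", or appends "KEY=VALUE" if KEY is absent.
set_prop() {
  local key="$1" value="$2" file="$3" tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub("^[# \t]+", "", line)        # ignore leading hashes and blanks when matching
      if (!found && index(line, k "=") == 1) {
        print k "=" v
        found = 1
        next
      }
      print
    }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$file"                 # overwrite in place, keeping file permissions
  rm -f "$tmp"
}
```

For example, `set_prop atlas.server.http.port 21000 /data/app/atlas2.1/conf/atlas-application.properties`. Because the match ignores a leading #, it also works against commented-out defaults in the template.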

6: Integrate CDH components

6.1 Integrate Kafka

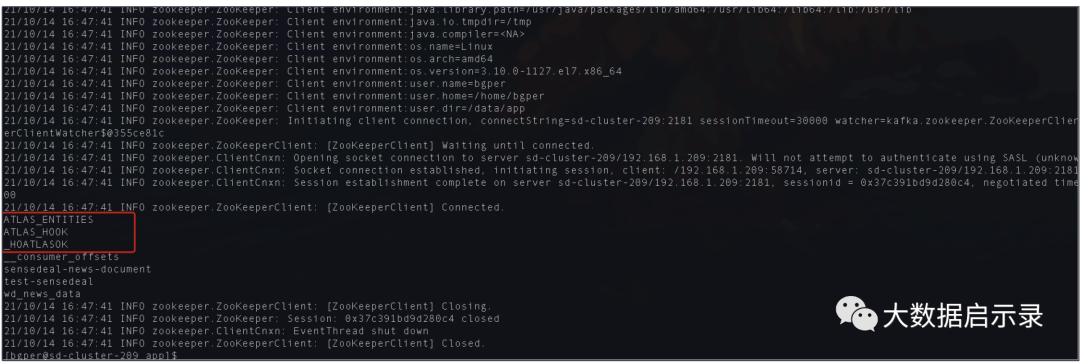

    # Config file
    vim /data/app/atlas2.1/conf/atlas-application.properties

    # Settings (set atlas.notification.embedded=true to use the embedded Kafka instead)
    atlas.notification.embedded=false
    atlas.kafka.zookeeper.connect=sd-cluster-207:2181,sd-cluster-208:2181,sd-cluster-209:2181
    atlas.kafka.bootstrap.servers=sd-cluster-207:9092,sd-cluster-208:9092,sd-cluster-209:9092
    atlas.kafka.zookeeper.session.timeout.ms=4000
    atlas.kafka.zookeeper.connection.timeout.ms=2000
    atlas.kafka.enable.auto.commit=true
    atlas.kafka.offsets.topic.replication.factor=3

    # Create the topics
    kafka-topics --zookeeper sd-cluster-209:2181 --create --replication-factor 3 --partitions 3 --topic _HOATLASOK
    kafka-topics --zookeeper sd-cluster-209:2181 --create --replication-factor 3 --partitions 3 --topic ATLAS_HOOK
    kafka-topics --zookeeper sd-cluster-209:2181 --create --replication-factor 3 --partitions 3 --topic ATLAS_ENTITIES

    # List the topics to confirm
    kafka-topics --list --zookeeper sd-cluster-209:2181
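The topic-creation commands differ only in the topic name, so they can be looped. A minimal sketch, assuming the CDH kafka-topics wrapper is on PATH; create_topics and the KAFKA_TOPICS override are hypothetical names. It adds kafka-topics' --if-not-exists flag so that a re-run skips topics that already exist.

```shell
#!/usr/bin/env bash
# create_topics ZK_CONNECT TOPIC...
# Hypothetical helper. KAFKA_TOPICS defaults to the CDH "kafka-topics"
# wrapper; point it at another command (e.g. a stub) for a dry run.
create_topics() {
  local zk="$1"; shift
  local cmd="${KAFKA_TOPICS:-kafka-topics}"
  local topic
  for topic in "$@"; do
    "$cmd" --zookeeper "$zk" --create --if-not-exists \
      --replication-factor 3 --partitions 3 --topic "$topic" || return 1
  done
}
```

For example: `create_topics sd-cluster-209:2181 ATLAS_HOOK ATLAS_ENTITIES`.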

6.2 Integrate HBase

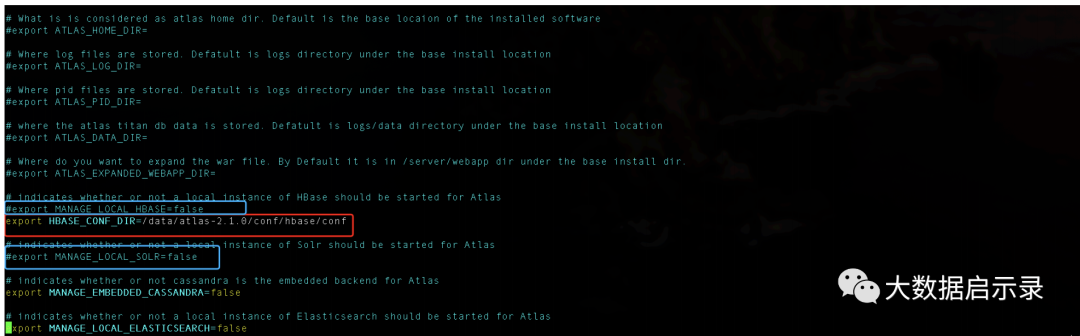

    # Config file
    vim /data/app/atlas2.1/conf/atlas-application.properties

    # Settings
    atlas.graph.storage.hostname=sd-cluster-207:2181,sd-cluster-208:2181,sd-cluster-209:2181

    # Symlink the HBase config into Atlas's conf/hbase directory
    ln -s /etc/hbase/conf/ /data/app/atlas2.1/conf/hbase/

    # Config file
    vim /data/app/atlas2.1/conf/atlas-env.sh
    export HBASE_CONF_DIR=/data/app/atlas2.1/conf/hbase/conf
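A quick check that atlas-env.sh points at a directory that really contains hbase-site.xml catches a broken symlink before Atlas fails at startup. A sketch with a hypothetical check_hbase_conf helper; it assumes HBASE_CONF_DIR is set by a literal export line (the last such line wins, as in the shell) and prints the resolved directory on success.

```shell
#!/usr/bin/env bash
# check_hbase_conf ATLAS_ENV_SH
# Hypothetical sanity check: reads HBASE_CONF_DIR from atlas-env.sh and
# verifies that the directory (a symlink is fine) contains hbase-site.xml.
check_hbase_conf() {
  local env_file="$1" dir
  dir=$(sed -n 's/^[[:space:]]*export[[:space:]]\{1,\}HBASE_CONF_DIR=//p' "$env_file" | tail -n 1)
  dir=${dir%\"}   # strip an optional trailing double quote
  dir=${dir#\"}   # strip an optional leading double quote
  if [ -z "$dir" ]; then
    echo "HBASE_CONF_DIR not set in $env_file" >&2
    return 1
  fi
  if [ ! -f "$dir/hbase-site.xml" ]; then
    echo "missing: $dir/hbase-site.xml" >&2
    return 1
  fi
  echo "$dir"
}
```

For example: `check_hbase_conf /data/app/atlas2.1/conf/atlas-env.sh`.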

6.3 Integrate Solr

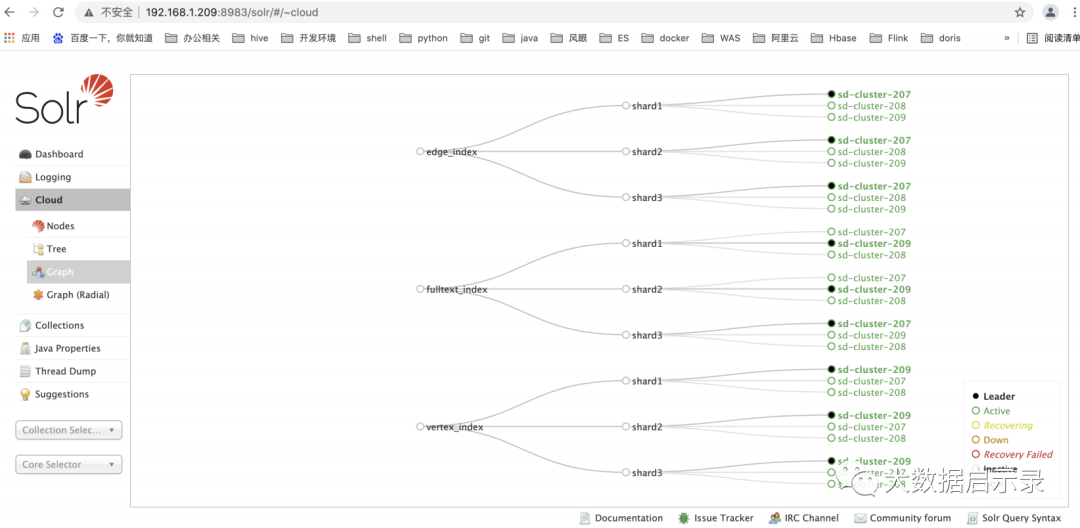

    # Config file
    vim /data/app/atlas2.1/conf/atlas-application.properties

    # Settings
    atlas.graph.index.search.solr.zookeeper-url=sd-cluster-207:2181/solr,sd-cluster-208:2181/solr,sd-cluster-209:2181/solr

    # Copy the solr folder from Atlas's conf directory into the Solr directory, renamed to atlas_solr
    cp -r /data/app/atlas2.1/conf/solr/ /opt/cloudera/parcels/CDH/lib/solr/atlas_solr
    # Sync /opt/cloudera/parcels/CDH/lib/solr/atlas_solr to the other nodes: sd-cluster-207, sd-cluster-208

    # Change the owner and group
    chown -R solr:solr /opt/cloudera/parcels/CDH/lib/solr/
    # Switch user
    su solr

    # Create the Solr collections
    /opt/cloudera/parcels/CDH/lib/solr/bin/solr create -c vertex_index -d /opt/cloudera/parcels/CDH/lib/solr/atlas_solr -force -shards 3 -replicationFactor 3
    /opt/cloudera/parcels/CDH/lib/solr/bin/solr create -c edge_index -d /opt/cloudera/parcels/CDH/lib/solr/atlas_solr -force -shards 3 -replicationFactor 3
    /opt/cloudera/parcels/CDH/lib/solr/bin/solr create -c fulltext_index -d /opt/cloudera/parcels/CDH/lib/solr/atlas_solr -force -shards 3 -replicationFactor 3
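The three solr create calls differ only in the collection name. A minimal loop, assuming the CDH parcel path used above and the same -shards and -replicationFactor values; create_atlas_collections and the SOLR_BIN override are hypothetical names. Run it as the solr user, as in the steps above.

```shell
#!/usr/bin/env bash
# create_atlas_collections CONF_DIR [SHARDS] [REPLICAS]
# Hypothetical helper. SOLR_BIN defaults to the CDH parcel path; point it
# at another command (e.g. a stub) for a dry run.
create_atlas_collections() {
  local conf_dir="$1" shards="${2:-3}" replicas="${3:-3}"
  local solr="${SOLR_BIN:-/opt/cloudera/parcels/CDH/lib/solr/bin/solr}"
  local c
  # The three index collections Atlas expects.
  for c in vertex_index edge_index fulltext_index; do
    "$solr" create -c "$c" -d "$conf_dir" -force \
      -shards "$shards" -replicationFactor "$replicas" || return 1
  done
}
```

For example: `create_atlas_collections /opt/cloudera/parcels/CDH/lib/solr/atlas_solr`.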

6.4 Start Atlas

    /data/app/atlas2.1/bin/atlas_start.py

Then open http://192.168.1.209:21000/ in a browser. The default username and password are both admin.

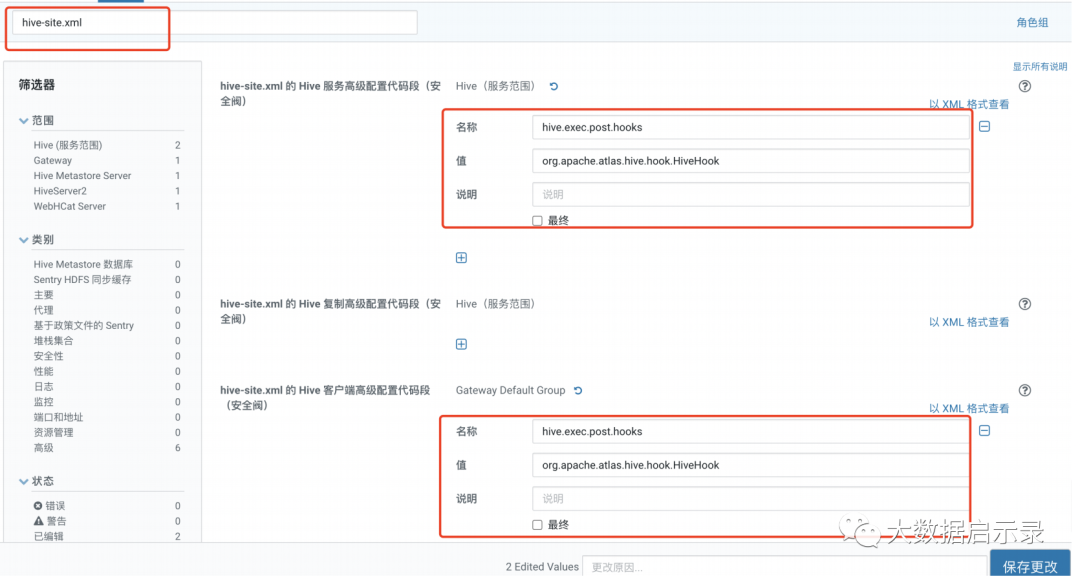
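Atlas can take a while to come up after atlas_start.py returns. A sketch that polls the REST endpoint /api/atlas/admin/version with the default admin/admin credentials until it answers HTTP 200; wait_for_atlas is a hypothetical helper, and the retry count and interval are arbitrary defaults.

```shell
#!/usr/bin/env bash
# wait_for_atlas BASE_URL [RETRIES] [INTERVAL_SECONDS]
# Hypothetical helper: polls GET /api/atlas/admin/version until it returns
# HTTP 200 or the retries run out.
wait_for_atlas() {
  local base="$1" retries="${2:-30}" interval="${3:-10}" i code
  for ((i = 1; i <= retries; i++)); do
    code=$(curl -s -m 5 -o /dev/null -w '%{http_code}' \
      -u admin:admin "$base/api/atlas/admin/version")
    if [ "$code" = "200" ]; then
      echo "Atlas is up at $base"
      return 0
    fi
    # Only sleep if another attempt follows.
    [ "$i" -lt "$retries" ] && sleep "$interval"
  done
  echo "Atlas did not respond at $base" >&2
  return 1
}
```

For example: `wait_for_atlas http://192.168.1.209:21000`.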

6.5 Integrate Hive

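In Cloudera Manager, set "Hive Service Advanced Configuration Snippet (Safety Valve) for hive-site.xml":

    Name: hive.exec.post.hooks
    Value: org.apache.atlas.hive.hook.HiveHook

Set "Hive Client Advanced Configuration Snippet (Safety Valve) for hive-site.xml":

    Name: hive.exec.post.hooks
    Value: org.apache.atlas.hive.hook.HiveHook,org.apache.hadoop.hive.ql.hooks.LineageLogger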

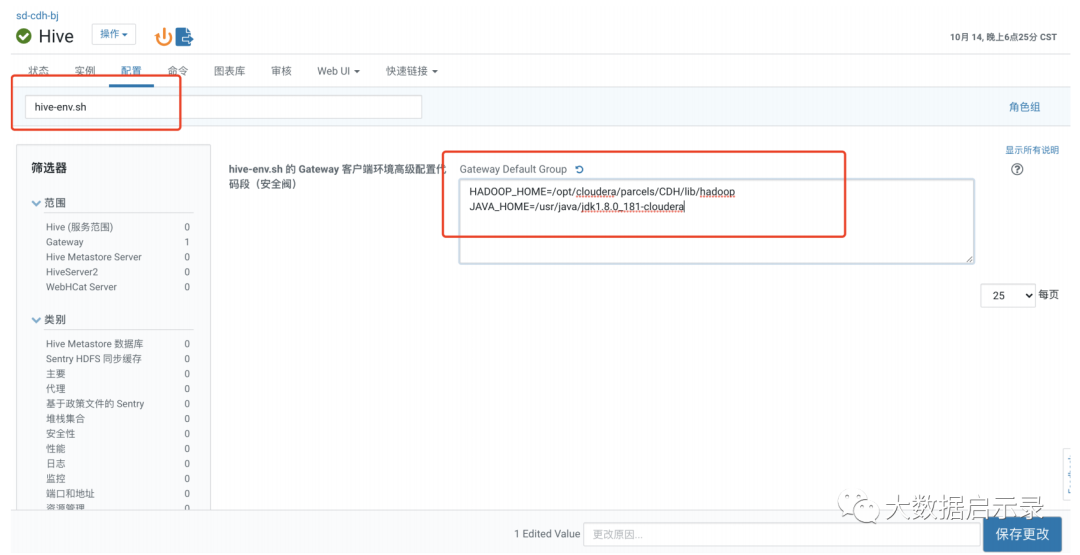

Set "Gateway Client Environment Advanced Configuration Snippet (Safety Valve) for hive-env.sh":

    HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop
    JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

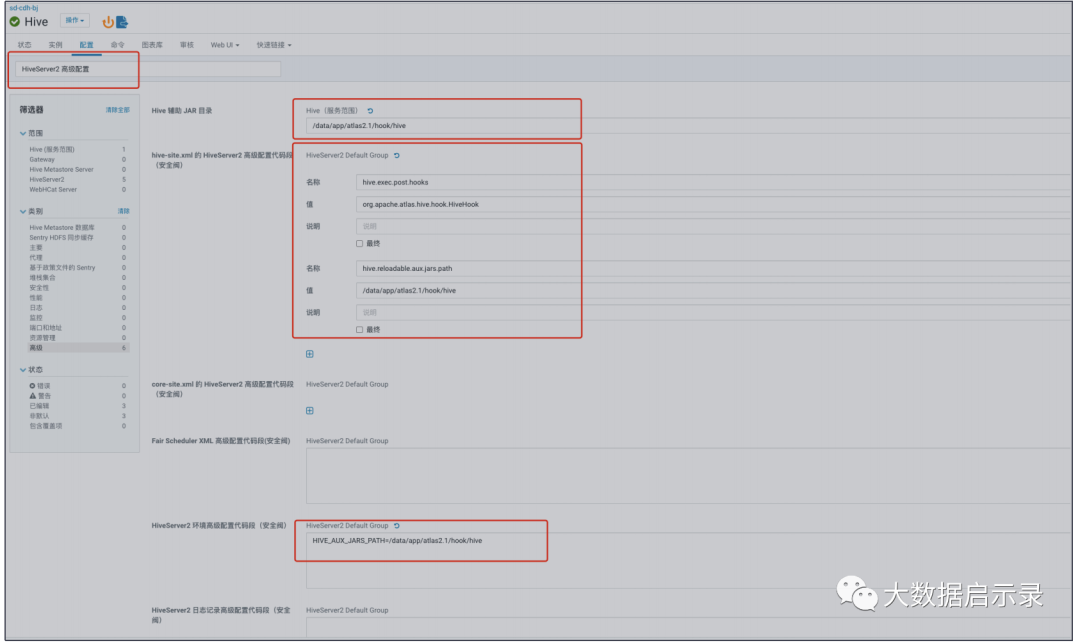

Set "Hive Auxiliary JARs Directory" to:

    /data/app/atlas2.1/hook/hive

Set "HiveServer2 Advanced Configuration Snippet (Safety Valve) for hive-site.xml":

    Name: hive.exec.post.hooks
    Value: org.apache.atlas.hive.hook.HiveHook,org.apache.hadoop.hive.ql.hooks.LineageLogger
    Name: hive.reloadable.aux.jars.path
    Value: /data/app/atlas2.1/hook/hive

Set "HiveServer2 Environment Advanced Configuration Snippet (Safety Valve)":

    HIVE_AUX_JARS_PATH=/data/app/atlas2.1/hook/hive

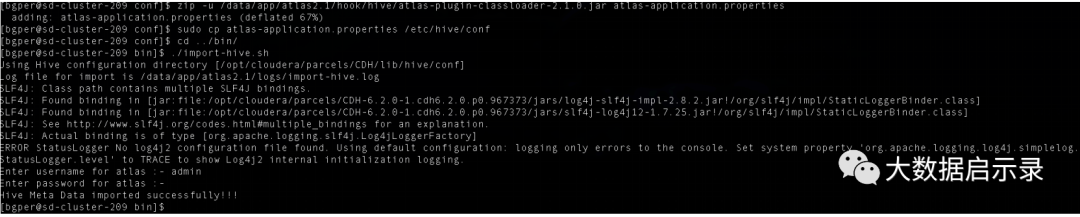
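After Cloudera Manager deploys the client configuration, a quick check confirms that the hook class reached hive-site.xml and that the classloader jar is in the auxiliary directory. A sketch with a hypothetical check_hive_atlas helper; treating /etc/hive/conf/hive-site.xml as the deployed client config on a CDH gateway host is an assumption.

```shell
#!/usr/bin/env bash
# check_hive_atlas HIVE_SITE_XML AUX_JAR_DIR
# Hypothetical check: reports every missing piece and returns non-zero
# if any check fails.
check_hive_atlas() {
  local site="$1" aux="$2" rc=0
  if ! grep -q 'org\.apache\.atlas\.hive\.hook\.HiveHook' "$site" 2>/dev/null; then
    echo "HiveHook not found in $site" >&2
    rc=1
  fi
  if [ ! -f "$aux/atlas-plugin-classloader-2.1.0.jar" ]; then
    echo "atlas-plugin-classloader-2.1.0.jar not found in $aux" >&2
    rc=1
  fi
  return "$rc"
}
```

For example: `check_hive_atlas /etc/hive/conf/hive-site.xml /data/app/atlas2.1/hook/hive`.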

Add atlas-application.properties to atlas-plugin-classloader-2.1.0.jar in atlas2.1/hook/hive:

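    # Switch to Atlas's conf directory
    cd /data/app/atlas2.1/conf
    # Add the file to the jar
    zip -u /data/app/atlas2.1/hook/hive/atlas-plugin-classloader-2.1.0.jar atlas-application.properties
    # Copy the config file into Hive's config directory
    sudo cp atlas-application.properties /etc/hive/conf
    # scp it to the Hive config directory on the other hosts
    scp atlas-application.properties sd-cluster-207:/etc/hive/conf
    scp atlas-application.properties sd-cluster-208:/etc/hive/conf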
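Copying the file to each Hive host can be looped. A sketch with a hypothetical push_conf helper; it assumes passwordless SSH to each host, and SCP_CMD (default scp) is an override introduced here for dry runs.

```shell
#!/usr/bin/env bash
# push_conf FILE DEST_DIR HOST...
# Hypothetical helper: copies FILE into DEST_DIR on every HOST, stopping
# at the first failure.
push_conf() {
  local file="$1" dest="$2"; shift 2
  local scp_cmd="${SCP_CMD:-scp}" host
  [ -f "$file" ] || { echo "not found: $file" >&2; return 1; }
  for host in "$@"; do
    "$scp_cmd" "$file" "${host}:${dest}/" || return 1
  done
}
```

For example: `push_conf atlas-application.properties /etc/hive/conf sd-cluster-207 sd-cluster-208`.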

Import Hive metadata into Atlas

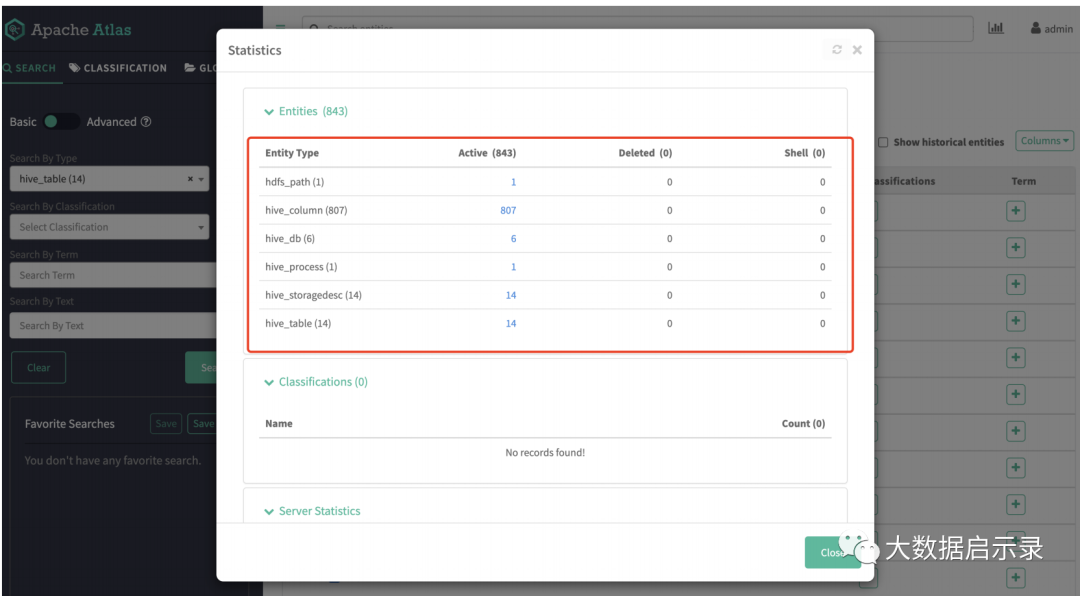

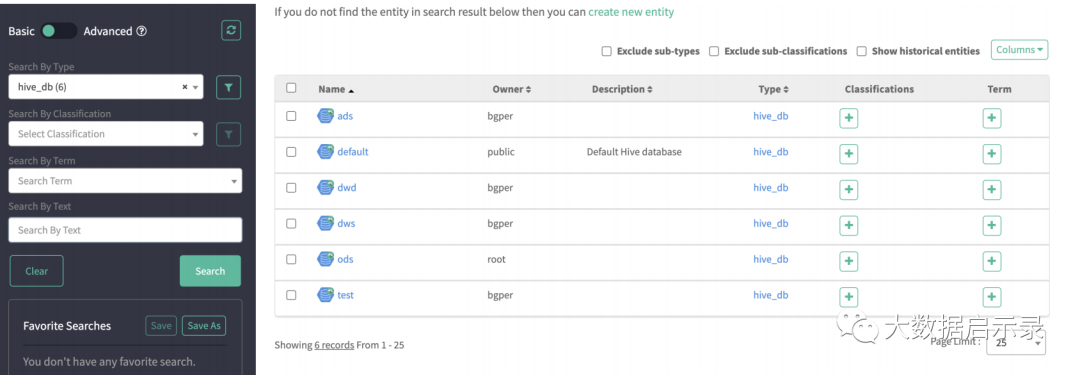

    cd /data/app/atlas2.1/bin
    ./import-hive.sh

Log in to Atlas to view the imported Hive metadata.

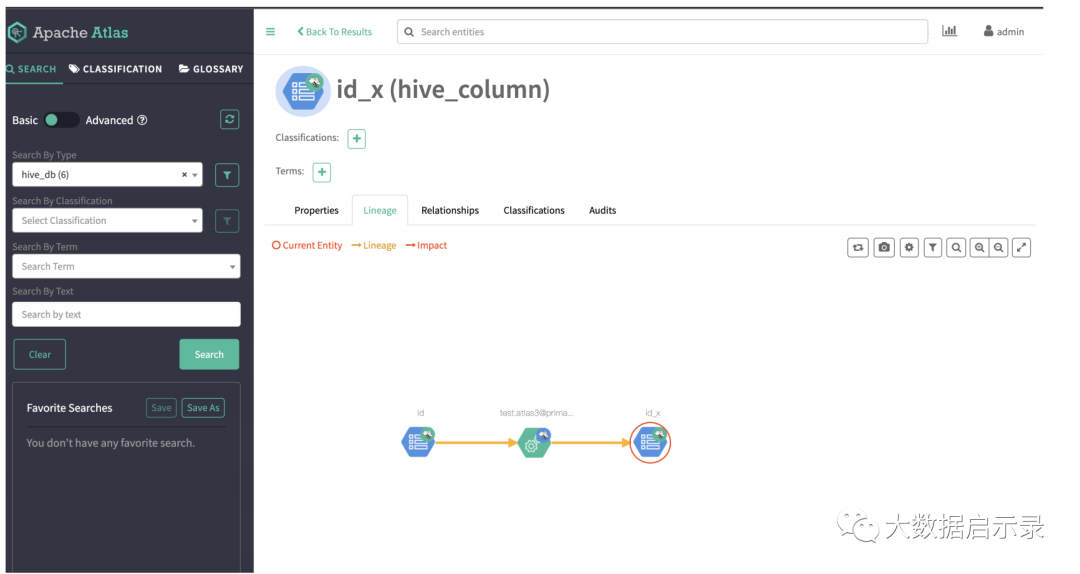

7: Verification

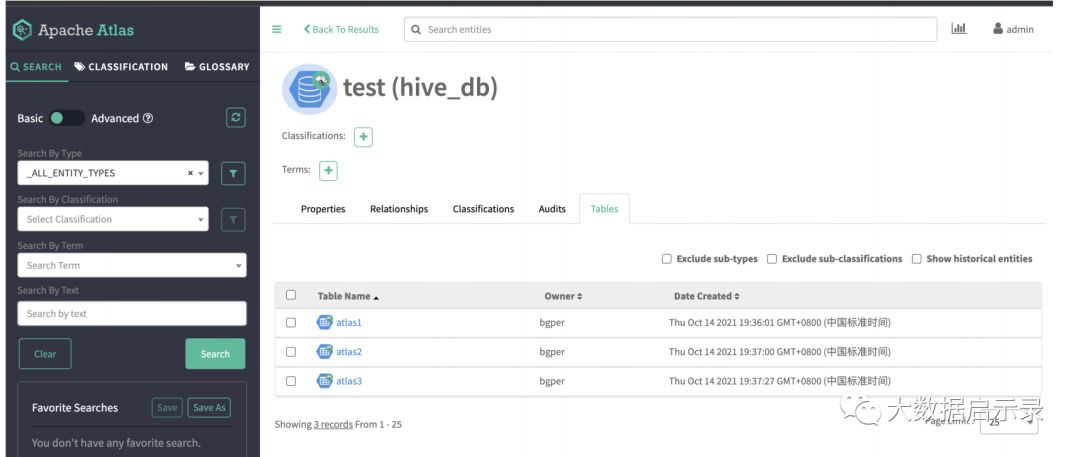

Create tables

    create table test.atlas1 as select '1' as id, 'wangman' as name;
    create table test.atlas2 as select '1' as id, 'jilin' as address;
    create table test.atlas3 as
    select a.id as id_x, a.name as name_x, b.address as address_x
    from test.atlas1 a
    left join test.atlas2 b on a.id = b.id;

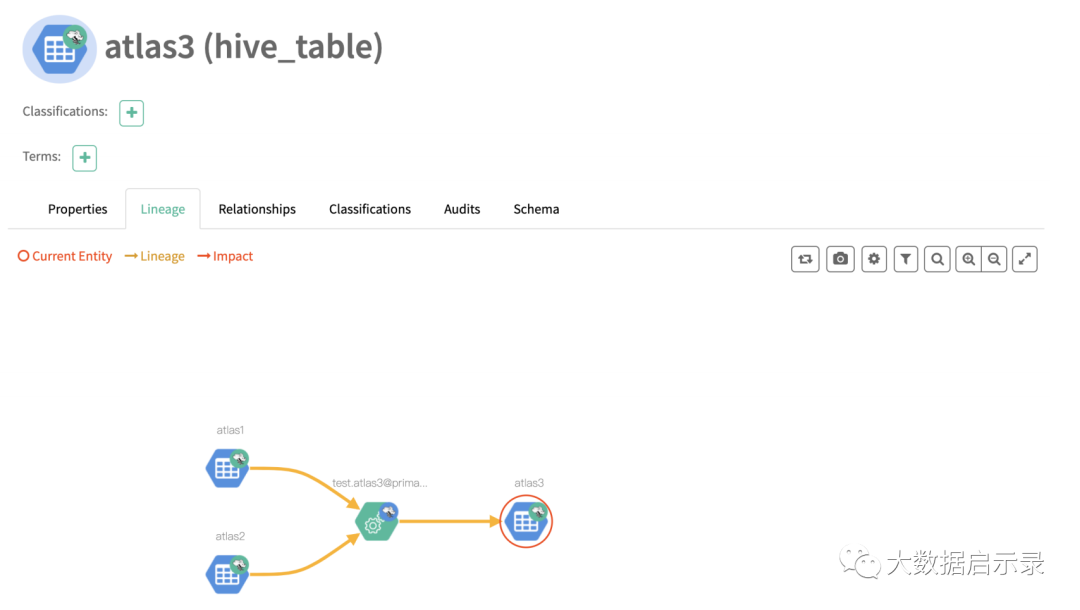

Table lineage

Automatically captured lineage
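Lineage can also be fetched over REST instead of through the UI. A sketch that builds the URL for Atlas's v2 lineage-by-unique-attribute endpoint for a Hive table, assuming the Hive hook's qualifiedName format db.table@cluster and the default cluster name primary (from atlas.cluster.name); lineage_url is a hypothetical helper, and the endpoint path and the direction/depth values should be checked against the REST documentation for your build.

```shell
#!/usr/bin/env bash
# lineage_url BASE_URL DB TABLE [CLUSTER_NAME]
# Hypothetical helper: prints the v2 lineage URL for a hive_table entity,
# identified by its qualifiedName "db.table@cluster".
lineage_url() {
  local base="$1" db="$2" table="$3" cluster="${4:-primary}"
  printf '%s/api/atlas/v2/lineage/uniqueAttribute/type/hive_table?attr:qualifiedName=%s.%s@%s&direction=BOTH&depth=3\n' \
    "$base" "$db" "$table" "$cluster"
}
```

For example: `curl -s -u admin:admin "$(lineage_url http://192.168.1.209:21000 test atlas3)"`.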

This article is reprinted from 大数据启示录.