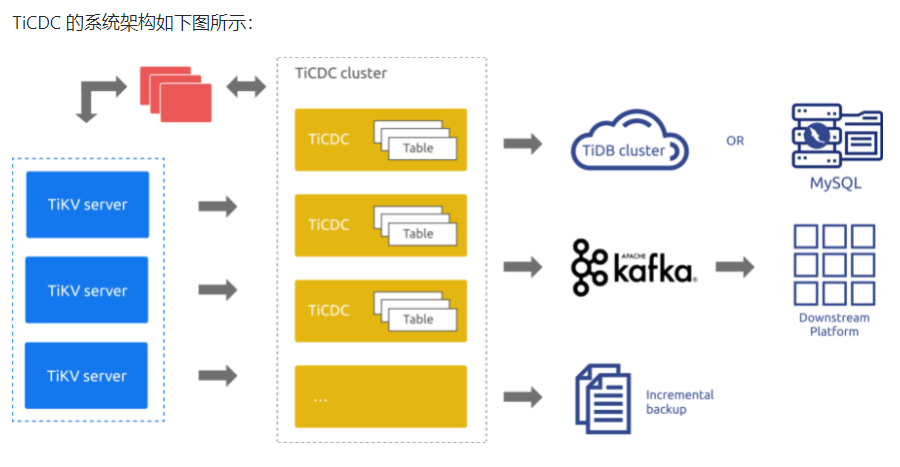

TICDC 是TIDB KV level 捕获数据变化的工具。 属于 TIDB 数据库集群中的一个组件。

TICDC 不需要打开bin log, 同时可以支持订阅式的多个数据 sink 的同步, 像mysql, kafak, 文件什么的

TICDC 运行时是一种无状态节点,通过 PD 内部的 etcd 实现高可用。TiCDC 集群支持创建多个同步任务,向多个不同的下游进行数据同步。

官网详情: https://docs.pingcap.com/zh/tidb/stable/ticdc-overview#ticdc-%E7%AE%80%E4%BB%8B

我们可以使用TIUP工具来进行安装。

我们利用tiup 集群扩容的命令来安装 TICDC这个组件。

编辑配置文件 scale-out.yaml

INFRA [mysql@wqdcsrv3065 ~]# cat scale-out.yaml cdc_servers: - host: 10.67.200.37 port: 8300 deploy_dir: "/data/tidb/tidb-deploy/cdc-8300" log_dir: "/data/tidb/tidb-deploy/log"

运行扩容命令,安装TICDC组件 :

tiup cluster scale-out test-tidb scale-out.yaml -umysql -p

INFRA [mysql@wqdcsrv3065 ~]# tiup cluster scale-out test-tidb scale-out.yaml -umysql -p tiup is checking updates for component cluster ... A new version of cluster is available: The latest version: v1.9.1 Local installed version: v1.8.2 Update current component: tiup update cluster Update all components: tiup update --all Starting component `cluster`: /home/mysql/.tiup/components/cluster/v1.8.2/tiup-cluster /home/mysql/.tiup/components/cluster/v1.8.2/tiup-cluster scale-out test-tidb scale-out.yaml -umysql -p Input SSH password: + Detect CPU Arch - Detecting node 10.67.200.37 ... Done Please confirm your topology: Cluster type: tidb Cluster name: test-tidb Cluster version: v5.3.0 Role Host Ports OS/Arch Directories ---- ---- ----- ------- ----------- cdc 10.67.200.37 8300 linux/x86_64 /data/tidb/tidb-deploy/cdc-8300,/data/tidb/tidb-data/cdc-8300 Attention: 1. If the topology is not what you expected, check your yaml file. 2. Please confirm there is no port/directory conflicts in same host. Do you want to continue? [y/N]: (default=N) y + [ Serial ] - SSHKeySet: privateKey=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/ssh/id_rsa, publicKey=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/ssh/id_rsa.pub + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 + [Parallel] - UserSSH: user=mysql, host=10.67.200.37 - Download cdc:v5.3.0 (linux/amd64) ... Done + [ Serial ] - UserSSH: user=mysql, host=10.67.200.37 + [ Serial ] - Mkdir: host=10.67.200.37, directories='/data/tidb/tidb-deploy/cdc-8300','/data/tidb/tidb-deploy/cdc-8300/bin','/data/tidb/tidb-deploy/cdc-8300/conf','/data/tidb/tidb-deploy/cdc-8300/scripts' + [ Serial ] - Mkdir: host=10.67.200.37, directories='/data/tidb/tidb-data/cdc-8300' + [ Serial ] - Mkdir: host=10.67.200.37, directories='/data/tidb/tidb-deploy/log' + [ Serial ] - CopyComponent: component=cdc, version=v5.3.0, remote=10.67.200.37:/data/tidb/tidb-deploy/cdc-8300 os=linux, arch=amd64 + [ Serial ] - ScaleConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, service=cdc-8300.service, deploy_dir=/data/tidb/tidb-deploy/cdc-8300, data_dir=[/data/tidb/tidb-data/cdc-8300], log_dir=/data/tidb/tidb-deploy/log, cache_dir= + Check status Enabling component cdc Enabling instance 10.67.200.37:8300 Enable instance 10.67.200.37:8300 success Enabling component node_exporter Enabling instance 10.67.200.37 Enable 10.67.200.37 success Enabling component blackbox_exporter Enabling instance 10.67.200.37 Enable 10.67.200.37 success + [ Serial ] - Save meta + [ Serial ] - Start Cluster Starting component cdc Starting instance 10.67.200.37:8300 Start instance 10.67.200.37:8300 success Starting component node_exporter Starting instance 10.67.200.37 Start 10.67.200.37 success Starting component blackbox_exporter Starting instance 10.67.200.37 Start 10.67.200.37 success + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/tidb-4000.service, deploy_dir=/data/tidb/tidb-deploy/tidb-4000, data_dir=[], log_dir=/data/tidb/tidb-deploy/tidb-4000/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/pd-2379.service, deploy_dir=/data/tidb/tidb-deploy/pd-2379, data_dir=[/data/tidb/tidb-data/pd-2379], log_dir=/data/tidb/tidb-deploy/pd-2379/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/tikv-20161.service, deploy_dir=/data/tidb/tidb-deploy/tikv-20161, data_dir=[/data/tidb/tidb-data/tikv-20161], log_dir=/data/tidb/tidb-deploy/tikv-20161/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/tikv-20162.service, deploy_dir=/data/tidb/tidb-deploy/tikv-20162, data_dir=[/data/tidb/tidb-data/tikv-20162], log_dir=/data/tidb/tidb-deploy/tikv-20162/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/tikv-20160.service, deploy_dir=/data/tidb/tidb-deploy/tikv-20160, data_dir=[/data/tidb/tidb-data/tikv-20160], log_dir=/data/tidb/tidb-deploy/tikv-20160/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/tiflash-9000.service, deploy_dir=/data/tidb/tidb-deploy/tiflash-9000, data_dir=[/data/tidb/tidb-data/tiflash-9000], log_dir=/data/tidb/tidb-deploy/tiflash-9000/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/cdc-8300.service, deploy_dir=/data/tidb/tidb-deploy/cdc-8300, data_dir=[/data/tidb/tidb-data/cdc-8300], log_dir=/data/tidb/tidb-deploy/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/prometheus-9090.service, deploy_dir=/data/tidb/tidb-deploy/prometheus-9090, data_dir=[/data/tidb/tidb-data/prometheus-9090], log_dir=/data/tidb/tidb-deploy/prometheus-9090/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - InitConfig: cluster=test-tidb, user=mysql, host=10.67.200.37, path=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache/grafana-3000.service, deploy_dir=/data/tidb/tidb-deploy/grafana-3000, data_dir=[], log_dir=/data/tidb/tidb-deploy/grafana-3000/log, cache_dir=/home/mysql/.tiup/storage/cluster/clusters/test-tidb/config-cache + [ Serial ] - SystemCtl: host=10.67.200.37 action=reload prometheus-9090.service + [ Serial ] - UpdateTopology: cluster=test-tidb Scaled cluster `test-tidb` out successfully

我们在集群中查看TICDC的组件:

INFRA [mysql@wqdcsrv3065 ~]# tiup cluster display test-tidb | grep cdc tiup is checking updates for component cluster ... A new version of cluster is available: The latest version: v1.9.1 Local installed version: v1.8.2 Update current component: tiup update cluster Update all components: tiup update --all Starting component `cluster`: /home/mysql/.tiup/components/cluster/v1.8.2/tiup-cluster /home/mysql/.tiup/components/cluster/v1.8.2/tiup-cluster display test-tidb 10.67.200.37:8300 cdc 10.67.200.37 8300 linux/x86_64 Up /data/tidb/tidb-data/cdc-8300 /data/tidb/tidb-deploy/cdc-8300

我们还可以通过 tiup ctl 查看组件的安装信息:

tiup ctl:v5.0.0 cdc capture list --pd=http://10.67.200.37:2379

INFRA [mysql@wqdcsrv3065 ~]# tiup ctl:v5.0.0 cdc capture list --pd=http://10.67.200.37:2379 Starting component `ctl`: /home/mysql/.tiup/components/ctl/v5.0.0/ctl /home/mysql/.tiup/components/ctl/v5.0.0/ctl cdc capture list --pd=http://10.67.200.37:2379 [ { "id": "0d13164f-791f-4b2b-adea-7f51facf88dc", "is-owner": true, "address": "10.67.200.37:8300" } ]

安装好TICDC工具后,我们要进行一下测试: 同步源TIDB 数据 到 目标MYSQL 表中:

首先我们需要在目标MYSQL数据库中 load 进去时区表:

uat mysql@wqdcsrv3352[11:02:02]:~ $ /opt/mysql/product/percona8.0/bin/mysql_tzinfo_to_sql /usr/share/zoneinfo | /opt/mysql/product/percona8.0/bin/mysql --login-path=root3021 --database mysql

Warning: Unable to load '/usr/share/zoneinfo/iso3166.tab' as time zone. Skipping it.

Warning: Unable to load '/usr/share/zoneinfo/leapseconds' as time zone. Skipping it.

Warning: Unable to load '/usr/share/zoneinfo/tzdata.zi' as time zone. Skipping it.

Warning: Unable to load '/usr/share/zoneinfo/zone.tab' as time zone. Skipping it.

Warning: Unable to load '/usr/share/zoneinfo/zone1970.tab' as time zone. Skipping it.

mysql> show tables like '%time_zone%';

+-------------------------------+

| Tables_in_mysql (%time_zone%) |

+-------------------------------+

| time_zone |

| time_zone_leap_second |

| time_zone_name |

| time_zone_transition |

| time_zone_transition_type |

+-------------------------------+

5 rows in set (0.00 sec)

我们在源端和目标端建一张相同的数据表:

[root@127.0.0.1][(none)]> create database dbcdc; Query OK, 0 rows affected (0.13 sec) [root@127.0.0.1][(none)]> use dbcdc; Database changed [root@127.0.0.1][dbcdc]> create table T1(id int primary key, name varchar(20)); Query OK, 0 rows affected (0.13 sec)

下一步,我们尝试一下启动CDC同步任务:

INFRA [mysql@wqdcsrv3065 bin]# pwd /data/tidb/tidb-deploy/cdc-8300/bin INFRA [mysql@wqdcsrv3065 bin]# ls cdc INFRA [mysql@wqdcsrv3065 bin]# ./cdc cli changefeed create --pd=http://10.67.200.37:2379 --sink-uri="mysql://app_monitor:admin123@10.67.38.50:3021/" --changefeed-id="replication-task-1" --sort-engine="unified" Create changefeed successfully! ID: replication-task-1 Info: {"sink-uri":"mysql://app_monitor:admin123@10.67.38.50:3021/","opts":{"_changefeed_id":"sink-verify"},"create-time":"2022-02-25T11:14:05.172548799+08:00","start-ts":431425806699331585,"target-ts":0,"admin-job-type":0,"sort-engine":"unified","sort-dir":"","config":{"case-sensitive":true,"enable-old-value":true,"force-replicate":false,"check-gc-safe-point":true,"filter":{"rules":["*.*"],"ignore-txn-start-ts":null},"mounter":{"worker-num":16},"sink":{"dispatchers":null,"protocol":"default"},"cyclic-replication":{"enable":false,"replica-id":0,"filter-replica-ids":null,"id-buckets":0,"sync-ddl":false},"scheduler":{"type":"table-number","polling-time":-1},"consistent":{"level":"none","max-log-size":64,"flush-interval":1000,"storage":""}},"state":"normal","history":null,"error":null,"sync-point-enabled":false,"sync-point-interval":600000000000,"creator-version":"v5.3.0"}

查看我们刚刚创建的CDC任务: state : nomal 表示运行正常

INFRA [mysql@wqdcsrv3065 bin]# ./cdc cli changefeed list --pd=http://10.67.200.37:2379 [ { "id": "replication-task-1", "summary": { "state": "normal", "tso": 431425830173802497, "checkpoint": "2022-02-25 11:15:34.684", "error": null } } ]

我们需要查看任务的具体信息 : 可以用命令 cdc cli changefeed query

INFRA [mysql@wqdcsrv3065 bin]# ./cdc cli changefeed query --pd=http://10.67.200.37:2379 --changefeed-id=replication-task-1 { "info": { "sink-uri": "mysql://app_monitor:admin123@10.67.38.50:3021/", "opts": { "_changefeed_id": "sink-verify" }, "create-time": "2022-02-25T11:14:05.172548799+08:00", "start-ts": 431425806699331585, "target-ts": 0, "admin-job-type": 0, "sort-engine": "unified", "sort-dir": "", "config": { "case-sensitive": true, "enable-old-value": true, "force-replicate": false, "check-gc-safe-point": true, "filter": { "rules": [ "*.*" ], "ignore-txn-start-ts": null }, "mounter": { "worker-num": 16 }, "sink": { "dispatchers": null, "protocol": "default" }, "cyclic-replication": { "enable": false, "replica-id": 0, "filter-replica-ids": null, "id-buckets": 0, "sync-ddl": false }, "scheduler": { "type": "table-number", "polling-time": -1 }, "consistent": { "level": "none", "max-log-size": 64, "flush-interval": 1000, "storage": "" } }, "state": "normal", "history": null, "error": null, "sync-point-enabled": false, "sync-point-interval": 600000000000, "creator-version": "v5.3.0" }, "status": { "resolved-ts": 431425915501674497, "checkpoint-ts": 431425915239530497, "admin-job-type": 0 }, "count": 0, "task-status": [ { "capture-id": "0d13164f-791f-4b2b-adea-7f51facf88dc", "status": { "tables": { "101": { "start-ts": 431425806699331585, "mark-table-id": 0 }, "65": { "start-ts": 431425806699331585, "mark-table-id": 0 }, "69": { "start-ts": 431425806699331585, "mark-table-id": 0 }, "74": { "start-ts": 431425806699331585, "mark-table-id": 0 }, "88": { "start-ts": 431425806699331585, "mark-table-id": 0 }, "97": { "start-ts": 431425806699331585, "mark-table-id": 0 } }, "operation": {}, "admin-job-type": 0 } } ] }

我们尝试在源端TIDB进行数据的插入操作, 并且观察目标端MYSQL数据库是否应用了CDC的变化:

插入:

源端tidb:

[root@127.0.0.1][dbcdc]> insert into T1 values(1,'jason');

Query OK, 1 row affected (0.01 sec)

目标端mysql:

mysql> select * from t1;

+----+-------+

| id | name |

+----+-------+

| 1 | jason |

+----+-------+

1 row in set (0.00 sec)