本系列总的专栏:一步一步学习OceanBase系列

一、说明

对于ob集群的扩容和租户的扩容,在之前的1.4版本文章里已经有过实操体验。ob2.2版本下操作命令是一样的。

本篇使用的ob版本是目前oceanbase官网免费提供下载的2c8g迷你试用版mini-ob-deploy.tar,关于此试用版的安装,上一篇文章也已经介绍了,本篇不再累述。

本篇主要介绍在ob2.2迷你试用安装版上,进行集群的扩容和租户的扩容,并观察ob集群的负载均衡、分区副本的迁移以及leader选主等特性。

二、我的操作流程是这样的:

1、先搭建一个三副本的1-1-1架构集群。

2、创建两个业务租户,一个mysql类型的租户mysql_test_tent和一个oracle类型的租户ora_test_tent,资源池分别是mysql_pool_test和ora_pool_test,使用的都是1c1g的unit规格。

3、分别在两个租户下创建业务用户aps2,并在业务用户aps2下创建两个测试表,一个是非分区表test,一个是hash分区表test_hash,并插入测试数据。

4、记录在1-1-1集群架构下,各节点资源使用情况,以及两个租户下每个表的分区副本所在节点情况。(扩容前的状态)

5、开始集群扩容,从1-1-1架构增加三台服务器,扩容到2-2-2架构。

6、记录集群扩容到2-2-2架构下,整个集群资源使用情况,两个租户下表分区副本分布情况。(集群扩容后的状态:涉及ob的负载均衡、分区副本迁移、leader_switch等)

7、开始租户扩容,这里只演示增加资源池的unit数量。

8、记录租户扩容后,整个集群资源使用情况,两个租户下表分区副本分布情况。(租户扩容后的状态:涉及ob的负载均衡、分区副本迁移、leader_switch等)

三、最后以手工绘图的形式来展示集群和租户分别扩容后的变化

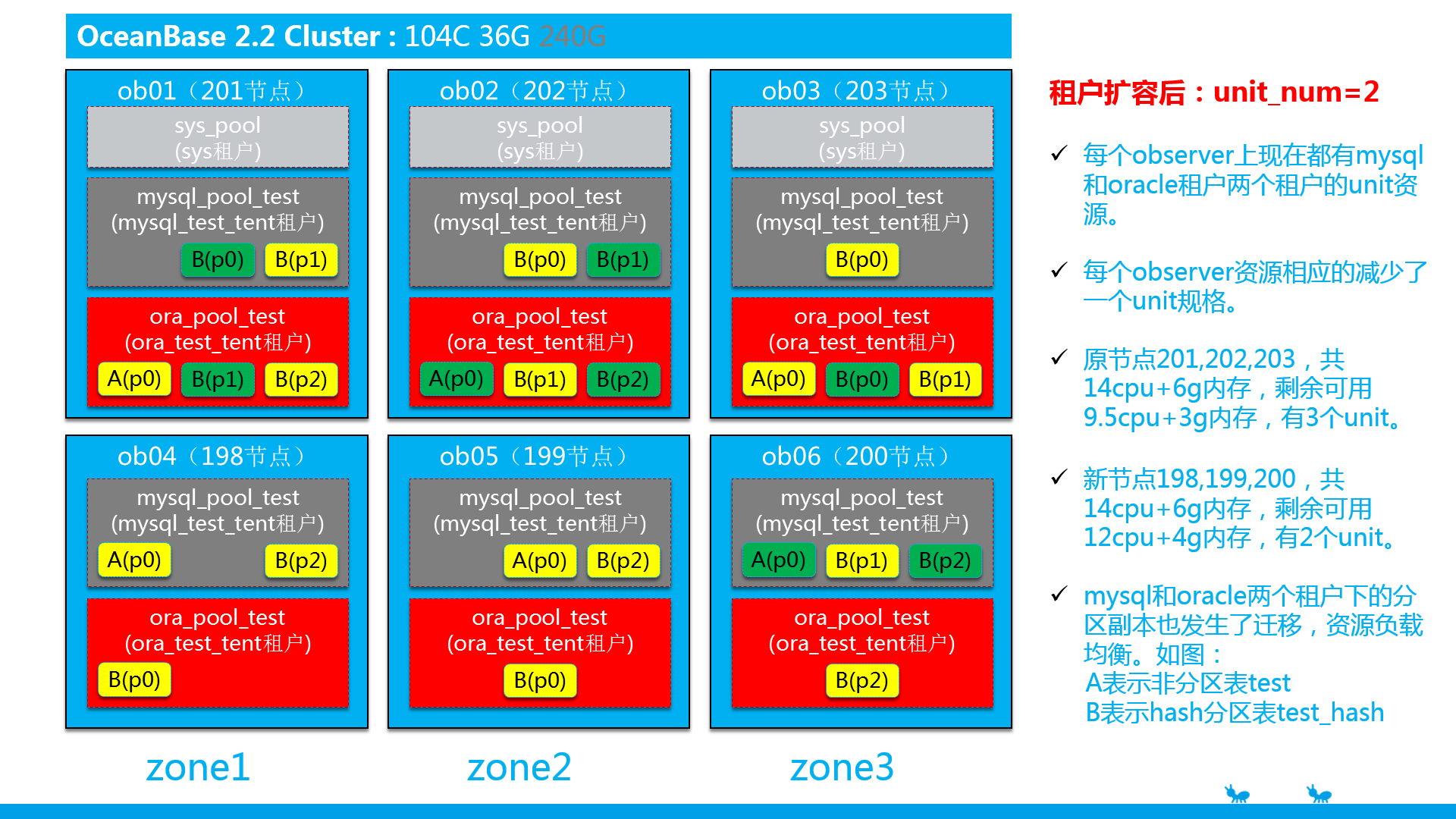

图一:集群扩容前:1-1-1架构

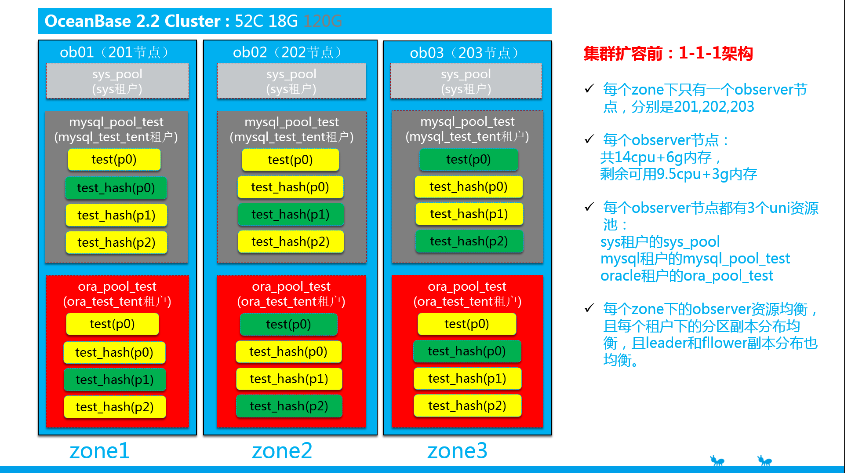

图二:集群扩容后:2-2-2架构

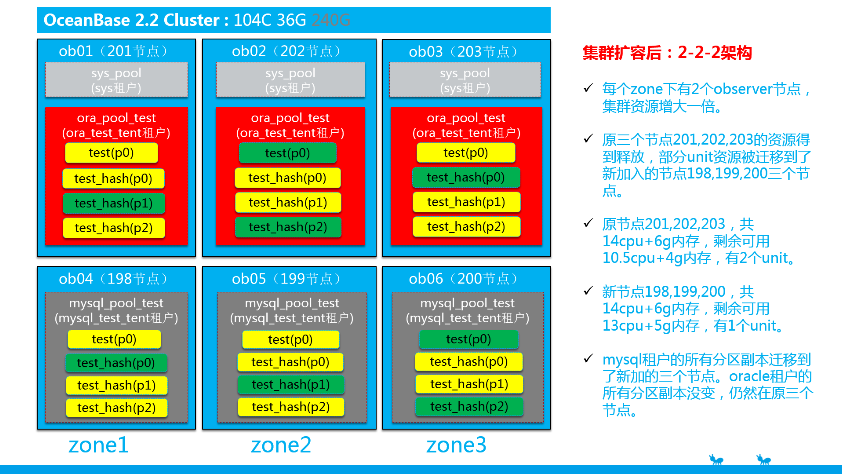

图三:租户扩容后:unit_num=2

以下是操作步骤:

1、搭建1-1-1架构的ob2.2集群

节点服务器配置4cpu+16g内存(这里由于服务器资源够,采用的是4c16g配置来安装,当然你也可以完全用2c8g的配置来安装。)

zone1 ob01: 192.168.26.201

zone2 ob02: 192.168.26.202

zone3 ob03: 192.168.26.203

启动observer进程时,指定的参数除了系统内部预留内存system_memory=10G,其它参数不变,参考上一篇文章。

安装完成后,查看集群的信息,如下:

$ obclient -h192.168.26.201 -P2883 -uroot@sys#obdemo -padmin123 -c -A oceanbase

obclient> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone | svr_ip | svr_port | inner_port | with_rootserver | status | gmt_create |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone1 | 192.168.26.201 | 2882 | 2881 | 1 | active | 2020-10-14 10:19:35.093668 |

| zone2 | 192.168.26.202 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:28.917915 |

| zone3 | 192.168.26.203 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:27.641625 |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

3 rows in set (0.01 sec)

obclient> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free,

-> round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb,

-> round(disk_total/1024/1024/1024) disk_total_gb,unit_num,

-> substr(a.build_version,1,6) version,usec_to_time(b.start_service_time) start_service_time,b.status

-> from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)

-> order by a.zone, a.svr_ip;

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | disk_total_gb | unit_num | version | start_service_time | status |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone1 | 192.168.26.201:2882 | 14 | 11.5 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 10:20:38.298274 | active |

| zone2 | 192.168.26.202:2882 | 14 | 11.5 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 10:20:40.019536 | active |

| zone3 | 192.168.26.203:2882 | 14 | 11.5 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 10:20:41.147584 | active |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

3 rows in set (0.01 sec)

obclient> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,

-> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,

-> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name

-> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)

-> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)

-> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)

-> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id

-> ;

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-------------+

| resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone | observer | tenant_id | tenant_name |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-------------+

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 1 | zone1 | 192.168.26.201:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 2 | zone2 | 192.168.26.202:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 3 | zone3 | 192.168.26.203:2882 | 1 | sys |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-------------+

3 rows in set (0.01 sec)

obclient> select unit_config_id,name,max_cpu,min_cpu,round(max_memory/1024/1024/1024) max_mem_gb,

-> round(min_memory/1024/1024/1024) min_mem_gb, round(max_disk_size/1024/1024/1024) max_disk_size_gb

-> from __all_unit_config

-> order by unit_config_id;

+----------------+-----------------+---------+---------+------------+------------+------------------+

| unit_config_id | name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | max_disk_size_gb |

+----------------+-----------------+---------+---------+------------+------------+------------------+

| 1 | sys_unit_config | 5 | 2.5 | 2 | 2 | 40 |

+----------------+-----------------+---------+---------+------------+------------+------------------+

1 row in set (0.03 sec)

obclient> select tenant_id, tenant_name, compatibility_mode,zone_list, locality ,primary_zone ,gmt_modified from __all_tenant;

+-----------+-------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| tenant_id | tenant_name | compatibility_mode | zone_list | locality | primary_zone | gmt_modified |

+-----------+-------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| 1 | sys | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | zone1;zone2,zone3 | 2020-10-14 10:20:36.979339 |

+-----------+-------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

1 row in set (0.00 sec)

可以看到,搭建1-1-1的ob集群,集群扩容前,只有201,202,203三个observer节点,每个zone下的observer节点资源使用情况是:

cpu总共14个,剩余可用11.5个,内存总共6g剩余可用5g,且每个zone下的observer只有一个sys系统租户的unit单元,大小规格是2.5~5cpu,2g内存。

2、创建mysql和oracle租户,并创建业务用户和测试表

创建mysql租户

$ obclient -h192.168.26.201 -P2883 -uroot@sys#obdemo -padmin123 -c -A oceanbase

obclient> create resource unit my_unit_1c1g max_cpu=1, min_cpu=1, max_memory='1g', min_memory='1g', max_iops=10000, min_iops=1000, max_session_num=1000000, max_disk_size='10g';

Query OK, 0 rows affected (0.18 sec)

obclient> select unit_config_id,name,max_cpu,min_cpu,round(max_memory/1024/1024/1024) max_mem_gb,

-> round(min_memory/1024/1024/1024) min_mem_gb, round(max_disk_size/1024/1024/1024) max_disk_size_gb

-> from __all_unit_config

-> order by unit_config_id;

+----------------+-----------------+---------+---------+------------+------------+------------------+

| unit_config_id | name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | max_disk_size_gb |

+----------------+-----------------+---------+---------+------------+------------+------------------+

| 1 | sys_unit_config | 5 | 2.5 | 2 | 2 | 40 |

| 1001 | my_unit_1c1g | 1 | 1 | 1 | 1 | 10 |

+----------------+-----------------+---------+---------+------------+------------+------------------+

2 rows in set (0.00 sec)

obclient> create resource pool mysql_pool_test unit = 'my_unit_1c1g', unit_num = 1;

Query OK, 0 rows affected (0.39 sec)

obclient> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,

-> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,

-> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name

-> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)

-> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)

-> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)

-> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id

-> ;

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-------------+

| resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone | observer | tenant_id | tenant_name |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-------------+

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 1 | zone1 | 192.168.26.201:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 2 | zone2 | 192.168.26.202:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 3 | zone3 | 192.168.26.203:2882 | 1 | sys |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1001 | zone1 | 192.168.26.201:2882 | NULL | NULL |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1002 | zone2 | 192.168.26.202:2882 | NULL | NULL |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1003 | zone3 | 192.168.26.203:2882 | NULL | NULL |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-------------+

6 rows in set (0.01 sec)

obclient> create tenant mysql_test_tent resource_pool_list=('mysql_pool_test'), primary_zone='RANDOM',comment 'mysql tenant/instance', charset='utf8' set ob_tcp_invited_nodes='%', ob_compatibility_mode='mysql';

Query OK, 0 rows affected (16.68 sec)

obclient> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,

-> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,

-> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name

-> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)

-> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)

-> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)

-> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id

-> ;

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone | observer | tenant_id | tenant_name |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 1 | zone1 | 192.168.26.201:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 2 | zone2 | 192.168.26.202:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 3 | zone3 | 192.168.26.203:2882 | 1 | sys |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1001 | zone1 | 192.168.26.201:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1002 | zone2 | 192.168.26.202:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1003 | zone3 | 192.168.26.203:2882 | 1001 | mysql_test_tent |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

6 rows in set (0.00 sec)

obclient> select tenant_id, tenant_name, compatibility_mode,zone_list, locality ,primary_zone ,gmt_modified from __all_tenant;

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| tenant_id | tenant_name | compatibility_mode | zone_list | locality | primary_zone | gmt_modified |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| 1 | sys | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | zone1;zone2,zone3 | 2020-10-14 10:20:36.979339 |

| 1001 | mysql_test_tent | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | RANDOM | 2020-10-14 10:43:31.034562 |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

2 rows in set (0.00 sec)

obclient> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone | svr_ip | svr_port | inner_port | with_rootserver | status | gmt_create |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone1 | 192.168.26.201 | 2882 | 2881 | 1 | active | 2020-10-14 10:19:35.093668 |

| zone2 | 192.168.26.202 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:28.917915 |

| zone3 | 192.168.26.203 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:27.641625 |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

3 rows in set (0.01 sec)

登录mysql租户,修改管理员密码,并创建业务数据库和业务用户:

$ obclient -h192.168.26.201 -P2883 -uroot@mysql_test_tent#obdemo -p -c -A oceanbase

obclient> alter user root identified by 'admin123';

Query OK, 0 rows affected (0.17 sec)

obclient> create database testdb;

Query OK, 1 row affected (0.30 sec)

obclient> grant all privileges on testdb.* to aps2@'%' identified by 'aps2#12345';

Query OK, 0 rows affected (0.27 sec)

obclient> show grants for aps2;

+----------------------------------------------+

| Grants for aps2@% |

+----------------------------------------------+

| GRANT USAGE ON *.* TO 'aps2' |

| GRANT ALL PRIVILEGES ON `testdb`.* TO 'aps2' |

+----------------------------------------------+

2 rows in set (0.02 sec)

obclient>

登录mysql租户的业务用户,创建测试表,并插入测试数据:

$ obclient -h192.168.26.201 -uaps2@mysql_test_tent#obdemo -P2883 -paps2#12345 -c -A testdb

创建一个非分区表test:

obclient> create table test(id int not null primary key,name varchar(20),age int,dt datetime);

Query OK, 0 rows affected (0.70 sec)

obclient> insert into test values(1,'ob2.2.50',20,now());

Query OK, 1 row affected (0.08 sec)

obclient> insert into test values(2,'张三',26,'1998-06-17');

Query OK, 1 row affected (0.02 sec)

obclient> insert into test values(3,'李四',32,'1989-10-13');

Query OK, 1 row affected (0.02 sec)

obclient> insert into test values(4,'Tom',30,'1990-02-21');

Query OK, 1 row affected (0.02 sec)

obclient> select * from test;

+----+------------+------+---------------------+

| id | name | age | dt |

+----+------------+------+---------------------+

| 1 | ob2.2-mini | 20 | 2020-10-14 10:47:08 |

| 2 | 张三 | 26 | 1998-06-17 00:00:00 |

| 3 | 李四 | 32 | 1989-10-13 00:00:00 |

| 4 | Tom | 30 | 1990-02-21 00:00:00 |

+----+------------+------+---------------------+

4 rows in set (0.25 sec)

obclient>

创建一个hash分区表test_hash:

obclient> create table test_hash(id int not null,name varchar(20) not null,

-> age int,dt datetime default now())

-> partition by hash(id)

-> partitions 3;

Query OK, 0 rows affected (0.54 sec)

obclient> insert into test_hash values(1,'张三',26,'1998-06-17');

Query OK, 1 row affected (0.09 sec)

obclient> insert into test_hash values(2,'李四',32,'1989-10-13');

Query OK, 1 row affected (0.05 sec)

obclient> insert into test_hash values(3,'Tom',30,'1990-02-21');

Query OK, 1 row affected (0.06 sec)

obclient> select * from test_hash;

+----+--------+------+---------------------+

| id | name | age | dt |

+----+--------+------+---------------------+

| 3 | Tom | 30 | 1990-02-21 00:00:00 |

| 2 | 李四 | 32 | 1989-10-13 00:00:00 |

| 1 | 张三 | 26 | 1998-06-17 00:00:00 |

+----+--------+------+---------------------+

3 rows in set (0.05 sec)

obclient>

创建oracle类型的租户:

$ obclient -h192.168.26.201 -P2883 -uroot@sys#obdemo -padmin123 -c -A oceanbase

obclient> create resource pool ora_pool_test unit = 'my_unit_1c1g', unit_num = 1;

Query OK, 0 rows affected (0.64 sec)

obclient> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,

-> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,

-> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name

-> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)

-> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)

-> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)

-> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id

-> ;

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone | observer | tenant_id | tenant_name |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 1 | zone1 | 192.168.26.201:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 2 | zone2 | 192.168.26.202:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 3 | zone3 | 192.168.26.203:2882 | 1 | sys |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1001 | zone1 | 192.168.26.201:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1002 | zone2 | 192.168.26.202:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1003 | zone3 | 192.168.26.203:2882 | 1001 | mysql_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1004 | zone1 | 192.168.26.201:2882 | NULL | NULL |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1005 | zone2 | 192.168.26.202:2882 | NULL | NULL |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1006 | zone3 | 192.168.26.203:2882 | NULL | NULL |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

9 rows in set (0.01 sec)

obclient> create tenant ora_test_tent resource_pool_list=('ora_pool_test'), primary_zone='RANDOM',comment 'oracle tenant/instance', charset='utf8' set ob_tcp_invited_nodes='%', ob_compatibility_mode='oracle';

Query OK, 0 rows affected (14.09 sec)

obclient> select tenant_id, tenant_name, compatibility_mode,zone_list, locality ,gmt_modified from __all_tenant;

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+----------------------------+

| tenant_id | tenant_name | compatibility_mode | zone_list | locality | gmt_modified |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+----------------------------+

| 1 | sys | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | 2020-10-14 10:20:36.979339 |

| 1001 | mysql_test_tent | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | 2020-10-14 10:43:31.034562 |

| 1002 | ora_test_tent | 1 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | 2020-10-14 10:50:51.345926 |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+----------------------------+

3 rows in set (0.00 sec)

登录oracle租户,修改sys管理员密码,并创建业务用户和测试表:

$ obclient -h192.168.26.201 -usys@ora_test_tent#obdemo -P2883 -p -c -A

obclient> alter user sys identified by admin123;

Query OK, 0 rows affected (0.06 sec)

obclient> exit

Bye

$ obclient -h192.168.26.201 -usys@ora_test_tent#obdemo -P2883 -padmin123 -c -A

obclient> create user aps2 identified by aps2#12345;

Query OK, 0 rows affected (0.23 sec)

obclient> grant all privileges on aps2.* to aps2 with grant option;

Query OK, 0 rows affected (0.10 sec)

obclient> show grants for aps2;

+--------------------------------------------------------------+

| Grants for APS2@% |

+--------------------------------------------------------------+

| GRANT USAGE ON *.* TO 'APS2' |

| GRANT ALL PRIVILEGES ON "APS2".* TO 'APS2' WITH GRANT OPTION |

+--------------------------------------------------------------+

2 rows in set (0.06 sec)

obclient> exit

Bye

$ obclient -h192.168.26.201 -uaps2@ora_test_tent#obdemo -P2883 -paps2#12345 -c -A

--创建一个非分区表test

obclient> create table test(id int not null,name varchar2(20) not null,age int,dt date not null,primary key(id));

Query OK, 0 rows affected (0.70 sec)

obclient> insert into test values(1,'ob2.2-oracle',20,sysdate);

Query OK, 1 row affected (0.15 sec)

obclient> insert into test values(2,'张三',26,to_date('1998-06-17','yyyy-mm-dd'));

Query OK, 1 row affected (0.03 sec)

obclient> insert into test values(3,'李四',32,to_date('1989-10-13','yyyy-mm-dd'));

Query OK, 1 row affected (0.02 sec)

obclient> commit;

Query OK, 0 rows affected (0.06 sec)

--创建一个hash分区表

obclient> create table test_hash(id int not null,name varchar2(20) not null,age int,dt date default sysdate) partition by hash(id) partitions 3;

Query OK, 0 rows affected (1.18 sec)

obclient> insert into test_hash values(1,'ob2.2-oracle',20,sysdate);

Query OK, 1 row affected (0.06 sec)

obclient> insert into test_hash values(2,'张三',26,to_date('1998-06-17','yyyy-mm-dd'));

Query OK, 1 row affected (0.24 sec)

obclient> insert into test_hash values(3,'李四',32,to_date('1989-10-13','yyyy-mm-dd'));

Query OK, 1 row affected (0.11 sec)

obclient> commit;

Query OK, 0 rows affected (0.12 sec)

obclient> select * from test_hash;

+----+--------------+------+---------------------+

| ID | NAME | AGE | DT |

+----+--------------+------+---------------------+

| 2 | 张三 | 26 | 1998-06-17 00:00:00 |

| 1 | ob2.2-oracle | 20 | 2020-10-14 10:58:06 |

| 3 | 李四 | 32 | 1989-10-13 00:00:00 |

+----+--------------+------+---------------------+

3 rows in set (0.01 sec)

obclient> exit

Bye

3、查看集群扩容前,资源使用情况和租户下分区副本所在节点情况:

$ obclient -h192.168.26.201 -P2883 -uroot@sys#obdemo -padmin123 -c -A oceanbase

扩容前查看集群资源使用情况:

obclient> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone | svr_ip | svr_port | inner_port | with_rootserver | status | gmt_create |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone1 | 192.168.26.201 | 2882 | 2881 | 1 | active | 2020-10-14 10:19:35.093668 |

| zone2 | 192.168.26.202 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:28.917915 |

| zone3 | 192.168.26.203 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:27.641625 |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

3 rows in set (0.01 sec)

obclient> select tenant_id, tenant_name, compatibility_mode,zone_list, locality ,primary_zone ,gmt_modified from __all_tenant;

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| tenant_id | tenant_name | compatibility_mode | zone_list | locality | primary_zone | gmt_modified |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| 1 | sys | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | zone1;zone2,zone3 | 2020-10-14 10:20:36.979339 |

| 1001 | mysql_test_tent | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | RANDOM | 2020-10-14 10:43:31.034562 |

| 1002 | ora_test_tent | 1 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | RANDOM | 2020-10-14 10:50:51.345926 |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

3 rows in set (0.00 sec)

obclient> select unit_config_id,name,max_cpu,min_cpu,round(max_memory/1024/1024/1024) max_mem_gb,

-> round(min_memory/1024/1024/1024) min_mem_gb, round(max_disk_size/1024/1024/1024) max_disk_size_gb

-> from __all_unit_config

-> order by unit_config_id;

+----------------+-----------------+---------+---------+------------+------------+------------------+

| unit_config_id | name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | max_disk_size_gb |

+----------------+-----------------+---------+---------+------------+------------+------------------+

| 1 | sys_unit_config | 5 | 2.5 | 2 | 2 | 40 |

| 1001 | my_unit_1c1g | 1 | 1 | 1 | 1 | 10 |

+----------------+-----------------+---------+---------+------------+------------+------------------+

2 rows in set (0.00 sec)

obclient> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,

-> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,

-> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name

-> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)

-> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)

-> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)

-> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id

-> ;

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone | observer | tenant_id | tenant_name |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 1 | zone1 | 192.168.26.201:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 2 | zone2 | 192.168.26.202:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 3 | zone3 | 192.168.26.203:2882 | 1 | sys |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1001 | zone1 | 192.168.26.201:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1002 | zone2 | 192.168.26.202:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1003 | zone3 | 192.168.26.203:2882 | 1001 | mysql_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1004 | zone1 | 192.168.26.201:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1005 | zone2 | 192.168.26.202:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1006 | zone3 | 192.168.26.203:2882 | 1002 | ora_test_tent |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

9 rows in set (0.00 sec)

obclient> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free,

-> round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb,

-> round(disk_total/1024/1024/1024) disk_total_gb,unit_num,

-> substr(a.build_version,1,6) version,usec_to_time(b.start_service_time) start_service_time,b.status

-> from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)

-> order by a.zone, a.svr_ip;

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | disk_total_gb | unit_num | version | start_service_time | status |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone1 | 192.168.26.201:2882 | 14 | 9.5 | 6 | 3 | 40 | 3 | 2.2.50 | 2020-10-14 10:20:38.298274 | active |

| zone2 | 192.168.26.202:2882 | 14 | 9.5 | 6 | 3 | 40 | 3 | 2.2.50 | 2020-10-14 10:20:40.019536 | active |

| zone3 | 192.168.26.203:2882 | 14 | 9.5 | 6 | 3 | 40 | 3 | 2.2.50 | 2020-10-14 10:20:41.147584 | active |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

3 rows in set (0.00 sec)

obclient>

查看mysql租户下的分区副本所在节点分布情况:

obclient> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id =1001

-> order by t1.tenant_id,t3.tablegroup_id, t3.table_name,t4.partition_Id,t4.role ;

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone3 | 192.168.26.203 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone2 | 192.168.26.202 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone1 | 192.168.26.201 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone2 | 192.168.26.202 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone3 | 192.168.26.203 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone2 | 192.168.26.202 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone1 | 192.168.26.201 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone3 | 192.168.26.203 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone3 | 192.168.26.203 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone1 | 192.168.26.201 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone2 | 192.168.26.202 | 2 | 0 |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

12 rows in set (0.12 sec)

查看oracle租户下的分区副本所在节点分布情况:

obclient> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id =1002

-> order by t1.tenant_id,t3.tablegroup_id, t3.table_name,t4.partition_Id,t4.role ;

+-----------+---------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+---------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone3 | 192.168.26.203 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone2 | 192.168.26.202 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone1 | 192.168.26.201 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone2 | 192.168.26.202 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone3 | 192.168.26.203 | 2 | 0 |

+-----------+---------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

12 rows in set (0.06 sec)

以上可以看到,创建两个租户后,ob集群资源使用情况是:

三个zone下,每个zone下只有一个observer节点,分别是201,202,203,每个observer节点总共14个cpu+6g内存,剩余可用9.5cpu+3g内存,有3个租户的unit资源。

其中:

mysl类型的sys系统租户资源占用2.5cpu+2g内存,所在节点201,202,203,每个节点剩余可用9.5cpu+3g内存。

mysql类型的业务租户 mysql_test_tent 资源占用1cpu1g内存,所在节点201,202,203,每个节点剩余可用9.5cpu+3g内存。

oracle类型的业务租户 ora_test_tent 资源占用1cpu1g内存,所在节点201,202,203,每个节点剩余可用9.5cpu+3g内存。

两个租户下表分区副本分布情况:(扩容前)

(1).mysql_test_tent租户下testdb库

非分区表test的p0号分区,主副本在zone3下的203节点,两个备副本在zone1下的201节点和zone2下的202节点。

分区表test_hash的p0分区的主副本在zone1下的201节点,p0分区的两个备副本在zone2下的202节点和zone3下的203节点。

分区表test_hash的p1分区的主副本在zone2下的202节点,p1分区的两个备副本在zone1下的201节点和zone3下的203节点。

分区表test_hash的p2分区的主副本在zone3下的203节点,p2分区的两个备副本在zone1下的201节点和zone2下的202节点。

(1).ora_test_tent租户下aps2用户

非分区表test的p0号分区,主副本在zone2下的202节点,两个备副本在zone1下的201节点和zone3下的203节点。

分区表test_hash的p0分区的主副本在zone3下的203节点,p0分区的两个备副本在zone1下的201节点和zone2下的202节点。

分区表test_hash的p1分区的主副本在zone1下的201节点,p1分区的两个备副本在zone2下的202节点和zone3下的203节点。

分区表test_hash的p2分区的主副本在zone2下的202节点,p2分区的两个备副本在zone1下的201节点和zone3下的203节点。

以图的形式展示集群扩容前的状态,如图一所示。由此可以看出,observer上分区主备副本分布比较均匀。

4、集群扩容:

现需要添加3个节点,将1-1-1架构的ob2.2集群扩容到2-2-2架构。

新加的节点服务器配置,跟之前一样4cpu+16g内存

zone1 ob04: 192.168.26.198

zone2 ob05: 192.168.26.199

zone3 ob06: 192.168.26.200

新节点的安装环境准备,以及OB软件安装,observer进程的启动等,这里忽略,参考之前的文章。

添加新节点:

$ obclient -h192.168.26.201 -P2883 -uroot@sys#obdemo -padmin123 -c -A oceanbase

obclient> alter system add server '192.168.26.198:2882' zone 'zone1';

Query OK, 0 rows affected (0.18 sec)

obclient> alter system add server '192.168.26.199:2882' zone 'zone2';

Query OK, 0 rows affected (0.27 sec)

obclient> alter system add server '192.168.26.200:2882' zone 'zone3';

Query OK, 0 rows affected (0.35 sec)

obclient>

节点添加完成后,查看OB集群所有节点信息:

obclient> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone | svr_ip | svr_port | inner_port | with_rootserver | status | gmt_create |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone1 | 192.168.26.198 | 2882 | 2881 | 0 | active | 2020-10-14 11:12:31.240273 |

| zone1 | 192.168.26.201 | 2882 | 2881 | 1 | active | 2020-10-14 10:19:35.093668 |

| zone2 | 192.168.26.199 | 2882 | 2881 | 0 | active | 2020-10-14 11:12:37.089939 |

| zone2 | 192.168.26.202 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:28.917915 |

| zone3 | 192.168.26.200 | 2882 | 2881 | 0 | active | 2020-10-14 11:12:41.474299 |

| zone3 | 192.168.26.203 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:27.641625 |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

6 rows in set (0.00 sec)

可以看到,新加的198,199,200三个observer节点已经加入集群,并且状态是active活动状态。

此时,新节点加入,由于新节点资源负载为0,直接导致OB集群每个zone内各个observer的资源分布不均衡。由于ob自身带集群的负载均衡策略,会根据每个zone的各个observer资源的状况,动态重新调整各个observer上的资源分布,从而达到每个observer的资源负载均衡,这个过程对业务是完全透明的。

当然,平衡observer资源的过程,其实就是分区副本迁移的过程,此过程会耗费点时间根据副本迁移数据量而定,分区副本的迁移,实质是通过分区拷贝复制到新observer,等拷贝复制完成后,再删除原observer上的分区副本,副本迁移过程中业务访问仍然是访问原来分区主副本所在节点,对应用业务是完全透明的,不影响业务的使用。

另外,集群的负载均衡除了体现在资源的负载均衡外,还体现在业务的负载均衡。业务读写默认只访问主副本所在的节点,ob集群内部会根据每个observer的应用读写负载情况,对各个分区的leader和fllower进行自动重新选主,也就是leader主副本切换,从而达到每个observer的应用负载均衡。

分区副本迁移重新选主的过程,可以通过视图__all_rootservice_event_history查看集群event事件信息。

如:

SELECT *

FROM __all_rootservice_event_history

WHERE 1 = 1

and gmt_create> date_format('2020-10-14 11:12:00', '%Y%m%d%H%i%s')

ORDER BY gmt_create

LIMIT 50;

+----------------+--------------------+-----------------------------+------------+------------------------------------------------------------------------+---------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+----------------+

| gmt_create_ | module | event | name1 | value1 | name2 | value2 | rs_svr_ip |

+----------------+--------------------+-----------------------------+------------+------------------------------------------------------------------------+---------------------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+----------------+

| Oct14 11:12:37 | server | add_server | server | "192.168.26.199:2882" | | | 192.168.26.201 |

| Oct14 11:12:37 | root_service | add_server | ret | 0 | arg | {servers:["192.168.26.199:2882"], zone:"zone2"} | 192.168.26.201 |

| Oct14 11:12:37 | root_service | admin_set_config | ret | 0 | arg | {items:[{name:"all_server_list", value:"192.168.26.201:2882,192.168.26.202:2882,192.168.26.203:2882,192.168.26.198:2882,192.168.26.199:2882", comment:"", zone:"", server:"0.0.0.0", tenant_name:"", exec_tenant_id:1, tenant_ids:[]}]} | 192.168.26.201 |

| Oct14 11:12:37 | server | online | server | "192.168.26.199:2882" | | | 192.168.26.201 |

| Oct14 11:12:41 | server | add_server | server | "192.168.26.200:2882" | | | 192.168.26.201 |

| Oct14 11:12:41 | root_service | add_server | ret | 0 | arg | {servers:["192.168.26.200:2882"], zone:"zone3"} | 192.168.26.201 |

| Oct14 11:12:41 | unit | migrate_unit | unit_id | 1001 | migrate_from_server | "192.168.26.201:2882" | 192.168.26.201 |

| Oct14 11:12:41 | root_service | admin_set_config | ret | 0 | arg | {items:[{name:"all_server_list", value:"192.168.26.201:2882,192.168.26.202:2882,192.168.26.203:2882,192.168.26.198:2882,192.168.26.199:2882,192.168.26.200:2882", comment:"", zone:"", server:"0.0.0.0", tenant_name:"", exec_tenant_id:1, tenant_ids:[]}]} | 192.168.26.201 |

| Oct14 11:12:44 | server | online | server | "192.168.26.200:2882" | | | 192.168.26.201 |

| Oct14 11:12:53 | server | set_with_partition | server | "192.168.26.198:2882" | | | 192.168.26.201 |

| Oct14 11:12:56 | balancer | tenant_balance_started | start_time | 1602645171716008 | | | 192.168.26.201 |

| Oct14 11:13:09 | balancer | start_batch_migrate_replica | tenant_id | 1001 | destiontion | "192.168.26.198:2882" | 192.168.26.201 |

| Oct14 11:13:09 | balancer | start_migrate_replica | partition | {pkey:{tid:1100611139403779, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.201:2882", timestamp:1602643395447764, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |

| Oct14 11:13:09 | balancer | start_migrate_replica | partition | {pkey:{tid:1100611139403780, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.201:2882", timestamp:1602643395448183, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |

| Oct14 11:13:09 | balancer | start_migrate_replica | partition | {pkey:{tid:1100611139403781, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.201:2882", timestamp:1602643395448384, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |

| Oct14 11:13:09 | balancer | start_migrate_replica | partition | {pkey:{tid:1100611139403878, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.201:2882", timestamp:1602643395448836, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |

...........

...........

...........

...........

| Oct14 11:22:03 | balancer | finish_migrate_replica | partition | {pkey:{tid:1100611139404044, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.203:2882", timestamp:1602643395480649, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |

| Oct14 11:22:03 | balancer | finish_migrate_replica | partition | {pkey:{tid:1100611139404046, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.203:2882", timestamp:1602643395481042, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |

| Oct14 11:22:03 | balancer | finish_migrate_replica | partition | {pkey:{tid:1100611139404052, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.203:2882", timestamp:1602643395481317, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |

| Oct14 11:22:03 | unit | finish_migrate_unit | unit_id | 1003 | end_op | 0 | 192.168.26.201 |

| Oct14 11:22:05 | balancer | tenant_balance_finished | finish_time | 1602645725677943 | task_count | 320 | 192.168.26.201 |

| Oct14 11:22:13 | leader_coordinator | switch_leader | current_rs | "192.168.26.201:2882" | tenant_id | 1001 | 192.168.26.201 |

| Oct14 11:22:13 | leader_coordinator | switch_leader | current_rs | "192.168.26.201:2882" | tenant_id | 1001 | 192.168.26.201 |

等待资源平衡分区副本迁移完成后,查看资源使用情况:

obclient> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free,

-> round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb,

-> round(disk_total/1024/1024/1024) disk_total_gb,unit_num,

-> substr(a.build_version,1,6) version,usec_to_time(b.start_service_time) start_service_time,b.status

-> from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)

-> order by a.zone, a.svr_ip;

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | disk_total_gb | unit_num | version | start_service_time | status |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone1 | 192.168.26.198:2882 | 14 | 13 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 11:12:38.283882 | active |

| zone1 | 192.168.26.201:2882 | 14 | 10.5 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 10:20:38.298274 | active |

| zone2 | 192.168.26.199:2882 | 14 | 13 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 11:12:48.894618 | active |

| zone2 | 192.168.26.202:2882 | 14 | 10.5 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 10:20:40.019536 | active |

| zone3 | 192.168.26.200:2882 | 14 | 13 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 11:12:55.862304 | active |

| zone3 | 192.168.26.203:2882 | 14 | 10.5 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 10:20:41.147584 | active |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

6 rows in set (0.00 sec)

obclient>

查看mysql租户下的分区副本所在节点分布情况:

obclient> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id =1001

-> order by t1.tenant_id,t3.tablegroup_id, t3.table_name,t4.partition_Id,t4.role ;

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone3 | 192.168.26.200 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone2 | 192.168.26.199 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone1 | 192.168.26.198 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone2 | 192.168.26.199 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone3 | 192.168.26.200 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone2 | 192.168.26.199 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone3 | 192.168.26.200 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone3 | 192.168.26.200 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone2 | 192.168.26.199 | 2 | 0 |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

12 rows in set (0.36 sec)

查看oracle租户下的分区副本所在节点分布情况:

obclient> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id =1002

-> order by t1.tenant_id,t3.tablegroup_id, t3.table_name,t4.partition_Id,t4.role ;

+-----------+---------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+---------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone3 | 192.168.26.203 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone2 | 192.168.26.202 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone1 | 192.168.26.201 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone2 | 192.168.26.202 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone3 | 192.168.26.203 | 2 | 0 |

+-----------+---------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

12 rows in set (0.05 sec)

obclient>

以上可以看到,集群扩容前后ob集群资源变化情况:

集群扩容前,原三个节点201,202,203,每个节点资源总共14cpu+6g内存,剩余还可用9.5cpu, 3g内存,有3个unit,其中系统sys租户占用2.5cpu+2g内存,两个业务租户mysql_test_tent和ora_test_tent各占用1cpu+1g内存。

集群扩容后,原三个节点201,202,203的资源得到释放,部分unit资源被迁移到了新加入的节点198,199,200三个节点。

集群扩容后,最终资源大概可用情况:

原节点201,202,203,总共14个cpu+6g内存,剩余可用10.5cpu+4g内存,有2个unit资源(一个sys租户资源和一个mysql租户资源)。

新节点198,199,200,总共14个cpu+6g内存,剩余可用13cpu+5g内存,有1个unit资源(一个oracle租户资源)。

增加节点集群扩容后,直接导致了资源Unit迁移,伴随着migrate_replica分区副本迁移和tenant_balance平衡租户资源以及switch_leader切主等操作,这是OceanBase集群负载均衡的体现。

当新的observer节点加入后,由于新节点资源为0,整个OB集群每个zone下的各个observer节点资源使用就不均衡,OceanBase通过副本迁移和leader切换来实现了资源的负载均衡和应用读写的负载均衡,此过程对应用完全透明,不影响业务使用。

补充:资源Unit迁移的步骤是在目标observer节点内部先创建Unit,然后再复制 Unit内部的Partition,最后做副本的角色切换(leader跟follower的切换),最后下线多余的Partition和Unit。

两个租户下表分区副本分布情况:(集群扩容后)

(1).mysql_test_tent租户下testdb库,发生了unit迁移,mysql租户的所有分区副本迁移到了新加入的198,199,200三个节点。

非分区表test的p0号分区,主副本从原来的zone3下的203节点,迁移到了zone3下的200节点;两个备副本也从原来的zone1下的201节点和zone2下的202节点,转移到了zone1下的198节点和zone2下的199节点。

分区表test_hash的p0分区的主副本从原来的zone1下的201节点,迁移到了zone1下的198节点;p0分区的两个备副本也从原来的zone2下的202节点和zone3下的203节点,迁移到了zone2下的199节点和zone3下的200节点。

分区表test_hash的p1分区的主副本从原来的zone2下的202节点,迁移到了zone2下的199节点;p1分区的两个备副本也从原来的zone1下的201节点和zone3下的203节点,迁移到了zone1下的198节点和zone3下的200节点。

分区表test_hash的p2分区的主副本从原来的zone3下的203节点,迁移到了zone3下的200节点;p2分区的两个备副本也从原来的zone1下的201节点和zone2下的202节点,迁移到了zone1下的198节点和zone2下的199节点。

(2).ora_test_tent租户下aps2用户,没有发生unit迁移,该租户的所有分区副本没变

非分区表test的p0号分区,主副本在zone2下的202节点,两个备副本在zone1下的201节点和zone3下的203节点。

分区表test_hash的p0分区的主副本在zone3下的203节点,p0分区的两个备副本在zone1下的201节点和zone2下的202节点。

分区表test_hash的p1分区的主副本在zone1下的201节点,p1分区的两个备副本在zone2下的202节点和zone3下的203节点。

分区表test_hash的p2分区的主副本在zone2下的202节点,p2分区的两个备副本在zone1下的201节点和zone3下的203节点。

以图的形式展示集群扩容后的状态,如图二所示。由此可以看出,集群扩容后,每个zone下的observer资源分布比较均匀。

5、租户扩容

租户扩容有两个思路,一是提升资源单元规格,二是增加资源单元数量。

扩容前查看系统资源和租户下数据主备副本分布情况

obclient>

obclient> select zone,svr_ip,svr_port,inner_port,with_rootserver,status,gmt_create from __all_server order by zone, svr_ip;

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone | svr_ip | svr_port | inner_port | with_rootserver | status | gmt_create |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

| zone1 | 192.168.26.198 | 2882 | 2881 | 0 | active | 2020-10-14 11:12:31.240273 |

| zone1 | 192.168.26.201 | 2882 | 2881 | 1 | active | 2020-10-14 10:19:35.093668 |

| zone2 | 192.168.26.199 | 2882 | 2881 | 0 | active | 2020-10-14 11:12:37.089939 |

| zone2 | 192.168.26.202 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:28.917915 |

| zone3 | 192.168.26.200 | 2882 | 2881 | 0 | active | 2020-10-14 11:12:41.474299 |

| zone3 | 192.168.26.203 | 2882 | 2881 | 0 | active | 2020-10-14 10:19:27.641625 |

+-------+----------------+----------+------------+-----------------+--------+----------------------------+

6 rows in set (0.01 sec)

obclient> select tenant_id, tenant_name, compatibility_mode,zone_list, locality ,primary_zone ,gmt_modified from __all_tenant;

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| tenant_id | tenant_name | compatibility_mode | zone_list | locality | primary_zone | gmt_modified |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

| 1 | sys | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | zone1;zone2,zone3 | 2020-10-14 10:20:36.979339 |

| 1001 | mysql_test_tent | 0 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | RANDOM | 2020-10-14 10:43:31.034562 |

| 1002 | ora_test_tent | 1 | zone1;zone2;zone3 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | RANDOM | 2020-10-14 10:50:51.345926 |

+-----------+-----------------+--------------------+-------------------+---------------------------------------------+-------------------+----------------------------+

3 rows in set (0.00 sec)

obclient> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free,

-> round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb,

-> round(disk_total/1024/1024/1024) disk_total_gb,unit_num,

-> substr(a.build_version,1,6) version,usec_to_time(b.start_service_time) start_service_time,b.status

-> from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)

-> order by a.zone, a.svr_ip;

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | disk_total_gb | unit_num | version | start_service_time | status |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone1 | 192.168.26.198:2882 | 14 | 13 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 11:12:38.283882 | active |

| zone1 | 192.168.26.201:2882 | 14 | 10.5 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 10:20:38.298274 | active |

| zone2 | 192.168.26.199:2882 | 14 | 13 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 11:12:48.894618 | active |

| zone2 | 192.168.26.202:2882 | 14 | 10.5 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 10:20:40.019536 | active |

| zone3 | 192.168.26.200:2882 | 14 | 13 | 6 | 5 | 40 | 1 | 2.2.50 | 2020-10-14 11:12:55.862304 | active |

| zone3 | 192.168.26.203:2882 | 14 | 10.5 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 10:20:41.147584 | active |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

6 rows in set (0.00 sec)

obclient> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,

-> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,

-> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name

-> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)

-> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)

-> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)

-> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id

-> ;

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone | observer | tenant_id | tenant_name |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 1 | zone1 | 192.168.26.201:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 2 | zone2 | 192.168.26.202:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 3 | zone3 | 192.168.26.203:2882 | 1 | sys |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1001 | zone1 | 192.168.26.198:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1002 | zone2 | 192.168.26.199:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1003 | zone3 | 192.168.26.200:2882 | 1001 | mysql_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1004 | zone1 | 192.168.26.201:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1005 | zone2 | 192.168.26.202:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1006 | zone3 | 192.168.26.203:2882 | 1002 | ora_test_tent |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

9 rows in set (0.01 sec)

obclient> select unit_config_id,name,max_cpu,min_cpu,round(max_memory/1024/1024/1024) max_mem_gb,

-> round(min_memory/1024/1024/1024) min_mem_gb, round(max_disk_size/1024/1024/1024) max_disk_size_gb

-> from __all_unit_config

-> order by unit_config_id;

+----------------+-----------------+---------+---------+------------+------------+------------------+

| unit_config_id | name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | max_disk_size_gb |

+----------------+-----------------+---------+---------+------------+------------+------------------+

| 1 | sys_unit_config | 5 | 2.5 | 2 | 2 | 40 |

| 1001 | my_unit_1c1g | 1 | 1 | 1 | 1 | 10 |

+----------------+-----------------+---------+---------+------------+------------+------------------+

2 rows in set (0.00 sec)

obclient> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id >1000

-> order by t1.tenant_id,t3.tablegroup_id, t3.table_name,t4.partition_Id ,t4.role;

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone3 | 192.168.26.200 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone2 | 192.168.26.199 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone1 | 192.168.26.198 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone2 | 192.168.26.199 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone3 | 192.168.26.200 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone2 | 192.168.26.199 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone3 | 192.168.26.200 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone3 | 192.168.26.200 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone2 | 192.168.26.199 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone3 | 192.168.26.203 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone2 | 192.168.26.202 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone1 | 192.168.26.201 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone2 | 192.168.26.202 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone3 | 192.168.26.203 | 2 | 0 |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

24 rows in set (0.38 sec)

obclient>

这里演示增加unit数量

obclient> alter resource pool mysql_pool_test unit_num = 2;

Query OK, 0 rows affected (0.35 sec)

obclient> alter resource pool ora_pool_test unit_num = 2;

Query OK, 0 rows affected (0.76 sec)

obclient>

租户扩容,unit_num=2,表示每个zone下有两个unit资源。由于集群是2-2-2架构,每个zone下只有2台observer,因此,每个zone下的每个observer都会有1个mysql租户和oracle租户的unit资源。

由于集群负载均衡策略,会导致副本迁移和leader重新选主。

待资源平衡分区副本迁移选主完成后,查看每个节点资源使用情况:

obclient> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free,

-> round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb,

-> round(disk_total/1024/1024/1024) disk_total_gb,unit_num,

-> substr(a.build_version,1,6) version,usec_to_time(b.start_service_time) start_service_time,b.status

-> from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)

-> order by a.zone, a.svr_ip;

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | disk_total_gb | unit_num | version | start_service_time | status |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

| zone1 | 192.168.26.198:2882 | 14 | 12 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 11:12:38.283882 | active |

| zone1 | 192.168.26.201:2882 | 14 | 9.5 | 6 | 3 | 40 | 3 | 2.2.50 | 2020-10-14 10:20:38.298274 | active |

| zone2 | 192.168.26.199:2882 | 14 | 12 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 11:12:48.894618 | active |

| zone2 | 192.168.26.202:2882 | 14 | 9.5 | 6 | 3 | 40 | 3 | 2.2.50 | 2020-10-14 10:20:40.019536 | active |

| zone3 | 192.168.26.200:2882 | 14 | 12 | 6 | 4 | 40 | 2 | 2.2.50 | 2020-10-14 11:12:55.862304 | active |

| zone3 | 192.168.26.203:2882 | 14 | 9.5 | 6 | 3 | 40 | 3 | 2.2.50 | 2020-10-14 10:20:41.147584 | active |

+-------+---------------------+-----------+----------+--------------+-------------+---------------+----------+---------+----------------------------+--------+

6 rows in set (0.09 sec)

查看资源分布情况:

obclient> select t1.name resource_pool_name, t2.`name` unit_config_name, t2.max_cpu, t2.min_cpu,

-> round(t2.max_memory/1024/1024/1024) max_mem_gb, round(t2.min_memory/1024/1024/1024) min_mem_gb,

-> t3.unit_id, t3.zone, concat(t3.svr_ip,':',t3.`svr_port`) observer,t4.tenant_id, t4.tenant_name

-> from __all_resource_pool t1 join __all_unit_config t2 on (t1.unit_config_id=t2.unit_config_id)

-> join __all_unit t3 on (t1.`resource_pool_id` = t3.`resource_pool_id`)

-> left join __all_tenant t4 on (t1.tenant_id=t4.tenant_id)

-> order by t1.`resource_pool_id`, t2.`unit_config_id`, t3.unit_id

-> ;

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| resource_pool_name | unit_config_name | max_cpu | min_cpu | max_mem_gb | min_mem_gb | unit_id | zone | observer | tenant_id | tenant_name |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 1 | zone1 | 192.168.26.201:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 2 | zone2 | 192.168.26.202:2882 | 1 | sys |

| sys_pool | sys_unit_config | 5 | 2.5 | 2 | 2 | 3 | zone3 | 192.168.26.203:2882 | 1 | sys |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1001 | zone1 | 192.168.26.198:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1002 | zone2 | 192.168.26.199:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1003 | zone3 | 192.168.26.200:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1007 | zone1 | 192.168.26.201:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1008 | zone2 | 192.168.26.202:2882 | 1001 | mysql_test_tent |

| mysql_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1009 | zone3 | 192.168.26.203:2882 | 1001 | mysql_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1004 | zone1 | 192.168.26.201:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1005 | zone2 | 192.168.26.202:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1006 | zone3 | 192.168.26.203:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1010 | zone1 | 192.168.26.198:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1011 | zone2 | 192.168.26.199:2882 | 1002 | ora_test_tent |

| ora_pool_test | my_unit_1c1g | 1 | 1 | 1 | 1 | 1012 | zone3 | 192.168.26.200:2882 | 1002 | ora_test_tent |

+--------------------+------------------+---------+---------+------------+------------+---------+-------+---------------------+-----------+-----------------+

15 rows in set (0.02 sec)

obclient>

查看mysql和oracle租户下的分区副本所在节点分布情况:

obclient> SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,

-> t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb

-> from `gv$tenant` t1

-> join `gv$database` t2 on (t1.tenant_id = t2.tenant_id)

-> join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0)

-> left join `gv$partition` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1,2))

-> where t1.tenant_id >1000

-> order by t1.tenant_id,t3.tablegroup_id, t3.table_name,t4.partition_Id ,t4.role;

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| tenant_id | tenant_name | database_name | table_id | table_Name | tablegroup_id | part_num | partition_Id | zone | svr_ip | role | data_size_mb |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone3 | 192.168.26.200 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone2 | 192.168.26.199 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453777 | test | -1 | 1 | 0 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone1 | 192.168.26.201 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone2 | 192.168.26.202 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 0 | zone3 | 192.168.26.203 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone2 | 192.168.26.202 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone3 | 192.168.26.200 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 1 | zone1 | 192.168.26.201 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone3 | 192.168.26.200 | 1 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone1 | 192.168.26.198 | 2 | 0 |

| 1001 | mysql_test_tent | testdb | 1100611139453778 | test_hash | -1 | 3 | 2 | zone2 | 192.168.26.199 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone1 | 192.168.26.201 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081553 | TEST | -1 | 1 | 0 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone3 | 192.168.26.203 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone1 | 192.168.26.198 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 0 | zone2 | 192.168.26.199 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone1 | 192.168.26.201 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone2 | 192.168.26.202 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 1 | zone3 | 192.168.26.203 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone2 | 192.168.26.202 | 1 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone3 | 192.168.26.200 | 2 | 0 |

| 1002 | ora_test_tent | APS2 | 1101710651081554 | TEST_HASH | -1 | 3 | 2 | zone1 | 192.168.26.201 | 2 | 0 |

+-----------+-----------------+---------------+------------------+------------+---------------+----------+--------------+-------+----------------+------+--------------+

24 rows in set (0.17 sec)

obclient>

从以上结果可以看出,由于unit资源单元大小为1cpu+1g内存,租户扩容前,每个zone下的observer节点都可以再分配一个此大小的unit单元资源。

当unit_num修改为2个后,增加Unit数量也触发了ob集群的负载均衡逻辑,整个OB集群下observer资源分布再次发生了改变。

租户扩容后(unit_num增加),直接导致了每个资源unit里的分区发生了unit迁移,并通过改变每个分区leader副本在不同unit之间的分布,从而到达集群的负载均衡。

最终租户扩容后,

原节点201、202、203,总共14个cpu+6g内存,剩余可用9.5cpu+3g内存,有3个unit资源(一个sys租户资源、一个mysql租户资源和一个oracle租户资源)。

新节点198、199、200,总共14个cpu+6g内存,剩余可用12cpu+4g内存,有2个unit资源(一个mysql租户资源和一个oracle租户资源)。

两个租户下表分区副本分布情况:(租户扩容后)

(1).mysql_test_tent租户下testdb库

非分区表test的p0号分区的主副本在zone3下的200节点;两个备副本在zone1下的198节点和zone2下的199节点。

分区表test_hash的p0分区的主副本在zone1下的201节点;p0分区的两个备副本在zone2下的202节点和zone3下的203节点。

分区表test_hash的p1分区的主副本在zone2下的202节点;p1分区的两个备副本在zone1下的201节点和zone3下的200节点。

分区表test_hash的p2分区的主副本在zone3下的200节点;p2分区的两个备副本在zone1下的198节点和zone2下的199节点。

(2).ora_test_tent租户下aps2用户

非分区表test的p0号分区,主副本在zone2下的202节点,两个备副本在zone1下的201节点和zone3下的203节点。

分区表test_hash的p0分区的主副本在zone3下的203节点,p0分区的两个备副本在zone1下的198节点和zone2下的199节点。

分区表test_hash的p1分区的主副本在zone1下的201节点,p1分区的两个备副本在zone2下的202节点和zone3下的203节点。

分区表test_hash的p2分区的主副本在zone2下的202节点,p2分区的两个备副本在zone1下的201节点和zone3下的200节点。

以图的形式展示租户扩容后的状态,如图三所示。

查看集群rootserver事件,了解租户扩容过程,如下:

obclient> SELECT *

-> FROM __all_rootservice_event_history

-> WHERE 1 = 1

-> and gmt_create> date_format('2020-10-14 11:30:00', '%Y%m%d%H%i%s')

-> ORDER BY gmt_create DESC

-> LIMIT 50;

+----------------+--------------------+-------------------------+-------------+------------------------------------------------------------------------+------------+-----------------------------------------------------------------------------------------------------------------------------------+----------------+

| gmt_create_ | module | event | name1 | value1 | name2 | value2 | rs_svr_ip |

+----------------+--------------------+-------------------------+-------------+------------------------------------------------------------------------+------------+-----------------------------------------------------------------------------------------------------------------------------------+----------------+

| Oct14 12:03:19 | leader_coordinator | switch_leader | current_rs | "192.168.26.201:2882" | tenant_id | 1001 | 192.168.26.201 |

| Oct14 12:03:08 | balancer | tenant_balance_finished | finish_time | 1602648188797431 | task_count | 8 | 192.168.26.201 |

| Oct14 12:02:58 | balancer | finish_migrate_replica | partition | {pkey:{tid:1100611139453778, partition_id:0, part_cnt:0}, data_size:0} | source | {server:"192.168.26.200:2882", timestamp:1602645547542750, flag:0, replica_type:0, region:"default_region", memstore_percent:100} | 192.168.26.201 |