一. 背景介绍

1.1 国际背景

2022年3月2日,甲骨文Oracle暂定了俄罗斯的所有业务,说明技术有国界,并且是建立利益之上的。

1.2 个人感悟

日常工作中,总能听见这样的声音,Oracle很强,国产数据库很难超越O,如果要用国产数据库,应用可能会不稳定。

作为一名DBA,应该有这样的意识,在国家信创的号召下,勇担一名国产化先锋,尝试为国产化做出一点贡献,为企业的国产化转型带来更多有价值的信息。

小编最近还看到一则新闻,歼-20 换装中国"心",这和国产化数据库的道路挺相似的。

航空发动机是飞机的心脏,是要不来、求不来的!如果是引进,我们的发展就受制于别人的发动机的性能。发动机的发展可以促进飞机的发展,我们要发展自己的军用飞机,航空发动机就必须做强,而且要自主保障。

所以,国产化不仅是为了自主可控,而且也是为了满足我国未来多样化的大数据业务场景。

国产化从不再受制于人,再到超越他人!

二. 迁移工具

说好了要国产化,那我们首先要做的是如何把数据迁移到国产数据库。

那本文主要分享OceanBase社区版的开源工具,目前小编知道有以下迁移工具,如有遗漏,欢迎补充!

因为OceanBase数据迁移的内容实在是太多了,所以,小编分成上卷、中卷、下卷共三卷,本文主要分享上卷内容。

三. 演示环境准备

3.1 前提须知

因为只做数据迁移的测试,不做性能测试,所以,文本要求的迁移数据量不会特别大,但尽可能让基本的数据类型覆盖全面(小编使用Employees数据库和Tpcc-MySQl数据库)。

3.2 MySQL 环境

演示环境默认使用MySQL 8.0.27,除非有特殊说明。

| ID | 操作系统 | IP | 版本 | CPU | 内存 | InnoDB_Buffer_Cache |

|---|---|---|---|---|---|---|

| 1 | RHEL7 | 192.168.117.170 | 5.7.30 | 8个 | 16GB | 2GB |

| 2 | RHEL7 | 192.168.117.170 | 8.0.27 | 8个 | 16GB | 8GB |

3.3 OceanBase环境

| ID | 角色 | 操作系统 | IP地址 | 软件版本 | OS CPU | OS 内存 | Memory | 测试租户 | 作用 |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 迁移工具 | RHEL7 | 192.168.117.170 | / | 8个 | 12GB | / | mys_tpcc | MySQL DB、OBproxy、OBDUMPER&OBLOADER、DataX |

| 2 | OBServer1 | RHEL7 | 192.168.117.171 | 3.1.2 | 16个 | 12GB | 8GB | mys_tpcc | OB DB |

| 3 | OBServer2 | RHEL7 | 192.168.117.172 | 3.1.2 | 16个 | 12GB | 8GB | mys_tpcc | OB DB |

| 4 | OBServer3 | RHEL7 | 192.168.117.173 | 3.1.2 | 16个 | 12GB | 8GB | mys_tpcc | OB DB |

3.2 数据准备

注意:本文MySQL 5.7.30和MySQL 8.0.27 都会创建Employees数据库和Tpcc-MySQL数据库,并且使用mysqldump方式进行导出、导入,确保两个数据库的数据和数量是一致的。

3.2.1 EMPLOYEES

Employees数据库 : 点击下载

解压 和 授权

[root@RHOB-DB00 MySQL]# pwd /software/MySQL [root@RHOB-DB00 MySQL]# unzip test_db-master.zip [root@RHOB-DB00 MySQL]# chown -R mysql:mysql test_db-master

创建Employees数据库

[root@RHOB-DB00 test_db-master]# mysql -h 192.168.117.170 -P 3310 -uroot -p'123456' < /software/MySQL/test_db-master/employees.sql

Employees数据库的数据量

| ID | Tables_in_employees | 类型 | 数据量 |

|---|---|---|---|

| 1 | departments | 表 | 9 |

| 2 | dept_emp | 表 | 331603 |

| 3 | dept_manager | 表 | 24 |

| 4 | employees | 表 | 300024 |

| 5 | salaries | 表 | 2844047 |

| 6 | titles | 表 | 443308 |

| 7 | current_dept_emp | 视图 | 300024 |

| 8 | dept_emp_latest_date | 视图 | 300024 |

3.2.2 tpcc-mysql

tpcc-mysql数据库 : 点击下载

TPC-C是专门针对联机交易处理系统(OLTP系统)的规范,由percona根据规范实现,一般情况下我们也把这类系统称为业务处理系统。

tpcc-mysql是percona基于TPC-C衍生出来的产品,专用于MySQL基准测试。

本次基于 MySQL 8 进行编译,小编非常羡慕其他作者,解压和make直接编译通过,自己却遇到了N多问题。

不过部署类的问题,基本上都不是事。

3.2.2.1 profile配置

[root@RHOB-DB00 ~]# cat .bash_profile|grep -Ev '^#|^$' if [ -f ~/.bashrc ]; then . ~/.bashrc fi export MYSQL_HOME=/mysql/app/mysql-8.0.27 export C_INCLUDE_PATH=$MYSQL_HOME/include export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase/lib:$MYSQL_HOME/lib PATH=$PATH:$HOME/bin:$MYSQL_HOME/bin export PATH

3.2.2.2 openssl 升级版本

3.2.2.2.1 解压openssl软件包

[root@RHOB-DB00 tool]# pwd /software/tool [root@RHOB-DB00 tool]# tar -zxvf openssl-1.1.1c.tar.gz

3.2.2.2.2 make

[root@RHOB-DB00 tool]# cd openssl-1.1.1c [root@RHOB-DB00 openssl-1.1.1c]# ./config --prefix=/usr/local/openssl [root@RHOB-DB00 openssl-1.1.1c]# make && make install

3.2.2.2.3 软链接

[root@RHOB-DB00 tool]# rm -rf /usr/bin/openssl [root@RHOB-DB00 tool]# rm -rf /usr/lib64/openssl [root@RHOB-DB00 tool]# rm -rf /usr/lib64/libssl.so [root@RHOB-DB00 openssl-1.1.1c]# ln -s /usr/local/openssl/bin/openssl /usr/bin/openssl [root@RHOB-DB00 openssl-1.1.1c]# ln -s /usr/local/openssl/include/openssl /usr/include/openssl [root@RHOB-DB00 openssl-1.1.1c]# ln -s /usr/local/openssl/lib/libssl.so /usr/lib64/libssl.so [root@RHOB-DB00 openssl-1.1.1c]# echo "/usr/local/openssl/lib" >> /etc/ld.so.conf

3.2.2.2.4 检查lib包

确保libssl.so.1.1和libcrypto.so.1.1是OK的。

[root@RHOB-DB00 openssl-1.1.1c]# ldconfig -v |grep -Ei 'libssl|crypt' ldconfig: Can't stat /libx32: No such file or directory ldconfig: Path `/usr/lib' given more than once ldconfig: Path `/usr/lib64' given more than once ldconfig: Can't stat /usr/libx32: No such file or directory libssl.so.1.1 -> libssl.so.1.1 libcrypto.so.1.1 -> libcrypto.so.1.1 libbd_crypto.so.2 -> libbd_crypto.so.2.0.0 libcryptsetup.so.4 -> libcryptsetup.so.4.7.0 libcryptsetup.so.12 -> libcryptsetup.so.12.3.0 libssl3.so -> libssl3.so libk5crypto.so.3 -> libk5crypto.so.3.1 libssl.so.10 -> libssl.so.1.0.2k libcrypto.so.10 -> libcrypto.so.1.0.2k libgcrypt.so.11 -> libgcrypt.so.11.8.2 libcrypt.so.1 -> libcrypt-2.17.so

3.2.2.2.5 检查openssl的版本

[root@RHOB-DB00 openssl-1.1.1c]# openssl version OpenSSL 1.1.1c 28 May 2019

3.2.2.3 libmariadb.so

pencona的东西居然用到了mariadb,惊呆了小编了。

也许是因为检查没有mysqlclient,触发寻找mariab ?

这里有兴趣的同学在这一步可以尝试下是否需要libmariadb.so,在RHEL7的环境使用MySQL8编译Tpcc-MySQL源码包。

[root@RHOB-DB00 lib]# ln -s /mysql/app/mysql-8.0.27/lib/libmysqlclient.so.21.2.27 /usr/lib/libmariadb.so

3.2.2.4 解压 tpcc-mysql

[root@RHOB-DB00 DM]# pwd /software/DM [root@RHOB-DB00 DM]# unzip -qo tpcc-mysql-master.zip [root@RHOB-DB00 DM]# cd tpcc-mysql-master/src

3.2.2.5 修改Makefile

修改Makefile,不然编译不过去。

在tpcc_start处,添加"-lpthread -lm "。

[root@RHOB-DB00 src]# cat Makefile

#

# "make all" to build necessary executables.

#

LIBS= `mysql_config --libs_r` -lrt

INC= -I. `mysql_config --include`

#DEFS= -DDEBUG

CFLAGS= -w -O3 -g

TRANSACTIONS= neword.o payment.o ordstat.o delivery.o slev.o

OBJS= main.o spt_proc.o driver.o support.o sequence.o rthist.o sb_percentile.o $(TRANSACTIONS)

.SUFFIXES:

.SUFFIXES: .o .c

.c.o:

$(CC) $(CFLAGS) $(INC) $(DEFS) -c $*.c

all: ../tpcc_load ../tpcc_start

../tpcc_load : load.o support.o

$(CC) load.o support.o $(LIBS) -o ../tpcc_load

../tpcc_start : $(OBJS)

$(CC) $(OBJS) $(LIBS) -o ../tpcc_start -lpthread -lm

clean :

rm -f *.o

3.2.2.6 make (tpcc-mysql)

[root@RHOB-DB00 src]# pwd /software/DM/tpcc-mysql-master/src [root@RHOB-DB00 src]# make cc -w -O3 -g -I. `mysql_config --include` -c load.c cc -w -O3 -g -I. `mysql_config --include` -c support.c cc load.o support.o `mysql_config --libs_r` -lrt -o ../tpcc_load cc -w -O3 -g -I. `mysql_config --include` -c main.c cc -w -O3 -g -I. `mysql_config --include` -c spt_proc.c cc -w -O3 -g -I. `mysql_config --include` -c driver.c cc -w -O3 -g -I. `mysql_config --include` -c sequence.c cc -w -O3 -g -I. `mysql_config --include` -c rthist.c cc -w -O3 -g -I. `mysql_config --include` -c sb_percentile.c cc -w -O3 -g -I. `mysql_config --include` -c neword.c cc -w -O3 -g -I. `mysql_config --include` -c payment.c cc -w -O3 -g -I. `mysql_config --include` -c ordstat.c cc -w -O3 -g -I. `mysql_config --include` -c delivery.c cc -w -O3 -g -I. `mysql_config --include` -c slev.c cc main.o spt_proc.o driver.o support.o sequence.o rthist.o sb_percentile.o neword.o payment.o ordstat.o delivery.o slev.o `mysql_config --libs_r` -lrt -o ../tpcc_start -lpthread -lm

3.2.2.7 检查工具

检查 tpcc_load 和 tpcc_start 是否能够正常使用。

[root@RHOB-DB00 src]# cd .. [root@RHOB-DB00 tpcc-mysql-master]# ./tpcc_load -h ************************************* *** TPCC-mysql Data Loader *** ************************************* ./tpcc_load: option requires an argument -- 'h' Usage: tpcc_load -h server_host -P port -d database_name -u mysql_user -p mysql_password -w warehouses -l part -m min_wh -n max_wh * [part]: 1=ITEMS 2=WAREHOUSE 3=CUSTOMER 4=ORDERS [root@RHOB-DB00 tpcc-mysql-master]# ./tpcc_start -h *************************************** *** ###easy### TPC-C Load Generator *** *************************************** ./tpcc_start: option requires an argument -- 'h' Usage: tpcc_start -h server_host -P port -d database_name -u mysql_user -p mysql_password -w warehouses -c connections -r warmup_time -l running_time -i report_interval -f report_file -t trx_file [root@RHOB-DB00 tpcc-mysql-master]# pwd /software/DM/tpcc-mysql-master

3.2.2.8 制造数据

创建表和索引

mysql> create database tpcc10;

[root@RHOB-DB00 tpcc-mysql-master]# mysql -h 192.168.117.170 -P 3310 -uroot -p123456 tpcc10 < create_table.sql

[root@RHOB-DB00 tpcc-mysql-master]# mysql -h 192.168.117.170 -P 3310 -uroot -p123456 tpcc10 < add_fkey_idx.sql

加载数据,本次加载10个数据仓库。

[root@RHOB-DB00 tpcc-mysql-master]# ./tpcc_load -h192.168.117.170 -P 3310 -d tpcc10 -u root -p "123456" -w 10

检查数据量

mysql> show table status;

+------------+--------+---------+------------+---------+----------------+-------------+-----------------+--------------+-----------+----------------+---------------------+-------------+------------+--------------------+----------+----------------+---------+

| Name | Engine | Version | Row_format | Rows | Avg_row_length | Data_length | Max_data_length | Index_length | Data_free | Auto_increment | Create_time | Update_time | Check_time | Collation | Checksum | Create_options | Comment |

+------------+--------+---------+------------+---------+----------------+-------------+-----------------+--------------+-----------+----------------+---------------------+-------------+------------+--------------------+----------+----------------+---------+

| customer | InnoDB | 10 | Dynamic | 269210 | 657 | 176930816 | 0 | 20529152 | 6291456 | NULL | 2022-03-07 21:02:39 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| district | InnoDB | 10 | Dynamic | 89 | 184 | 16384 | 0 | 0 | 0 | NULL | 2022-03-07 21:02:39 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| history | InnoDB | 10 | Dynamic | 282980 | 68 | 19447808 | 0 | 10518528 | 6291456 | NULL | 2022-03-07 21:02:39 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| item | InnoDB | 10 | Dynamic | 91354 | 97 | 8929280 | 0 | 0 | 4194304 | NULL | 2022-03-07 21:02:26 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| new_orders | InnoDB | 10 | Dynamic | 85414 | 30 | 2637824 | 0 | 0 | 4194304 | NULL | 2022-03-07 21:02:39 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| order_line | InnoDB | 10 | Dynamic | 2957421 | 71 | 210518016 | 0 | 102514688 | 5242880 | NULL | 2022-03-07 21:02:39 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| orders | InnoDB | 10 | Dynamic | 285408 | 42 | 12075008 | 0 | 8929280 | 4194304 | NULL | 2022-03-07 21:02:39 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| stock | InnoDB | 10 | Dynamic | 913125 | 374 | 341704704 | 0 | 21544960 | 5242880 | NULL | 2022-03-07 21:02:39 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

| warehouse | InnoDB | 10 | Dynamic | 8 | 2048 | 16384 | 0 | 0 | 0 | NULL | 2022-03-07 21:02:26 | NULL | NULL | utf8mb4_0900_ai_ci | NULL | | |

+------------+--------+---------+------------+---------+----------------+-------------+-----------------+--------------+-----------+----------------+---------------------+-------------+------------+--------------------+----------+----------------+---------+

9 rows in set (0.00 sec)

统计信息不一定准确,请以select count(*) 为准。

| ID | Tables_in_tpcc10 | 数据量 |

|---|---|---|

| 1 | customer | 300000 |

| 2 | district | 100 |

| 3 | history | 300000 |

| 4 | item | 100000 |

| 5 | new_orders | 90000 |

| 6 | order_line | 2999898 |

| 7 | orders | 300000 |

| 8 | stock | 1000000 |

| 9 | warehouse | 10 |

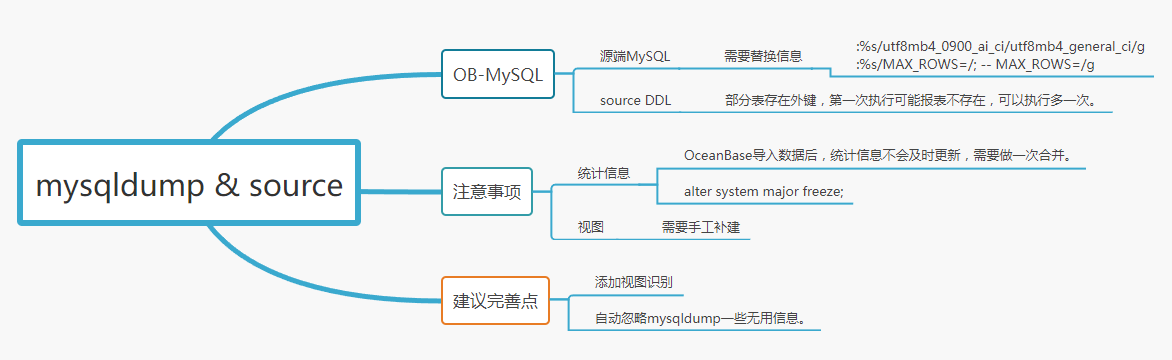

四. mysqldump 和 source

4.1 MySQL导出数据

数据源:EMPLOYESS 数据库

4.1.1 mysqldump 导出

4.1.1.1 mysqldump导出表结构

使用MySQL8 mysqldump 导出表结构

[root@RHOB-DB00 dump]# pwd /mysql/dump [root@RHOB-DB00 dump]# mysqldump -h 192.168.117.170 -uroot -P3310 -p123456 -d employees --compact > employees_ddl.sql

导出的脚本有几个特征:

- 视图的定义也会在里面,但是会以注释。视图可以后续补建。

- OceanBase MYSQL 不支持特别的语法,需要替换掉其中部分。比如说变量 SQL_NOTES,DEFINER 语句等。MAX_ROWS的问题可以直接替换掉。

vim employees_ddl.sql :%s/utf8mb4_0900_ai_ci/utf8mb4_general_ci/g :%s/MAX_ROWS=/; -- MAX_ROWS=/g

4.1.1.2 mysqldump导出表数据

[root@RHOB-DB00 dump]# mysqldump -h 192.168.117.170 -uroot -P3310 -p123456 -t employees > employees_data.sql

4.2 OB导入数据

数据源:EMPLOYESS 数据库

4.2.1 导入表结构

[root@RHOB-DB00 ~]# cd /mysql/dump/ [root@RHOB-DB00 dump]# pwd /mysql/dump [root@RHOB-DB00 dump]# obclient -h 192.168.117.170 -u root@mys_tpcc#obdemo -P 2883 -p'123456' -c -A employees MySQL [employees]> source employees_ddl.sql

检查对象是否存在,后续再补视图。

MySQL [employees]> show tables;

+---------------------+

| Tables_in_employees |

+---------------------+

| departments |

| dept_emp |

| dept_manager |

| employees |

| salaries |

| titles |

+---------------------+

6 rows in set (0.005 sec)

4.2.2 导入数据

导入数据前,需要禁用外键。

[root@RHOB-DB00 ~]# cd /mysql/dump/

[root@RHOB-DB00 dump]# pwd

/mysql/dump

[root@RHOB-DB00 dump]# obclient -h 192.168.117.170 -u root@mys_tpcc#obdemo -P 2883 -p'123456' -c -A employees

Welcome to the OceanBase. Commands end with ; or \g.

Your MySQL connection id is 524299

# 禁用外键

MySQL [employees]> set global foreign_key_checks=off;

Query OK, 0 rows affected (0.005 sec)

MySQL [employees]> source employees_data.sql;

...

4.2.3 创建视图

MySQL [employees]> CREATE VIEW `current_dept_emp` AS select `l`.`emp_no` AS `emp_no`,`d`.`dept_no` AS `dept_no`,`l`.`from_date` AS `from_date`,`l`.`to_date` AS `to_date` from (`dept_emp` `d` join `dept_emp_latest_date` `l` on(((`d`.`emp_no` = `l`.`emp_no`) and (`d`.`from_date` = `l`.`from_date`) and (`l`.`to_date` = `d`.`to_date`))));

ERROR 1146 (42S02): Table 'employees.dept_emp_latest_date' doesn't exist

(root@192.168.117.171) [employees]>CREATE VIEW `dept_emp_latest_date` AS select `dept_emp`.`emp_no` AS `emp_no`,max(`dept_emp`.`from_date`) AS `from_date`,max(`dept_emp`.`to_date`) AS `to_date` from `dept_emp` group by `dept_emp`.`emp_no`;

Query OK, 0 rows affected (0.166 sec)

MySQL [employees]> CREATE VIEW `current_dept_emp` AS select `l`.`emp_no` AS `emp_no`,`d`.`dept_no` AS `dept_no`,`l`.`from_date` AS `from_date`,`l`.`to_date` AS `to_date` from (`dept_emp` `d` join `dept_emp_latest_date` `l` on(((`d`.`emp_no` = `l`.`emp_no`) and (`d`.`from_date` = `l`.`from_date`) and (`l`.`to_date` = `d`.`to_date`))));

Query OK, 0 rows affected (0.084 sec)

# 查看对象是否齐全

MySQL [employees]> show tables;

+----------------------+

| Tables_in_employees |

+----------------------+

| current_dept_emp |

| departments |

| dept_emp |

| dept_emp_latest_date |

| dept_manager |

| employees |

| salaries |

| titles |

+----------------------+

8 rows in set (0.004 sec)

4.2.4 检查数据

经检查,OceanBase的数据与源端一致。

4.2.5 启用外键

MySQL [employees]> set global foreign_key_checks=on; Query OK, 0 rows affected (0.022 sec)

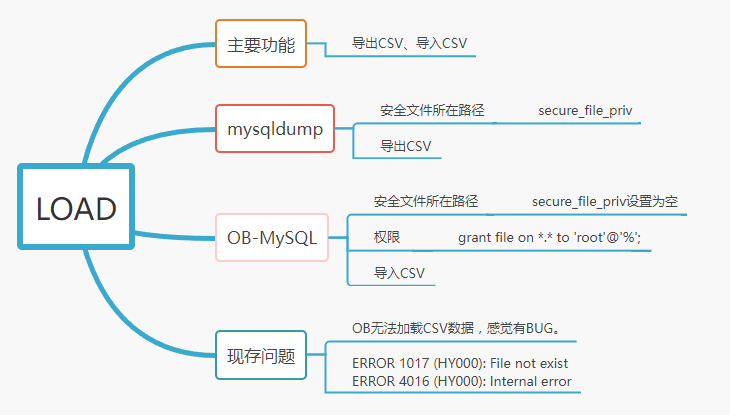

五. Load

5.1 MySQL 导出CSV

数据源:EMPLOYESS 数据库

5.1.1 设置安全文件路径

在参数文件设置,需要重启数据库。

mysql> show global variables like '%secure_file_priv%';

+------------------+--------------+

| Variable_name | Value |

+------------------+--------------+

| secure_file_priv | /mysql/dump/ |

+------------------+--------------+

1 row in set (0.02 sec)

5.1.2 检查表数据量

mysql> use employees;

Database changed

mysql> select count(*) from employees;

+----------+

| count(*) |

+----------+

| 300024 |

+----------+

1 row in set (0.08 sec)

5.1.3 导出CSV数据

mysql> select * from employees into outfile '/mysql/dump/t_employees.csv' fields terminated by ',' enclosed by '"' lines terminated by '\n' ;

Query OK, 300024 rows affected (0.26 sec)

[root@RHOB-DB00 dump]# ls -lh t_employees.csv

-rw-r----- 1 mysql mysql 17M Mar 12 00:24 t_employees.csv

5.1.4 传至OB服务器

[root@RHOB-DB00 dump]# scp /mysql/dump/t_employees.csv root@RHOB-DB01:/mysql/dump/

5.2 OceanBase加载CSV

数据源:EMPLOYESS 数据库

5.2.1 secure_file_priv

首先修改安全文件所在路径,设置为空(即无需检查)

set global secure_file_priv = "";

5.2.2 授予 file 权限

MySQL [employees]> grant file on *.* to 'root'@'%';

5.2.3 加载数据

这个环境小编感觉操作没有什么问题,待小编请教原厂后,再更新这个问题。

MySQL [employees]> create table employees2 select * from employees where 1=2;

MySQL [employees]> load data infile '/mysql/dump/t_employees.csv' into table employees2 fields terminated by ',' lines terminated by '\n';

ERROR 1017 (HY000): File not exist

感觉这里OceanBase加载CSV的操作应该没有问题,但检查了权限和路径都没有问题,真不知道是不是BUG。

ERROR 1017 (HY000): File not exist

ERROR 4016 (HY000): Internal error

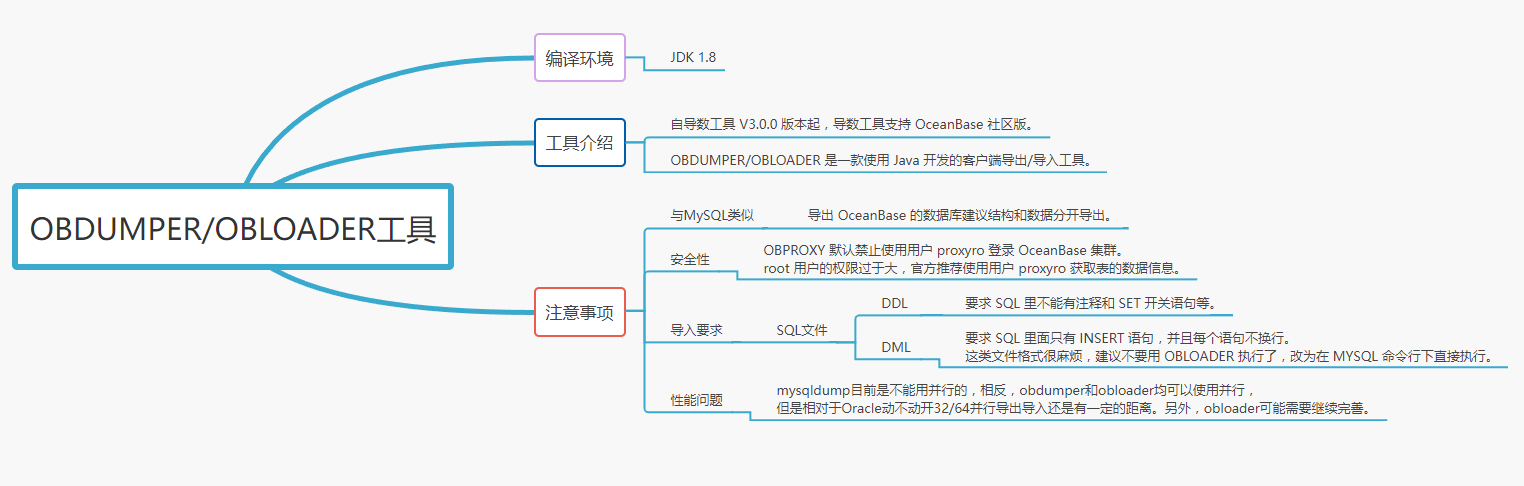

六. OBDUMPER 和 OBLOADER 工具

6.1 部署 OBDUMPER 和 OBLOADER 工具

6.1.1 解压压缩包

[root@RHOB-DB00 DM]# unzip ob-loader-dumper-3.0.0-RELEASE-ce.zip [root@RHOB-DB00 DM]# mv ob-loader-dumper-3.0.0-RELEASE-ce obdump

6.1.2 Java环境变量

如果是使用OpenJdk,请使用以下配置。

[root@RHOB-DB00 ~]# which java /usr/bin/java [root@RHOB-DB00 ~]# java -version openjdk version "1.8.0_181" OpenJDK Runtime Environment (build 1.8.0_181-b13) OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

6.1.3 加载环境变量

编辑 profile,添加工具的路径,随时随地使用。

[root@RHOB-DB00 ~]# cat .bash_profile |grep -Ei 'export|path' export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.181-7.b13.el7.x86_64/jre export MYSQL_HOME=/mysql/app/mysql-8.0.27 export C_INCLUDE_PATH=$MYSQL_HOME/include export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase/lib:$MYSQL_HOME/lib PATH=$PATH:$HOME/bin:$MYSQL_HOME/bin:/software/DM/obdump/bin export PATH

检测工具是否能够使用,OK !

[root@RHOB-DB00 obdump]# pwd /software/DM/obdump [root@RHOB-DB00 ~]# obdumper --help ____ ____ / __ \ _____ ___ ____ _ ____ / __ ) ____ _ _____ ___ / / / / / ___/ / _ \ / __ `/ / __ \ / __ | / __ `/ / ___/ / _ \ / /_/ / / /__ / __// /_/ / / / / / / /_/ / / /_/ / (__ ) / __/ \____/ \___/ \___/ \__,_/ /_/ /_/ /_____/ \__,_/ /____/ \___/ Usage: obdumper [-HV] [--add-extra-message] [--all] [--csv] [--cut] [--ddl] [--distinct] [--drop-object] [--public-cloud] [--remove-newline] [--retain-empty-files] [--retry] ... ...

6.2 OBDUMPER导出

OB官方建议导出 MySQL 数据库一样,导出 OceanBase 的数据库建议结构和数据分开导出。

如果导出表结构,需要指定 SYS 租户的用户 root 的密码或者用户 proxyro 和 其密码。

为了安全性,OBPROXY 默认禁止使用用户 proxyro 登录 OceanBase 集群。

root 用户的权限过于大。所以这里推荐使用用户 proxyro 获取表的元数据信息。

6.2.1 跳过proxyro的检测

修改 OBPROXY 参数跳过用户 proxyro 登录检查。否则,就算输入正确的密码,也无法登陆,并且会报以下错误。

ERROR 2013 (HY000): Lost connection to MySQL server at 'reading authorization packet', system error: 11

修改skip_proxyro_check参数为true。

[admin@RHOB-DB00 ~]$ obclient -h 192.168.117.170 -u root@proxysys -P 2883 -p'Sysproxy@123' (root@proxysys@192.168.117.170) [(none)]>alter proxyconfig set skip_proxyro_check=true; Query OK, 0 rows affected (0.009 sec) (root@proxysys@192.168.117.170) [(none)]> show proxyconfig like '%skip_proxyro_check%'; +--------------------+-------+--------------------------------------------------+-------------+---------------+ | name | value | info | need_reboot | visible_level | +--------------------+-------+--------------------------------------------------+-------------+---------------+ | skip_proxyro_check | True | used for proxro@sys, if set false, access denied | false | SYS | +--------------------+-------+--------------------------------------------------+-------------+---------------+ 1 row in set (0.001 sec)

测试 proxyro 账号是否能够执行查询操作。

[admin@RHOB-DB01 ~]$ obclient -h 192.168.117.171 -P 2883 -u proxyro@sys#obdemo -p'Serproxy@123' -c -A -Ns -e "show databases;" oceanbase information_schema [admin@RHOB-DB01 ~]$ obclient -h 192.168.117.171 -P 2883 -u proxyro@sys#obdemo -p'Serproxy@123' -c -A Welcome to the OceanBase. Commands end with ; or \g. Your MySQL connection id is 45 Server version: 5.6.25 OceanBase 3.1.2 (r10000392021123010-d4ace121deae5b81d8f0b40afbc4c02705b7fc1d) (Built Dec 30 2021 02:47:29) (proxyro@192.168.117.171) [(none)]>show databases; +--------------------+ | Database | +--------------------+ | oceanbase | | information_schema | +--------------------+ 2 rows in set (0.102 sec) [admin@RHOB-DB01 ~]$ obclient -h 192.168.117.171 -uroot@mys_tpcc#obdemo -P2883 -p -c -A Enter password: Welcome to the OceanBase. Commands end with ; or \g. Your MySQL connection id is 46 Server version: 5.6.25 OceanBase 3.1.2 (r10000392021123010-d4ace121deae5b81d8f0b40afbc4c02705b7fc1d) (Built Dec 30 2021 02:47:29) (root@192.168.117.171) [(none)]>select user,host from mysql.user; +------------+------+ | user | host | +------------+------+ | root | % | | ORAAUDITOR | % | +------------+------+ 2 rows in set (0.361 sec) (root@192.168.117.171) [(none)]>alter user 'root'@'%' identified by "123456"; Query OK, 0 rows affected (0.138 sec)

6.2.2 OBDUMPER导出数据

本次主要演示导出 表结构DDL 和 数据(CSV格式)。

数据源:EMPLOYESS 数据库

6.2.2.1 导出表结构

6.2.2.1.1 导出sql语句

(可选) 一次性将表结构和表数据一起导出,此导出只做命令演示。

注意 : OBLOADER接受不了过于复杂的语句,而且要求文本格式比较严格,对于此类问题,官方推荐使用OB-MySQL的方式直接执行SQL脚本。

[admin@RHOB-DB01 test_obdump1]$ nohup obdumper -h 192.168.117.171 -P 2883 -u root -p 123456 --sys-user=proxyro --sys-password=Serproxy@123 -c obdemo -t mys_tpcc-D employees --sql --all -f /home/admin/dump/test_obdump2 > obdumper_employees_sql.log &

6.2.2.1.2 导出DDL

本文使用官方推荐命令导出DDL语句。

[admin@RHOB-DB01 ~]$ obdumper -h 192.168.117.171 -P 2883 -u root -p 123456 --sys-user=proxyro --sys-password=Serproxy@123 -c obdemo -t mys_tpcc-D employees --ddl --all -f /home/admin/dump/test_obdump1 2022-03-11 20:37:18 [INFO] Parsed args: [--host] 192.168.117.171 [--port] 2883 [--user] root [--tenant] mys_tpcc [--cluster] obdemo [--password] ****** [--database] employees [--sys-user] proxyro [--sys-password] ****** [--ddl] true [--file-path] /home/admin/dump/test_obdump1 [--all] true 2022-03-11 20:37:18 [INFO] Load jdbc driver class: "org.mariadb.jdbc.Driver" finished ... ... ... 2022-03-11 20:37:26 [INFO] Close connection count: 32 of the DataSource. Key: 172_16_43_14_11532_639183135_oceanbase 2022-03-11 20:37:26 [INFO] Shutdown task context finished 2022-03-11 20:37:26 [INFO] Finished Tasks: 2 Running Tasks: 0 Progress: 100.00% 2022-03-11 20:37:26 [INFO] All Dump Tasks Finished: ---------------------------------------------------------------------------------------------------------------------------- No.# | Type | Name | Count | Status ---------------------------------------------------------------------------------------------------------------------------- 1 | TABLE | salaries | 1 | SUCCESS 2 | TABLE | dept_emp | 2 | SUCCESS 3 | TABLE | dept_manager | 2 | SUCCESS 4 | TABLE | titles | 1 | SUCCESS 5 | TABLE | departments | 1 | SUCCESS 6 | TABLE | employees | 1 | SUCCESS 7 | VIEW | dept_emp_latest_date | 1 | SUCCESS 8 | VIEW | current_dept_emp | 1 | SUCCESS ---------------------------------------------------------------------------------------------------------------------------- Total Count: 10 End Time: 2022-03-11 20:37:26 2022-03-11 20:37:26 [INFO] Dump schema finished. Total Elapsed: 6.179 s 2022-03-11 20:37:26 [INFO] System exit 0

查看导出的文件是什么内容,就是一些SQL语句脚本。

[admin@RHOB-DB01 dump]$ tree test_obdump1/ test_obdump1/ ├── data │ ├── CHECKPOINT.bin │ ├── employees │ │ ├── TABLE │ │ │ ├── departments-schema.sql │ │ │ ├── dept_emp-schema.sql │ │ │ ├── dept_manager-schema.sql │ │ │ ├── employees-schema.sql │ │ │ ├── salaries-schema.sql │ │ │ └── titles-schema.sql │ │ └── VIEW │ │ ├── current_dept_emp-schema.sql │ │ └── dept_emp_latest_date-schema.sql │ └── MANIFEST.bin └── logs ├── ob-loader-dumper.error ├── ob-loader-dumper.info └── ob-loader-dumper.warn 5 directories, 13 files

6.2.2.2 导出表数据-CSV格式

数据源:EMPLOYESS 数据库

[admin@RHOB-DB01 test_obdump1]$ nohup obdumper -h 192.168.117.171 -P 2883 -u root -p 123456 --sys-user=proxyro --sys-password=Serproxy@123 -c obdemo -t mys_tpcc -D employees --csv --all -f /home/admin/dump/test_obdump1/csv > obdumper_employees.log &

...

...

2022-03-11 21:27:27 [INFO] Dump 2844047 rows employees.salaries to "/home/admin/dump/test_obdump1/csv/data/employees/TABLE/salaries.csv" finished

2022-03-11 21:27:27 [INFO] Close connection count: 8 of the DataSource. Key: 192_168_117_171_11532_413522392_employees

2022-03-11 21:27:27 [INFO] Close connection count: 6 of the DataSource. Key: 192_168_117_171_11532_639183135_oceanbase

2022-03-11 21:27:27 [INFO] Shutdown task context finished

2022-03-11 21:27:27 [INFO]

Finished Tasks: 6 Running Tasks: 0 Progress: 100.00%

2022-03-11 21:27:27 [INFO]

All Dump Tasks Finished:

----------------------------------------------------------------------------------------------------------------------------

No.# | Type | Name | Count | Status

----------------------------------------------------------------------------------------------------------------------------

1 | TABLE | salaries | 2844047 | SUCCESS

2 | TABLE | dept_emp | 331603 | SUCCESS

3 | TABLE | dept_manager | 24 | SUCCESS

4 | TABLE | departments | 9 | SUCCESS

5 | TABLE | titles | 443308 | SUCCESS

6 | TABLE | employees | 300024 | SUCCESS

----------------------------------------------------------------------------------------------------------------------------

Total Count: 3919015 End Time: 2022-03-11 21:27:27

2022-03-11 21:27:27 [INFO] Unnecessary to merge the data files. As --file-name is missing

2022-03-11 21:27:27 [INFO] Dump record finished. Total Elapsed: 43.99 s

2022-03-11 21:27:27 [INFO] Unnecessary to upload the data files to the remote cloud storage service

2022-03-11 21:27:27 [INFO] System exit 0

6.3 OBLOADER导入工具

6.3.1 导入表结构

因为本次演示是在同一个租户里面进行,所以,创建一个名为employees2数据库。

6.3.1.1 创建数据库

(root@192.168.117.170) [employees]>create database employees2;

Query OK, 1 row affected (0.105 sec)

6.3.1.2 执行导入表结构

[admin@RHOB-DB01 ~]$ obloader -h 192.168.117.170 -P 2883 -u root -p 123456 --sys-user=proxyro --sys-password=Serproxy@123 -c obdemo -t mys_tpcc -D employees2 --ddl --all -f /home/admin/dump/test_obdump1 2022-03-11 21:41:30 [INFO] Parsed args: [--host] 192.168.117.170 [--port] 2883 [--user] root [--tenant] mys_tpcc [--cluster] obdemo [--password] ****** [--database] employees2 [--sys-user] proxyro [--sys-password] ****** [--ddl] true [--file-path] /home/admin/dump/test_obdump1 [--all] true 2022-03-11 21:41:30 [INFO] No control files were defined in the path: "/home/admin/dump/test_obdump1" ... ... All Load Tasks Finished: ---------------------------------------------------------------------------------------------------------------------------- No.# | Type | Name | Count | Status ---------------------------------------------------------------------------------------------------------------------------- 1 | TABLE | salaries | 1 | SUCCESS 2 | TABLE | dept_emp | 2 | SUCCESS 3 | TABLE | dept_manager | 2 | SUCCESS 4 | TABLE | titles | 1 | SUCCESS 5 | TABLE | departments | 1 | SUCCESS 6 | TABLE | employees | 1 | SUCCESS 7 | VIEW | dept_emp_latest_date | 1 | SUCCESS 8 | VIEW | current_dept_emp | 1 | SUCCESS ---------------------------------------------------------------------------------------------------------------------------- Total Count: 10 End Time: 2022-03-11 21:41:35 2022-03-11 21:41:35 [INFO] Load schema finished. Total Elapsed: 4.123 s 2022-03-11 21:41:35 [INFO] System exit 0

查看表是否创建完成

(root@192.168.117.171) [employees2]>show tables; +----------------------+ | Tables_in_employees2 | +----------------------+ | current_dept_emp | | departments | | dept_emp | | dept_emp_latest_date | | dept_manager | | employees | | salaries | | titles | +----------------------+ 8 rows in set (0.008 sec)

6.3.2 导入表数据

6.3.2.1 禁用外键

不禁用外键,导入数据会报错。禁用外键这个操作,是实例级别,如果能提供以表级别的语句就更好了。

(root@192.168.117.171) [employees2]> set global foreign_key_checks=off;

6.3.2.2 导入CSV

这里希望OceanBase可以借鉴Oracle impdp的导入日志,比较简单清晰,直接告诉用户哪张表导入完成,显示数据行等等。

nohup obloader -h 192.168.117.171 -P 2883 -u root -p 123456 --sys-user=proxyro --sys-password=Serproxy@123 -c obdemo -t mys_tpcc -D employees2 --csv --all -f /home/admin/dump/test_obdump1/csv > obloader_employees2.log &

2022-03-11 21:47:19 [INFO]

Finished Tasks: 6 Running Tasks: 1 Progress: 85.73%

...

...

Total Count: 3919015 End Time: 2022-03-11 21:47:21

2022-03-11 21:47:21 [INFO] Load record finished. Total Elapsed: 2.193 min

2022-03-11 21:47:21 [INFO] System exit 0

6.3.2.3 启用外键

(root@192.168.117.171) [employees2]> set global foreign_key_checks=on;

6.4 统计信息

6.4.1 查看统计信息

发现统计信息没有变化

[admin@RHOB-DB00 ~]$ obclient -h 192.168.117.170 -u root@proxysys -P 2883 -p'Sysproxy@123'

root@192.168.117.170) [employees2]>show table status;

+----------------------+-----------+---------+------------+------+----------------+-------------+-----------------+--------------+-----------+----------------+----------------------------+----------------------------+------------+--------------------+----------+-------------------------------------------------------------------------------------------------------------------------------------------------+---------+

| Name | Engine | Version | Row_format | Rows | Avg_row_length | Data_length | Max_data_length | Index_length | Data_free | Auto_increment | Create_time | Update_time | Check_time | Collation | Checksum | Create_options | Comment |

+----------------------+-----------+---------+------------+------+----------------+-------------+-----------------+--------------+-----------+----------------+----------------------------+----------------------------+------------+--------------------+----------+-------------------------------------------------------------------------------------------------------------------------------------------------+---------+

| current_dept_emp | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | VIEW |

| departments | oceanbase | 7 | COMPACT | 0 | 0 | 0 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 12:12:05.348498 | NULL | utf8mb4_general_ci | 0 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| dept_emp | oceanbase | 7 | COMPACT | 0 | 0 | 0 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 12:22:54.868438 | NULL | utf8mb4_general_ci | 0 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| dept_emp_latest_date | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | VIEW |

| dept_manager | oceanbase | 7 | COMPACT | 0 | 0 | 0 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 12:12:09.251399 | NULL | utf8mb4_general_ci | 0 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| employees | oceanbase | 7 | COMPACT | 0 | 0 | 0 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 12:22:54.868438 | NULL | utf8mb4_general_ci | 0 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| salaries | oceanbase | 7 | COMPACT | 0 | 0 | 0 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 12:22:54.841843 | NULL | utf8mb4_general_ci | 0 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| titles | oceanbase | 7 | COMPACT | 0 | 0 | 0 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 12:22:54.868438 | NULL | utf8mb4_general_ci | 0 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

+----------------------+-----------+---------+------------+------+----------------+-------------+-----------------+--------------+-----------+----------------+----------------------------+----------------------------+------------+--------------------+----------+-------------------------------------------------------------------------------------------------------------------------------------------------+---------+

8 rows in set (0.017 sec)

6.4.2 Merge操作

做一次merge,当 __all_zone的info由"MERGING"变成"IDLE",请再次查看统计信息。

[admin@RHOB-DB01 dump]$ obclient -h 192.168.117.171 -u root -P 2881 -p 'rO0t&123' -c -A

Enter password:

(root@192.168.117.171) [(none)]>alter system major freeze ;

Query OK, 0 rows affected (0.017 sec)

(root@192.168.117.171) [oceanbase]>SELECT * FROM __all_zone WHERE name LIKE '%merge%' order by zone,gmt_modified;

+----------------------------+----------------------------+-------+---------------------+------------------+------+

| gmt_create | gmt_modified | zone | name | value | info |

+----------------------------+----------------------------+-------+---------------------+------------------+------+

| 2022-03-05 11:13:49.689616 | 2022-03-11 22:13:49.689616 | | is_merge_error | 0 | |

| 2022-03-05 11:13:49.688542 | 2022-03-11 22:57:16.927474 | | last_merged_version | 8 | |

| 2022-03-05 11:13:49.689616 | 2022-03-11 22:57:16.928564 | | merge_status | 0 | IDLE |

| 2022-03-05 11:13:49.692821 | 2022-03-11 22:54:28.891675 | zone1 | merge_start_time | 1646204068889931 | |

| 2022-03-05 11:13:49.691751 | 2022-03-11 22:56:53.272072 | zone1 | last_merged_version | 8 | |

| 2022-03-05 11:13:49.692821 | 2022-03-11 22:56:53.273151 | zone1 | last_merged_time | 1646204213250915 | |

| 2022-03-05 11:13:49.692821 | 2022-03-11 22:56:53.274230 | zone1 | all_merged_version | 8 | |

| 2022-03-05 11:13:49.692821 | 2022-03-11 22:56:53.274230 | zone1 | is_merge_timeout | 0 | |

| 2022-03-05 11:13:49.693894 | 2022-03-11 22:56:53.276819 | zone1 | merge_status | 0 | IDLE |

| 2022-03-05 11:13:49.697125 | 2022-03-11 22:54:28.906440 | zone2 | merge_start_time | 1646204068905678 | |

| 2022-03-05 11:13:49.696053 | 2022-03-11 22:57:10.550568 | zone2 | last_merged_version | 8 | |

| 2022-03-05 11:13:49.697125 | 2022-03-11 22:57:10.551645 | zone2 | is_merge_timeout | 0 | |

| 2022-03-05 11:13:49.696053 | 2022-03-11 22:57:10.551645 | zone2 | last_merged_time | 1646204230544629 | |

| 2022-03-05 11:13:49.696053 | 2022-03-11 22:57:10.552718 | zone2 | all_merged_version | 8 | |

| 2022-03-05 11:13:49.697125 | 2022-03-11 22:57:10.553854 | zone2 | merge_status | 0 | IDLE |

| 2022-03-05 11:13:49.700363 | 2022-03-11 22:54:28.920231 | zone3 | merge_start_time | 1646204068918732 | |

| 2022-03-05 11:13:49.699286 | 2022-03-11 22:57:16.747699 | zone3 | last_merged_version | 8 | |

| 2022-03-05 11:13:49.700363 | 2022-03-11 22:57:16.748771 | zone3 | all_merged_version | 8 | |

| 2022-03-05 11:13:49.700363 | 2022-03-11 22:57:16.748771 | zone3 | is_merge_timeout | 0 | |

| 2022-03-05 11:13:49.699286 | 2022-03-11 22:57:16.748771 | zone3 | last_merged_time | 1646204236747113 | |

| 2022-03-05 11:13:49.701450 | 2022-03-11 22:57:16.749839 | zone3 | merge_status | 0 | IDLE |

+----------------------------+----------------------------+-------+---------------------+------------------+------+

21 rows in set (0.012 sec)

6.4.3 再次检查统计信息

再次查看统计信息。Rows为NULL的对象可能是视图。

(root@192.168.117.171) [employees2]>show table status;

+----------------------+-----------+---------+------------+---------+----------------+-------------+-----------------+--------------+-----------+----------------+----------------------------+----------------------------+------------+--------------------+------------+-------------------------------------------------------------------------------------------------------------------------------------------------+---------+

| Name | Engine | Version | Row_format | Rows | Avg_row_length | Data_length | Max_data_length | Index_length | Data_free | Auto_increment | Create_time | Update_time | Check_time | Collation | Checksum | Create_options | Comment |

+----------------------+-----------+---------+------------+---------+----------------+-------------+-----------------+--------------+-----------+----------------+----------------------------+----------------------------+------------+--------------------+------------+-------------------------------------------------------------------------------------------------------------------------------------------------+---------+

| current_dept_emp | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | VIEW |

| departments | oceanbase | 8 | COMPACT | 9 | 142 | 1280 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 14:56:50.238230 | NULL | utf8mb4_general_ci | 3476010086 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| dept_emp | oceanbase | 8 | COMPACT | 331603 | 13 | 4518356 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 14:56:18.987714 | NULL | utf8mb4_general_ci | 1779699842 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| dept_emp_latest_date | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | VIEW |

| dept_manager | oceanbase | 8 | COMPACT | 24 | 59 | 1417 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 14:56:22.120036 | NULL | utf8mb4_general_ci | 1637053343 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| employees | oceanbase | 8 | COMPACT | 300024 | 28 | 8492426 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 14:56:50.187785 | NULL | utf8mb4_general_ci | 4042172956 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| salaries | oceanbase | 8 | COMPACT | 2844047 | 12 | 35166278 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 14:56:17.696830 | NULL | utf8mb4_general_ci | 754971188 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

| titles | oceanbase | 8 | COMPACT | 443308 | 11 | 5008940 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 2022-03-11 14:56:22.903927 | NULL | utf8mb4_general_ci | 87649341 | ROW_FORMAT = COMPACT COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0 | |

+----------------------+-----------+---------+------------+---------+----------------+-------------+-----------------+--------------+-----------+----------------+----------------------------+----------------------------+------------+--------------------+------------+-------------------------------------------------------------------------------------------------------------------------------------------------+---------+

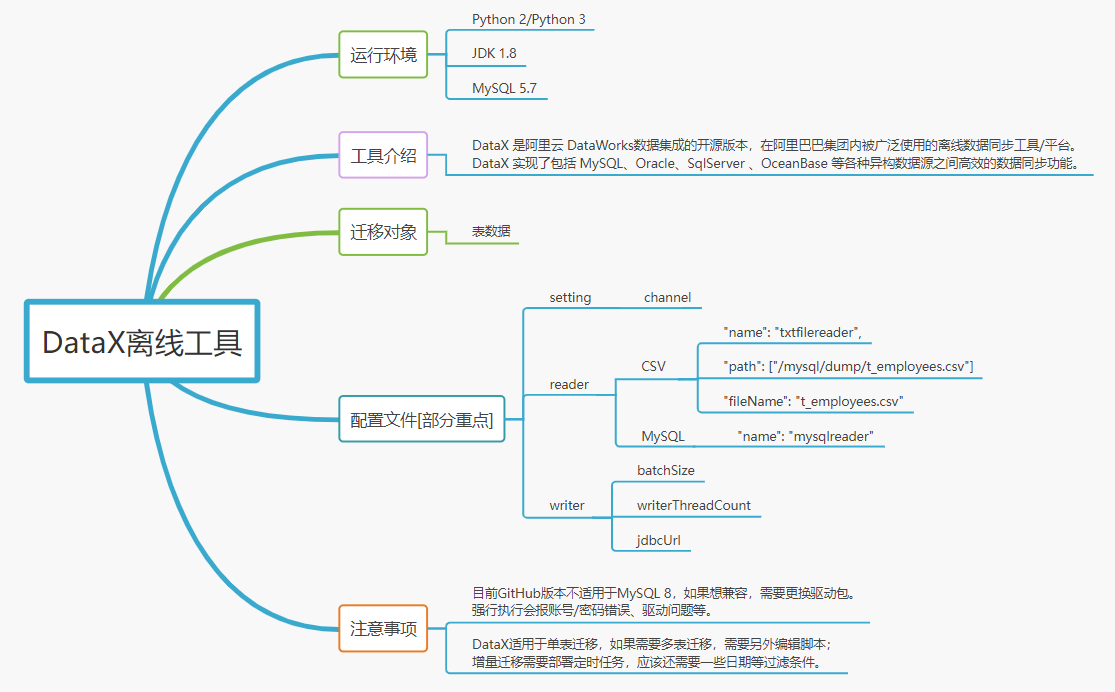

七. DataX

DataX 是阿里云 DataWorks数据集成的开源版本,在阿里巴巴集团内被广泛使用的离线数据同步工具/平台。

目前常规操作只能支持单表,需要结合脚本和定是任务可以实现多表迁移和增量迁移,这在易用性方面有待提升。

7.1 部署DataX

这是一款离线迁移工具,结合了Python和Java共同开发。

7.1.1 检查Python环境

[admin@RHOB-DB01 ~]$ python -V Python 2.7.5

7.1.2 检查Java环境

[admin@RHOB-DB01 ~]$ java -version openjdk version "1.8.0_181" OpenJDK Runtime Environment (build 1.8.0_181-b13) OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

7.1.3 解压DataX

[root@RHOB-DB01 DM]# pwd /software/DM [root@RHOB-DB01 ~]# tar -zxvf datax.tar.gz [root@RHOB-DB01 ~]# chown -R admin:admin /software/DM/datax [root@RHOB-DB00 DM]# pwd /software/DM [root@RHOB-DB00 DM]# tar -zxvf datax.tar.gz [root@RHOB-DB00 DM]# cd datax/ [root@RHOB-DB00 datax]# ll total 4 drwxr-xr-x 2 502 games 118 Mar 7 22:03 bin drwxr-xr-x 2 502 games 136 Mar 7 22:02 conf drwxr-xr-x 2 502 games 40 Mar 7 22:02 job drwxr-xr-x 2 502 games 4096 Mar 7 22:02 lib drwxr-xr-x 4 502 games 42 Mar 7 22:02 log drwxr-xr-x 4 502 games 42 Mar 7 22:02 log_perf drwxr-xr-x 4 502 games 66 Mar 7 22:02 plugin drwxr-xr-x 2 502 games 42 Mar 7 22:02 script drwxr-xr-x 2 502 games 44 Oct 12 2019 tmp

7.1.4 删除隐藏文件

# 删除datax中的隐藏文件

[root@RHOB-DB00 DM]# find ./datax/plugin -name ".*" | xargs rm -f

7.2 DataX迁移测试

DataX 以任务的形式迁移数据。每个任务只处理一个表,每个任务有一个 json 格式的配置文件,配置文件里会包含 reader 和 writer 两节。具体的 reader 和 writer 都是 DataX 支持的数据库插件,可以随意搭配使用。

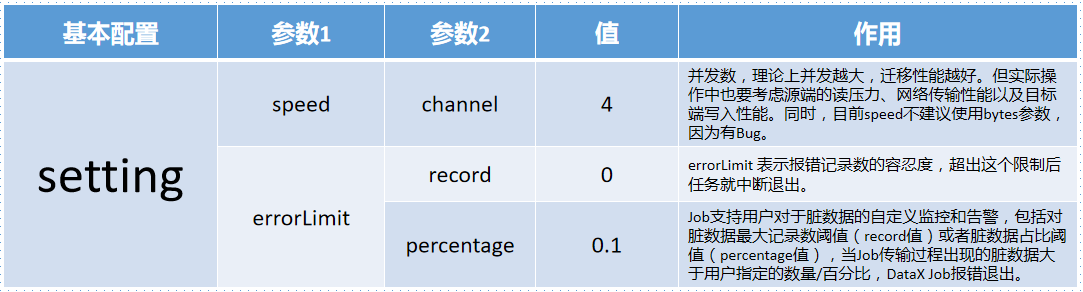

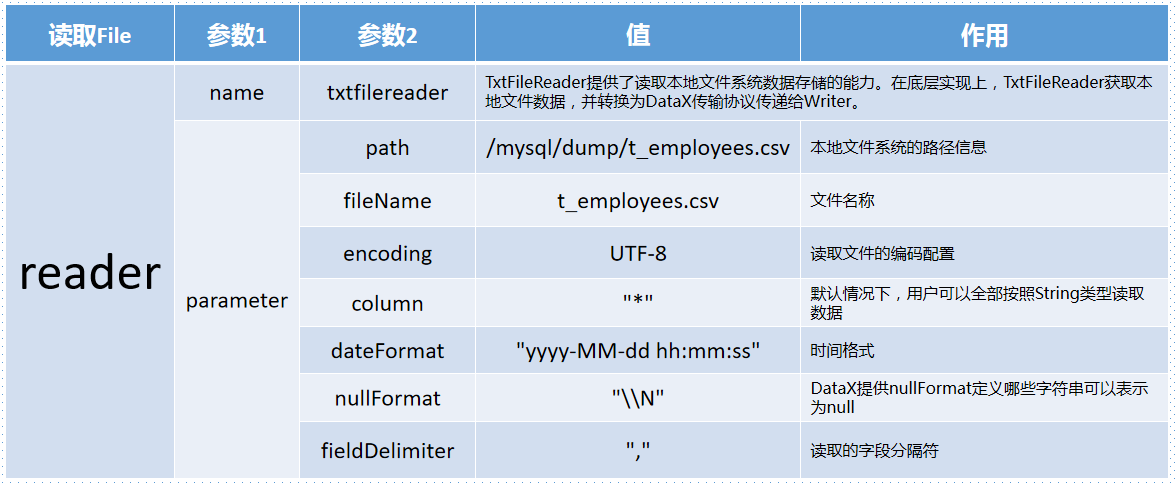

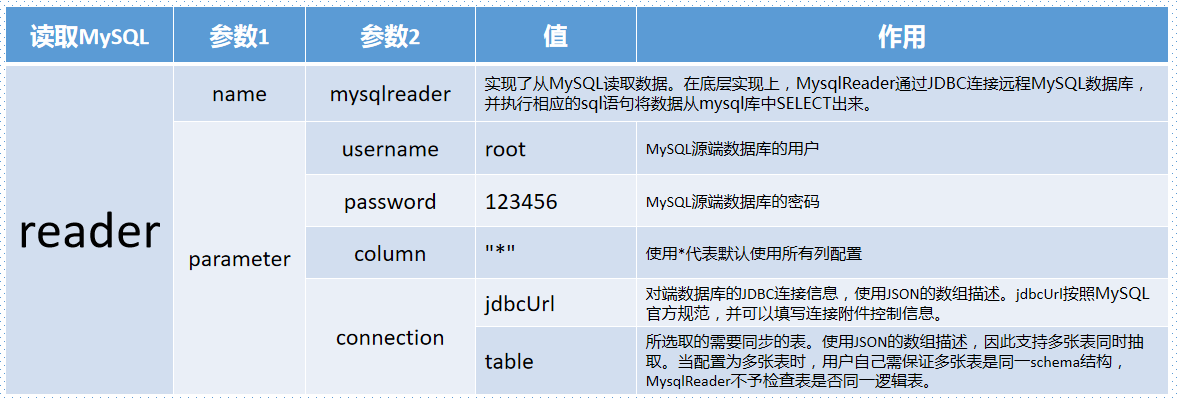

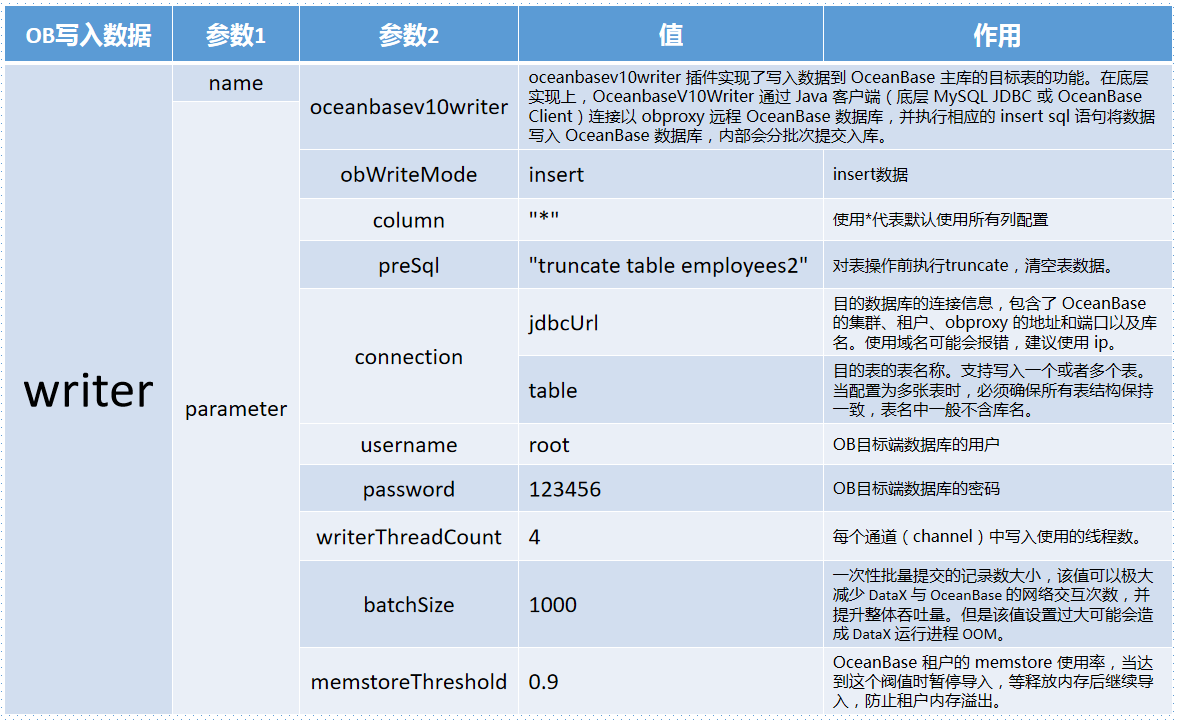

配置说明

-

setting 配置

-

CSV/TXT Reader 配置

-

MySQL Reader 配置

-

OceanBase Writer 配置

7.2.1 CSV导入OceanBase

将employees导出employee CSV表导入到employees的employee2表中。

7.2.1.1 配置文件

[root@RHOB-DB00 datax]# cat ./job/csv2ob_v1.json

{

"job": {

"setting": {

"speed": {

"channel": 4

},

"errorLimit": {

"record": 0,

"percentage": 0.1

}

},

"content": [

{

"reader": {

"name": "txtfilereader",

"parameter": {

"path": ["/mysql/dump/t_employees.csv"],

"fileName": "t_employees.csv",

"encoding": "UTF-8",

"column": ["*"],

"dateFormat": "yyyy-MM-dd hh:mm:ss" ,

"nullFormat": "\\N" ,

"fieldDelimiter": ","

}

},

"writer": {

"name": "oceanbasev10writer",

"parameter": {

"obWriteMode": "insert",

"column": [

"*"

],

"preSql": [

"truncate table employees2"

],

"connection": [

{

"jdbcUrl": "||_dsc_ob10_dsc_||obdemo:mys_tpcc||_dsc_ob10_dsc_||jdbc:oceanbase://192.168.117.171:2883/employees?useLocalSessionState=true&allowBatch=true&allowMultiQueries=true&rewriteBatchedStatements=true",

"table": [

"employees2"

]

}

],

"username": "root",

"password":"123456",

"writerThreadCount":4,

"batchSize": 1000,

"memstoreThreshold": "0.9"

}

}

}

]

}

}

7.2.1.2 导入CSV

导入成功!

[root@RHOB-DB00 datax]# python ./bin/datax.py ./job/csv2ob_v1.json ... 2022-03-11 01:06:09.958 [job-0] INFO JobContainer - PerfTrace not enable! 2022-03-11 01:06:09.958 [job-0] INFO StandAloneJobContainerCommunicator - Total 300024 records, 12021849 bytes | Speed 1.15MB/s, 30002 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 6.487s | All Task WaitReaderTime 0.571s | Percentage 100.00% 2022-03-11 01:06:09.959 [job-0] INFO JobContainer - 任务启动时刻 : 2022-03-11 01:05:59 任务结束时刻 : 2022-03-11 01:06:09 任务总计耗时 : 10s 任务平均流量 : 1.15MB/s 记录写入速度 : 30002rec/s 读出记录总数 : 300024 读写失败总数 : 0

7.2.1.3 检查数据量

源表与目标表数据量一致。

MySQL [employees]> select count(*) from employees2;

+----------+

| count(*) |

+----------+

| 300024 |

+----------+

MySQL [employees]> select count(*) from employees;

+----------+

| count(*) |

+----------+

| 300024 |

+----------+

7.2.2 MySQL直接迁移OceanBase

7.2.2.1 配置文件

[root@RHOB-DB00 datax]# pwd

/software/DM/datax

[root@RHOB-DB00 datax]# vim ./job/mysql2ob_v1.json

{

"job": {

"setting": {

"speed": {

"channel": 4

},

"errorLimit": {

"record": 0,

"percentage": 0.1

}

},

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"column": [

"*"

],

"connection": [

{

"table": [

"item"

],

"jdbcUrl": ["jdbc:mysql://192.168.117.170:3306/tpcc10?useUnicode=true&characterEncoding=utf8"]

}

]

}

},

"writer": {

"name": "oceanbasev10writer",

"parameter": {

"obWriteMode": "insert",

"column": [

"*"

],

"preSql": [

"truncate table item"

],

"connection": [

{

"jdbcUrl": "||_dsc_ob10_dsc_||obdemo:mys_tpcc||_dsc_ob10_dsc_||jdbc:oceanbase://192.168.117.171:2883/tpcc10?useLocalSessionState=true&allowBatch=true&allowMultiQueries=true&rewriteBatchedStatements=true",

"table": [

"item"

]

}

],

"username": "root",

"password":"123456",

"writerThreadCount":4,

"batchSize": 1000,

"memstoreThreshold": "0.9"

}

}

}

]

}

}

开始执行直接迁移,迁移成功!

[root@RHOB-DB00 datax]# python ./bin/datax.py ./job/mysql2ob_v1.json DataX (DATAX-OPENSOURCE-3.0), From Alibaba ! Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved. 2022-03-10 00:21:52.992 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl 2022-03-10 00:21:53.000 [main] INFO Engine - the machine info => ... ... [total cpu info] => averageCpu | maxDeltaCpu | minDeltaCpu -1.00% | -1.00% | -1.00% [total gc info] => NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s PS Scavenge | 2 | 2 | 2 | 0.083s | 0.083s | 0.083s 2022-03-10 00:22:03.933 [job-0] INFO JobContainer - PerfTrace not enable! 2022-03-10 00:22:03.934 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 7065124 bytes | Speed 689.95KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 1.780s | All Task WaitReaderTime 0.297s | Percentage 100.00% 2022-03-10 00:22:03.935 [job-0] INFO JobContainer - 任务启动时刻 : 2022-03-10 00:21:53 任务结束时刻 : 2022-03-10 00:22:03 任务总计耗时 : 10s 任务平均流量 : 689.95KB/s 记录写入速度 : 10000rec/s 读出记录总数 : 100000 读写失败总数 : 0

八. 总结 和 思考

8.1 工具总结

- OB-MySQL source脚本,可以考虑过滤一些无用的信息,并且补充能够自动识别视图语句。

- 个人感觉OBDUMPER&OBLOADER应该在并行度、日志输出、执行单元、数据压缩、易操作性加大研发力度,让用户更好记住这款能够对标Oracle数据泵的工具。

- DataX目前兼容多种数据库,但只能进行离线单表迁移,如果要完善,可能要在多表迁移和增量迁移上下功夫。

总体感觉:OB的这几款工具比较容易上手,适合新人学习!

8.2 一些思考

数据迁移是一个临时性、重复性较高的工作。用户们都希望迁移工具能在短时间内实现一次完整迁移,并且在迁移完毕后,可以一键进行数据校验,为UAT提前做好充分准备。

整个过程行如流水,而不是定制化研发工具内部的问题。目前OceanBase的迁移工具确实比较多,但有些功能显得同质化。

也许是小编使用的场景比较少,但小编认为随着OB的日益强大,有必要将它们逐渐整合两款能够对标Oracle的数据泵(离线)和Goldengate(实时)的产品。原因有二 ,一是学习成本降低,研发和用户都能更加专注;二是爆款产品传播效应快,用户记忆深!

以上仅针对开源产品,之前有幸在国产数据库工作过一段时间,小编亲身体会到国产数据库商业产品(迁移工具)的易用性和高效性,时隔几年,越发期待!!!

虽然目前国产数据库在全面超越国外数据库仍然有一些差距,但攀登高峰是一种信念,虽然艰难,但只有在更高的峰顶,才能看到更美的风景。国产数据库,Fighting !