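In our production environment we did not deploy Pulsar on Kubernetes. BookKeeper needs persistent storage, and we currently have no good PersistentVolume option, so we deploy Pulsar from the binary distribution and manage it with SaltStack.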

I. Preparation

Hardware:

| Hostname | IP | Roles |
| --- | --- | --- |
| temp-pulsar-test01 | 10.25.243.186 | zookeeper/bookie/broker |
| temp-pulsar-test02 | 10.25.109.7 | zookeeper/bookie/broker |
| temp-pulsar-test03 | 10.25.237.10 | zookeeper/bookie/broker |

Software:

```
salt-minion
java
pulsar 2.8.1
```

Install the salt-minion agent on all three hosts with the following script:

```bash
#!/bin/bash
set -e

MASTER='10.xx.xx.xx'

# Install the SaltStack py3 repository if it is not present yet
if [ -z "$(rpm -qa | grep salt-py3-repo-latest)" ]; then
    sudo yum install https://repo.saltstack.com/py3/redhat/salt-py3-repo-latest.el7.noarch.rpm -y
fi

# Install salt-minion if it is not present yet
if [ -z "$(rpm -qa | grep minion)" ]; then
    sudo yum install salt-minion -y
fi

ID=$(hostname)
# sed -i 's/#id:/id: '"${ID}"'/' /etc/salt/minion

# Point the minion at the master
sed -i 's/#master: salt/master: '"${MASTER}"'/' /etc/salt/minion

systemctl enable salt-minion
systemctl start salt-minion
echo "Done!"
exit 0
```
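The `sed` line above only rewrites the stock `#master: salt` comment, so it changes nothing if the minion config already has an active `master:` line pointing somewhere else. A minimal idempotent sketch, assuming GNU `sed`; the helper name `ensure_master` is hypothetical. It handles the commented default, an existing `master:` line, or a file with no master line at all:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set "master: <addr>" in a salt minion config, idempotently.
ensure_master() {
  local conf="$1" master="$2"
  if grep -Eq '^[[:space:]]*#?[[:space:]]*master:' "$conf"; then
    # GNU sed idiom: rewrite only the FIRST commented or active "master:" line.
    # "master_port:" etc. do not match because "master" must be followed by ":".
    sed -i -E "0,/^[[:space:]]*#?[[:space:]]*master:.*/s//master: ${master}/" "$conf"
  else
    # No master line at all: append one.
    printf 'master: %s\n' "$master" >> "$conf"
  fi
}
```

On a minion this would replace the `sed` call, e.g. `ensure_master /etc/salt/minion "${MASTER}"`, followed by `systemctl restart salt-minion` so the running minion picks up the change.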

Once the minions are installed, accept the three keys on the master:

```
# salt-key -a temp-pulsar-test01
The following keys are going to be accepted:
Unaccepted Keys:
temp-pulsar-test01
Proceed? [n/Y] y
Key for minion temp-pulsar-test01 accepted.
[root@cmdb-pro states]# salt-key -A
The following keys are going to be accepted:
Unaccepted Keys:
temp-pulsar-test02
temp-pulsar-test03
Proceed? [n/Y] y
Key for minion temp-pulsar-test02 accepted.
Key for minion temp-pulsar-test03 accepted.
```

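This shows both ways of accepting keys: a single host (`-a`) and all pending keys at once (`-A`). Once the master manages all three nodes, group them into a nodegroup named `pulsar-281-test`: find `nodegroups` in the master configuration file and add the following line: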

```yaml
nodegroups:
  # ...
  pulsar-281-test: L@temp-pulsar-test01,temp-pulsar-test02,temp-pulsar-test03
```
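Typing minion IDs by hand is easy to get wrong. A small sketch of a hypothetical helper that builds the `L@` (list matcher) entry from the IDs:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a nodegroup line "  <group>: L@id1,id2,..."
nodegroup_entry() {
  local group="$1"; shift
  if [ "$#" -eq 0 ]; then
    echo "nodegroup_entry: no minion IDs given" >&2
    return 1
  fi
  local IFS=','          # "$*" joins the remaining arguments with commas
  printf '  %s: L@%s\n' "$group" "$*"
}
```

With bash brace expansion, `nodegroup_entry pulsar-281-test temp-pulsar-test0{1..3}` prints the line used above.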

After adding it, run a command to verify that the group works:

```
# salt -N pulsar-281-test test.ping -v
Executing job with jid 20211008030018213478
-------------------------------------------

temp-pulsar-test02:
    True
temp-pulsar-test03:
    True
temp-pulsar-test01:
    True
```

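`salt -N` targets a nodegroup, and `test.ping` checks each minion in it. All three hosts returned `True`, which shows that the group is defined correctly and the network path is working.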
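With more hosts, reading this output by eye stops scaling. A sketch that assumes the default nested output format shown above (minion ID on one line, its return value indented on the next) and fails unless every expected minion returned `True`; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read test.ping output on stdin; succeed only if every
# minion named as an argument answered "True".
all_minions_true() {
  local out m rc=0
  out="$(cat)"
  for m in "$@"; do
    # The result is printed on the line right after "<minion>:".
    if ! printf '%s\n' "$out" | awk -v want="$m:" '
        prev == want { line = $0; gsub(/^[ \t]+|[ \t]+$/, "", line); if (line == "True") found = 1 }
        { prev = $0 }
        END { exit (found ? 0 : 1) }'; then
      echo "no True from $m" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Example: `salt -N pulsar-281-test test.ping | all_minions_true temp-pulsar-test0{1..3}`.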

Download the Pulsar 2.8.1 binary package into the corresponding directory on the master; here we use `/srv/salt/pulsar/binary`:

```bash
wget -c https://apache.website-solution.net/pulsar/pulsar-2.8.1/apache-pulsar-2.8.1-bin.tar.gz
```
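Before the master hands this tarball to the minions, it is worth checking its integrity. Apache projects normally publish a `.sha512` file next to each release artifact (assumed here; its layout varies, so copy the hex digest out of it by hand). A minimal sketch with a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the file's SHA-512 equals the expected digest.
verify_sha512() {
  local file="$1" expected="$2" actual
  actual="$(sha512sum "$file" | awk '{print $1}')"
  # Compare case-insensitively: some checksum files use upper-case hex.
  [ -n "$actual" ] &&
    [ "$(printf '%s' "$actual" | tr 'A-F' 'a-f')" = "$(printf '%s' "$expected" | tr 'A-F' 'a-f')" ]
}
```

Example: `verify_sha512 apache-pulsar-2.8.1-bin.tar.gz '<digest from the .sha512 file>' && echo OK`.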

The basic environment is now ready.

II. Writing the sls file

`pulsar_281_test.sls` contains the following:

```yaml
{% set current_path = salt['environ.get']('PATH', '/bin:/usr/bin') %}
{% set init_node = 'temp-pulsar-test01' %}
{% set zkServers = '10.25.243.186:2181,10.25.109.7:2181,10.25.237.10:2181' %}
{% set webServiceURL = 'http://10.25.243.186:8080,10.25.109.7:8080,10.25.237.10:8080' %}
{% set brokerServiceURL = 'pulsar://10.25.243.186:6650,10.25.109.7:6650,10.25.237.10:6650' %}
{% set cluster_name = 'p281_test' %}

# Copy the release tarball from the master
pulsar_binary:
  file.managed:
    - name: /tmp/apache-pulsar-2.8.1-bin.tar.gz
    - unless: test -e /tmp/apache-pulsar-2.8.1-bin.tar.gz
    - user: root
    - group: root
    - makedirs: True
    - source: salt://pulsar/binary/apache-pulsar-2.8.1-bin.tar.gz

# Unpack it into /opt/Apps/pulsar (skipped once that directory exists)
pulsar_extract:
  cmd.run:
    - cwd: /tmp
    - names:
      - tar zxf apache-pulsar-2.8.1-bin.tar.gz
      - mv apache-pulsar-2.8.1 /opt/Apps/pulsar && chown -R dominos-op. /opt/Apps/pulsar
    - unless: test -d /opt/Apps/pulsar
    - require:
      - file: pulsar_binary

# Sync helper scripts and auth material, rendered through Jinja
sync_scripts:
  file.recurse:
    - name: /opt/Apps/pulsar/scripts
    - user: dominos-op
    - group: dominos-op
    - clean: True
    - dir_mode: 0775
    - file_mode: '0777'
    - template: jinja
    - source: salt://pulsar/scripts
    - include_empty: True

sync_certs:
  file.recurse:
    - name: /opt/Apps/pulsar/certs
    - user: dominos-op
    - group: dominos-op
    - clean: True
    - dir_mode: 0775
    - file_mode: '0777'
    - template: jinja
    - source: salt://pulsar/certs
    - include_empty: True

# Render the config templates; "defaults" supplies the Jinja variables
bookie-conf:
  file.managed:
    - name: /opt/Apps/pulsar/conf/bookkeeper.conf
    - source: salt://pulsar/binary/apache-pulsar-2.8.1/conf/bookkeeper.conf
    - user: dominos-op
    - group: dominos-op
    - mode: 644
    - template: jinja
    - defaults:
        journalDir: /opt/Apps/pulsar/data/bookkeeper/journal
        zkServers: {{ zkServers }}
        ledgerDir: /opt/Apps/pulsar/data/bookkeeper/ledgers
        premetheusPort: '8888'

broker-conf:
  file.managed:
    - name: /opt/Apps/pulsar/conf/broker.conf
    - source: salt://pulsar/binary/apache-pulsar-2.8.1/conf/broker.conf
    - user: dominos-op
    - group: dominos-op
    - mode: 644
    - template: jinja
    - defaults:
        zkServers: {{ zkServers }}
        premetheusPort: '8888'
        clusterName: {{ cluster_name }}
        tokenSecretKey: 'file:///opt/Apps/pulsar/certs/admin.key'
        brokerClientAuthenticationPlugin: 'org.apache.pulsar.client.impl.auth.AuthenticationToken'
        brokerClientAuthenticationParameters: 'token:eyJhbGciOiJIUzI1NiJ9.*.xMdFuLhKyqhDOainBIr3yGIKU9WgHlW4-NXHuWnro4o'
        superUserRoles: 'admin'
        authParams: 'file:///opt/Apps/pulsar/certs/admin.token'

zookeeper-conf:
  file.managed:
    - name: /opt/Apps/pulsar/conf/zookeeper.conf
    - source: salt://pulsar/binary/apache-pulsar-2.8.1/conf/zookeeper.conf
    - user: dominos-op
    - group: dominos-op
    - mode: 644
    - template: jinja
    - defaults:
        zk_node1: '10.25.243.186'
        zk_node2: '10.25.109.7'
        zk_node3: '10.25.237.10'
        zk_data_dir: data/pulsar/zookeeper/

client-conf:
  file.managed:
    - name: /opt/Apps/pulsar/conf/client.conf
    - source: salt://pulsar/binary/apache-pulsar-2.8.1/conf/client.conf
    - user: dominos-op
    - group: dominos-op
    - mode: 644
    - template: jinja
    - defaults:
        authPlugin: 'org.apache.pulsar.client.impl.auth.AuthenticationToken'
        authParams: 'file:///opt/Apps/pulsar/certs/admin.token'

# Write this node's ZooKeeper myid from the p281_zk_ids pillar
{% for node, id in pillar.get('p281_zk_ids', {}).items() %}
{% if grains['nodename'] == node %}
{{ node }}:
  cmd.run:
    - cwd: /opt/Apps/pulsar
    - names:
      - mkdir -p data/pulsar/zookeeper
      - echo {{ id }} > data/pulsar/zookeeper/myid && chown -R dominos-op. data/pulsar
    - unless: test -e data/pulsar/zookeeper/myid
{% endif %}
{% endfor %}

{% for node, id in pillar.get('p281_zk_ids', {}).items() %}
start_zookeeper_{{ id }}:
  cmd.script:
    - names:
      - /opt/Apps/pulsar/scripts/start_zookeeper.sh
    - runas: dominos-op
    - success_retcodes: 1
    - env:
      - PATH: {{ [current_path, '/usr/local/java/jdk1.8.0_112/bin']|join(':') }}
{% endfor %}

# Initialize cluster metadata once, on the init node only
{% if grains['nodename'] == init_node %}
init-pulsar-cluster:
  cmd.run:
    - cwd: /opt/Apps/pulsar
    - names:
      - bin/pulsar initialize-cluster-metadata --cluster {{ cluster_name }} --zookeeper {{ zkServers }} --configuration-store {{ zkServers }} --web-service-url {{ webServiceURL }} --broker-service-url {{ brokerServiceURL }} && touch init.lock && chown -R dominos-op. init.lock
    - runas: dominos-op
    - unless: test -e /opt/Apps/pulsar/init.lock
{% endif %}

{% for node, id in pillar.get('p281_zk_ids', {}).items() %}
start_bookie_{{ id }}:
  cmd.script:
    - names:
      - /opt/Apps/pulsar/scripts/start_bookie.sh
    - runas: dominos-op
    - success_retcodes: 1
    - env:
      - PATH: {{ [current_path, '/usr/local/java/jdk1.8.0_112/bin']|join(':') }}
{% endfor %}

{% for node, id in pillar.get('p281_zk_ids', {}).items() %}
start_broker_{{ id }}:
  cmd.script:
    - names:
      - /opt/Apps/pulsar/scripts/start_broker.sh
    - runas: dominos-op
    - success_retcodes: 1
    - env:
      - PATH: {{ [current_path, '/usr/local/java/jdk1.8.0_112/bin']|join(':') }}
{% endfor %}
```
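The three endpoint strings at the top of the sls repeat the same IPs with different ports, and only the first entry carries the scheme (`http://`, `pulsar://`). A sketch of a hypothetical helper that derives all three from one IP list, so adding a node means editing a single place:

```bash
#!/usr/bin/env bash
# Hypothetical helper: join_endpoints <scheme-or-empty> <port> <ip>...
# Prints "<scheme>ip1:port,ip2:port,..." (scheme only once, at the front).
join_endpoints() {
  local scheme="$1" port="$2"; shift 2
  local out="" ip
  for ip in "$@"; do
    out+="${out:+,}${ip}:${port}"   # add a comma before every entry but the first
  done
  printf '%s%s\n' "$scheme" "$out"
}
```

For example, `join_endpoints 'pulsar://' 6650 10.25.243.186 10.25.109.7 10.25.237.10` reproduces the `brokerServiceURL` value above.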

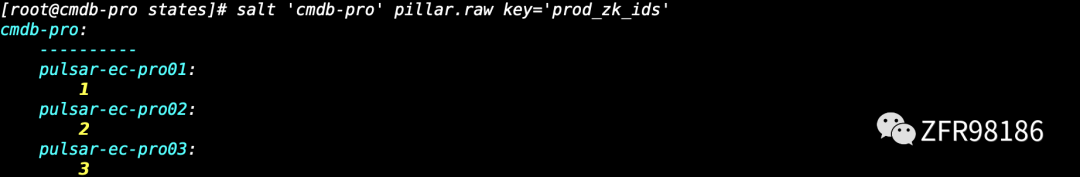

The file also uses pillar data, defined as follows:

```yaml
# cat p281_zk_ids/init.sls
p281_zk_ids:
  temp-pulsar-test01: 1
  temp-pulsar-test02: 2
  temp-pulsar-test03: 3
```
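Each node's `myid` (the pillar value the sls writes) must match that host's `server.N` index in `zookeeper.conf`. The rendered template is not shown here, so the layout below is an assumption: ZooKeeper's usual `server.N=host:2888:3888` form, pairing IDs 1, 2, 3 with the hosts in pillar order. A hypothetical helper that prints those lines for comparison:

```bash
#!/usr/bin/env bash
# Hypothetical helper: zk_server_lines id=ip ...
# 2888 (quorum) and 3888 (leader election) are ZooKeeper's conventional ports,
# assumed here rather than taken from the author's template.
zk_server_lines() {
  local pair id ip
  for pair in "$@"; do
    id="${pair%%=*}"   # text before the first "="
    ip="${pair#*=}"    # text after the first "="
    printf 'server.%s=%s:2888:3888\n' "$id" "$ip"
  done
}
```

Usage: `zk_server_lines 1=10.25.243.186 2=10.25.109.7 3=10.25.237.10`, then compare with `grep '^server\.' /opt/Apps/pulsar/conf/zookeeper.conf` on a node.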

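I won't go through every parameter here; see the official Salt documentation for details. I put all the steps in a single file, but you can also split it into three or four parts, for example installation, config sync, cluster initialization, and process startup, with one sls file each, which keeps things cleaner.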

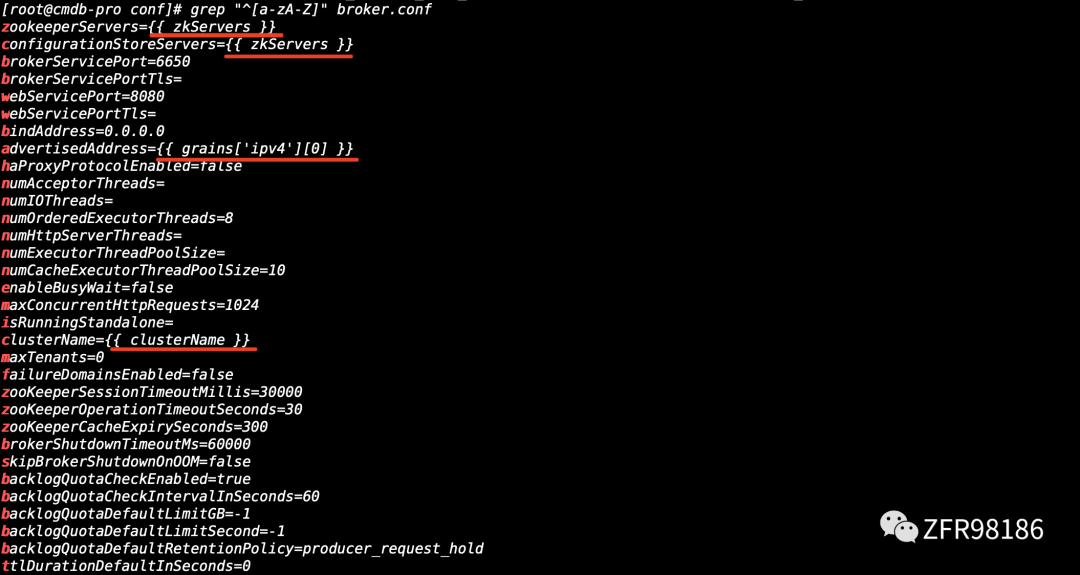

The sls relies on Jinja templates, which look roughly like this:

While debugging, you can work through the states step by step with:

```bash
salt -N pulsar-281-test state.sls pulsar.states.pulsar_281_test
```

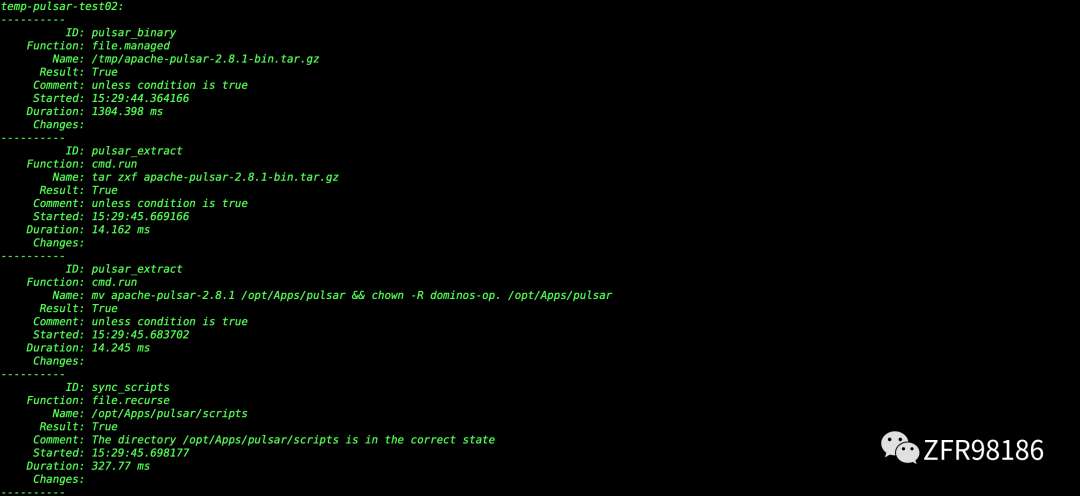

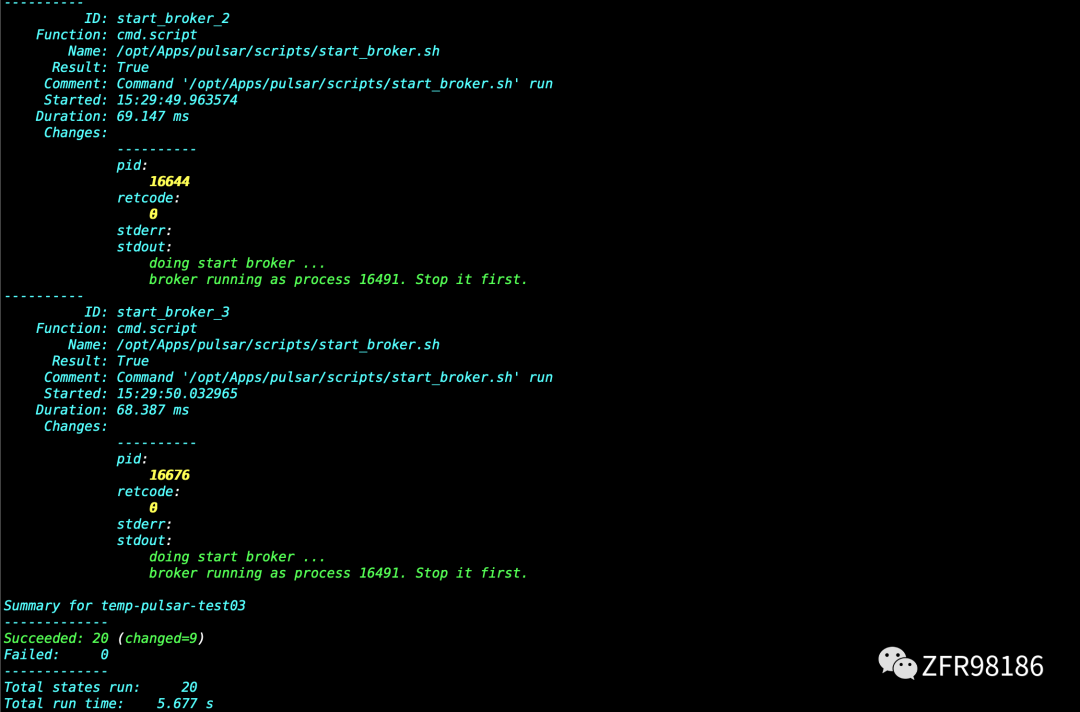

The run looks like this:

...(N more screenshots omitted)

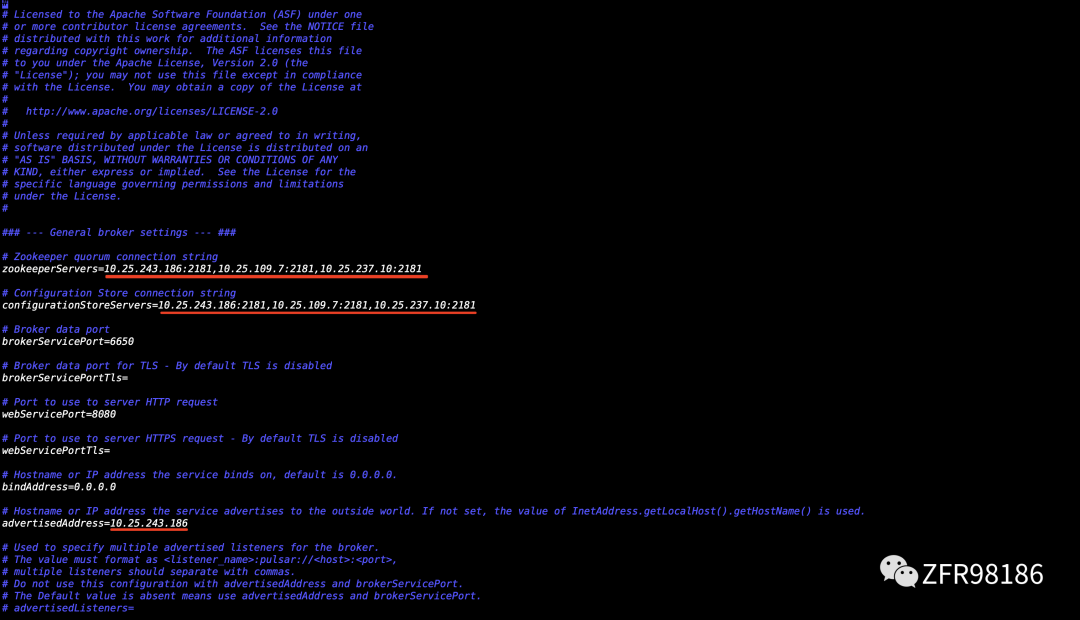
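While iterating on the sls, Salt's `test=True` argument to `state.sls` reports what each state would change without applying anything. A minimal wrapper sketch; the function name and the `SALT` override (point it at a stub to print the command instead of running it) are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: apply_pulsar_state <nodegroup> <sls> [--dry-run]
apply_pulsar_state() {
  local target="$1" sls="$2" mode="${3:-}"
  local -a args=(-N "$target" state.sls "$sls")
  if [ "$mode" = "--dry-run" ]; then
    args+=(test=True)   # Salt dry run: report changes, change nothing
  fi
  "${SALT:-salt}" "${args[@]}"
}
```

Usage: `apply_pulsar_state pulsar-281-test pulsar.states.pulsar_281_test --dry-run`. To re-apply a single state after editing one template, Salt also provides `state.sls_id`, e.g. `salt -N pulsar-281-test state.sls_id broker-conf pulsar.states.pulsar_281_test`.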

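The biggest benefit of managing Pulsar with Salt is that when only a config file changes, we don't have to log in to every machine and edit it; we edit the template stored on the Salt master and sync it to every machine. After syncing, the config file on a node looks like this: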

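The parts underlined in red are the parameters produced by rendering the Jinja template. Comparing them with the template shows that the values we defined were written into the config file.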
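To check rendered values without opening each file, here is a sketch of a hypothetical helper that prints the value of one `key=value` entry from a Pulsar `.conf` file, skipping comment lines (if a key appears twice, the last one is printed):

```bash
#!/usr/bin/env bash
# Hypothetical helper: conf_value <file> <key>; exits 1 if the key is absent.
conf_value() {
  local file="$1" key="$2"
  awk -v k="$key" '
    /^[ \t]*#/ { next }                          # skip comment lines
    {
      eq = index($0, "=")
      if (eq == 0) next                          # not a key=value line
      name = substr($0, 1, eq - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (name == k) { val = substr($0, eq + 1); found = 1 }
    }
    END { if (found) print val; exit (found ? 0 : 1) }' "$file"
}
```

Assuming the template maps `clusterName` to the broker key of the same name, `conf_value /opt/Apps/pulsar/conf/broker.conf clusterName` on a node should print `p281_test`.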

III. Checking cluster health

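After installation, we can query some cluster state to check that the cluster is healthy: list the brokers, then create a tenant, a namespace and a partitioned topic.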

```
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin brokers list p281_test
"10.25.237.10:8080"
"10.25.109.7:8080"
"10.25.243.186:8080"
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin tenants list
"public"
"pulsar"
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin tenants create apache
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin tenants list
"apache"
"public"
"pulsar"
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin namespaces create apache/pulsar
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin topics create-partitioned-topic apache/pulsar/test-topic -p 3
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin topics list-partitioned-topics apache/pulsar
"persistent://apache/pulsar/test-topic"
```

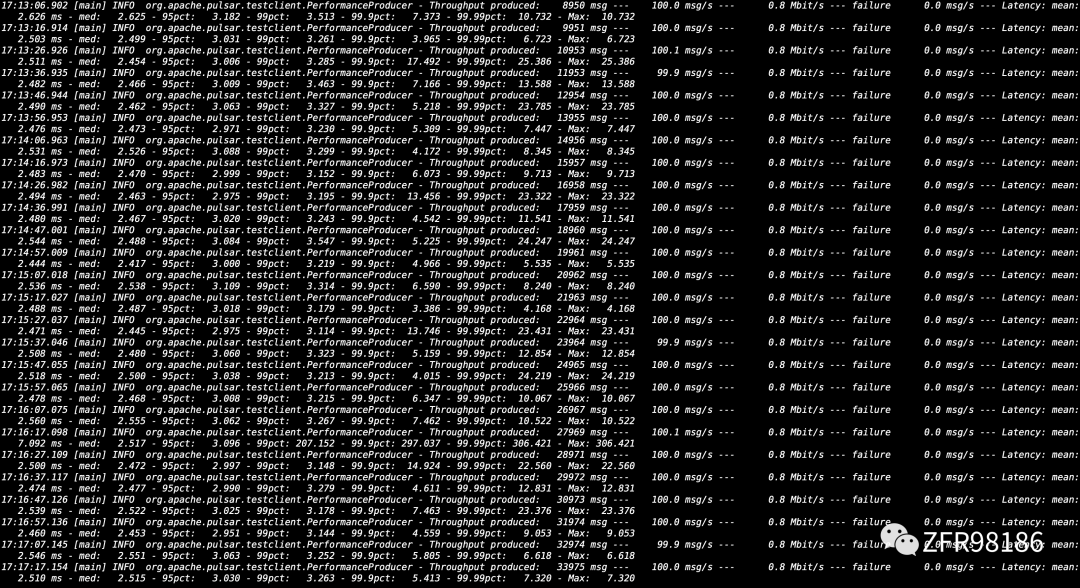

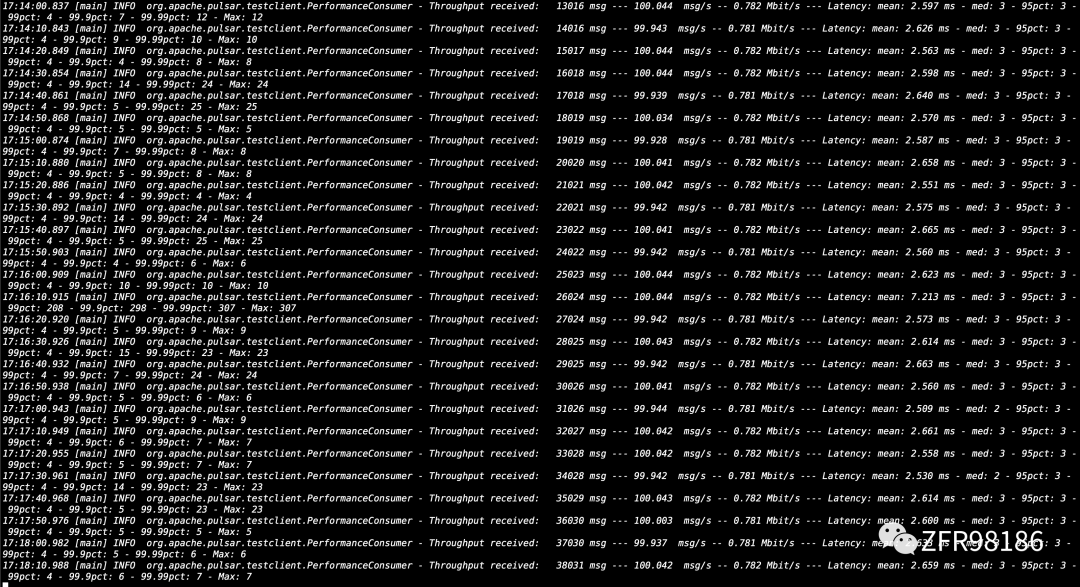
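The `brokers list` output above prints one quoted `host:port` per line. A sketch of a hypothetical check that every expected broker is registered, which could run after each deployment:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "pulsar-admin brokers list" output on stdin and
# fail if any broker given as an argument is missing.
brokers_present() {
  local listed b rc=0
  listed="$(tr -d '"' | tr -s ' \t' '\n')"   # strip quotes, one entry per line
  for b in "$@"; do
    if ! printf '%s\n' "$listed" | grep -qxF "$b"; then
      echo "broker missing: $b" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `bin/pulsar-admin brokers list p281_test | brokers_present 10.25.243.186:8080 10.25.109.7:8080 10.25.237.10:8080`.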

Next, we ran some load tests with Pulsar's bundled benchmarking tool, `pulsar-perf`.

Produce:

```bash
bin/pulsar-perf produce -t 100 apache/pulsar/test-topic
```

Consume:

```bash
bin/pulsar-perf consume -t 100 apache/pulsar/test-topic
```
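Both commands run until interrupted. A sketch that time-boxes a run by starting the consumer, then the producer, each under coreutils `timeout`. The function name, the `PULSAR_PERF` override and the duration argument are assumptions; `-t 100` is carried over unchanged from the commands above:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: run_perf <duration> <topic>
run_perf() {
  local duration="$1" topic="$2"
  local perf="${PULSAR_PERF:-bin/pulsar-perf}"
  local consumer_pid prod_rc cons_rc rc
  timeout "$duration" "$perf" consume -t 100 "$topic" &   # consumer in background
  consumer_pid=$!
  timeout "$duration" "$perf" produce -t 100 "$topic"     # producer in foreground
  prod_rc=$?
  wait "$consumer_pid"
  cons_rc=$?
  # timeout exits with 124 when it stopped the command at the deadline;
  # for a time-boxed run that is the expected outcome, so accept it.
  for rc in "$prod_rc" "$cons_rc"; do
    if [ "$rc" -ne 0 ] && [ "$rc" -ne 124 ]; then
      return "$rc"
    fi
  done
  return 0
}
```

Usage: `run_perf 300 apache/pulsar/test-topic` runs both sides for about five minutes.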

Load report:

```
[root@temp-pulsar-test01 pulsar]# bin/pulsar-admin broker-stats load-report
{
  "webServiceUrl" : "http://10.25.243.186:8080",
  "pulsarServiceUrl" : "pulsar://10.25.243.186:6650",
  "persistentTopicsEnabled" : true,
  "nonPersistentTopicsEnabled" : true,
  "cpu" : {
    "usage" : 34.57446272937882,
    "limit" : 400.0
  },
  "memory" : {
    "usage" : 170.15445709228516,
    "limit" : 2048.0
  },
  "directMemory" : {
    "usage" : 128.0,
    "limit" : 4096.0
  },
  "bandwidthIn" : {
    "usage" : 1499.8351535025583,
    "limit" : 5.1198976E7
  },
  "bandwidthOut" : {
    "usage" : 2746.993439473991,
    "limit" : 5.1198976E7
  },
  "msgThroughputIn" : 23671.923555448626,
  "msgThroughputOut" : 23671.92307029856,
  "msgRateIn" : 21.999928954877905,
  "msgRateOut" : 21.999928503994937,
  "lastUpdate" : 1633685246764,
  "lastStats" : {
    "apache/pulsar/0x80000000_0xc0000000" : {
      "msgRateIn" : 21.999928954877905,
      "msgThroughputIn" : 23671.923555448626,
      "msgRateOut" : 21.999928503994937,
      "msgThroughputOut" : 23671.92307029856,
      "consumerCount" : 22,
      "producerCount" : 22,
      "topics" : 23,
      "cacheSize" : 1076
    }
  },
  "numTopics" : 23,
  "numBundles" : 1,
  "numConsumers" : 22,
  "numProducers" : 22,
  "bundles" : [ "apache/pulsar/0x80000000_0xc0000000" ],
  "lastBundleGains" : [ ],
  "lastBundleLosses" : [ ],
  "brokerVersionString" : "2.8.1",
  "protocols" : { },
  "advertisedListeners" : { },
  "loadReportType" : "LocalBrokerData",
  "maxResourceUsage" : 0.08643615990877151,
  "bundleStats" : {
    "apache/pulsar/0x80000000_0xc0000000" : {
      "msgRateIn" : 21.999928954877905,
      "msgThroughputIn" : 23671.923555448626,
      "msgRateOut" : 21.999928503994937,
      "msgThroughputOut" : 23671.92307029856,
      "consumerCount" : 22,
      "producerCount" : 22,
      "topics" : 23,
      "cacheSize" : 1076
    }
  }
}
```
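`maxResourceUsage` gives a one-number summary of the report. In this output it appears to be the largest usage/limit ratio across the resources: CPU is 34.57 / 400 ≈ 0.0864, matching the reported value, while memory is 170.15 / 2048 ≈ 0.083. A sketch of a hypothetical check that extracts it with `sed` and `awk` (no `jq` needed) and compares it against a threshold:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a load-report JSON on stdin, print maxResourceUsage,
# and succeed only if it is below <threshold> (e.g. 0.8).
max_usage_below() {
  local threshold="$1" value
  value="$(sed -n 's/.*"maxResourceUsage"[[:space:]]*:[[:space:]]*\([0-9.eE+-]*\).*/\1/p' | head -n 1)"
  if [ -z "$value" ]; then
    echo "maxResourceUsage not found" >&2
    return 2
  fi
  echo "maxResourceUsage=$value"
  awk -v v="$value" -v t="$threshold" 'BEGIN { if (v + 0 < t + 0) exit 0; exit 1 }'
}
```

Usage: `bin/pulsar-admin broker-stats load-report | max_usage_below 0.8`.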

The cluster produces and consumes messages normally, so the deployment is complete.