
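First: why there were no updates for two days:

Two days ago I noticed that kubespray had already reached version 2.18, so I wanted to try the latest release and see what had changed. It made more sense to write up deployment with the latest version, and the research took long enough that the updates stalled.

Second: what you will learn from this article:

This article takes you from a few bare machines to a complete k8s cluster with two master nodes, one worker, the dashboard, and ingress-nginx.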

Environment

| hostname | IP | role | cpu | mem |
| --- | --- | --- | --- | --- |
| node1 | 192.168.112.130 | master | >=2 | >=2 |
| node2 | 192.168.112.131 | master | >=2 | >=2 |
| node3 | 192.168.112.132 | worker | >=2 | >=2 |

Set the hostnames

    [root@localhost ~]# vim /etc/hostname
    node1
    [root@localhost ~]# hostname node1
    [root@localhost ~]# bash
    [root@node1 ~]#

    [root@localhost ~]# vim /etc/hostname
    node2
    [root@localhost ~]# hostname node2
    [root@localhost ~]# bash
    [root@node2 ~]#

    [root@localhost ~]# vim /etc/hostname
    node3
    [root@localhost ~]# hostname node3
    [root@localhost ~]# bash
    [root@node3 ~]#

Disable the firewall and SELinux

    [root@node1 ~]# systemctl stop firewalld.service
    [root@node1 ~]# systemctl disable firewalld.service
    [root@node1 ~]# vim /etc/selinux/config
    SELINUX=disabled    # change the line to this
    [root@node1 ~]# setenforce 0
    [root@node1 ~]# reboot    # reboot every server once
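Editing /etc/selinux/config by hand on three machines is easy to get wrong, so the same change can be scripted. The following is a minimal sketch rather than part of the original procedure: `disable_selinux_in` is a hypothetical helper name, and it assumes GNU sed, which yum-based distributions ship.

```shell
# Hypothetical helper: rewrite the SELINUX= line of the given config file.
# $1 is the file to edit (/etc/selinux/config on a real node).
disable_selinux_in() {
  # Matches only the SELINUX= line; SELINUXTYPE= is left untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# On each node, as root:
#   disable_selinux_in /etc/selinux/config
#   setenforce 0    # permissive until the reboot applies "disabled"
```

Taking the file path as an argument keeps the helper easy to try on a copy of the config before touching the real one.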

Configure iptables and DNS

    [root@node1 ~]# iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT
    [root@node1 ~]# systemctl stop dnsmasq.service
    [root@node1 ~]# systemctl disable dnsmasq.service

k8s kernel parameters

    [root@node1 ~]# vim /etc/sysctl.d/kubernetes.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_nonlocal_bind = 1
    net.ipv4.ip_forward = 1
    vm.swappiness = 0
    vm.overcommit_memory = 1
    [root@node1 ~]# sysctl -p /etc/sysctl.d/kubernetes.conf
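The net.bridge.* keys only appear once the bridge netfilter kernel module is loaded, so on a fresh machine `sysctl -p` may report "No such file or directory" for them. A hedged sketch of a non-interactive version of this step follows; `write_k8s_sysctl` is an illustrative name, the `modprobe br_netfilter` line is an assumption about the kernel in use, and the values are exactly the ones listed above.

```shell
# Illustrative helper: write the kernel parameters to the file given as $1.
write_k8s_sysctl() {
  cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
vm.overcommit_memory = 1
EOF
}

# On each node, as root:
#   modprobe br_netfilter    # makes the net.bridge.* keys available
#   write_k8s_sysctl /etc/sysctl.d/kubernetes.conf
#   sysctl -p /etc/sysctl.d/kubernetes.conf
```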

Remove existing Docker packages

    [root@node1 ~]# yum remove -y docker*
    [root@node1 ~]# rm -f /etc/docker/daemon.json

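Configure passwordless SSH

Ansible reaches every node over SSH, so node1 needs passwordless SSH access to all three nodes, itself included. The detailed steps are covered in an earlier post in this series.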
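Passwordless SSH from the deployment host to every node is commonly set up with ssh-keygen and ssh-copy-id. The following is a minimal sketch under stated assumptions: it runs on node1 as root, the IPs come from the host table, root password login is still enabled on each node, and `run` and `setup_passwordless_ssh` are hypothetical helper names.

```shell
# Node IPs from the host table; adjust to your environment.
NODES="192.168.112.130 192.168.112.131 192.168.112.132"

# With DRY_RUN=1 the commands are printed instead of executed.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Hypothetical helper: create a key (if missing) and push it to every node.
setup_passwordless_ssh() {
  key="$HOME/.ssh/id_rsa"
  [ -f "$key" ] || run ssh-keygen -t rsa -b 4096 -N "" -f "$key"
  for ip in $NODES; do
    # Prompts once for root's password on each node.
    run ssh-copy-id -i "$key.pub" "root@$ip"
  done
}
```

Running `DRY_RUN=1 setup_passwordless_ssh` previews the commands; running `setup_passwordless_ssh` without it executes them.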

Download the packages

Obtaining kubespray-2.18.0.tar.gz is covered in an earlier post in this series; the commands below assume the tarball is already in the current directory on node1.

    [root@node1 ~]# yum install -y epel-release python36 python36-pip git
    [root@node1 ~]# tar xf kubespray-2.18.0.tar.gz
    [root@node1 ~]# cd kubespray-2.18.0/
    [root@node1 kubespray-2.18.0]# pip3.6 install setuptools_rust
    [root@node1 kubespray-2.18.0]# pip3.6 install --upgrade pip
    [root@node1 kubespray-2.18.0]# cat requirements.txt
    ansible==3.4.0
    ansible-base==2.10.15
    cryptography==2.8
    jinja2==2.11.3
    netaddr==0.7.19
    pbr==5.4.4
    jmespath==0.9.5
    ruamel.yaml==0.16.10
    ruamel.yaml.clib==0.2.4
    MarkupSafe==1.1.1
    [root@node1 kubespray-2.18.0]# pip3.6 install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
    [root@node1 kubespray-2.18.0]# ln -s /usr/bin/python3.6 /usr/local/python3/bin/python3

Generate the configuration

    [root@node1 kubespray-2.18.0]# cp -rpf inventory/sample inventory/mycluster
    [root@node1 kubespray-2.18.0]# declare -a IPS=(192.168.112.130 192.168.112.131 192.168.112.132)
    [root@node1 kubespray-2.18.0]# CONFIG_FILE=inventory/mycluster/hosts.yaml python3 contrib/inventory_builder/inventory.py ${IPS[@]}

Set the proxy address

    [root@node1 kubespray-2.18.0]# vim inventory/mycluster/group_vars/all/all.yml
    # These two lines are commented out by default; uncomment them and set your own proxy address
    http_proxy: "http://192.168.112.119:19000"
    https_proxy: "http://192.168.112.119:19000"
    [root@node1 kubespray-2.18.0]#

Adjust the cluster networks

    [root@node1 kubespray-2.18.0]# vim inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml
    kube_service_addresses: 10.200.0.0/16
    kube_pods_subnet: 10.233.0.0/16
    [root@node1 kubespray-2.18.0]#

Enable the dashboard and ingress

    [root@node1 kubespray-2.18.0]# vim inventory/mycluster/group_vars/k8s_cluster/addons.yml
    dashboard_enabled: true
    ingress_nginx_enabled: true
    [root@node1 kubespray-2.18.0]#

Raise the timeouts

Because we are going through a proxy, things can be slow, so we raise Ansible's default timeouts; otherwise the run fails with timeout errors:

    [root@node1 kubespray-2.18.0]# ansible --version
    ansible 2.10.15
      config file = /root/jier/kubespray-2.18.0/ansible.cfg
      configured module search path = ['/root/jier/kubespray-2.18.0/library']
      ansible python module location = /usr/local/lib/python3.6/site-packages/ansible
      executable location = /usr/local/bin/ansible
      python version = 3.6.8 (default, Nov 16 2020, 16:55:22) [GCC 4.8.5 20150623 (Red Hat 4.8.5-44)]
    [root@node1 kubespray-2.18.0]#
    # The output above gives the module directory; the config file lives under it
    [root@node1 kubespray-2.18.0]# vim /usr/local/lib/python3.6/site-packages/ansible/config/base.yml
    # Find the "Gather facts timeout" and "Connection timeout" entries and set both defaults to 60
    DEFAULT_TIMEOUT:
      name: Connection timeout
      default: 60
    DEFAULT_GATHER_TIMEOUT:
      name: Gather facts timeout
      default: 60
    [root@node1 kubespray-2.18.0]#
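Editing base.yml inside site-packages works, but the change is lost whenever the Ansible package is reinstalled or upgraded. Ansible also reads these two settings from environment variables, so a less invasive sketch is to export them in the shell that runs the playbook. The 60-second values mirror the ones above; this is an alternative, not what the original procedure did.

```shell
# Set in the shell that will run ansible-playbook; nothing under site-packages is modified.
export ANSIBLE_TIMEOUT=60          # DEFAULT_TIMEOUT (connection timeout)
export ANSIBLE_GATHER_TIMEOUT=60   # DEFAULT_GATHER_TIMEOUT (fact gathering)
```

The same two settings can also be written as `timeout` and `gather_timeout` under `[defaults]` in ansible.cfg if a persistent, per-project value is preferred.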

Run the installation

    [root@node1 kubespray-2.18.0]# ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml -vvvv

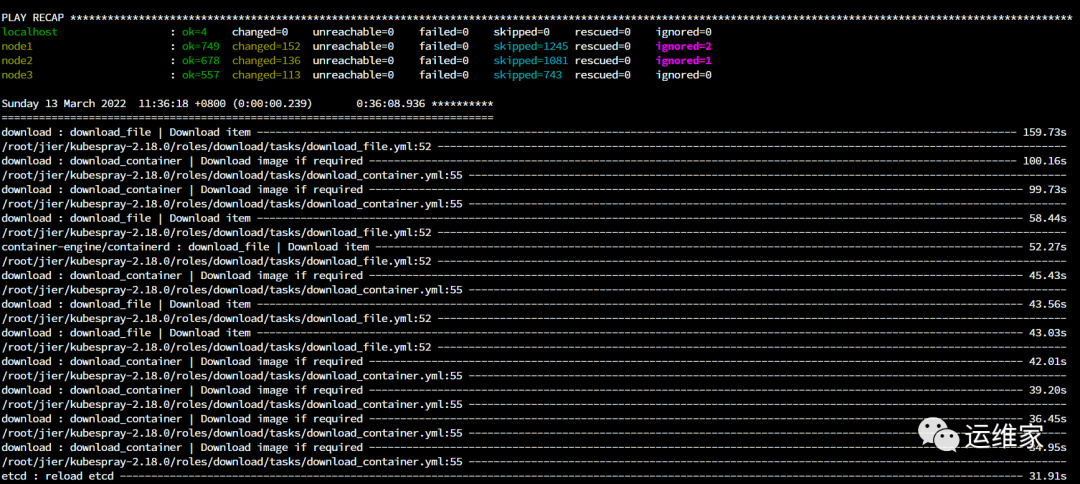

The result once the installation completes

Quick verification

    [root@node1 ~]# crictl ps
    CONTAINER       IMAGE           CREATED       STATE     NAME                       ATTEMPT   POD ID
    a10b0cf798fbb   935d8fdc2d521   4 hours ago   Running   kube-scheduler             2         fc943ad8f7dcb
    da4b3d99e168a   059e6cd8cf78e   4 hours ago   Running   kube-apiserver             1         6bbf6ff712afb
    c00dcd8164b7e   1e7da779960fc   4 hours ago   Running   autoscaler                 0         168c46aea2adb
    71679721e6633   a9f76bcccfb5f   4 hours ago   Running   ingress-nginx-controller   0         9c247cf93113b
    81c0dd9fd30d7   6570786a0fd3b   5 hours ago   Running   calico-node                0         a2ce1e05bebf6
    d9c3382a11f57   8f8fdd6672d48   5 hours ago   Running   kube-proxy                 0         cf9793f097edb
    8131a023a6b9f   04185bc88e08d   5 hours ago   Running   kube-controller-manager    1         34eb68fbc2f2b
    [root@node1 ~]#

    [root@node2 ~]# crictl ps
    CONTAINER       IMAGE           CREATED       STATE     NAME                       ATTEMPT   POD ID
    4eb4a1ab21752   059e6cd8cf78e   4 hours ago   Running   kube-apiserver             1         46bc29ffcd9ab
    6bb9658b6c8c6   296a6d5035e2d   4 hours ago   Running   coredns                    0         254ee90fb6b87
    55b825a4e2955   a9f76bcccfb5f   4 hours ago   Running   ingress-nginx-controller   0         b274e82253e81
    72ce012725a80   6570786a0fd3b   5 hours ago   Running   calico-node                0         57256d2b63a4e
    b99e6936381fb   8f8fdd6672d48   5 hours ago   Running   kube-proxy                 0         d7c121be4ed57
    4ea53f055a757   935d8fdc2d521   5 hours ago   Running   kube-scheduler             1         dff35ce18f014
    112ec9508d319   04185bc88e08d   5 hours ago   Running   kube-controller-manager    1         71248f9ee9c0a
    [root@node2 ~]#

    [root@node3 /]# crictl ps
    CONTAINER       IMAGE           CREATED       STATE     NAME                         ATTEMPT   POD ID
    1cd64eebf3a9c   7801cfc6d5c07   4 hours ago   Running   kubernetes-metrics-scraper   0         543ad0366baec
    67aa11de6a26f   72f07539ffb58   4 hours ago   Running   kubernetes-dashboard         0         9c0a3829ef2f0
    a4219a0ffc10f   296a6d5035e2d   4 hours ago   Running   coredns                      0         4340e98cdc3eb
    a441b90d709a2   5bae806f8f123   4 hours ago   Running   node-cache                   0         6102acdf1c106
    2a66eec86647f   a9f76bcccfb5f   4 hours ago   Running   ingress-nginx-controller     0         2d06e947bf21c
    022956555da76   fcd3512f2a7c5   5 hours ago   Running   calico-kube-controllers      1         97bfcf5ef728b
    42670699ef531   6570786a0fd3b   5 hours ago   Running   calico-node                  0         751efe17dbea9
    e677dd716e584   8f8fdd6672d48   5 hours ago   Running   kube-proxy                   0         92ba8a9f29f3b
    f6dec83dce9dc   f6987c8d6ed59   5 hours ago   Running   nginx-proxy                  0         12bf27ff79872
    [root@node3 /]#
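crictl only lists the containers on the node where it runs. For a cluster-wide view, kubectl on a master node is the usual tool. The sketch below assumes kubectl is already configured for root on node1, which the steps above do not show; `count_not_ready` is a hypothetical helper that counts nodes whose STATUS column is anything other than exactly Ready.

```shell
# Reads `kubectl get nodes --no-headers` output on stdin and prints how many
# nodes have a STATUS other than exactly "Ready" (cordoned nodes count too).
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# On a master node:
#   kubectl get nodes -o wide
#   kubectl get pods -A
#   kubectl get nodes --no-headers | count_not_ready
```

A result of 0 from the last command means every listed node reports Ready; anything else is a cue to inspect `kubectl describe node` for the affected host.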

That wraps up this article. Follow-up posts will run smoke tests against this cluster and show how to access the dashboard.


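This article is reposted from 运维家. If you suspect copyright infringement, email contact@modb.pro with supporting evidence; once verified, 墨天轮 will remove the content promptly.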