公众号关注「WeiyiGeek」

设为「特别关注」,每天带你玩转网络安全运维、应用开发、物联网IOT学习!

本章目录:

6.Kubernetes 集群安装部署

1) 二进制软件包下载安装 (手动-此处以该实践为例)

2) 二进制部署配置 kube-apiserver

3) 二进制部署配置 kubectl

4) 二进制部署配置 kube-controller-manager

5) 二进制部署配置 kube-scheduler

6) 二进制部署配置 kubelet

7) 二进制部署配置 kube-proxy

8) 部署配置 Calico 插件

9) 部署配置 CoreDNS 插件

0x03 应用部署测试

1.部署Nginx Web服务

2.部署K8s原生Dashboard UI

3.部署K9s工具进行管理集群

0x04 入坑出坑

二进制搭建K8S集群部署calico网络插件始终有一个calico-node-xxx状态为Running但是READY为0/1解决方法

二进制部署 containerd 1.6.3 将用于环回的 CNI 配置版本提高到 1.0.0 导致 CNI loopback 无法启用

在集群中部署coredns时显示 CrashLoopBackOff 并且报 Readiness probe failed 8181 端口 connection refused

在进行授予管理员权限时利用 Token 登陆 kubernetes-dashboard 认证时始终报 Unauthorized (401): Invalid credentials provided

6.Kubernetes 集群安装部署

1) 二进制软件包下载安装 (手动-此处以该实践为例)

步骤 01.【master-225】手动从k8s.io下载Kubernetes软件包并解压安装, 当前最新版本为 v1.23.6 。

# 如果下载较慢请使用某雷wget -Lhttps://dl.k8s.io/v1.23.6/kubernetes-server-linux-amd64.tar.gz# 解压/app/k8s-init-work/tools# tar -zxf kubernetes-server-linux-amd64.tar.gz# 安装二进制文件到 usr/local/bin/app/k8s-init-work/tools/kubernetes/server/bin# ls # apiextensions-apiserver kube-apiserver.docker_tag kube-controller-manager.tar kube-log-runner kube-scheduler # kubeadm kube-apiserver.tar kubectl kube-proxy kube-scheduler.docker_tag # kube-aggregator kube-controller-manager kubectl-convert kube-proxy.docker_tag kube-scheduler.tar # kube-apiserver kube-controller-manager.docker_tag kubelet kube-proxy.tar mountercp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl usr/local/bin# 验证安装kubectl version # Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.6", GitCommit:"ad3338546da947756e8a88aa6822e9c11e7eac22", GitTreeState:"clean", BuildDate:"2022-04-14T08:49:13Z", GoVersion:"go1.17.9", Compiler:"gc", Platform:"linux/amd64"}

步骤 02.【master-225】利用scp将kubernetes相关软件包分发到其它机器中。

scp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@master-223:/tmpscp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@master-224:/tmpscp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@node-1:/tmpscp -P 20211 kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl weiyigeek@node-2:/tmp# 复制如下到指定bin目录中(注意node节点只需要 kube-proxy kubelet 、kubeadm 在此处可选)cp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubeadm kubectl usr/local/bin/

步骤 03.【所有节点】在集群所有节点上创建准备如下目录

mkdir -vp etc/kubernetes/{manifests,pki,ssl,cfg} var/log/kubernetes var/lib/kubelet2) 部署配置 kube-apiserver

描述: 它是集群所有服务请求访问的入口点, 通过 API 接口操作集群中的资源。

步骤 01.【master-225】创建apiserver证书请求文件并使用上一章生成的CA签发证书。

# 创建证书申请文件,注意下述文件hosts字段中IP为所有Master/LB/VIP IP,为了方便后期扩容可以多写几个预留的IP。# 同时还需要填写 service 网络的首个IP(一般是 kube-apiserver 指定的 service-cluster-ip-range 网段的第一个IP,如 10.96.0.1)。tee apiserver-csr.json <<'EOF'{ "CN": "kubernetes", "hosts": [ "127.0.0.1", "10.10.107.223", "10.10.107.224", "10.10.107.225", "10.10.107.222", "10.96.0.1", "weiyigeek.cluster.k8s", "master-223", "master-224", "master-225", "kubernetes", "kubernetes.default.svc", "kubernetes.default.svc.cluster", "kubernetes.default.svc.cluster.local" ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "ChongQing", "ST": "ChongQing", "O": "k8s", "OU": "System" } ]}EOF# 使用自签CA签发 kube-apiserver HTTPS证书cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver$ ls apiserver*apiserver.csr apiserver-csr.json apiserver-key.pem apiserver.pem# 复制到自定义目录cp *.pem etc/kubernetes/ssl/

步骤 02.【master-225】创建TLS机制所需TOKEN

cat > etc/kubernetes/bootstrap-token.csv << EOF$(head -c 16 dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:bootstrappers"EOF

温馨提示: 启用 TLS Bootstrapping 机制Node节点kubelet和kube-proxy要与kube-apiserver进行通信,必须使用CA签发的有效证书才可以,当Node节点很多时,这种客户端证书颁发需要大量工作,同样也会增加集群扩展复杂度。为了简化流程,Kubernetes引入了TLS bootstraping机制来自动颁发客户端证书,kubelet会以一个低权限用户自动向apiserver申请证书,kubelet的证书由apiserver动态签署。所以强烈建议在Node上使用这种方式,目前主要用于kubelet,kube-proxy还是由我们统一颁发一个证书。

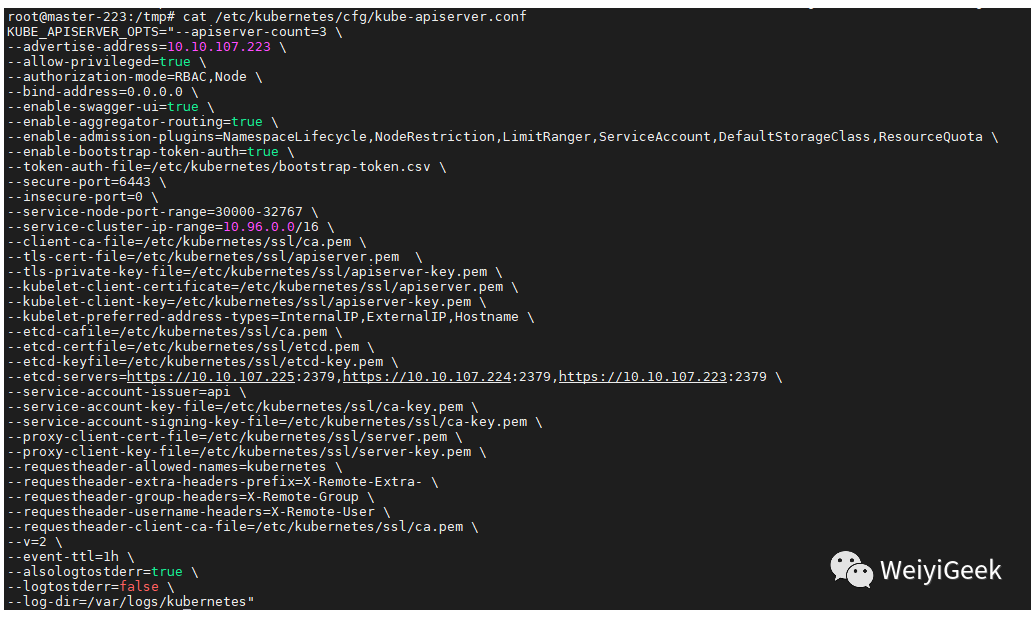

步骤 03.【master-225】创建 kube-apiserver 配置文件

cat > etc/kubernetes/cfg/kube-apiserver.conf <<'EOF'KUBE_APISERVER_OPTS="--apiserver-count=3 \--advertise-address=10.10.107.225 \--allow-privileged=true \--authorization-mode=RBAC,Node \--bind-address=0.0.0.0 \--enable-aggregator-routing=true \--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \--enable-bootstrap-token-auth=true \--token-auth-file=/etc/kubernetes/bootstrap-token.csv \--secure-port=6443 \--service-node-port-range=30000-32767 \--service-cluster-ip-range=10.96.0.0/16 \--client-ca-file=/etc/kubernetes/ssl/ca.pem \--tls-cert-file=/etc/kubernetes/ssl/apiserver.pem \--tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem \--kubelet-client-certificate=/etc/kubernetes/ssl/apiserver.pem \--kubelet-client-key=/etc/kubernetes/ssl/apiserver-key.pem \--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \--etcd-cafile=/etc/kubernetes/ssl/ca.pem \--etcd-certfile=/etc/kubernetes/ssl/etcd.pem \--etcd-keyfile=/etc/kubernetes/ssl/etcd-key.pem \--etcd-servers=https://10.10.107.225:2379,https://10.10.107.224:2379,https://10.10.107.223:2379 \--service-account-issuer=https://kubernetes.default.svc.cluster.local \--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \--proxy-client-cert-file=/etc/kubernetes/ssl/apiserver.pem \--proxy-client-key-file=/etc/kubernetes/ssl/apiserver-key.pem \--requestheader-allowed-names=kubernetes \--requestheader-extra-headers-prefix=X-Remote-Extra- \--requestheader-group-headers=X-Remote-Group \--requestheader-username-headers=X-Remote-User \--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \--v=2 \--event-ttl=1h \--feature-gates=TTLAfterFinished=true \--logtostderr=false \--log-dir=/var/log/kubernetes"EOF# 审计日志可选# --audit-log-maxage=30# --audit-log-maxbackup=3# --audit-log-maxsize=100# --audit-log-path=/var/log/kubernetes/kube-apiserver.log"# –logtostderr:启用日志# —v:日志等级# –log-dir:日志目录# –etcd-servers:etcd集群地址# –bind-address:监听地址# –secure-port:https安全端口# –advertise-address:集群通告地址# –allow-privileged:启用授权# –service-cluster-ip-range:Service虚拟IP地址段# –enable-admission-plugins:准入控制模块# –authorization-mode:认证授权,启用RBAC授权和节点自管理# –enable-bootstrap-token-auth:启用TLS bootstrap机制# –token-auth-file:bootstrap token文件# –service-node-port-range:Service nodeport类型默认分配端口范围# –kubelet-client-xxx:apiserver访问kubelet客户端证书# –tls-xxx-file:apiserver https证书# –etcd-xxxfile:连接Etcd集群证书# –audit-log-xxx:审计日志# 温馨提示: 在 1.23.* 版本之后请勿使用如下参数。Flag --enable-swagger-ui has been deprecated,Flag --insecure-port has been deprecated,Flag --alsologtostderr has been deprecated,Flag --logtostderr has been deprecated, will be removed in a future release,Flag --log-dir has been deprecated, will be removed in a future release,Flag -- TTLAfterFinished=true. It will be removed in a future release. (还可使用)

步骤 04.【master-225】创建kube-apiserver服务管理配置文件

cat > lib/systemd/system/kube-apiserver.service << "EOF"[Unit]Description=Kubernetes API ServerDocumentation=https://github.com/kubernetes/kubernetesAfter=etcd.serviceWants=etcd.service[Service]EnvironmentFile=-/etc/kubernetes/cfg/kube-apiserver.confExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTSRestart=on-failureRestartSec=5Type=notifyLimitNOFILE=65536LimitNPROC=65535[Install]WantedBy=multi-user.targetEOF

步骤 05.将上述创建生成的文件目录同步到集群的其它master节点上.

# master - 225 etc/kubernetes/ 目录结构tree etc/kubernetes//etc/kubernetes/├── bootstrap-token.csv├── cfg│ └── kube-apiserver.conf├── manifests├── pki└── ssl ├── apiserver-key.pem ├── apiserver.pem ├── ca-key.pem ├── ca.pem ├── etcd-key.pem └── etcd.pem# 证书及kube-apiserver.conf配置文件scp -P 20211 -R etc/kubernetes/ weiyigeek@master-223:/tmpscp -P 20211 -R etc/kubernetes/ weiyigeek@master-224:/tmp# kube-apiserver 服务管理配置文件scp -P 20211 lib/systemd/system/kube-apiserver.service weiyigeek@master-223:/tmpscp -P 20211 lib/systemd/system/kube-apiserver.service weiyigeek@master-224:/tmp# 【master-223】 【master-224】 执行如下命令将上传到/tmp相关文件放入指定目录中cd tmp/ && cp -r tmp/kubernetes/ etc/mv kube-apiserver.service lib/systemd/system/kube-apiserver.servicemv kube-apiserver kubectl kube-proxy kube-scheduler kubeadm kube-controller-manager kubelet usr/local/bin

步骤 06.分别修改 etc/kubernetes/cfg/kube-apiserver.conf 文件中 --advertise-address=10.10.107.225

# 【master-223】sed -i 's#--advertise-address=10.10.107.225#--advertise-address=10.10.107.223#g' etc/kubernetes/cfg/kube-apiserver.conf# 【master-224】sed -i 's#--advertise-address=10.10.107.225#--advertise-address=10.10.107.224#g' etc/kubernetes/cfg/kube-apiserver.conf

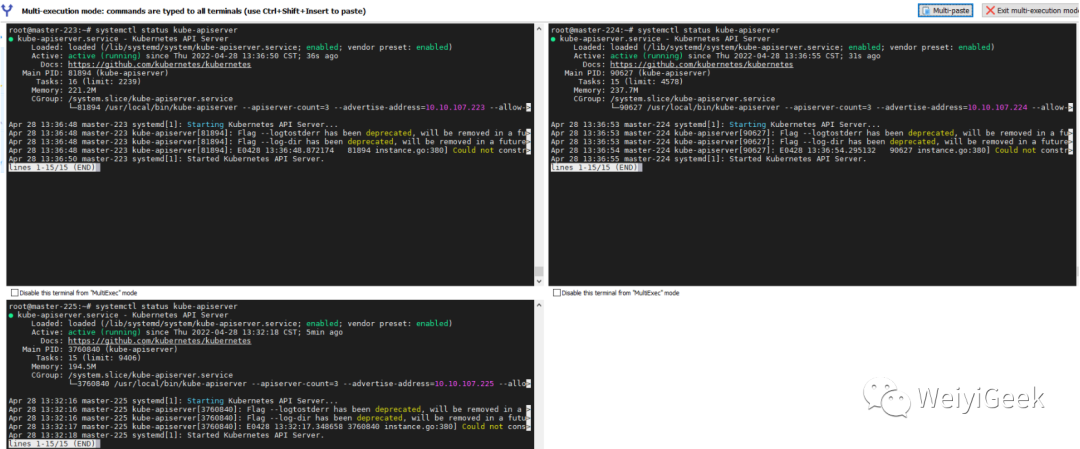

步骤 07.【master节点】完成上述操作后分别在三台master节点上启动apiserver服务。

# 重载systemd与自启设置systemctl daemon-reload systemctl enable --now kube-apiserversystemctl status kube-apiserver# 测试api-servercurl --insecure https://10.10.107.222:16443/curl --insecure https://10.10.107.223:6443/curl --insecure https://10.10.107.224:6443/curl --insecure https://10.10.107.225:6443/# 测试结果{ "kind": "Status", "apiVersion": "v1", "metadata": {}, "status": "Failure", "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"", "reason": "Forbidden", "details": {}, "code": 403}

至此完毕!

3) 部署配置 kubectl

描述: 它是集群管理客户端工具,与 API-Server 服务请求交互, 实现资源的查看与管理。

步骤 01.【master-225】创建kubectl证书请求文件CSR并生成证书

tee admin-csr.json <<'EOF'{ "CN": "admin", "hosts": [], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "ChongQing", "ST": "ChongQing", "O": "system:masters", "OU": "System" } ]}EOF# 证书文件生成cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin# 复制证书相关文件到指定目录ls admin* # admin.csr admin-csr.json admin-key.pem admin.pemcp admin*.pem etc/kubernetes/ssl/

步骤 02.【master-225】生成kubeconfig配置文件 admin.conf 为 kubectl 的配置文件,包含访问 apiserver 的所有信息,如 apiserver 地址、CA 证书和自身使用的证书

cd etc/kubernetes # 配置集群信息# 此处也可以采用域名的形式 (https://weiyigeek.cluster.k8s:16443 )kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://10.10.107.222:16443 --kubeconfig=admin.conf # Cluster "kubernetes" set.# 配置集群认证用户kubectl config set-credentials admin --client-certificate=/etc/kubernetes/ssl/admin.pem --client-key=/etc/kubernetes/ssl/admin-key.pem --embed-certs=true --kubeconfig=admin.conf # User "admin" set.# 配置上下文kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=admin.conf # Context "kubernetes" created.# 使用上下文kubectl config use-context kubernetes --kubeconfig=admin.conf # Context "kubernetes" created.

步骤 03.【master-225】准备kubectl配置文件并进行角色绑定。

mkdir root/.kube && cp etc/kubernetes/admin.conf ~/.kube/configkubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config # clusterrolebinding.rbac.authorization.k8s.io/kube-apiserver:kubelet-apis created# 该 config 的内容$ cat root/.kube/configapiVersion: v1clusters:- cluster: certificate-authority-data: base64(CA证书) server: https://10.10.107.222:16443 name: kubernetescontexts:- context: cluster: kubernetes user: admin name: kubernetescurrent-context: kuberneteskind: Configpreferences: {}users:- name: admin user: client-certificate-data: base64(用户证书) client-key-data: base64(用户证书KEY)

步骤 04.【master-225】查看集群状态

export KUBECONFIG=$HOME/.kube/config# 查看集群信息kubectl cluster-info # Kubernetes control plane is running at https://10.10.107.222:16443 # To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.# 查看集群组件状态kubectl get componentstatuses # NAME STATUS MESSAGE ERROR # controller-manager Unhealthy Get "https://127.0.0.1:10257/healthz": dial tcp 127.0.0.1:10257: connect: connection refused # scheduler Unhealthy Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused # etcd-0 Healthy {"health":"true","reason":""} # etcd-1 Healthy {"health":"true","reason":""} # etcd-2 Healthy {"health":"true","reason":""}# 查看命名空间以及所有名称中资源对象kubectl get all --all-namespaces # NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE # default service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8hkubectl get ns # NAME STATUS AGE # default Active 8h # kube-node-lease Active 8h # kube-public Active 8h # kube-system Active 8h

温馨提示: 由于我们还未进行 controller-manager 与 scheduler 部署所以此时其状态为 Unhealthy。

步骤 05.【master-225】同步kubectl配置文件到集群其它master节点

ssh -p 20211 weiyigeek@master-223 'mkdir ~/.kube/'ssh -p 20211 weiyigeek@master-224 'mkdir ~/.kube/'scp -P 20211 $HOME/.kube/config weiyigeek@master-223:~/.kube/scp -P 20211 $HOME/.kube/config weiyigeek@master-224:~/.kube/# 【master-223】weiyigeek@master-223:~$ kubectl get services # NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE # kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8h

步骤 06.配置 kubectl 命令补全 (建议新手勿用,等待后期熟悉相关命令后使用)

# 安装补全工具apt install -y bash-completionsource usr/share/bash-completion/bash_completion# 方式1source <(kubectl completion bash)# 方式2kubectl completion bash > ~/.kube/completion.bash.incsource ~/.kube/completion.bash.inc# 自动tee $HOME/.bash_profile <<'EOF'# source <(kubectl completion bash)source ~/.kube/completion.bash.incEOF

至此 kubectl 集群客户端配置 完毕.

4) 部署配置 kube-controller-manager

描述: 它是集群中的控制器组件,其内部包含多个控制器资源, 实现对象的自动化控制中心。

步骤 01.【master-225】创建 kube-controller-manager 证书请求文件CSR并生成证书

tee controller-manager-csr.json <<'EOF'{ "CN": "system:kube-controller-manager", "hosts": [ "127.0.0.1", "10.10.107.223", "10.10.107.224", "10.10.107.225" ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "ChongQing", "ST": "ChongQing", "O": "system:kube-controller-manager", "OU": "System" } ]}EOF# 说明:* hosts 列表包含所有 kube-controller-manager 节点 IP;* CN 为 system:kube-controller-manager;* O 为 system:kube-controller-manager,kubernetes 内置的 ClusterRoleBindings system:kube-controller-manager 赋予 kube-controller-manager 工作所需的权限# 利用 CA 颁发 kube-controller-manager 证书文件cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes controller-manager-csr.json | cfssljson -bare controller-manager$ ls controller*controller-manager.csr controller-manager-csr.json controller-manager-key.pem controller-manager.pem# 复制证书cp controller* etc/kubernetes/ssl

步骤 02.创建 kube-controller-manager 的 controller-manager.conf 配置文件.

cd etc/kubernetes/# 设置集群 也可采用 (https://weiyigeek.cluster.k8s:16443 ) 域名形式。kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://10.10.107.222:16443 --kubeconfig=controller-manager.conf# 设置认证kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/ssl/controller-manager.pem --client-key=/etc/kubernetes/ssl/controller-manager-key.pem --embed-certs=true --kubeconfig=controller-manager.conf# 设置上下文kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=controller-manager.conf# 且换上下文kubectl config use-context system:kube-controller-manager --kubeconfig=controller-manager.conf

步骤 03.准备创建 kube-controller-manager 配置文件。

cat > etc/kubernetes/cfg/kube-controller-manager.conf << "EOF"KUBE_CONTROLLER_MANAGER_OPTS="--allocate-node-cidrs=true \--bind-address=127.0.0.1 \--secure-port=10257 \--authentication-kubeconfig=/etc/kubernetes/controller-manager.conf \--authorization-kubeconfig=/etc/kubernetes/controller-manager.conf \--cluster-name=kubernetes \--client-ca-file=/etc/kubernetes/ssl/ca.pem \--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \--controllers=*,bootstrapsigner,tokencleaner \--cluster-cidr=10.128.0.0/16 \--service-cluster-ip-range=10.96.0.0/16 \--use-service-account-credentials=true \--root-ca-file=/etc/kubernetes/ssl/ca.pem \--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \--tls-cert-file=/etc/kubernetes/ssl/controller-manager.pem \--tls-private-key-file=/etc/kubernetes/ssl/controller-manager-key.pem \--leader-elect=true \--cluster-signing-duration=87600h \--v=2 \--logtostderr=false \--log-dir=/var/log/kubernetes \--kubeconfig=/etc/kubernetes/controller-manager.confEOF# 温馨提示:Flag --logtostderr has been deprecated, will be removed in a future release,Flag --log-dir has been deprecated, will be removed in a future release--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

步骤 04.创建 kube-controller-manager 服务启动文件

cat > lib/systemd/system/kube-controller-manager.service << "EOF"[Unit]Description=Kubernetes Controller ManagerDocumentation=https://github.com/kubernetes/kubernetes[Service]EnvironmentFile=-/etc/kubernetes/cfg/kube-controller-manager.confExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTSRestart=on-failureRestartSec=5[Install]WantedBy=multi-user.targetEOF

步骤 05.【master-225】同样分发上述文件到其它master节点中。

# controller-manager 证书 及 kube-controller-manager.conf 配置文件、kube-controller-manager.service 服务管理配置文件scp -P 20211 etc/kubernetes/ssl/controller-manager.pem etc/kubernetes/ssl/controller-manager-key.pem etc/kubernetes/controller-manager.conf etc/kubernetes/cfg/kube-controller-manager.conf lib/systemd/system/kube-controller-manager.service weiyigeek@master-223:/tmpscp -P 20211 etc/kubernetes/ssl/controller-manager.pem etc/kubernetes/ssl/controller-manager-key.pem etc/kubernetes/controller-manager.conf etc/kubernetes/cfg/kube-controller-manager.conf lib/systemd/system/kube-controller-manager.service weiyigeek@master-224:/tmp# 【master-223】 【master-224】 执行如下命令将上传到/tmp相关文件放入指定目录中mv controller-manager*.pem etc/kubernetes/ssl/mv controller-manager.conf etc/kubernetes/controller-manager.confmv kube-controller-manager.conf etc/kubernetes/cfg/kube-controller-manager.confmv kube-controller-manager.service lib/systemd/system/kube-controller-manager.service

步骤 06.重载 systemd 以及自动启用 kube-controller-manager 服务。

systemctl daemon-reload systemctl enable --now kube-controller-managersystemctl status kube-controller-manager步骤 07.启动运行 kube-controller-manager 服务后查看集群组件状态, 发现原本 controller-manager 是 Unhealthy 的状态已经变成了 Healthy 状态。

kubectl get componentstatu # NAME STATUS MESSAGE ERROR # scheduler Unhealthy Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused # controller-manager Healthy ok # etcd-2 Healthy {"health":"true","reason":""} # etcd-0 Healthy {"health":"true","reason":""} # etcd-1 Healthy {"health":"true","reason":""}

至此 kube-controller-manager 服务的安装、配置完毕!

5) 部署配置 kube-scheduler

描述: 在集群中kube-scheduler调度器组件, 负责任务调度选择合适的节点进行分配任务.

步骤 01.【master-225】创建 kube-scheduler 证书请求文件CSR并生成证书

tee scheduler-csr.json <<'EOF'{ "CN": "system:kube-scheduler", "hosts": [ "127.0.0.1", "10.10.107.223", "10.10.107.224", "10.10.107.225" ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "ChongQing", "ST": "ChongQing", "O": "system:kube-scheduler", "OU": "System" } ]}EOF# 利用 CA 颁发 kube-scheduler 证书文件cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes scheduler-csr.json | cfssljson -bare scheduler$ ls scheduler*scheduler-csr.json scheduler.csr scheduler-key.pem scheduler.pem# 复制证书cp scheduler*.pem etc/kubernetes/ssl

步骤 02.完成后我们需要创建 kube-scheduler 的 kubeconfig 配置文件。

cd etc/kubernetes/# 设置集群 也可采用 (https://weiyigeek.cluster.k8s:16443 ) 域名形式。kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://10.10.107.222:16443 --kubeconfig=scheduler.conf# 设置认证kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/ssl/scheduler.pem --client-key=/etc/kubernetes/ssl/scheduler-key.pem --embed-certs=true --kubeconfig=scheduler.conf# 设置上下文kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=scheduler.conf# 且换上下文kubectl config use-context system:kube-scheduler --kubeconfig=scheduler.conf

步骤 03.创建 kube-scheduler 服务配置文件

cat > etc/kubernetes/cfg/kube-scheduler.conf << "EOF"KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \--secure-port=10259 \--kubeconfig=/etc/kubernetes/scheduler.conf \--authentication-kubeconfig=/etc/kubernetes/scheduler.conf \--authorization-kubeconfig=/etc/kubernetes/scheduler.conf \--client-ca-file=/etc/kubernetes/ssl/ca.pem \--tls-cert-file=/etc/kubernetes/ssl/scheduler.pem \--tls-private-key-file=/etc/kubernetes/ssl/scheduler-key.pem \--leader-elect=true \--alsologtostderr=true \--logtostderr=false \--log-dir=/var/log/kubernetes \--v=2"EOF

步骤 04.创建 kube-scheduler 服务启动配置文件

cat > lib/systemd/system/kube-scheduler.service << "EOF"[Unit]Description=Kubernetes SchedulerDocumentation=https://github.com/kubernetes/kubernetes[Service]EnvironmentFile=-/etc/kubernetes/cfg/kube-scheduler.confExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTSRestart=on-failureRestartSec=5[Install]WantedBy=multi-user.targetEOF

步骤 05.【master-225】同样分发上述文件到其它master节点中。

# scheduler 证书 及 kube-scheduler.conf 配置文件、kube-scheduler.service 服务管理配置文件scp -P 20211 etc/kubernetes/ssl/scheduler.pem etc/kubernetes/ssl/scheduler-key.pem etc/kubernetes/scheduler.conf /etc/kubernetes/cfg/kube-scheduler.conf /lib/systemd/system/kube-scheduler.service weiyigeek@master-223:/tmp

scp -P 20211 /etc/kubernetes/ssl/scheduler.pem /etc/kubernetes/ssl/scheduler-key.pem /etc/kubernetes/scheduler.conf /etc/kubernetes/cfg/kube-scheduler.conf /lib/systemd/system/kube-scheduler.service weiyigeek@master-224:/tmp

# 【master-223】 【master-224】 执行如下命令将上传到/tmp相关文件放入指定目录中

mv scheduler*.pem /etc/kubernetes/ssl/

mv scheduler.conf /etc/kubernetes/scheduler.conf

mv kube-scheduler.conf /etc/kubernetes/cfg/kube-scheduler.conf

mv kube-scheduler.service /lib/systemd/system/kube-scheduler.service

步骤 06.【所有master节点】重载 systemd 以及自动启用 kube-scheduler 服务。

systemctl daemon-reload

systemctl enable --now kube-scheduler

systemctl status kube-scheduler

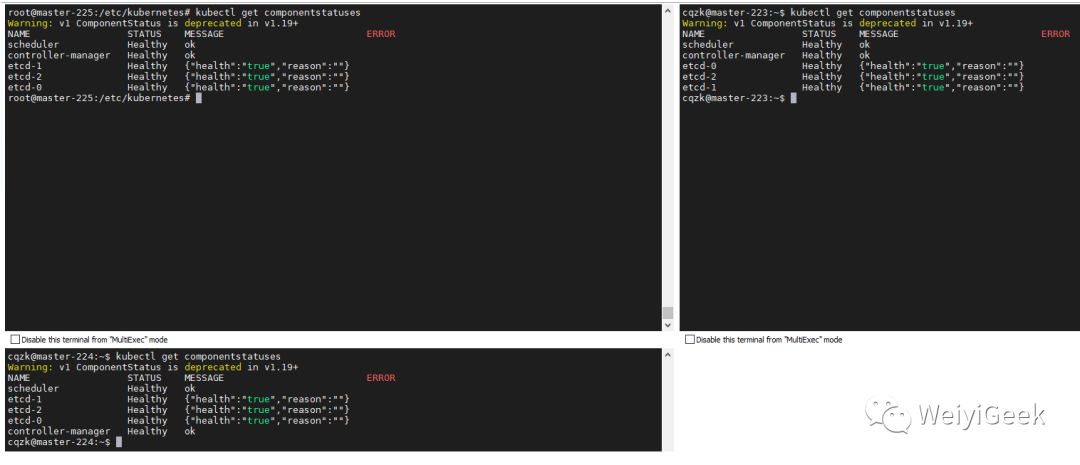

步骤 07.【所有master节点】验证所有master节点各个组件状态, 正常状态下如下, 如有错误请排查通过后在进行后面操作。

kubectl get componentstatuses

# Warning: v1 ComponentStatus is deprecated in v1.19+

# NAME STATUS MESSAGE ERROR

# controller-manager Healthy ok

# scheduler Healthy ok

# etcd-0 Healthy {"health":"true","reason":""}

# etcd-1 Healthy {"health":"true","reason":""}

# etcd-2 Healthy {"health":"true","reason":""}

6) 部署配置 kubelet

步骤 01.【master-225】读取BOOTSTRAP_TOKE 并 创建 kubelet 的 kubeconfig 配置文件 kubelet.conf。

cd /etc/kubernetes/

# 读取 bootstrap-token 值

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/bootstrap-token.csv)

# BOOTSTRAP_TOKEN="123456.httpweiyigeektop"

# 设置集群 也可采用 (https://weiyigeek.cluster.k8s:16443 ) 域名形式。

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://weiyigeek.cluster.k8s:16443 --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

# 设置认证

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

# 设置上下文

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

# 且换上下文

kubectl config use-context default --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

步骤 02.完成后我们需要进行集群角色绑定。

# 角色授权

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig

# 授权 kubelet 创建 CSR

kubectl create clusterrolebinding create-csrs-for-bootstrapping --clusterrole=system:node-bootstrapper --group=system:bootstrappers

# 对 CSR 进行批复

# 允许 kubelet 请求并接收新的证书

kubectl create clusterrolebinding auto-approve-csrs-for-group --clusterrole=system:certificates.k8s.io:certificatesigningrequests:nodeclient --group=system:bootstrappers

# 允许 kubelet 对其客户端证书执行续期操作

kubectl create clusterrolebinding auto-approve-renewals-for-nodes --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeclient --group=system:bootstrappers

步骤 03.创建 kubelet 配置文件,配置参考地址(https://kubernetes.io/docs/reference/config-api/kubelet-config.v1beta1/)