Technical Article

PolarDB-O Primary/Standby Deployment and Switchover: A Pitfall Guide

I

Pre-installation Preparation

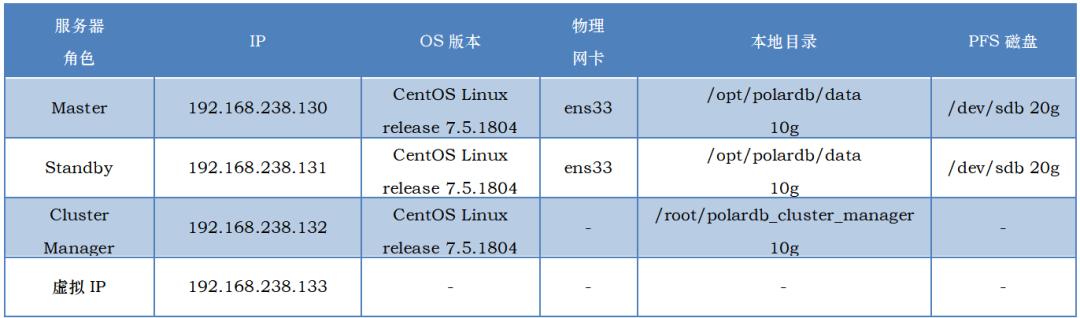

1. Environment Planning

2. Software Directories

PGDATA=/opt/polardb/data              # local-disk data directory of the database
PGHOME=/usr/local/polardb_o_current   # software directory
PFSHOME=/usr/local/polarstore/pfsd    # PFS home directory
PFSDIR=/sdb/data                      # PFS data directory path

3. Installation Media

polardb-clustermanager-1.0.0-20200904155748.x86_64.rpm           # Cluster Manager package
PolarDB-O-0200-2.0.0-20201009151903.alios7.x86_64.rpm            # database package
t-polarstore-pfsd-san-1.1.41-20200909132342.alios7.x86_64.rpm    # PFS package

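4. Disable Transparent Huge Pages

Disable transparent huge pages (THP) and make sure Hugepagesize=2048 kB:

cat /etc/default/grub    # add transparent_hugepage=never to GRUB_CMDLINE_LINUX, as below
GRUB_TIMEOUT=5
GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"
GRUB_DEFAULT=saved
GRUB_DISABLE_SUBMENU=true
GRUB_TERMINAL_OUTPUT="console"
GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet transparent_hugepage=never"
GRUB_DISABLE_RECOVERY="true"

Regenerate the GRUB configuration, reboot so the new kernel parameter takes effect, and verify:

grub2-mkconfig -o /boot/grub2/grub.cfg
# after the reboot:
cat /sys/kernel/mm/transparent_hugepage/enabled
always madvise [never]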
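If you want to script the check above (for example, to run it on both nodes), the following minimal sketch reads the same sysfs file plus /proc/meminfo. It assumes the standard Linux formats: the active THP mode is the bracketed word, and meminfo has a line such as `Hugepagesize:       2048 kB`. The function names are illustrative and not part of any PolarDB tool.

```shell
# Illustrative helpers (not from PolarDB).
# thp_is_never: succeeds if "never" is the active (bracketed) THP mode.
thp_is_never() {
  grep -q '\[never\]' "${1:-/sys/kernel/mm/transparent_hugepage/enabled}"
}

# hugepagesize_kb: prints the Hugepagesize value (in kB) from meminfo.
hugepagesize_kb() {
  awk '$1 == "Hugepagesize:" { print $2 }' "${1:-/proc/meminfo}"
}
```

For example: `thp_is_never && [ "$(hugepagesize_kb)" = 2048 ] && echo "THP off, 2 MB huge pages"`.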

5. Configure /etc/sysctl.conf

Add the following:

vm.dirty_expire_centisecs=3000
net.ipv4.tcp_synack_retries=2
net.core.rmem_default=262144
vm.dirty_background_bytes=409600000
net.core.wmem_default=262144
kernel.shmall=107374182
vm.mmap_min_addr=65536
vm.overcommit_ratio=90
kernel.shmmni=819200
net.core.rmem_max=4194304
vm.dirty_writeback_centisecs=100
fs.file-max=76724600
net.core.somaxconn=4096
fs.aio-max-nr=1048576
net.ipv4.tcp_max_tw_buckets=262144
vm.swappiness=0
fs.nr_open=20480000
net.ipv4.tcp_fin_timeout=5
net.ipv4.ip_local_port_range=40000 65535
net.ipv4.tcp_keepalive_probes=3
net.ipv4.tcp_mem=8388608 12582912 16777216
kernel.shmmax=274877906944
kernel.sem=4096 2147483647 2147483646 512000
net.ipv4.tcp_keepalive_intvl=20
net.ipv4.tcp_keepalive_time=60
vm.overcommit_memory=0
net.ipv4.tcp_syncookies=1
net.ipv4.tcp_max_syn_backlog=4096
net.ipv4.tcp_timestamps=1
net.ipv4.tcp_rmem=8192 87380 16777216
net.ipv4.tcp_wmem=8192 65536 16777216
net.core.wmem_max=4194304
vm.dirty_ratio=80
net.core.netdev_max_backlog=10000
vm.zone_reclaim_mode=0
net.ipv4.tcp_tw_reuse=1
vm.nr_hugepages=0
vm.nr_overcommit_hugepages=1000000

Then load the settings:

sysctl -p
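A typo in sysctl.conf can leave a setting silently unapplied. One way to catch that, sketched below under the assumption that every non-comment line has the form `key=value`, is to compare each line with the live value under /proc/sys (dots in the key map to slashes in the path). `verify_sysctl` and the `PROC_SYS` override are hypothetical names used only for this example. Whitespace is normalized because the kernel prints multi-value settings such as `ip_local_port_range` separated by tabs.

```shell
# Hypothetical helper; PROC_SYS exists only so the sketch can be tested.
norm() { awk '{ $1 = $1; print }'; }   # collapse runs of blanks/tabs, trim the ends

verify_sysctl() {
  root=${PROC_SYS:-/proc/sys}
  rc=0
  while IFS='=' read -r key val || [ -n "$key" ]; do
    key=$(printf '%s' "$key" | tr -d ' \t')
    case $key in ''|'#'*|';'*) continue ;; esac   # skip blanks and comments
    want=$(printf '%s\n' "$val" | norm)
    path="$root/$(printf '%s' "$key" | tr . /)"   # vm.swappiness -> vm/swappiness
    if [ ! -r "$path" ]; then
      echo "MISSING  $key"; rc=1; continue
    fi
    have=$(norm < "$path")
    if [ "$have" != "$want" ]; then
      echo "MISMATCH $key: want '$want', have '$have'"; rc=1
    fi
  done < "$1"
  return $rc
}
```

Running `verify_sysctl /etc/sysctl.conf` after `sysctl -p` prints one line per missing or mismatched key and returns non-zero if any are found.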

6. Create polardb_limits.conf

vi /etc/security/limits.d/polardb_limits.conf
* soft nofile 655360
* hard nofile 655360
* soft nproc 655360
* hard nproc 655360
* soft memlock unlimited
* hard memlock unlimited
* soft core unlimited
* hard core unlimited

II

Single-Node Deployment

1. Create the polardb Group and User

groupadd polardb
useradd -g polardb polardb

Grant the polardb user sudo privileges: run visudo and add

polardb ALL=(ALL) ALL

2. Configure Environment Variables

su - polardb
vi ~/.bash_profile
# add the following:
export PGPORT=5432
export PGDATA=/opt/polardb/data
export LANG=en_US.utf8
export PGHOME=/usr/local/polardb_o_current
export PFSHOME=/usr/local/polarstore/pfsd
export PFSDISK=sdb
export PFSDIR=/sdb/data
export LD_LIBRARY_PATH=$PGHOME/lib:$LD_LIBRARY_PATH
export PATH=$PGHOME/bin:$PFSHOME/bin/:$PATH
export PGHOST=$PGDATA
export PGUSER=polardb
export PGDATABASE=polardb

Note: PFSDISK=sdb is the disk device name without the /dev/ prefix; in my environment the newly added disk is /dev/sdb.
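Later steps expand these variables inside sudo and PFS commands, so an unset or mistyped variable tends to surface as a confusing PFS error. The hypothetical helper below, meant to be run in the polardb login shell after the profile is loaded, lists any unset variable and flags a PFSDISK that is a path such as /dev/sdb rather than the bare device name the note above calls for.

```shell
# Hypothetical helper: run in the polardb login shell after sourcing ~/.bash_profile.
check_polardb_env() {
  rc=0
  for v in PGPORT PGDATA PGHOME PFSHOME PFSDISK PFSDIR PGHOST PGUSER PGDATABASE; do
    # indirect lookup of the variable named by $v (names come from the fixed list above)
    eval "val=\${$v-}"
    if [ -z "$val" ]; then
      echo "unset: $v"
      rc=1
    fi
  done
  # PFSDISK must be a bare device name such as sdb, not a path
  case ${PFSDISK-} in
    /*) echo "PFSDISK should be a bare device name (e.g. sdb), not $PFSDISK"; rc=1 ;;
  esac
  return $rc
}
```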

3. Install the PolarDB-O Database

sudo rpm -ivh PolarDB-O-0200-2.0.0-20201009151903.alios7.x86_64.rpm

4. Install the PFS File System

sudo rpm -ivh t-polarstore-pfsd-san-1.1.41-20200909132342.alios7.x86_64.rpm

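5. Initialize PFS

PFS takes over the device named $PFSDISK and formats it during setup, so if /dev/$PFSDISK is already mounted, unmount it first. This installation uses a newly added disk, /dev/sdb. Run the following commands in order:

1) Log in as polardb, then format the $PFSDISK device, start the PFS daemon, and create the PFS data directory:

sudo /usr/local/bin/pfs -C disk mkfs -u 30 -l 1073741824 -f $PFSDISK

sudo /usr/local/polarstore/pfsd/bin/start_pfsd.sh -p $PFSDISK

sudo /usr/local/bin/pfs -C disk mkdir $PFSDIR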

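Pitfall 1:

Note: after the PFS data directory is created, later steps may report that $PFSDIR cannot be found. If that happens, restart the PFS service. Common PFS commands:

sudo /usr/local/polarstore/pfsd/bin/stop_pfsd.sh                # stop the PFS service
sudo /usr/local/polarstore/pfsd/bin/start_pfsd.sh -p $PFSDISK   # start the PFS service
ps -ef | grep pfsdaemon | grep -v grep                          # check the PFS process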

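Pitfall 2:

Note: for my first attempt I gave PFS a 10 GB disk. Database initialization later failed because files went missing while they were being copied to the PFS disk. Reserve plenty of space during planning; otherwise troubleshooting will cost you a lot of time later. (Don't skimp where it matters. Boss, my PC can still handle it!)

Part of the error output: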

[PFSD_SDK INF Oct 23 09:07:53.221552][12735]pfsd_open 517: open sdb/data//global/pg_control: no such file

First check whether the following files were actually copied into the PFS directory. In my failed installation, only base and global had been copied successfully.

pfs -C disk ls /sdb/data    # list the PFS data directory

Dir 1 768 Mon Nov 2 15:21:06 2020 pg_wal
Dir 1 640 Fri Oct 30 10:44:47 2020 base
Dir 1 9344 Fri Oct 30 10:44:53 2020 global
Dir 1 0 Fri Oct 30 10:44:54 2020 pg_tblspc
Dir 1 128 Fri Oct 30 10:44:54 2020 pg_logindex
Dir 1 0 Fri Oct 30 10:44:54 2020 pg_twophase
Dir 1 128 Fri Oct 30 10:44:54 2020 pg_xact
Dir 1 0 Fri Oct 30 10:44:54 2020 pg_commit_ts
Dir 1 256 Fri Oct 30 10:44:54 2020 pg_multixact
Dir 1 0 Fri Oct 30 10:44:54 2020 pg_csnlog
Dir 1 512 Fri Oct 30 10:44:59 2020 polar_fullpage
Dir 1 0 Fri Oct 30 10:45:01 2020 pg_replslot
File 1 226 Fri Oct 30 10:45:01 2020 polar_non_exclusive_backup_label
total 8192 (unit: 512Bytes)
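Checking the listing by eye is easy to get wrong when only some directories were copied. The sketch below reads the output of `pfs -C disk ls` on standard input and reports each expected directory that is missing. The expected list is an assumption taken from the listings in this article (the directories present right after polar-initdb.sh runs); adjust it if your version creates a different set. `check_pfs_listing` is a hypothetical name.

```shell
# Hypothetical helper: reads `pfs -C disk ls` output on stdin.
# Expected directories are taken from the listings in this article (an assumption).
check_pfs_listing() {
  listing=$(cat)
  rc=0
  for d in base global pg_wal pg_xact pg_tblspc pg_logindex pg_twophase \
           pg_commit_ts pg_multixact pg_csnlog; do
    # a match is a "Dir" line whose last field is the directory name
    if ! printf '%s\n' "$listing" |
         awk -v d="$d" '$1 == "Dir" && $NF == d { found = 1 } END { exit !found }'; then
      echo "missing: $d"
      rc=1
    fi
  done
  return $rc
}
```

Usage: `sudo /usr/local/bin/pfs -C disk ls "$PFSDIR" | check_pfs_listing`. Lines that do not start with `Dir`, such as the `[PFS_TRACE_LOG]` messages, are ignored.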

Note: if PFS reports errors, check the PFS runtime logs:

/var/log/pfsd-sdb.log
/var/log/pfsd-sdb/pfsd.log

6. Initialize PolarDB-O

su - polardb
initdb -D $PGDATA -E UTF8 --locale=C -U polardb

-E sets the database encoding, --locale sets the locale, and -U sets the name of the initial superuser. Run initdb --help to see the other options.

7. Initialize the PFS Data

sudo /usr/local/polardb_o_current/bin/polar-initdb.sh $PGDATA/ $PFSDIR/ disk

This step copies PolarDB's initial data files from $PGDATA into the $PFSDIR file system.

Note: before starting the database, verify that the files were copied to /sdb/data:

sudo /usr/local/bin/pfs -C disk ls $PFSDIR
[PFS_TRACE_LOG] [19726 ] trace_init notify_sock_name: var/run/polartrace/polartrace.sock, 11
[PFS_TRACE_LOG] [19726 ] pfs trace start flush thread [140025597523712]
Dir 1 640 Tue Nov 3 11:00:10 2020 base
Dir 1 9344 Tue Nov 3 11:00:14 2020 global
Dir 1 0 Tue Nov 3 11:00:14 2020 pg_tblspc
Dir 1 256 Tue Nov 3 11:00:17 2020 pg_wal
Dir 1 128 Tue Nov 3 11:00:17 2020 pg_logindex
Dir 1 0 Tue Nov 3 11:00:18 2020 pg_twophase
Dir 1 128 Tue Nov 3 11:00:18 2020 pg_xact
Dir 1 0 Tue Nov 3 11:00:18 2020 pg_commit_ts
Dir 1 256 Tue Nov 3 11:00:19 2020 pg_multixact
Dir 1 0 Tue Nov 3 11:00:19 2020 pg_csnlog
total 0 (unit: 512Bytes)

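8. Edit the PolarDB-O Configuration File

After the cluster is initialized, postgresql.conf contains only default settings. Adjust them to your needs; the parameters most commonly changed are:

listen_addresses = '*'             # accept connections on all addresses
port = 5432                        # listening port (referred to below as $port)
max_connections = 2048             # maximum number of connections
unix_socket_directories = '.'      # directory for the Unix socket file
timezone = 'UTC-8'                 # time zone
log_timezone = 'UTC-8'             # time zone used in the logs
log_destination = 'csvlog'         # log file format
logging_collector = on
log_directory = 'polardb_log'      # log directory
polar_enable_shared_storage_mode=on
polar_hostid=1
polar_datadir='/sdb/data/'         # PFS directory
polar_disk_name='sdb'              # PFS device name
polar_storage_cluster_name=disk    # PFS device type
wal_sender_timeout=30min           # WAL sender timeout while the standby is being initialized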

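If the database was started before postgresql.conf was edited, check whether $PGDATA/polar_node_static.conf exists; if it does, delete it before starting the database. From then on, whenever you change polar_datadir, polar_disk_name, or polar_hostid in postgresql.conf, delete $PGDATA/polar_node_static.conf and restart the database so that the new values take effect.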
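Before deleting polar_node_static.conf and restarting, it can help to confirm which value will actually be used, since PostgreSQL takes the last occurrence of a parameter in postgresql.conf. `pgconf_get` below is a hypothetical awk-based sketch: it strips `#` comments, accepts `name = value`, `name=value`, and `name value`, and removes surrounding single quotes. It does not handle `include` directives or `#` inside quoted values.

```shell
# Hypothetical helper: print the effective (last) value of parameter $2 in file $1.
# Returns non-zero if the parameter is not set in the file.
pgconf_get() {
  awk -v key="$2" '
    {
      line = $0
      sub(/#.*/, "", line)
      # the name must be followed by a blank or "=" so polar_hostid does not match polar_hostid_x
      if (match(line, "^[ \t]*" key "[ \t=]")) {
        val = substr(line, RSTART + RLENGTH - 1)
        sub(/^[ \t]*=?[ \t]*/, "", val)
        sub(/[ \t]+$/, "", val)
        sub(/^'\''/, "", val)
        sub(/'\''$/, "", val)
        last = val
        found = 1
      }
    }
    END {
      if (found) print last
      else exit 1
    }
  ' "$1"
}
```

For example, `pgconf_get "$PGDATA/postgresql.conf" polar_hostid` prints `1` for the configuration above.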

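9. Edit the pg_hba.conf Access Control File

To let other machines connect to PolarDB, you also need to edit the access control file, pg_hba.conf. Typically all IPv4 addresses are allowed; you can restrict access to specific IPs according to your security requirements.

Append the following to the end of the file:

vi $PGDATA/pg_hba.conf
host all all 0.0.0.0/0 md5
host replication all 0.0.0.0/0 md5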
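If you script this step, appending blindly duplicates entries every time the script is re-run. A minimal idempotent sketch (the helper name `append_once` is illustrative) only appends a line when an identical line is not already present:

```shell
# Illustrative helper: append line $2 to file $1 unless an identical line exists.
append_once() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

Usage: `append_once "$PGDATA/pg_hba.conf" 'host all all 0.0.0.0/0 md5'`. If the database is already running, `pg_ctl reload -D $PGDATA` makes it re-read pg_hba.conf.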

10. Start the Database

pg_ctl start -D $PGDATA -l /tmp/logfile
tail -f /tmp/logfile       # follow the startup log
pg_ctl stop -D $PGDATA     # stops the database; do not run it now

11. Check the Processes

After PolarDB-O starts, it creates postmaster.pid in the data directory. The first line of that file is the PID of the PolarDB-O daemon (postmaster), and ps -ef | grep <daemon PID> lists all PolarDB-O processes. Common auxiliary processes include:

/usr/local/polardb_o_current/bin/polar-postgres -D data: the PolarDB-O daemon.
postgres: logger: writes the log files.
postgres: checkpointer: performs periodic checkpoints.
postgres: background writer: periodically flushes dirty pages.
postgres: walwriter: periodically flushes WAL to disk.
postgres: autovacuum launcher: schedules automatic vacuuming.
postgres: stats collector: collects statistics.
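The check above (read the first line of postmaster.pid, then look for that PID) can be sketched as two small helpers. `postmaster_pid` and `filter_polardb_procs` are hypothetical names. The filter assumes the standard `ps -ef` column order (UID, PID, PPID, ...) and keeps the header, the daemon itself, and its direct children, which is where the auxiliary processes listed above run.

```shell
# Hypothetical helpers for the process check.
# postmaster_pid: first line of postmaster.pid in the given data directory.
postmaster_pid() {
  head -n 1 "${1:-${PGDATA:-.}}/postmaster.pid"
}

# filter_polardb_procs: reads `ps -ef` output on stdin and keeps the header,
# the process whose PID is $1, and every process whose PPID is $1.
filter_polardb_procs() {
  awk -v p="$1" 'NR == 1 || $2 == p || $3 == p'
}
```

Usage: `ps -ef | filter_polardb_procs "$(postmaster_pid "$PGDATA")"`.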

12. Test the Connection

psql -h$PGDATA -p$PGPORT
psql -h$PGDATA -p$PGPORT -c"select version()"    # check the version
------------------------------------------------------------------
PostgreSQL 11.2 (POLARDB Database Compatible with Oracle 11.2.9)
(1 row)

The output shows that PolarDB-O is built on the PostgreSQL 11.2 kernel.

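III

Primary/Standby High-Availability Deployment

1. Initialize the Primary Node

Simply complete the single-node deployment described in Section II.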

2. Create a Replication User on the Primary

psql -h$PGDATA -p$PGPORT -c"create user replicator password '<replicator password>' superuser;"
# in this walkthrough the password is simply replicator:
psql -h$PGDATA -p$PGPORT -c"create user replicator password 'replicator' superuser;"

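3. Initialize the Standby Node

1) Installation

Install and configure the hardware and software environment: complete the pre-installation preparation in Section I and steps 1-5 of Section II.

2) Initialize the standby directories

polar_basebackup -h 192.168.238.130 -p 5432 -U replicator -D $PGDATA \
  --polardata=$PFSDIR --polar_storage_cluster_name=disk \
  --polar_disk_name=$PFSDISK --polar_host_id=2 \
  -X stream --progress --write-recovery-conf -v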

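The options are:

-h: IP address of the primary.

-p: port of the primary PolarDB instance.

-U: user to connect as; here, the replicator user created above.

-D: data directory of the standby.

--polardata: path of the data directory on PFS.

--polar_storage_cluster_name: storage cluster name of the PolarDB data directory.

--polar_disk_name: disk name (disk home) of the PolarDB data directory.

--polar_host_id: any value, as long as it differs from the primary's.

-X: how WAL is fetched; stream streams it over a separate connection while the backup runs.

--progress: show progress.

--write-recovery-conf: write a recovery.conf file.

-v: verbose output.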

If the copy fails or times out, increase wal_sender_timeout in the primary's postgresql.conf.

3) Edit postgresql.conf

vi $PGDATA/postgresql.conf

Change polar_hostid = 1 (inherited from the primary) to polar_hostid = 2.
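Step 8 of Section II notes that every change to polar_hostid requires deleting $PGDATA/polar_node_static.conf before the restart. The sketch below makes both changes in one step so the second is not forgotten. The helper name `set_polar_hostid` is hypothetical, and the sketch relies on `sed -i` as provided by GNU sed on CentOS; if the static file does not exist, `rm -f` simply does nothing.

```shell
# Hypothetical helper: set polar_hostid in <datadir>/postgresql.conf and remove
# polar_node_static.conf so the new value takes effect on the next start.
set_polar_hostid() {
  datadir=$1
  id=$2
  conf="$datadir/postgresql.conf"
  if grep -q '^[[:space:]]*polar_hostid[[:space:]]*=' "$conf"; then
    # rewrite every existing polar_hostid line (GNU sed -i)
    sed -i "s/^[[:space:]]*polar_hostid[[:space:]]*=.*/polar_hostid = $id/" "$conf"
  else
    printf 'polar_hostid = %s\n' "$id" >> "$conf"
  fi
  rm -f "$datadir/polar_node_static.conf"
}
```

Usage on the standby: `set_polar_hostid "$PGDATA" 2`, then start it as shown in the next step.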

4) Start the standby and test the connection

pg_ctl -c start -D $PGDATA -l /tmp/logfile
psql -h$PGDATA -p$PGPORT

5) Check streaming replication on the primary

Run the following on the primary to confirm that streaming replication is established:

psql -h$PGDATA -p$PGPORT -c"select * from pg_stat_replication;"

Expanded display is on.
polardb=# select * from pg_stat_replication;
-[ RECORD 1 ]----+---------------------------------
pid              | 22449
usesysid         | 16384
usename          | replicator
application_name | standby_3232296579_5432
client_addr      | 192.168.238.131
client_hostname  |
client_port      | 60866
backend_start    | 03-NOV-20 13:58:17.811323 +08:00
backend_xmin     |
state            | streaming
sent_lsn         | 0/41BD1648
write_lsn        | 0/41BD1648
flush_lsn        | 0/41BD1648
replay_lsn       | 0/41BD1648
write_lag        | 00:00:00.002793
flush_lag        | 00:00:00.002801
replay_lag       | 00:00:00.003894
sync_priority    | 1
sync_state       | sync
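To run this check from a script rather than by reading the expanded output, you can ask psql for unaligned, tuples-only rows (`-A -t`, whose default field separator is `|`) and test them. `check_replication` is a hypothetical helper: with no argument it only requires a row in the `streaming` state, and with an argument such as `sync` it also requires that sync_state. Pass `sync` only when you expect synchronous replication to be in effect.

```shell
# Hypothetical helper: reads "client_addr|state|sync_state" rows on stdin.
# Succeeds if at least one row is streaming (and, if $1 is given, has sync_state $1).
check_replication() {
  awk -F'|' -v want="${1:-}" '
    $2 == "streaming" && (want == "" || $3 == want) { ok = 1; print "OK: " $1 " " $2 " " $3 }
    END { exit !ok }
  '
}
```

Usage: `psql -h"$PGDATA" -p"$PGPORT" -Atc "select client_addr, state, sync_state from pg_stat_replication" | check_replication sync`.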

Create a table to test whether the primary and standby are in sync:

polardb=# create table t1( id int);
polardb=# insert into t1 values(1);
INSERT 0 1
polardb=# insert into t1 values(2);
INSERT 0 1
polardb=# select * from t1;
 id
----
  1
  2
(2 rows)

4. Deploy Cluster Manager

1) Configure SSH

On the CM node, run the following as root:

ssh-keygen -t rsa
ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.238.131    # standby node IP
ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.238.130    # primary node IP

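Disable the firewall:

systemctl disable firewalld.service
systemctl stop firewalld.service

Ports used by the services (open them instead if you keep the firewall enabled):

PolarDB: 5432/tcp
Cluster Manager: 5500,5501/tcp

Note that this walkthrough configures Cluster Manager on ports 5000 and 5001 (see polardb_cluster_manager.conf below), so open whichever ports your configuration actually uses.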

Install the Cluster Manager package on the CM node:

rpm -ivh polardb-clustermanager-1.0.0-20200904155748.x86_64.rpm

The installation provides three files:

/usr/local/polardb_cluster_manager/bin/polardb-cluster-manager
/usr/local/polardb_cluster_manager/bin/polardb_cluster_manager_control.py
/usr/local/polardb_cluster_manager/bin/supervisor.py

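① On the primary host, create the aurora probe user

/usr/local/polardb_o_current/bin/createuser -p $PGPORT -h $PGDATA --login aurora -P -s

Enter aurora as the password when prompted (-P makes createuser prompt for it).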

② On the CM host, create the configuration file:

Pitfall 3:

Note: make sure there are no stray spaces inside the double-quoted values, and that the user names and passwords match the probe and replication users created in the earlier steps.

mkdir -p /root/polardb_cluster_manager/conf
vi /root/polardb_cluster_manager/conf/polardb_cluster_manager.conf
{
  "work_mode":"PolarPure",
  "consensus":{ "port":5001 },
  "account_info":{
    "aurora_user":"aurora",
    "aurora_password":"aurora",
    "replica_user":"replicator",
    "replica_password":"replicator"
  },
  "cluster_info":{ "port":5000 }
}

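consensus: port of the built-in consensus service; it is not exposed externally.

cluster_info: port of the CM external API service.

aurora_user/aurora_password: account and password of the probe user created above; it requires superuser privileges.

replica_user/replica_password: account and password of the replication user created above; replication or superuser privileges are sufficient.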
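Pitfall 3 is easy to hit when editing JSON by hand. One option, sketched below, is to generate the file with printf so that no stray spaces end up inside the quoted values. `write_cm_conf` is a hypothetical helper; the ports are the ones used in this article, and the sketch does not JSON-escape its arguments, so avoid `"` and `\` in passwords (or escape them yourself).

```shell
# Hypothetical helper: write the CM config as compact JSON.
# $1 = CM base directory, $2/$3 = probe user/password, $4/$5 = replication user/password.
# Ports 5001 (consensus) and 5000 (cluster_info) follow this article.
write_cm_conf() {
  mkdir -p "$1/conf"
  printf '{"work_mode":"PolarPure","consensus":{"port":5001},"account_info":{"aurora_user":"%s","aurora_password":"%s","replica_user":"%s","replica_password":"%s"},"cluster_info":{"port":5000}}\n' \
    "$2" "$3" "$4" "$5" > "$1/conf/polardb_cluster_manager.conf"
}
```

Usage: `write_cm_conf /root/polardb_cluster_manager aurora aurora replicator replicator`. If python3 is installed, `python3 -m json.tool < /root/polardb_cluster_manager/conf/polardb_cluster_manager.conf` is one way to confirm that the file parses.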

5. Start the CM Service

1) Start the service

/usr/local/polardb_cluster_manager/bin/polardb_cluster_manager_control.py /root/polardb_cluster_manager/ start

2) Check the service status

/usr/local/polardb_cluster_manager/bin/polardb_cluster_manager_control.py /root/polardb_cluster_manager/ status

The expected output is:

PolarDB ClusterManager Work ON /root/polardb_cluster_manager/ IS RUNNING

3) Stop the service

To stop the service, run the following (not needed now):

/usr/local/polardb_cluster_manager/bin/polardb_cluster_manager_control.py /root/polardb_cluster_manager/ stop

6. Configure the CM Cluster

1) Register the primary on the CM node

curl -H "Content-Type:application/json" -X POST --data "{\"user\":\"polardb\",\"dataPath\":\"/opt/polardb/data\",\"ip\":\"192.168.238.130\",\"port\":\"5432\",\"type\":\"Master\",\"storage_type\":\"local_ssd\",\"sync\":\"SYNC\"}" http://127.0.0.1:5000/v1/add_ins
{"code":200}

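A return code of 200 means success; 500 means an error.

user: the OS account that runs the database, polardb.

dataPath: data directory of the database, i.e. $PGDATA.

ip/port: physical IP address and port of the database.

type: database role; Master means the primary.

sync: SYNC means synchronous replication; with asynchronous replication, a switchover may lose data.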
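Hand-escaping the JSON in these curl calls is where typos creep in (see pitfall 4 below). A small sketch that builds the add_ins body with printf, so the same payload can be reused for remove_ins; `cm_ins_payload` is a hypothetical name, and storage_type and sync are fixed to the values used in this article.

```shell
# Hypothetical helper: JSON body for add_ins / remove_ins.
# $1 = node IP, $2 = Master or Standby, $3 = OS user (default polardb),
# $4 = data directory (default /opt/polardb/data), $5 = port (default 5432).
cm_ins_payload() {
  printf '{"user":"%s","dataPath":"%s","ip":"%s","port":"%s","type":"%s","storage_type":"local_ssd","sync":"SYNC"}\n' \
    "${3:-polardb}" "${4:-/opt/polardb/data}" "$1" "${5:-5432}" "$2"
}
```

Usage: `curl -H "Content-Type:application/json" -X POST --data "$(cm_ins_payload 192.168.238.130 Master)" http://127.0.0.1:5000/v1/add_ins`; the standby uses `cm_ins_payload 192.168.238.131 Standby`.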

2) Register the standby on the CM node

curl -H "Content-Type:application/json" -X POST --data "{\"user\":\"polardb\",\"dataPath\":\"/opt/polardb/data\",\"ip\":\"192.168.238.131\",\"port\":\"5432\",\"type\":\"Standby\",\"storage_type\":\"local_ssd\",\"sync\":\"SYNC\"}" http://127.0.0.1:5000/v1/add_ins
{"code":200}

A return code of 200 means success; 500 means an error.

type: Standby marks the standby. Adding a standby for the first time restarts it.

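3) Add the VIP

In high-availability mode, the host serving the database has a virtual IP (VIP) in addition to its real IP, and either address reaches the host. All application connection strings point at the VIP, so when the server fails and can no longer serve requests, the VIP is moved dynamically to the standby host.

curl -H "Content-Type:application/json" -X POST --data "{\"vip\":\"192.168.238.133\",\"mask\":\"255.255.255.0\",\"interface\":\"ens33\"} " http://127.0.0.1:5000/v1/add_vip
{"code":200}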

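A return code of 200 means success; 500 means an error.

vip: the virtual IP address, implemented as a Linux virtual IP. It must be on the same subnet as the primary and standby (behind the same switch) and must not conflict with any other virtual or physical address.

mask: subnet mask of the VIP.

interface: the network interface that carries the physical IP of the primary and standby.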
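Because the VIP must sit in the same subnet as the primary and standby, a quick arithmetic check before calling add_vip can save a failed switchover later. The sketch converts dotted IPv4 addresses to 32-bit integers and compares their network parts under the given mask; `ip_to_int`, `same_subnet`, and `cm_vip_payload` are hypothetical helper names. It checks only the subnet, not whether the address is already in use.

```shell
# Hypothetical helpers for the VIP step.
# ip_to_int: dotted-quad IPv4 address -> 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1        # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet: succeeds if $1 and $2 share the network part under netmask $3.
same_subnet() {
  m=$(ip_to_int "$3")
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  [ $(( a & m )) -eq $(( b & m )) ]
}

# cm_vip_payload: JSON body for add_vip ($1 = VIP, $2 = netmask, $3 = interface).
cm_vip_payload() {
  printf '{"vip":"%s","mask":"%s","interface":"%s"}\n' "$1" "$2" "$3"
}
```

Usage: `same_subnet 192.168.238.133 192.168.238.130 255.255.255.0 && curl -H "Content-Type:application/json" -X POST --data "$(cm_vip_payload 192.168.238.133 255.255.255.0 ens33)" http://127.0.0.1:5000/v1/add_vip`.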

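Pitfall 4:

Double-check the parameters when registering the primary and standby with curl. If you need to redo a registration, the remove_ins endpoint clears the old entry.

For example, to remove the master node entry: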

curl -H "Content-Type:application/json" -X POST --data "{\"user\":\"polardb\",\"dataPath\":\"/opt/polardb/data\",\"ip\":\"192.168.238.130\",\"port\":\"5432\",\"type\":\"Master\",\"storage_type\":\"local_ssd\",\"sync\":\"SYNC\"}" http://127.0.0.1:5000/v1/remove_ins

Note:

CM logs are in /root/polardb_cluster_manager. When something fails, check the logs there first (switchover logs are written there too).

Pitfall 5:

If CM shuts down abnormally, its PID file (supervisor_pid) is left behind and must be deleted manually before the next start.
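Deleting the PID file by hand is safe only if the process it names is really gone. The sketch below removes a PID file only when no process with that PID exists; it assumes the file's first line is a PID, and the helper name is hypothetical. The article does not say where supervisor_pid lives, so locate it first (for example with `find /root/polardb_cluster_manager -name supervisor_pid`). A PID can also be reused by an unrelated process, so treat a "still running" result as a prompt to look, not as proof that CM is alive.

```shell
# Hypothetical helper: remove PID file $1 only if its process no longer exists.
remove_stale_pidfile() {
  [ -f "$1" ] || return 0
  pid=$(head -n 1 "$1")
  # kill -0 probes without sending a signal; /proc covers the EPERM case for non-root users
  if [ -n "$pid" ] && { kill -0 "$pid" 2>/dev/null || [ -d "/proc/$pid" ]; }; then
    echo "process $pid is still running; leaving $1 in place" >&2
    return 1
  fi
  rm -f "$1"
}
```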

4) Check the cluster status

curl -H "Content-Type:application/json" 'http://127.0.0.1:5000/v1/status?type=visual'
{"phase": "RunningPhase","master": {"endpoint": "192.168.238.130:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 11:37:07"},"standby": [{"endpoint": "192.168.238.131:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 11:37:40","sync_status": "SYNC"}],"vip": [{"vip": "192.168.238.133","interface": "ens33","mask": "255.255.255.0","endpoint": "192.168.238.130:5432"}]}

A phase of RUNNING means the nodes are healthy.

5) Check the replication status on the primary

select * from pg_stat_replication;
Expanded display is on.
polardb=# select * from pg_stat_replication;
-[ RECORD 1 ]----+---------------------------------
pid              | 22449
usesysid         | 16384
usename          | replicator
application_name | standby_3232296579_5432
client_addr      | 192.168.238.131
client_hostname  |
client_port      | 60866
backend_start    | 03-NOV-20 13:58:17.811323 +08:00
backend_xmin     |
state            | streaming
sent_lsn         | 0/41BD1648
write_lsn        | 0/41BD1648
flush_lsn        | 0/41BD1648
replay_lsn       | 0/41BD1648
write_lag        | 00:00:00.002793
flush_lag        | 00:00:00.002801
replay_lag       | 00:00:00.003894
sync_priority    | 1
sync_state       | sync

Insert a new row to verify:

polardb=# insert into t1 values (3);
INSERT 0 1
polardb=# select * from t1;
 id
----
  1
  2
  3
(3 rows)

7. Switchover

1) Manual switchover

curl -H "Content-Type:application/json" -X POST --data "{\"from\":\"192.168.238.130:5432\",\"to\":\"192.168.238.131:5432\"}" http://127.0.0.1:5000/v1/switchover
{"code":200}

A return code of 200 means success; 500 means an error.

Run the following to verify that the manual switchover succeeded:

curl -H "Content-Type:application/json" 'http://127.0.0.1:5000/v1/status?type=visual'
{"phase": "RunningPhase","master": {"endpoint": "192.168.238.131:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 11:45:15","sync_status": "SYNC"},"standby": [{"endpoint": "192.168.238.130:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 11:45:19","sync_status": "SYNC"}],"vip": [{"vip": "192.168.238.133","interface": "ens33","mask": "255.255.255.0","endpoint": "192.168.238.131:5432"}]}

The master and standby IP addresses have swapped, and the VIP has automatically moved to the former standby.
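Instead of re-running the status call by hand, a polling loop can wait until CM reports `RunningPhase` with the expected master. This is a sketch only: it assumes the field layout shown in the outputs above (the top-level `phase` comes first, and the master's `endpoint` is the first `endpoint` in the reply), it uses grep and sed rather than a JSON parser, and the names `CM_URL`, `cm_status`, `first_field`, and `wait_for_master` are hypothetical.

```shell
# Hypothetical names throughout; CM_URL defaults to the endpoint used in this article.
CM_URL=${CM_URL:-http://127.0.0.1:5000}

cm_status() {
  curl -s -H "Content-Type:application/json" "$CM_URL/v1/status?type=visual"
}

# first_field KEY: first string value of KEY in the JSON on stdin (text matching, not parsing)
first_field() {
  grep -o "\"$1\": *\"[^\"]*\"" | head -n 1 | sed 's/^[^:]*: *"//; s/"$//'
}

# wait_for_master ENDPOINT [TIMEOUT_SECONDS]: poll every 2 s until CM reports
# RunningPhase with ENDPOINT as master; non-zero exit on timeout.
wait_for_master() {
  want=$1
  left=${2:-120}
  while [ "$left" -gt 0 ]; do
    s=$(cm_status) || s=
    if [ "$(printf '%s\n' "$s" | first_field phase)" = RunningPhase ] &&
       [ "$(printf '%s\n' "$s" | first_field endpoint)" = "$want" ]; then
      echo "master is now $want"
      return 0
    fi
    sleep 2
    left=$((left - 2))
  done
  echo "timed out waiting for master $want" >&2
  return 1
}
```

Usage after the switchover call: `wait_for_master 192.168.238.131:5432 120`.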

Verify:

select * from pg_stat_replication;
pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | state | sent_lsn | write_lsn | flush_lsn | replay_lsn | write_lag | flush_lag | replay_lag | sync_priority | sync_state
------+----------+------------+-------------------------+-----------------+-----------------+-------------+----------------------------------+--------------+-----------+------------+------------+------------+------------+-----------------+-----------------+-----------------+---------------+------------
7189 | 16384 | replicator | standby_3232296578_5432 | 192.168.238.130 | | 47952 | 03-NOV-20 11:45:15.677601 +08:00 | | streaming | 0/419D2FE8 | 0/419D2FE8 | 0/419D2FE8 | 0/419D2FE8 | 00:00:00.002503 | 00:00:00.002511 | 00:00:00.003535 | 1 | sync
(1 row)
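The application_name values in these outputs (standby_3232296579_5432 while 131 was the standby, standby_3232296578_5432 now that 130 is) appear to encode the standby's IPv4 address as a 32-bit integer followed by the port: 192·2^24 + 168·2^16 + 238·2^8 + 130 = 3232296578. That is an inference from these two outputs, not documented behavior, but it makes the row easy to decode. The helper names below are hypothetical.

```shell
# Inferred (undocumented) naming: standby_<IPv4 as 32-bit integer>_<port>.
# int_to_ip: 32-bit integer -> dotted-quad IPv4 address.
int_to_ip() {
  n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# standby_name_to_ip: extract and decode the integer from an application_name.
standby_name_to_ip() {
  n=${1#standby_}   # drop the "standby_" prefix
  n=${n%_*}         # drop the trailing "_<port>"
  int_to_ip "$n"
}
```

For example, `standby_name_to_ip standby_3232296578_5432` prints 192.168.238.130.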

The VIP has also moved to the former standby:

ifconfig
ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.238.131 netmask 255.255.255.0 broadcast 192.168.238.255
        inet6 fe80::be05:6c57:dd7c:6856 prefixlen 64 scopeid 0x20<link>
        inet6 fe80::3bff:7a9d:519:41ab prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:58:fa:6a txqueuelen 1000 (Ethernet)
        RX packets 2073965 bytes 3100280275 (2.8 GiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 80015 bytes 81406434 (77.6 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:5432: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.238.133 netmask 255.255.255.0 broadcast 192.168.238.255
        ether 00:0c:29:58:fa:6a txqueuelen 1000 (Ethernet)
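To check for the VIP without reading ifconfig output by eye, `ip -4 -o addr show` prints one address per line with the address and prefix length in the fourth field. The hypothetical helper below reads that output and succeeds if the VIP is configured on the host.

```shell
# Hypothetical helper: reads `ip -4 -o addr show` output on stdin and succeeds
# if address $1 appears (field 4 looks like 192.168.238.133/24).
has_vip() {
  awk -v vip="$1" '$3 == "inet" { split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

Usage: `ip -4 -o addr show | has_vip 192.168.238.133 && echo "VIP is on this host"`.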

2) Automatic failover

Stop the current primary on 131 to simulate a failure:

/usr/local/polardb_o_current/bin/pg_ctl stop -D $PGDATA -mi    # -m i: immediate shutdown

Check the switchover status:

curl -H "Content-Type:application/json" 'http://127.0.0.1:5000/v1/status?type=visual'

While failover is in progress:

curl -H "Content-Type:application/json" 'http://127.0.0.1:5000/v1/status?type=visual'
{"phase": "SwitchingPhase","master": {"endpoint": "192.168.238.131:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 11:45:15","sync_status": "SYNC"},"standby": [{"endpoint": "192.168.238.130:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 11:45:19","sync_status": "SYNC"}],"vip": [{"vip": "192.168.238.133","interface": "ens33","mask": "255.255.255.0","endpoint": "192.168.238.131:5432"}]}

After failover:

curl -H "Content-Type:application/json" 'http://127.0.0.1:5000/v1/status?type=visual'
{"phase": "RunningPhase","master": {"endpoint": "192.168.238.130:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 13:58:17","sync_status": "SYNC"},"standby": [{"endpoint": "192.168.238.131:5432","data_path": "/opt/polardb/data","user": "polardb","phase": "RUNNING","start_at": "2020-11-03 13:58:21","sync_status": "SYNC"}],"vip": [{"vip": "192.168.238.133","interface": "ens33","mask": "255.255.255.0","endpoint": "192.168.238.130:5432"}]}

Verify that the VIP moved automatically

The VIP is now on 130:

ifconfig
ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.238.130 netmask 255.255.255.0 broadcast 192.168.238.255
        inet6 fe80::9398:2419:9c94:b1b3 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:80:49:a0 txqueuelen 1000 (Ethernet)
        RX packets 390201 bytes 106430712 (101.5 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 2307269 bytes 5939759826 (5.5 GiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:5432: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.238.133 netmask 255.255.255.0 broadcast 192.168.238.255
        ether 00:0c:29:80:49:a0 txqueuelen 1000 (Ethernet)

[polardb@dbnode1 polardb]$ psql -h 192.168.238.133 -p 5432 -Ureplicator -dpolardb -c "select pg_is_in_recovery()";
Password for user replicator:
 pg_is_in_recovery
-------------------
 f
(1 row)

If the query returns f, the node behind the VIP is not in recovery (it is the primary), which means the VIP switched successfully.
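For repeated failover tests, the same check through the VIP can be wrapped in a function. This sketch assumes psql is on PATH and that the password is supplied non-interactively (for example via ~/.pgpass or PGPASSWORD); `vip_is_primary` is a hypothetical name, and the user and database default to the replicator/polardb values used above.

```shell
# Hypothetical helper: succeeds if the server reached via $1 is not in recovery.
# $1 = VIP, $2 = port (default 5432), $3 = user (default replicator).
vip_is_primary() {
  r=$(psql -h "$1" -p "${2:-5432}" -U "${3:-replicator}" -d polardb \
        -Atc 'select pg_is_in_recovery()') || return 1
  [ "$r" = f ]
}
```

Usage: `vip_is_primary 192.168.238.133 && echo "VIP points at the primary"`.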

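Summary

Installing PolarDB, including the cluster deployment, is fairly convenient. Some of the problems exposed during installation came from my unfamiliarity as a first-time user; others stem from how little documentation currently exists for PolarDB and its tools, so everyone is still crossing the river by feeling for the stones. I will run more tests and share our experience of migrating production systems later. Finally, best wishes to all Chinese domestic databases!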


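The largest IT cloud in China, Mobile IT Cloud: IaaS products, cloud management services, news, product trials, operations and promotion, cutting-edge technology, and featured articles.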