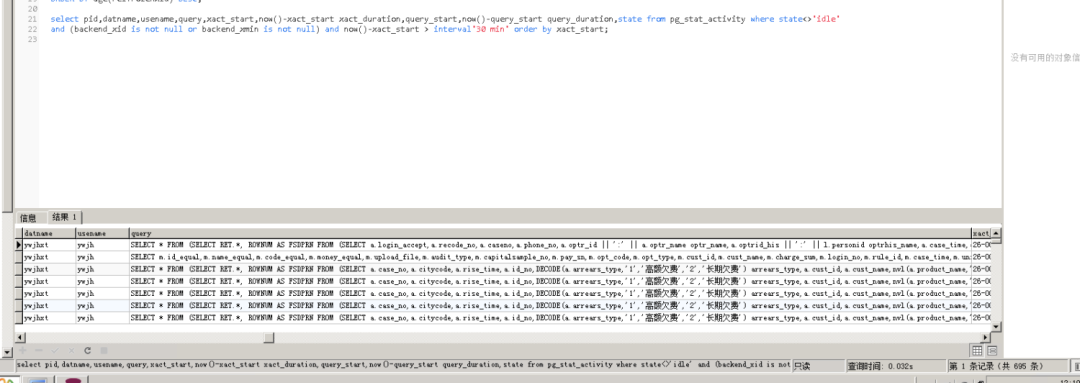

随着国产数据库Polardb的推广及应用,数据库故障开始增多,今天给大家分享一篇Polardb故障应急处理思路。10月下旬,应用反馈某模块登陆异常,查看数据库有大量进程active状态,持续时间超过30分钟,通过kill进程并重启应用无法恢复,通过与业务协商、尝试通过重启数据库实例恢复。

查看日志文件postgresql-10-26_024511.log,日志中存在以下日志:

ERROR: dsa_area could not attach to segment

涉及进程pid= 51789 53315 53316,其中53316和53315进程都退出了,日志如下:

53315 2020-10-26 03:07:11 UTC XX000 ERROR: dsa_area could not attach to segment

53316 2020-10-26 03:07:11 UTC XX000 ERROR: dsa_area could not attach to segment

......

53314 2020-10-26 03:07:11 UTC XX000 FATAL: cannot unpin a segment that is not pinned

54 2020-10-26 03:07:11 UTC 00000 LOG: background worker "parallel worker" (PID53316) exited withexit code 1

54 2020-10-26 03:07:11 UTC 00000 LOG: background worker "parallel worker" (PID53315) exitedwith exit code 1

而pid=51789的进程没有正常退出,很有可能是hang,并且影响到其他的进程。

……

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:07 UTC 00000 13 0 0 0 LOG: execute S_1: COMMIT

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:09 UTC 00000 43 0 0 0 LOG: statement: BEGIN

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:09 UTC 00000 59 1 0 40 LOG: execute <unnamed>: SELECTordersum,onestatus,twostatus,threestatus,fourstatus,archive FROM(……………… )

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:09 UTC 00000 22 0 0 0 LOG: execute S_1: COMMIT

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:11 UTC 00000 35 0 0 0 LOG: statement: BEGIN

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:11 UTC 00000 69701 1 0 170881 LOG: execute <unnamed>: SELECT count(1)FROM (SELECT ………………) XTABLE

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:11 UTC XX000 ERROR: dsa_area could not attach to segment

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:11 UTC XX000 STATEMENT: SELECT * FROM (SELECT RET.*, ROWNUM AS FSDPRN FROM(………………)WHERE FSDPRN BETWEEN 1 AND 10

51789 192.168.7.36(41423) ywxt ywxt 2020-10-26 03:07:11 UTC XX000 LOG: statement: SELECT * FROM (SELECT RET.*, ROWNUM AS FSDPRNFROM (………………) WHERE FSDPRN BETWEEN 1 AND 10

params:$1 = 'd6d9c702-8aa9-4e9b-8cc2-4d756b1adaa2', $2 = '202009', $3 ='HLW', $4 = '15255864202', $5 ='1a251789-9cc9-4e77-84cd-6b651da3be5a', $6 = '2020-09-14 11:06:18'

params:$1 = '40f12349-97cc-4945-94b1-e7fda75cc984', $2 = '202009', $3 ='HLW', $4 = '18326858272', $5 ='eaceb00f-9f78-4c6f-8cee-517898f0b200', $6 = '2020-09-15 15:16:40'

查过文档发现是属于11.3之前已知的问题:

Fix race conditions in management of dynamic shared memory (Thomas Munro)

These could lead to “dsa_area couldnot attach to segment” or “cannot unpin a segment thatis not pinned” errors.

if you just happen to hit PostgreSQL bugsuch as this one that was fixed in v11.3:

Once this problem occurs, your databasewill appear to be running, and can possibly be used to service somequeries... but most queries will hang, and eventually yourapplication servers will timeout causing application downtime.

通过重启实例恢复,由于重启过程中,实例无法正常停止,导致管控重启流程超时失败,通过手工修复拉起实例。手动流程如下:

将长时间运行的流程直接设置为中断

cat/root/.kube/config |grep client-certificate-data|awk '{print$2}'|base64 -d >/root/.kube/admin.crt

cat/root/.kube/config |grep client-key-data|awk '{print $2}'|base64 -d>/root/.kube/admin.key

KUBEAPISERVER=127.0.0.1

PPASCLUSTERNAME=polar-xxxxx

curl-k --cert root/.kube/admin.crt --key root/.kube/admin.keyhttps://$KUBEAPISERVER:6443/apis/ppas.polardb.aliyun.com/v1beta1/namespaces/default/ppasclusters/$PPASCLUSTERNAME| python -m json.tool > polar-single.yaml

vimpolar-single.yaml

找到clusterStatus:xxxxxx,改为clusterStatus:Interrupt,保存

curl-X PUT -H "Content-Type: application/json" -k --cert/root/.kube/admin.crt --key /root/.kube/admin.keyhttps://$KUBEAPISERVER:6443/apis/ppas.polardb.aliyun.com/v1beta1/namespaces/default/ppasclusters/$PPASCLUSTERNAME/status--data @polar-single.yaml

控制台查看集群列表,看是否进入到中断状态。

清理ins_lock文件

kubectlget ppascluster polar-xxxx -o yaml

找到status- masterCluster - rwHostIns - insId,这个值是实例ID。

在三个计算节点找以下路径:/data/polardb_ppas/{insId}/data,和直接进入容器查找文件效果一样。

找到ins_lock文件,删除。

将中断的集群进行重建

kubectledit ppascluster polar-xxxx

在annotations下面增加下面两项:

polarbox.interrupt.recover:"T"

polarbox.interrupt.previous.status:"RebuildingRW"

刷新控制台,查看集群状态进入重建中,等待重建完成

1、此问题是并行查询过程中触发,短期内通过关闭并行查询来规避,目前生产已经关闭并行。

2、Polardb应急手工拉起流程的熟悉。

3、相关工具配套的场景沉淀。