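Hadoop configuration on CentOS 7.

4 physical machines

96 vCPUs

512 GB of memory

2 TB of SSD storage

64 TB of SAS storage, attached directly as JBOD, with no RAID and no LVM.

These are the test results from the finished build. To give my colleagues a worked case study, I picked a Hadoop cluster that uses LVM and has not been specifically tuned as the comparison. Note that both test machines are physical machines with identical specs: 512 GB of memory and 96 vCPUs.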

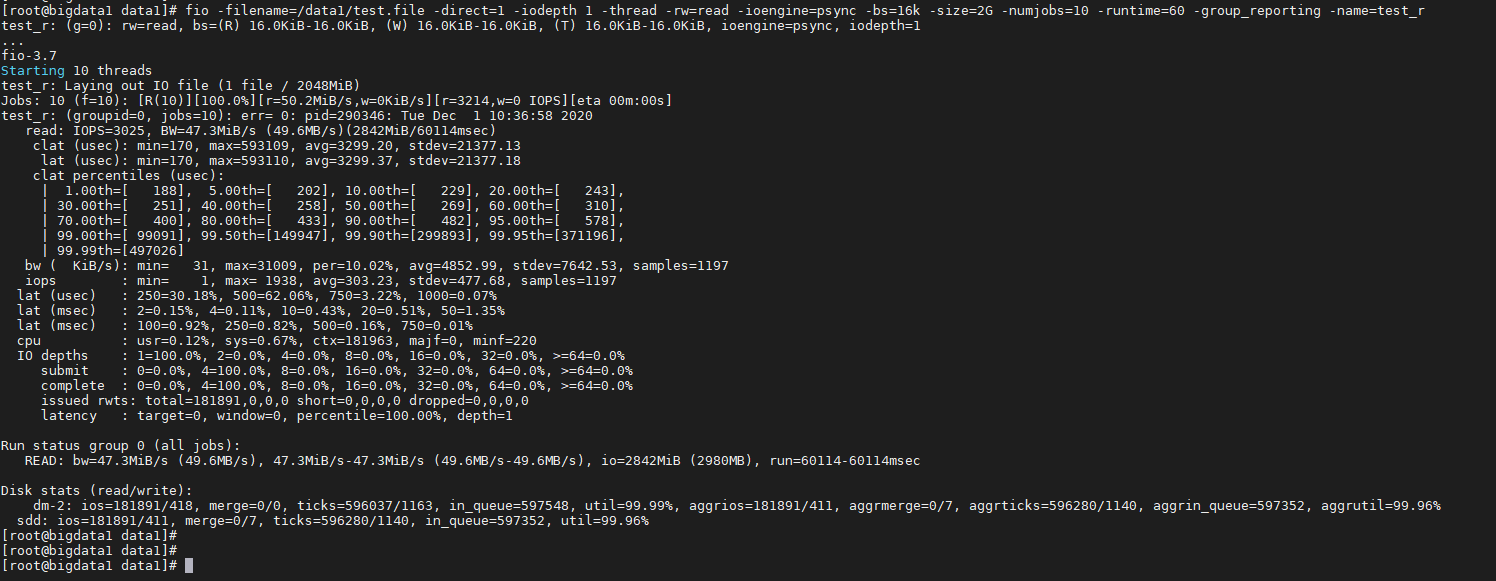

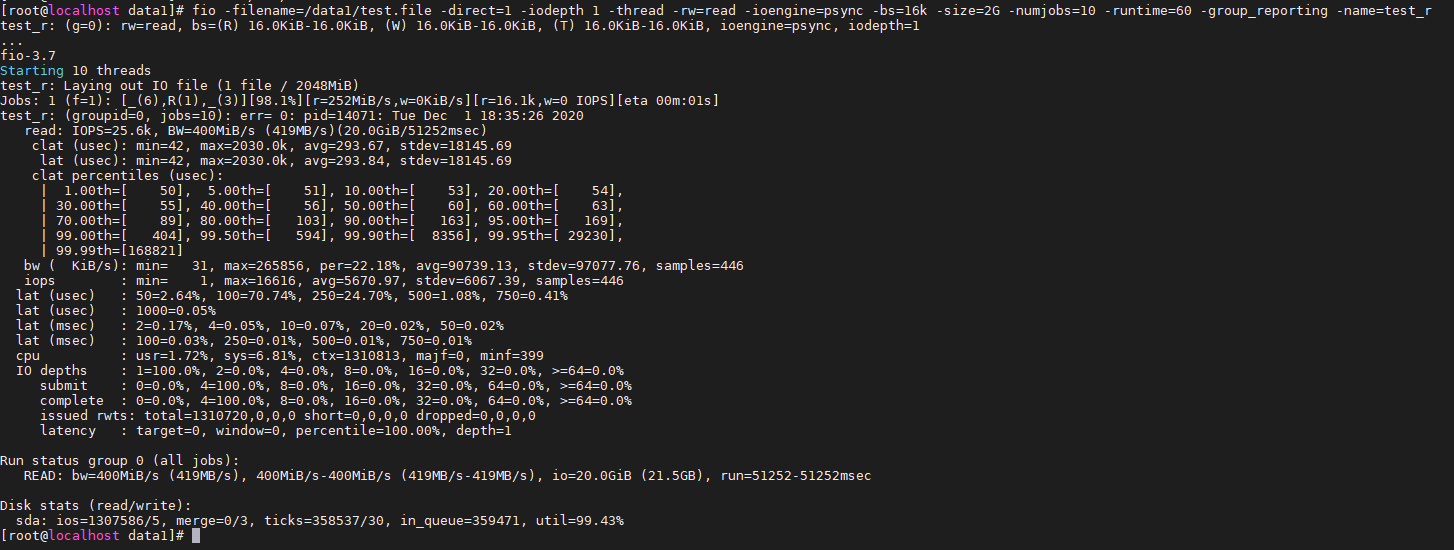

Sequential read:

fio -filename=/data1/test.file -direct=1 -iodepth 1 -thread -rw=read -ioengine=psync -bs=16k -size=2G -numjobs=10 -runtime=60 -group_reporting -name=test_r

47.3 MB/s (LVM) vs 419 MB/s (JBOD): the gap is obvious.

LVM machine

JBOD machine

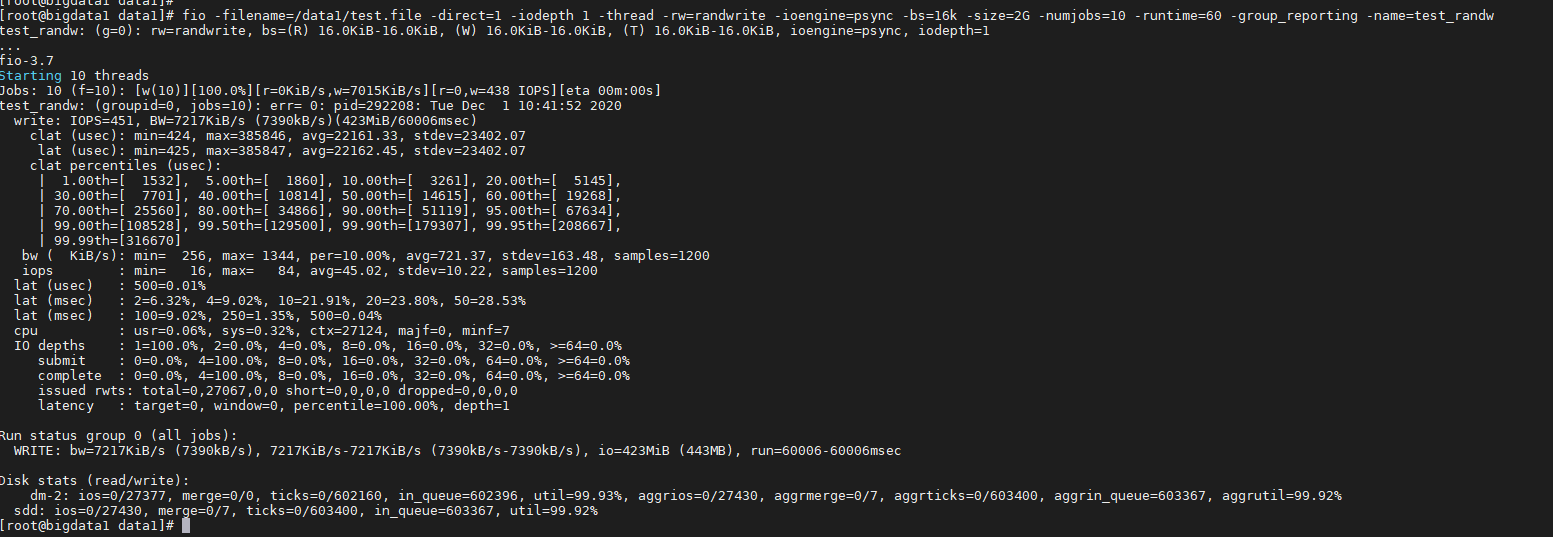

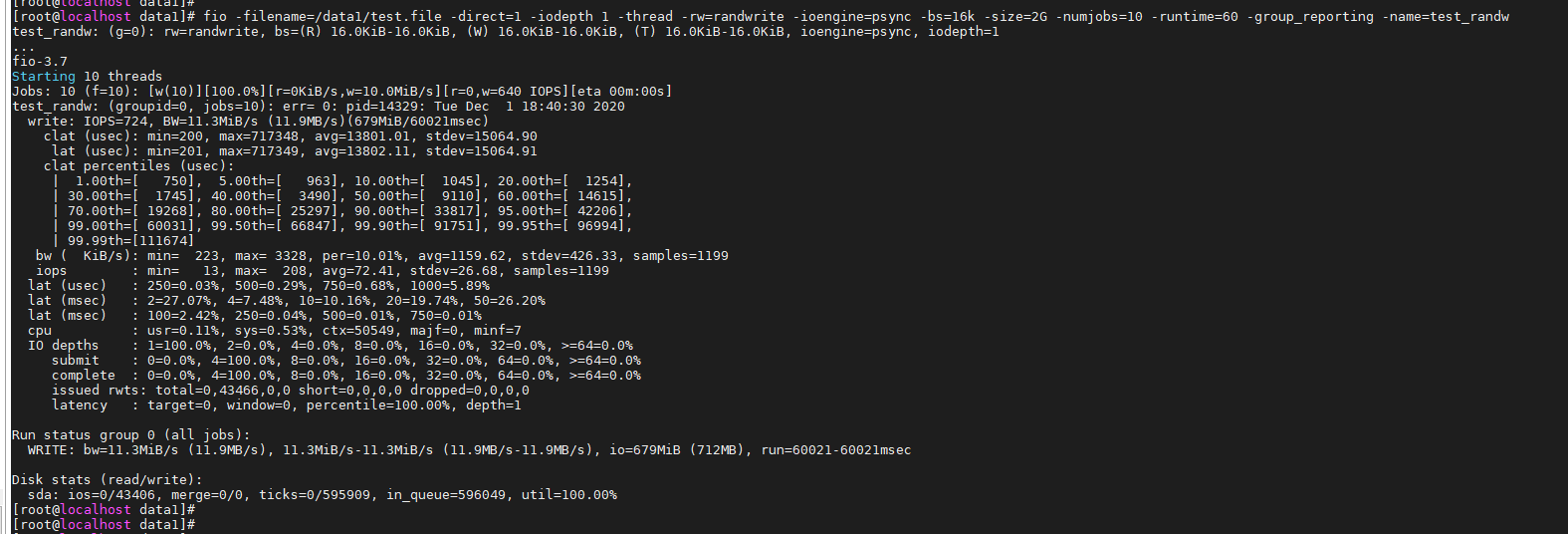

Random write:

fio -filename=/data1/test.file -direct=1 -iodepth 1 -thread -rw=randwrite -ioengine=psync -bs=16k -size=2G -numjobs=10 -runtime=60 -group_reporting -name=test_randw

7.3 MB/s (LVM) vs 11.3 MB/s (JBOD): LVM still trails.

LVM machine

JBOD machine

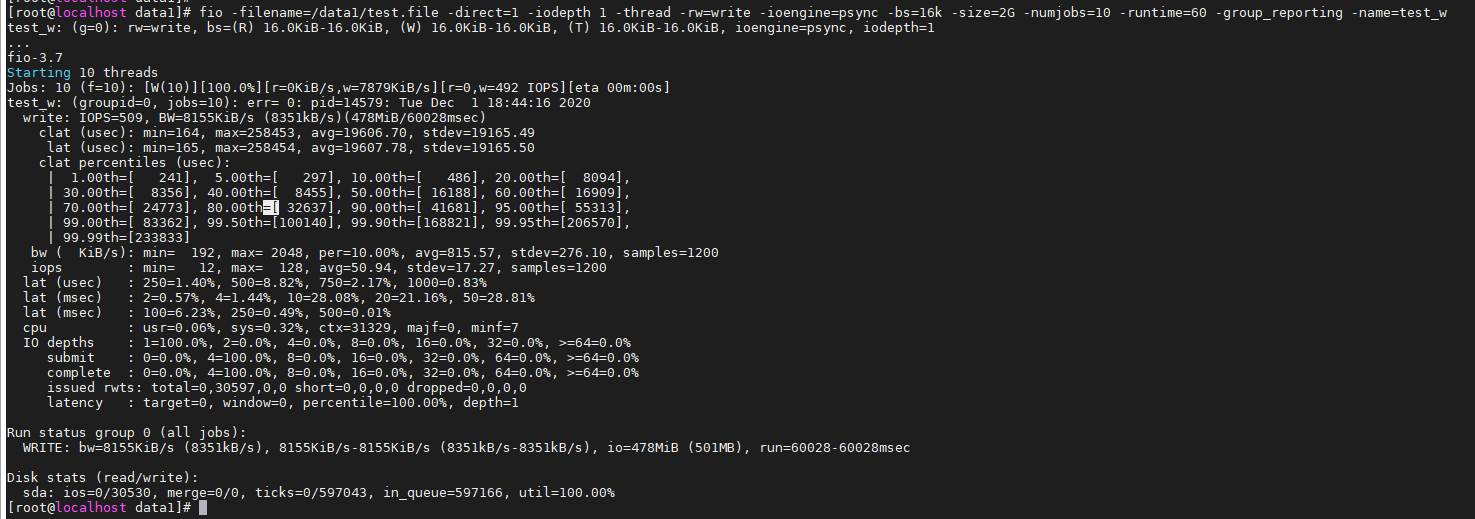

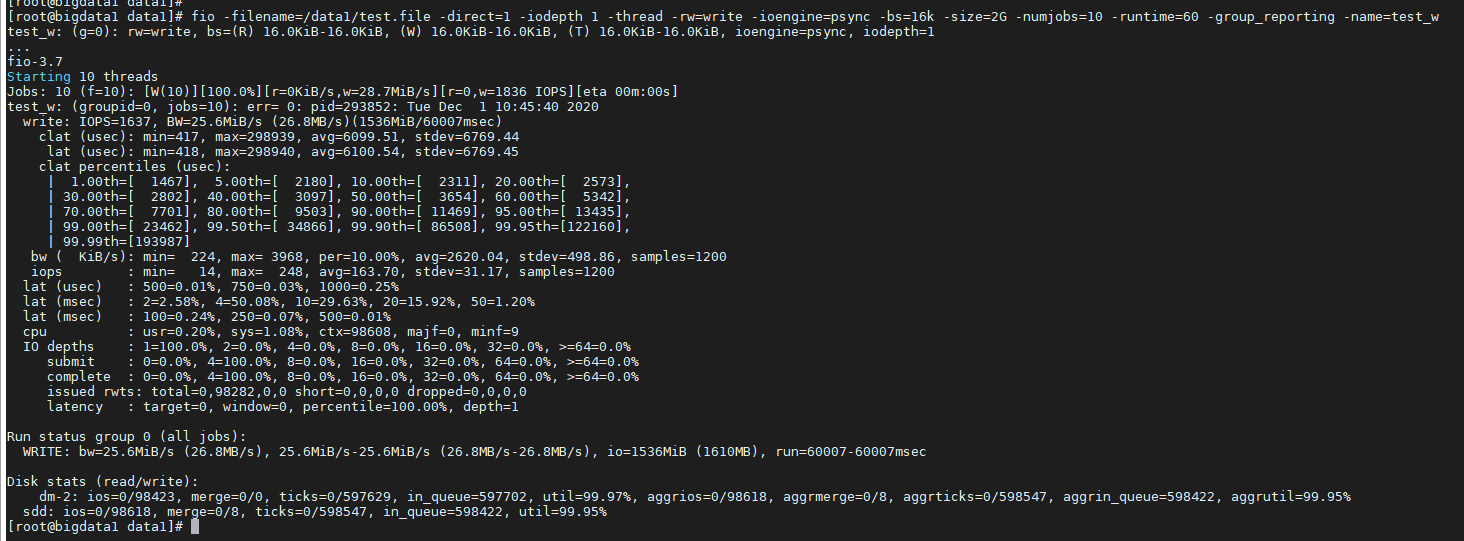

Sequential write:

fio -filename=/data1/test.file -direct=1 -iodepth 1 -thread -rw=write -ioengine=psync -bs=16k -size=2G -numjobs=10 -runtime=60 -group_reporting -name=test_w

8.1 MB/s (JBOD) vs 25.6 MB/s (LVM): sequential write is the only test where LVM leads by a wide margin.

JBOD machine

LVM machine

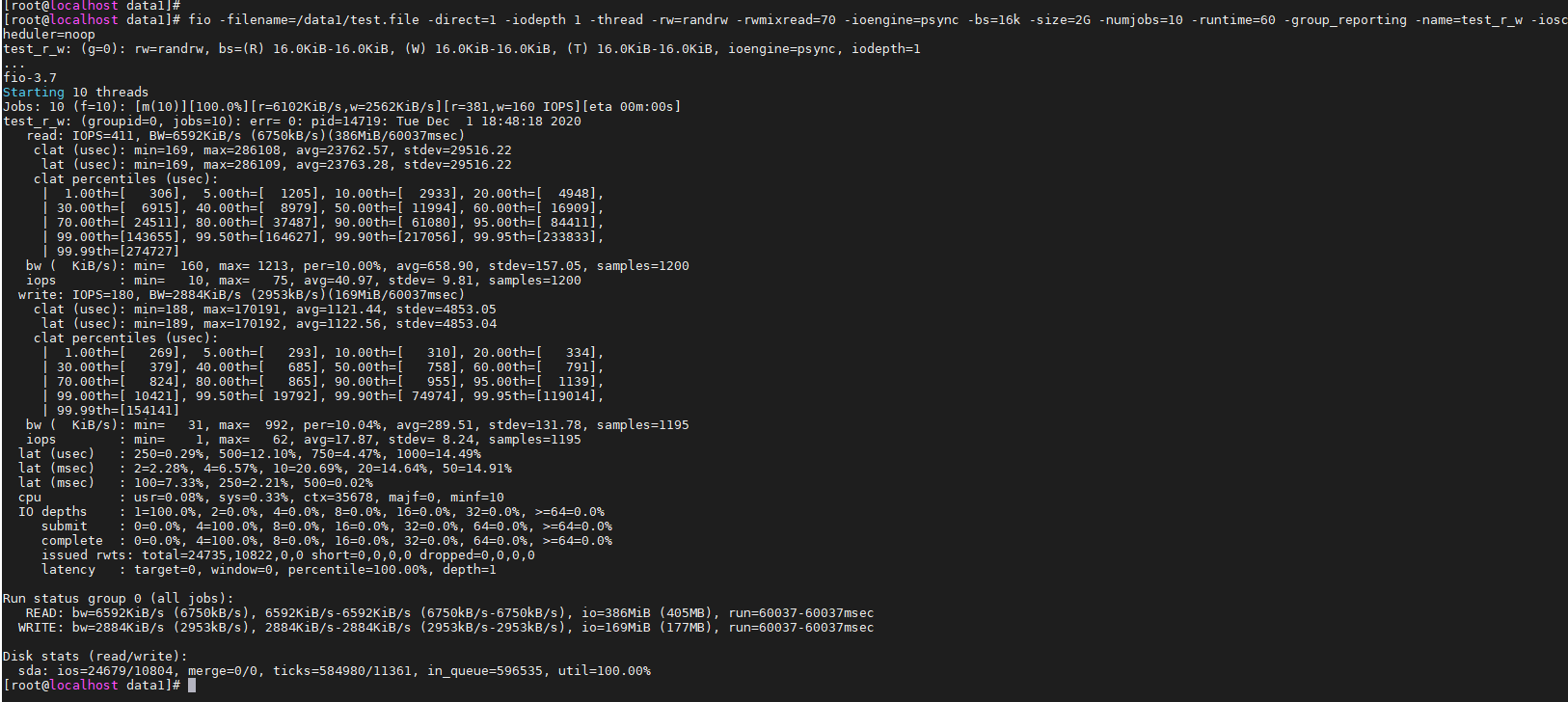

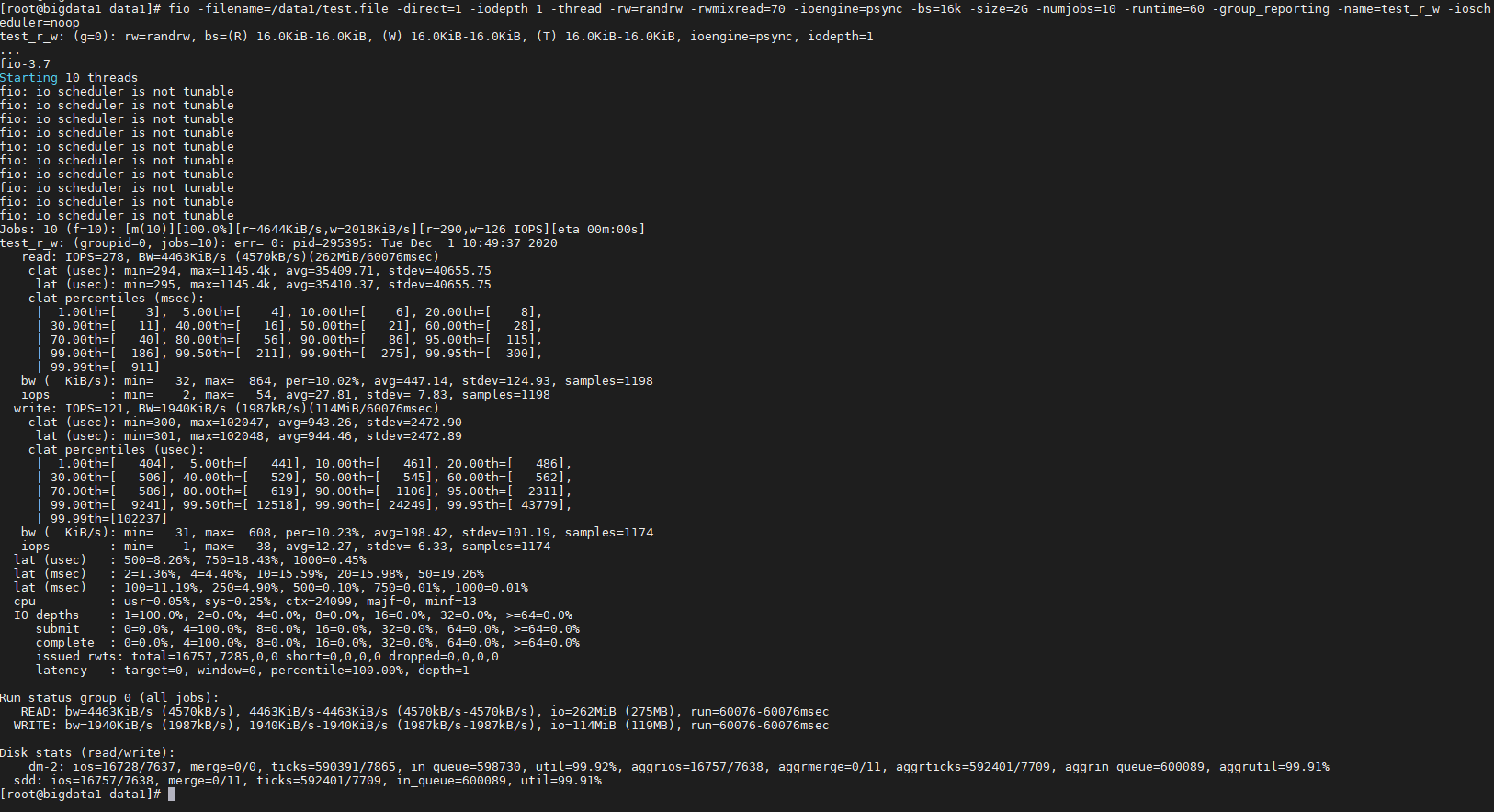

Mixed random read/write:

fio -filename=/data1/test.file -direct=1 -iodepth 1 -thread -rw=randrw -rwmixread=70 -ioengine=psync -bs=16k -size=2G -numjobs=10 -runtime=60 -group_reporting -name=test_r_w -ioscheduler=noop

Read: 6.5 MB/s (JBOD) vs 4.4 MB/s (LVM)

Write: 2.9 MB/s (JBOD) vs 1.9 MB/s (LVM)

In this test the LVM machine trails across the board.

JBOD machine

LVM machine
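To put the four fio results side by side, the JBOD-to-LVM throughput ratio can be computed for each test. This is only a minimal sketch: `ratio` is a hypothetical helper name, and the assignment of each figure to JBOD or LVM assumes the numbers are listed in the same order as the machine labels under each result.

```shell
#!/bin/sh
# ratio JBOD_MBPS LVM_MBPS: print JBOD throughput divided by LVM throughput,
# rounded to two decimals. A value above 1 means JBOD is faster.
ratio() {
    awk -v jbod="$1" -v lvm="$2" 'BEGIN { printf "%.2f\n", jbod / lvm }'
}

# Figures (MB/s) copied from the fio results in this post.
echo "sequential read : $(ratio 419 47.3)x"
echo "random write    : $(ratio 11.3 7.3)x"
echo "sequential write: $(ratio 8.1 25.6)x"
echo "mixed read      : $(ratio 6.5 4.4)x"
echo "mixed write     : $(ratio 2.9 1.9)x"
```

Read this way, JBOD is close to nine times faster on sequential read, while on sequential write it reaches only about a third of the LVM machine's throughput.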

JBOD configuration steps

[root@localhost ~]# cat /proc/scsi/scsi |more

Attached devices:

Host: scsi0 Channel: 00 Id: 00 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 01 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 02 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 03 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 04 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 05 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 06 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 08 Lun: 00

Vendor: ATA Model: SSDSC2KG960G8L Rev: LX41

Type: Direct-Access ANSI SCSI revision: 06

Host: scsi0 Channel: 00 Id: 09 Lun: 00

Vendor: LENOVO Model: ST8000NM001A X Rev: LCB9

Type: Direct-Access ANSI SCSI revision: 07

Host: scsi0 Channel: 00 Id: 10 Lun: 00

Vendor: ATA Model: SSDSC2KG960G8L Rev: LX41

Type: Direct-Access ANSI SCSI revision: 06

Host: scsi0 Channel: 01 Id: 06 Lun: 00

Vendor: LSI Model: VirtualSES Rev: 03

Type: Enclosure ANSI SCSI revision: 07

Host: scsi0 Channel: 02 Id: 00 Lun: 00

Vendor: Lenovo Model: RAID 930-16i-4GB Rev: 5.13

Type: Direct-Access ANSI SCSI revision: 05

[root@localhost ~]#

Download link for storcli

https://www.ibm.com/support/pages/node/835788

# Unpack and install storcli

unzip ibm_utl_sraidmr_storcli-1.07.07_linux_32-64.zip

rpm -Uvh storcli-1.07.07-1.noarch.rpm

# Create symlinks

ln -s /opt/MegaRAID/storcli/storcli64 /bin/storcli

ln -s /opt/MegaRAID/storcli/storcli64 /sbin/storcli

# Number of RAID controllers and current status

[root@localhost Linux]# storcli show ctrlcount

Status Code = 0

Status = Success

Description = None

Controller Count = 1

[root@localhost Linux]#

# Query the virtual drive size, RAID layout, and so on

[root@localhost Linux]# storcli /c0 /v0 show

Controller = 0

Status = Success

Description = None

Virtual Drives :

DG/VD TYPE State Access Consist Cache sCC Size Name

0/0 RAID1 Optl RW Yes NRWBD - 446.103 GB OS

Cac=CacheCade|Rec=Recovery|OfLn=OffLine|Pdgd=Partially Degraded|dgrd=Degraded

Optl=Optimal|RO=Read Only|RW=Read Write|B=Blocked|Consist=Consistent|

R=Read Ahead Always|NR=No Read Ahead|WB=WriteBack|

AWB=Always WriteBack|WT=WriteThrough|C=Cached IO|D=Direct IO|sCC=Scheduled

Check Consistency

[root@localhost Linux]#

# List the physical drives on controller 0

[root@localhost Linux]# storcli /c0 /eall /sall show

Controller = 0

Status = Success

Description = Show Drive Information Succeeded.

Drive Information :

EID:Slt DID State DG Size Intf Med SED PI SeSz Model Sp

134:0 11 Onln 0 446.103 GB SATA SSD N N 512B SSDSC2KG480G8L 01PE343D7A09691LEN U

134:1 7 Onln 0 446.103 GB SATA SSD N N 512B SSDSC2KG480G8L 01PE343D7A09691LEN U

134:2 8 JBOD - 893.138 GB SATA SSD N N 512B SSDSC2KG960G8L 01PE344D7A09692LEN U

134:3 10 JBOD - 893.138 GB SATA SSD N N 512B SSDSC2KG960G8L 01PE344D7A09692LEN U

134:4 2 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

134:5 0 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

134:6 1 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

134:7 3 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

134:8 9 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

134:9 4 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

134:10 5 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

134:11 6 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U

EID-Enclosure Device ID|Slt-Slot No.|DID-Device ID|DG-DriveGroup

DHS-Dedicated Hot Spare|UGood-Unconfigured Good|GHS-Global Hotspare

UBad-Unconfigured Bad|Onln-Online|Offln-Offline|Intf-Interface

Med-Media Type|SED-Self Encryptive Drive|PI-Protection Info

SeSz-Sector Size|Sp-Spun|U-Up|D-Down|T-Transition|F-Foreign

UGUnsp-Unsupported|UGShld-UnConfigured shielded|HSPShld-Hotspare shielded

CFShld-Configured shielded

[root@localhost Linux]#
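When there are many drives, it can help to filter this listing down to the slots that are already in JBOD state. The sketch below is an assumption about how one might do that with awk; `jbod_slots` is a hypothetical helper, and it relies on the column order shown in the output above (EID:Slt first, State third).

```shell
#!/bin/sh
# jbod_slots: read `storcli /c0 /eall /sall show` output on stdin and print
# the EID:Slt of every drive whose State column is JBOD.
jbod_slots() {
    awk '$1 ~ /^[0-9]+:[0-9]+$/ && $3 == "JBOD" { print $1 }'
}

# Sample rows copied from the listing above, so no controller is needed to try it.
sample='134:1 7 Onln 0 446.103 GB SATA SSD N N 512B SSDSC2KG480G8L 01PE343D7A09691LEN U
134:2 8 JBOD - 893.138 GB SATA SSD N N 512B SSDSC2KG960G8L 01PE344D7A09692LEN U
134:4 2 JBOD - 7.276 TB SAS HDD N N 512B ST8000NM001A X U'

printf '%s\n' "$sample" | jbod_slots
```

On a real machine the same function would be fed from the command itself, for example `storcli /c0 /eall /sall show | jbod_slots`.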

Expose the storage as raw devices.

storcli /c0/e134/s4 show all

storcli /c0/e134/s0 start locate

storcli /c0/e134/s0 set offline

storcli /c0/e134/s0 set online

storcli /c0/e134/s4 start locate

storcli /c0/e134/s5 start locate

storcli /c0/e134/s6 start locate

storcli /c0/e134/s7 start locate

storcli /c0/e134/s8 start locate

storcli /c0/e134/s9 start locate

storcli /c0/e134/s10 start locate

storcli /c0/e134/s11 start locate

storcli /c0/e134/s4 set offline

storcli /c0/e134/s5 set offline

storcli /c0/e134/s6 set offline

storcli /c0/e134/s7 set offline

storcli /c0/e134/s8 set offline

storcli /c0/e134/s9 set offline

storcli /c0/e134/s10 set offline

storcli /c0/e134/s11 set offline

storcli /c0/e134/s4 set online

storcli /c0/e134/s5 set online

storcli /c0/e134/s6 set online

storcli /c0/e134/s7 set online

storcli /c0/e134/s8 set online

storcli /c0/e134/s9 set online

storcli /c0/e134/s10 set online

storcli /c0/e134/s11 set online

storcli /c0/e134/s4 set jbod

storcli /c0/e134/s5 set jbod

storcli /c0/e134/s6 set jbod

storcli /c0/e134/s7 set jbod

storcli /c0/e134/s8 set jbod

storcli /c0/e134/s9 set jbod

storcli /c0/e134/s10 set jbod

storcli /c0/e134/s11 set jbod
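The per-slot commands above are identical apart from the slot number, so they can be generated with a loop. The following is a sketch under assumptions: `run`, `set_jbod_range`, and the `DRY_RUN` variable are hypothetical names, and the controller and enclosure IDs (`/c0`, `e134`) are the ones from this machine. By default it only prints the commands.

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints each command; DRY_RUN=0 executes it.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# set_jbod_range FIRST LAST: issue `set jbod` for every slot from FIRST to LAST
# on controller 0, enclosure 134.
set_jbod_range() {
    slot="$1"
    while [ "$slot" -le "$2" ]; do
        run storcli "/c0/e134/s$slot" set jbod
        slot=$((slot + 1))
    done
}

set_jbod_range 4 11
```

Reviewing the printed commands before running them with `DRY_RUN=0` is a cheap safeguard, since changing a drive's state on the wrong slot is hard to undo.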

mkfs.xfs -f /dev/sda

mkfs.xfs -f /dev/sdb

mkfs.xfs -f /dev/sdc

mkfs.xfs -f /dev/sdd

mkfs.xfs -f /dev/sde

mkfs.xfs -f /dev/sdf

mkfs.xfs -f /dev/sdg

mkfs.xfs -f /dev/sdi

mkfs.xfs /dev/sdh

mkfs.xfs /dev/sdj

mkdir -p /data1

mkdir -p /data2

mkdir -p /data3

mkdir -p /data4

mkdir -p /data5

mkdir -p /data6

mkdir -p /data7

mkdir -p /data8

mkdir -p /ssd_data1

mkdir -p /ssd_data2

mount -t xfs /dev/sda /data1

mount -t xfs /dev/sdb /data2

mount -t xfs /dev/sdd /data4

mount -t xfs /dev/sde /data5

mount -t xfs /dev/sdf /data6

mount -t xfs /dev/sdg /data7

mount -t xfs /dev/sdj /data3

mount -t xfs /dev/sdh /data8

mount /dev/sdc /ssd_data1

mount /dev/sdi /ssd_data2
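With this many disks it is easy to mount the same device twice or reuse a mount point. One way to reduce that risk is to keep a single device-to-mount-point table and generate the commands from it. This is a sketch only: `print_mount_cmds` is a hypothetical helper, the two-disk table is illustrative rather than this machine's real layout, and nothing is executed.

```shell
#!/bin/sh
# print_mount_cmds: read "device mountpoint" pairs on stdin and print the
# mkdir and mount commands for each pair. Nothing is executed.
print_mount_cmds() {
    while read -r dev mnt; do
        [ -n "$dev" ] || continue          # skip blank lines
        echo "mkdir -p $mnt"
        echo "mount -t xfs $dev $mnt"
    done
}

# Hypothetical two-disk table; replace it with the real device layout.
print_mount_cmds <<'EOF'
/dev/sda /data1
/dev/sdb /data2
EOF
```

The printed commands can be reviewed and then piped to `sh` once the table has been checked against the actual devices.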

sysctl vm.swappiness=0

## Mount the storage automatically at boot (entries in /etc/fstab)

/dev/sda /data1 xfs defaults 0 0

/dev/sdb /data2 xfs defaults 0 0

/dev/sdd /data4 xfs defaults 0 0

/dev/sde /data5 xfs defaults 0 0

/dev/sdf /data6 xfs defaults 0 0

/dev/sdg /data7 xfs defaults 0 0

/dev/sdh /data8 xfs defaults 0 0

/dev/sdj /data3 xfs defaults 0 0

/dev/sdc /ssd_data1 xfs defaults 0 0

/dev/sdi /ssd_data2 xfs defaults 0 0

# Set the read-ahead size.

blockdev --setra 16384 /dev/sda

blockdev --setra 16384 /dev/sdb

blockdev --setra 16384 /dev/sdc

blockdev --setra 16384 /dev/sdd

blockdev --setra 16384 /dev/sde

blockdev --setra 16384 /dev/sdf

blockdev --setra 16384 /dev/sdg

blockdev --setra 16384 /dev/sdh

blockdev --setra 16384 /dev/sdi

blockdev --setra 16384 /dev/sdj
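`blockdev --setra` takes its value in 512-byte sectors, so 16384 corresponds to 8 MiB of read-ahead per device. The sketch below works out that arithmetic and prints the per-device commands as a dry run; `ra_mib` is a hypothetical helper name. The setting is a runtime one and does not survive a reboot, so commands like these usually need to be re-applied at boot.

```shell
#!/bin/sh
# ra_mib SECTORS: convert a read-ahead value given in 512-byte sectors to MiB.
ra_mib() {
    echo $(( $1 * 512 / 1024 / 1024 ))
}

echo "16384 sectors = $(ra_mib 16384) MiB of read-ahead"

# Print the blockdev command for each device letter used in this post (dry run only).
for letter in a b c d e f g h i j; do
    echo "blockdev --setra 16384 /dev/sd$letter"
done
```

The current value for a device can be read back with `blockdev --getra /dev/sda`.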

Disable atime updates (add noatime to the /etc/fstab entries)

/dev/sda /data1 xfs defaults,noatime 0 0

/dev/sdb /data2 xfs defaults,noatime 0 0

/dev/sdd /data4 xfs defaults,noatime 0 0

/dev/sde /data5 xfs defaults,noatime 0 0

/dev/sdf /data6 xfs defaults,noatime 0 0

/dev/sdg /data7 xfs defaults,noatime 0 0

/dev/sdh /data8 xfs defaults,noatime 0 0

/dev/sdj /data3 xfs defaults,noatime 0 0

/dev/sdc /ssd_data1 xfs defaults,noatime 0 0

/dev/sdi /ssd_data2 xfs defaults,noatime 0 0
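Rather than retyping every entry, the noatime option can be appended to existing xfs lines with a small filter. This is a sketch under assumptions: `add_noatime` is a hypothetical helper that reads fstab-style lines on stdin and writes the result to stdout, so the real /etc/fstab is never modified in place.

```shell
#!/bin/sh
# add_noatime: for xfs entries whose options field does not already contain
# noatime, append ",noatime". Comments, blank lines, and other filesystems
# pass through unchanged. Modified lines are re-joined with single spaces.
add_noatime() {
    awk '
        /^[ \t]*#/ || NF < 4 { print; next }
        $3 == "xfs" && $4 !~ /(^|,)noatime(,|$)/ { $4 = $4 ",noatime" }
        { print }
    '
}

printf '%s\n' '/dev/sda /data1 xfs defaults 0 0' | add_noatime
```

A cautious way to use it is `add_noatime < /etc/fstab > /tmp/fstab.new`, followed by a diff against the original before copying the new file into place.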

Disable transparent huge pages

echo never | sudo tee /sys/kernel/mm/transparent_hugepage/enabled

echo never | sudo tee /sys/kernel/mm/transparent_hugepage/defrag
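The kernel reports the transparent huge page setting as a list of choices with the active one in square brackets, for example `always madvise [never]`. The sketch below extracts the active value so the change can be verified; `thp_active` is a hypothetical helper name.

```shell
#!/bin/sh
# thp_active LINE: print the bracketed (active) value from a THP setting
# line such as "always madvise [never]".
thp_active() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# Report the live settings when the sysfs files exist on this machine.
for name in enabled defrag; do
    f="/sys/kernel/mm/transparent_hugepage/$name"
    if [ -r "$f" ]; then
        echo "$name: $(thp_active "$(cat "$f")")"
    fi
done
```

Both lines should report `never` after the two commands above have run. Like other sysfs writes, the setting is lost at reboot unless it is re-applied at boot time.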

System configuration tuning

Kernel configuration

/etc/grub.conf

numa=off

elevator=deadline

Compiler version

gcc version 4.4.6 20110731 (Red Hat 4.4.6-3) (GCC)

/etc/sysctl.conf

vm.swappiness = 0

vm.overcommit_memory = 0

Max user processes: 65535

[root@localhost ~]# ulimit -u

65535

[root@localhost ~]#

/etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655360

* hard nproc 655360

* soft stack unlimited

* hard stack unlimited

* soft memlock 250000000

* hard memlock 250000000

Avoid swapping (set vm.swappiness to 0)

more /etc/sysctl.conf | grep vm.swappiness

echo "vm.swappiness = 0" >> /etc/sysctl.conf
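Appending with `>>` adds a duplicate line every time it is run. A repeatable alternative is to remove any existing assignment of the key first and then write the desired one. This is a sketch only: `set_conf_key` is a hypothetical helper, and the demonstration works on a temporary file rather than the real /etc/sysctl.conf.

```shell
#!/bin/sh
# set_conf_key FILE KEY VALUE: leave FILE with exactly one "KEY = VALUE" line.
set_conf_key() {
    file="$1"; key="$2"; value="$3"
    tmp="$file.tmp.$$"
    [ -f "$file" ] || : > "$file"
    # Copy every line except existing assignments of KEY (comments are kept).
    awk -v k="$key" '{
        line = $0
        sub(/^[ \t]*/, "", line)
        split(line, parts, /[ \t]*=/)
        if (parts[1] != k) print
    }' "$file" > "$tmp"
    echo "$key = $value" >> "$tmp"
    mv "$tmp" "$file"
}

# Demonstration on a scratch file.
demo=$(mktemp)
printf 'vm.swappiness = 60\n' > "$demo"
set_conf_key "$demo" vm.swappiness 0
cat "$demo"
```

After editing the real file, `sysctl -p` loads the values from /etc/sysctl.conf without a reboot.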

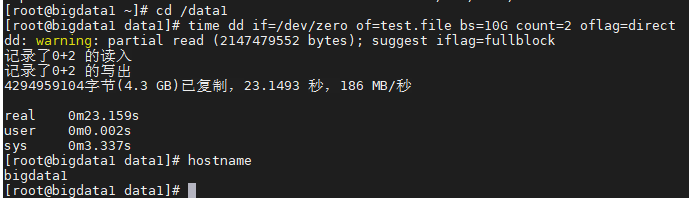

This is the machine with LVM and storage read-ahead configured. The I/O test measured 186 MB/s.

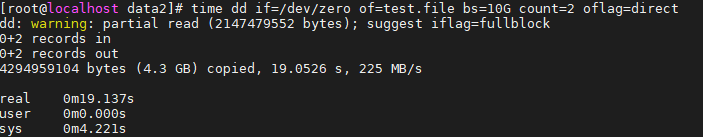

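This is the machine that uses JBOD directly, with no RAID, no LVM, and no read-ahead configured. Its I/O measured 225 MB/s.

A dd test is not comprehensive, but it still reveals a real difference. So do not build Hadoop on top of LVM, RAID, and the like.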