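Environment preparation

Planning the Pulsar cluster components and K8S nodes

Based on the test environment plan above, we apply a Label and a Taint to the three nodes node1 to node3: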

$ kubectl label node node1 node-role.kubernetes.io/pulsar=pulsar
$ kubectl label node node2 node-role.kubernetes.io/pulsar=pulsar
$ kubectl label node node3 node-role.kubernetes.io/pulsar=pulsar
$ kubectl taint nodes node1 dedicated=pulsar:NoSchedule
$ kubectl taint nodes node2 dedicated=pulsar:NoSchedule
$ kubectl taint nodes node3 dedicated=pulsar:NoSchedule
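The six commands above repeat the same two operations for each node, so they can be collapsed into a loop. What follows is only a minimal sketch: the function name `prepare_pulsar_nodes` and the overridable `KUBECTL` variable are not part of the original procedure and are introduced here purely for illustration.

```shell
# KUBECTL is an illustrative assumption: set KUBECTL=echo to preview the
# commands instead of running them against the cluster.
KUBECTL="${KUBECTL:-kubectl}"

# Label and taint every node given as an argument, so that only pods that
# tolerate dedicated=pulsar:NoSchedule can be scheduled onto it.
prepare_pulsar_nodes() {
  for node in "$@"; do
    "$KUBECTL" label node "$node" node-role.kubernetes.io/pulsar=pulsar
    "$KUBECTL" taint nodes "$node" dedicated=pulsar:NoSchedule
  done
}
```

It would be called as `prepare_pulsar_nodes node1 node2 node3`; the result can then be checked with `kubectl get nodes -l node-role.kubernetes.io/pulsar=pulsar`.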

Preparing the container images for the Pulsar cluster components

Creating the K8S Secrets required for JWT authentication

$ git clone -b pulsar-2.7.7 --depth 1 https://github.com/apache/pulsar-helm-chart.git
$ cd pulsar-helm-chart/

$ ./scripts/pulsar/prepare_helm_release.sh \
    -n pulsar \
    -k pulsar \
    -l

generate the token keys for the pulsar cluster
---
The private key and public key are generated to ... successfully.
apiVersion: v1
data:
  PRIVATEKEY: <...>
  PUBLICKEY: <...>
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-asymmetric-key
  namespace: pulsar
generate the tokens for the super-users: proxy-admin,broker-admin,admin
generate the token for proxy-admin
---
pulsar-token-asymmetric-key
apiVersion: v1
data:
  TOKEN: <...>
  TYPE: YXN5bW1ldHJpYw==
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-proxy-admin
  namespace: pulsar
generate the token for broker-admin
---
pulsar-token-asymmetric-key
apiVersion: v1
data:
  TOKEN: <...>
  TYPE: YXN5bW1ldHJpYw==
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-broker-admin
  namespace: pulsar
generate the token for admin
---
pulsar-token-asymmetric-key
apiVersion: v1
data:
  TOKEN: <...>
  TYPE: YXN5bW1ldHJpYw==
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-admin
  namespace: pulsar
-------------------------------------
The jwt token secret keys are generated under:
    - 'pulsar-token-asymmetric-key'
The jwt tokens for superusers are generated and stored as below:
    - 'proxy-admin':secret('pulsar-token-proxy-admin')
    - 'broker-admin':secret('pulsar-token-broker-admin')
    - 'admin':secret('pulsar-token-admin')

$ kubectl get secret -n pulsar | grep pulsar-token
pulsar-token-admin            Opaque   2   5m
pulsar-token-asymmetric-key   Opaque   2   5m
pulsar-token-broker-admin     Opaque   2   5m
pulsar-token-proxy-admin      Opaque   2   5m
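The `data` fields of a Kubernetes Secret are base64-encoded, so a `TOKEN` value has to be decoded before it can be used as a JWT (for example, when a component's configuration needs the admin token). The sketch below shows one way to do this; the helper names `decode_b64` and `get_pulsar_token` and the overridable `KUBECTL` variable are hypothetical and not part of the chart's scripts.

```shell
# KUBECTL is an illustrative assumption so the helper can be stubbed.
KUBECTL="${KUBECTL:-kubectl}"

# Decode the base64 string given as the first argument.
decode_b64() {
  printf '%s' "$1" | base64 -d
}

# Print the decoded JWT stored in the TOKEN field of a superuser Secret,
# e.g. get_pulsar_token pulsar-token-admin
get_pulsar_token() {
  decode_b64 "$("$KUBECTL" get secret "$1" -n pulsar -o jsonpath='{.data.TOKEN}')"
}

# The TYPE field shown in the output above decodes to the key type.
decode_b64 'YXN5bW1ldHJpYw=='   # prints: asymmetric
```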

Creating the Local PVs for Zookeeper and Bookie

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Retain

$ mkdir -p /home/puslar/data/zookeeper-data
$ mkdir -p /home/puslar/data/bookie-data/ledgers
$ mkdir -p /home/puslar/data/bookie-data/journal

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-zookeeper-data-pulsar-zookeeper-0
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/zookeeper-data
  claimRef:
    name: pulsar-zookeeper-data-pulsar-zookeeper-0
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-zookeeper-data-pulsar-zookeeper-1
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/zookeeper-data
  claimRef:
    name: pulsar-zookeeper-data-pulsar-zookeeper-1
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-zookeeper-data-pulsar-zookeeper-2
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/zookeeper-data
  claimRef:
    name: pulsar-zookeeper-data-pulsar-zookeeper-2
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node3

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-bookie-ledgers-pulsar-bookie-0
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/bookie-data/ledgers
  claimRef:
    name: pulsar-bookie-ledgers-pulsar-bookie-0
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-bookie-journal-pulsar-bookie-0
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/bookie-data/journal
  claimRef:
    name: pulsar-bookie-journal-pulsar-bookie-0
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-bookie-ledgers-pulsar-bookie-1
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/bookie-data/ledgers
  claimRef:
    name: pulsar-bookie-ledgers-pulsar-bookie-1
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-bookie-journal-pulsar-bookie-1
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/bookie-data/journal
  claimRef:
    name: pulsar-bookie-journal-pulsar-bookie-1
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-bookie-ledgers-pulsar-bookie-2
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/bookie-data/ledgers
  claimRef:
    name: pulsar-bookie-ledgers-pulsar-bookie-2
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node3
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pulsar-bookie-journal-pulsar-bookie-2
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /home/puslar/data/bookie-data/journal
  claimRef:
    name: pulsar-bookie-journal-pulsar-bookie-2
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node3
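The nine PersistentVolume manifests differ only in name, capacity, host path and node, so they could also be generated from a small template rather than written by hand. The following is a sketch under that assumption; `local_pv` and `pulsar_local_pvs` are hypothetical helper names, and the directory spelling follows the `mkdir` commands above.

```shell
# Emit one Local PV manifest. Arguments:
#   $1 PV name (also used as the claimRef PVC name), $2 capacity,
#   $3 absolute host path, $4 node hostname
local_pv() {
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1
spec:
  capacity:
    storage: $2
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: $3
  claimRef:
    name: $1
    namespace: pulsar
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - $4
EOF
}

# Emit all nine PVs: the volumes for ordinal i (0..2) are pinned to node(i+1).
pulsar_local_pvs() {
  base=/home/puslar/data
  for i in 0 1 2; do
    node="node$((i + 1))"
    local_pv "pulsar-zookeeper-data-pulsar-zookeeper-$i" 20Gi "$base/zookeeper-data" "$node"
    local_pv "pulsar-bookie-ledgers-pulsar-bookie-$i" 50Gi "$base/bookie-data/ledgers" "$node"
    local_pv "pulsar-bookie-journal-pulsar-bookie-$i" 50Gi "$base/bookie-data/journal" "$node"
  done
}
```

The output can be inspected first and then applied with `pulsar_local_pvs | kubectl apply -f -`. The `claimRef` pre-binds each PV to the PVC name that the corresponding StatefulSet pod will request, which is why the PV names must match exactly.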

Preparing the PostgreSQL database for Pulsar Manager

CREATE USER pulsar_manager WITH PASSWORD '<password>';
CREATE DATABASE pulsar_manager OWNER pulsar_manager;
GRANT ALL PRIVILEGES ON DATABASE pulsar_manager to pulsar_manager;
GRANT ALL PRIVILEGES ON ALL TABLES IN SCHEMA pulsar_manager TO pulsar_manager;
ALTER SCHEMA public OWNER to pulsar_manager;
GRANT ALL PRIVILEGES ON ALL TABLES IN SCHEMA public TO pulsar_manager;
GRANT ALL PRIVILEGES ON ALL SEQUENCES IN SCHEMA public TO pulsar_manager;

CREATE TABLE IF NOT EXISTS environments (
  name varchar(256) NOT NULL,
  broker varchar(1024) NOT NULL,
  CONSTRAINT PK_name PRIMARY KEY (name),
  UNIQUE (broker)
);

CREATE TABLE IF NOT EXISTS topics_stats (
  topic_stats_id BIGSERIAL PRIMARY KEY,
  environment varchar(255) NOT NULL,
  cluster varchar(255) NOT NULL,
  broker varchar(255) NOT NULL,
  tenant varchar(255) NOT NULL,
  namespace varchar(255) NOT NULL,
  bundle varchar(255) NOT NULL,
  persistent varchar(36) NOT NULL,
  topic varchar(255) NOT NULL,
  producer_count BIGINT,
  subscription_count BIGINT,
  msg_rate_in double precision,
  msg_throughput_in double precision,
  msg_rate_out double precision,
  msg_throughput_out double precision,
  average_msg_size double precision,
  storage_size double precision,
  time_stamp BIGINT
);

CREATE TABLE IF NOT EXISTS publishers_stats (
  publisher_stats_id BIGSERIAL PRIMARY KEY,
  producer_id BIGINT,
  topic_stats_id BIGINT NOT NULL,
  producer_name varchar(255) NOT NULL,
  msg_rate_in double precision,
  msg_throughput_in double precision,
  average_msg_size double precision,
  address varchar(255),
  connected_since varchar(128),
  client_version varchar(36),
  metadata text,
  time_stamp BIGINT,
  CONSTRAINT fk_publishers_stats_topic_stats_id FOREIGN KEY (topic_stats_id) REFERENCES topics_stats(topic_stats_id)
);

CREATE TABLE IF NOT EXISTS replications_stats (
  replication_stats_id BIGSERIAL PRIMARY KEY,
  topic_stats_id BIGINT NOT NULL,
  cluster varchar(255) NOT NULL,
  connected BOOLEAN,
  msg_rate_in double precision,
  msg_rate_out double precision,
  msg_rate_expired double precision,
  msg_throughput_in double precision,
  msg_throughput_out double precision,
  msg_rate_redeliver double precision,
  replication_backlog BIGINT,
  replication_delay_in_seconds BIGINT,
  inbound_connection varchar(255),
  inbound_connected_since varchar(255),
  outbound_connection varchar(255),
  outbound_connected_since varchar(255),
  time_stamp BIGINT,
  CONSTRAINT FK_replications_stats_topic_stats_id FOREIGN KEY (topic_stats_id) REFERENCES topics_stats(topic_stats_id)
);

CREATE TABLE IF NOT EXISTS subscriptions_stats (
  subscription_stats_id BIGSERIAL PRIMARY KEY,
  topic_stats_id BIGINT NOT NULL,
  subscription varchar(255) NULL,
  msg_backlog BIGINT,
  msg_rate_expired double precision,
  msg_rate_out double precision,
  msg_throughput_out double precision,
  msg_rate_redeliver double precision,
  number_of_entries_since_first_not_acked_message BIGINT,
  total_non_contiguous_deleted_messages_range BIGINT,
  subscription_type varchar(16),
  blocked_subscription_on_unacked_msgs BOOLEAN,
  time_stamp BIGINT,
  UNIQUE (topic_stats_id, subscription),
  CONSTRAINT FK_subscriptions_stats_topic_stats_id FOREIGN KEY (topic_stats_id) REFERENCES topics_stats(topic_stats_id)
);

CREATE TABLE IF NOT EXISTS consumers_stats (
  consumer_stats_id BIGSERIAL PRIMARY KEY,
  consumer varchar(255) NOT NULL,
  topic_stats_id BIGINT NOT NULL,
  replication_stats_id BIGINT,
  subscription_stats_id BIGINT,
  address varchar(255),
  available_permits BIGINT,
  connected_since varchar(255),
  msg_rate_out double precision,
  msg_throughput_out double precision,
  msg_rate_redeliver double precision,
  client_version varchar(36),
  time_stamp BIGINT,
  metadata text
);

CREATE TABLE IF NOT EXISTS tokens (
  token_id BIGSERIAL PRIMARY KEY,
  role varchar(256) NOT NULL,
  description varchar(128),
  token varchar(1024) NOT NULL,
  UNIQUE (role)
);

CREATE TABLE IF NOT EXISTS users (
  user_id BIGSERIAL PRIMARY KEY,
  access_token varchar(256),
  name varchar(256) NOT NULL,
  description varchar(128),
  email varchar(256),
  phone_number varchar(48),
  location varchar(256),
  company varchar(256),
  expire BIGINT,
  password varchar(256),
  UNIQUE (name)
);

CREATE TABLE IF NOT EXISTS roles (
  role_id BIGSERIAL PRIMARY KEY,
  role_name varchar(256) NOT NULL,
  role_source varchar(256) NOT NULL,
  description varchar(128),
  resource_id BIGINT NOT NULL,
  resource_type varchar(48) NOT NULL,
  resource_name varchar(48) NOT NULL,
  resource_verbs varchar(256) NOT NULL,
  flag INT NOT NULL
);

CREATE TABLE IF NOT EXISTS tenants (
  tenant_id BIGSERIAL PRIMARY KEY,
  tenant varchar(255) NOT NULL,
  admin_roles varchar(255),
  allowed_clusters varchar(255),
  environment_name varchar(255),
  UNIQUE(tenant)
);

CREATE TABLE IF NOT EXISTS namespaces (
  namespace_id BIGSERIAL PRIMARY KEY,
  tenant varchar(255) NOT NULL,
  namespace varchar(255) NOT NULL,
  UNIQUE(tenant, namespace)
);

CREATE TABLE IF NOT EXISTS role_binding (
  role_binding_id BIGSERIAL PRIMARY KEY,
  name varchar(256) NOT NULL,
  description varchar(256),
  role_id BIGINT NOT NULL,
  user_id BIGINT NOT NULL
);

Deploying Pulsar on K8S with Helm

Customizing the helm chart's values.yaml

auth:
  authentication:
    enabled: true  # enable JWT authentication
    provider: "jwt"
    jwt:
      usingSecretKey: false  # use an asymmetric key pair for JWT authentication
  authorization:
    enabled: true  # enable authorization
  superUsers:
    # broker to broker communication
    broker: "broker-admin"
    # proxy to broker communication
    proxy: "proxy-admin"
    # pulsar-admin client to broker/proxy communication
    client: "admin"

components:  # enabled components
  autorecovery: true
  bookkeeper: true
  broker: true
  functions: true
  proxy: true
  pulsar_manager: true
  toolset: true
  zookeeper: true

monitoring:  # disable the monitoring components; an external Prometheus will be tried later to monitor the Pulsar cluster
  grafana: false
  prometheus: false
  node_exporter: false

volumes:
  local_storage: true  # use local storage for data volumes

proxy:  # proxy settings (this is a test environment, so proxy is also scheduled onto node1, node2 or node3)
  nodeSelector:
    node-role.kubernetes.io/pulsar: pulsar
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "pulsar"
    effect: "NoSchedule"
  configData:
    PULSAR_PREFIX_authenticateMetricsEndpoint: "false"

broker:  # broker settings (this is a test environment, so broker is also scheduled onto node1, node2 or node3)
  nodeSelector:
    node-role.kubernetes.io/pulsar: pulsar
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "pulsar"
    effect: "NoSchedule"

zookeeper:  # zookeeper settings
  replicaCount: 3
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "pulsar"
    effect: "NoSchedule"
  volumes:
    data:  # use the local PV; must match the manually created local PV above
      local_storage: true
      size: 20Gi

bookkeeper:  # bookkeeper settings
  replicaCount: 3
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "pulsar"
    effect: "NoSchedule"
  volumes:
    journal:  # use the local PV; must match the manually created local PV above
      local_storage: true
      size: 50Gi
    ledgers:  # use the local PV; must match the manually created local PV above
      local_storage: true
      size: 50Gi

pulsar_manager:  # pulsar_manager settings (this is a test environment, so pulsar_manager is also scheduled onto node1, node2 or node3)
  replicaCount: 1
  admin:
    # The documentation describes these as the login user and password of the pulsar manager web UI,
    # but when an external PostgreSQL database is used, the PostgreSQL database user and password
    # actually have to be given here; it is unclear whether this is an issue in pulsar-helm-chart 2.7.7
    user: pulsar_manager
    password: 05aM3Braz_M4RWpn
  configData:
    DRIVER_CLASS_NAME: org.postgresql.Driver
    URL: jdbc:postgresql://<ip>:5432/pulsar_manager
    # The documentation describes these as the PostgreSQL credentials, but in practice USERNAME and
    # PASSWORD cannot be set here; it is unclear whether this is an issue in pulsar-helm-chart 2.7.7
    # USERNAME: pulsar_manager
    # PASSWORD: 05aM3Braz_M4RWpn
    LOG_LEVEL: INFO
    ## With JWT authentication enabled, the JWT token from the pulsar-token-admin Secret must be given here
    JWT_TOKEN: <jwt token...>

autorecovery:  # autorecovery settings (this is a test environment, so autorecovery is also scheduled onto node1, node2 or node3)
  replicaCount: 1
  nodeSelector:
    node-role.kubernetes.io/pulsar: pulsar
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "pulsar"
    effect: "NoSchedule"

toolset:  # toolset settings (this is a test environment, so toolset is also scheduled onto node1, node2 or node3)
  replicaCount: 1
  nodeSelector:
    node-role.kubernetes.io/pulsar: pulsar
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "pulsar"
    effect: "NoSchedule"

images:  # make each component pull from the private image registry
  imagePullSecrets:
  - regsecret  # image pull secret for the private registry; must be created in the k8s namespace beforehand
  autorecovery:
    repository: harbor.example.com/library/apachepulsar/pulsar-all
    tag: 2.7.4
  bookie:
    repository: harbor.example.com/library/apachepulsar/pulsar-all
    tag: 2.7.4
  broker:
    repository: harbor.example.com/library/apachepulsar/pulsar-all
    tag: 2.7.4
  functions:
    repository: harbor.example.com/library/apachepulsar/pulsar-all
    tag: 2.7.4
  proxy:
    repository: harbor.example.com/library/apachepulsar/pulsar-all
    tag: 2.7.4
  pulsar_manager:
    repository: harbor.example.com/library/apachepulsar/pulsar-manager
    tag: v0.2.0
  zookeeper:
    repository: harbor.example.com/library/apachepulsar/pulsar-all
    tag: 2.7.4

pulsar_metadata:
  component: pulsar-init
  image:
    # the image used for running `pulsar-cluster-initialize` job
    repository: harbor.example.com/library/apachepulsar/pulsar-all
    tag: 2.7.4

$ kubectl patch serviceaccount default -p '{"imagePullSecrets": [{"name": "regsecret"}]}' -n pulsar
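The `regsecret` image pull secret referenced here has to exist in the `pulsar` namespace before the chart is installed. One way to create it is `kubectl create secret docker-registry`, sketched below. The registry host is taken from the image repositories in values.yaml; the function name `create_regsecret`, the overridable `KUBECTL` variable, and the two credential arguments are illustrative assumptions.

```shell
# KUBECTL is an illustrative assumption so the command can be previewed.
KUBECTL="${KUBECTL:-kubectl}"

# Create the image pull secret in the pulsar namespace.
# $1 = registry user, $2 = registry password (both supplied by the caller).
create_regsecret() {
  "$KUBECTL" create secret docker-registry regsecret \
    --namespace pulsar \
    --docker-server=harbor.example.com \
    --docker-username="$1" \
    --docker-password="$2"
}
```

It would be called as `create_regsecret <user> <password>` with real registry credentials.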

Installing pulsar with helm install

$ helm install \
    --values values.yaml \
    --set initialize=true \
    --namespace pulsar \
    pulsar pulsar-2.7.7.tgz
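After the install command returns, the components still need time to start. A possible way to wait for them is `kubectl rollout status`, as in the sketch below. The StatefulSet names are an assumption based on the release name `pulsar` and may differ in other setups; `wait_for_pulsar` and the overridable `KUBECTL` variable are hypothetical helpers.

```shell
# KUBECTL is an illustrative assumption so the commands can be previewed.
KUBECTL="${KUBECTL:-kubectl}"

# Wait for each StatefulSet to finish rolling out; stop at the first failure
# or when the 600s timeout expires. The names below are assumed, not verified.
wait_for_pulsar() {
  for sts in pulsar-zookeeper pulsar-bookie pulsar-broker pulsar-proxy; do
    "$KUBECTL" rollout status "statefulset/$sts" -n pulsar --timeout=600s || return 1
  done
}
```

Running `kubectl get pods -n pulsar` afterwards gives an overall view of the release.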

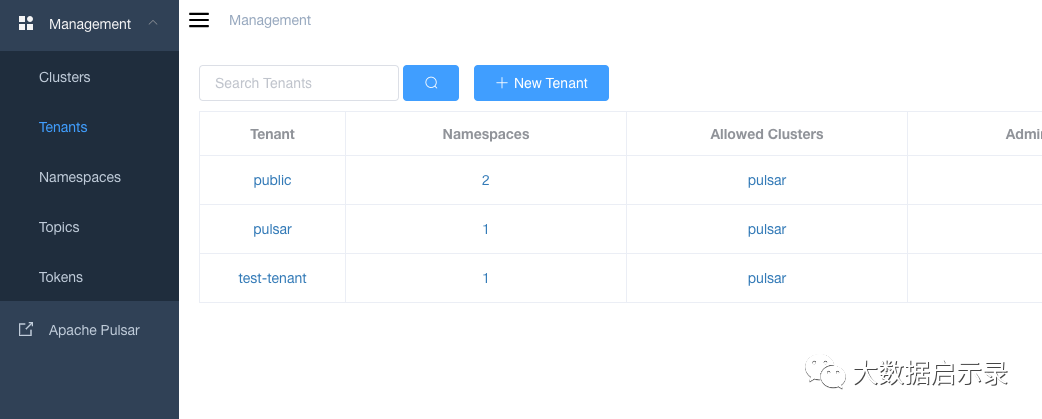

Testing the creation of a tenant, namespace and topic in the toolset pod

Creating the pulsar-manager admin user and logging in
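A smoke test of this kind might look like the sketch below, which runs `pulsar-admin` inside the toolset pod. The pod name `pulsar-toolset-0`, the resource names `test-tenant`, `test-ns` and `test-topic`, and the helper functions are all assumptions made for illustration.

```shell
# KUBECTL is an illustrative assumption so the commands can be previewed.
KUBECTL="${KUBECTL:-kubectl}"

# Run pulsar-admin inside the toolset pod (pod name is an assumption).
toolset_admin() {
  "$KUBECTL" exec -n pulsar pulsar-toolset-0 -- bin/pulsar-admin "$@"
}

# Create a tenant, a namespace and a 3-partition topic, then list the
# partitioned topics in the namespace. Stops at the first failing step.
create_test_resources() {
  toolset_admin tenants create test-tenant &&
  toolset_admin namespaces create test-tenant/test-ns &&
  toolset_admin topics create-partitioned-topic test-tenant/test-ns/test-topic -p 3 &&
  toolset_admin topics list-partitioned-topics test-tenant/test-ns
}
```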

$ kubectl get svc -l app=pulsar -n pulsar
NAME                    TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                               AGE
pulsar-bookie           ClusterIP      None             <none>        3181/TCP,8000/TCP                     40m
pulsar-broker           ClusterIP      None             <none>        8080/TCP,6650/TCP                     40m
pulsar-proxy            LoadBalancer   10.104.105.137   <pending>     80:31970/TCP,6650:32631/TCP           40m
pulsar-pulsar-manager   LoadBalancer   10.110.207.9     <pending>     9527:32764/TCP                        40m
pulsar-recovery         ClusterIP      None             <none>        8000/TCP                              40m
pulsar-toolset          ClusterIP      None             <none>        <none>                                40m
pulsar-zookeeper        ClusterIP      None             <none>        8000/TCP,2888/TCP,3888/TCP,2181/TCP   40m

helm repo add incubator http://mirror.azure.cn/kubernetes/charts-incubator
[root@k8s-centos7-master-150 kafka]# helm repo list
NAME        URL
minio       https://helm.min.io/
bitnami     https://charts.bitnami.com/bitnami
incubator   http://mirror.azure.cn/kubernetes/charts-incubator

Creating the StorageClass

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Retain

kubectl apply -f local-storage.yaml
storageclass.storage.k8s.io/local-storage created

[root@k8s-centos7-master-150 kafka]# kubectl get sc --all-namespaces -o wide
NAME            PROVISIONER                    RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-storage   kubernetes.io/no-provisioner   Delete          WaitForFirstConsumer   false                  83m

Two Kafka brokers will be deployed on the two K8S nodes k8s-centos7-node3-151 and k8s-centos7-node4-152, so first create the Local PVs for these two Kafka brokers on those two nodes.

kafka-local-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: kafka-pv-0
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/kafka/data-0
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-centos7-node3-151
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: kafka-pv-1
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/kafka/data-1
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-centos7-node4-152

kubectl apply -f kafka-local-pv.yaml

[root@k8s-centos7-master-150 kafka]# kubectl get pv,pvc --all-namespaces
NAME                             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                            STORAGECLASS    REASON   AGE
persistentvolume/kafka-pv-0      5Gi        RWO            Retain           Bound    default/data-kafka-zookeeper-1   local-storage            53m
persistentvolume/kafka-pv-1      5Gi        RWO            Retain           Bound    default/data-kafka-zookeeper-0   local-storage            53m
persistentvolume/kafka-zk-pv-0   5Gi        RWO            Retain           Bound    default/datadir-kafka-0          local-storage            52m
persistentvolume/kafka-zk-pv-1   5Gi        RWO            Retain           Bound    default/datadir-kafka-1          local-storage            52m

NAMESPACE   NAME                                           STATUS   VOLUME          CAPACITY   ACCESS MODES   STORAGECLASS    AGE
default     persistentvolumeclaim/data-kafka-zookeeper-0   Bound    kafka-pv-1      5Gi        RWO            local-storage   39m
default     persistentvolumeclaim/data-kafka-zookeeper-1   Bound    kafka-pv-0      5Gi        RWO            local-storage   26m
default     persistentvolumeclaim/datadir-kafka-0          Bound    kafka-zk-pv-0   5Gi        RWO            local-storage   39m
default     persistentvolumeclaim/datadir-kafka-1          Bound    kafka-zk-pv-1   5Gi        RWO            local-storage   20m

Two Zookeeper nodes will likewise be deployed on the two K8S nodes k8s-centos7-node3-151 and k8s-centos7-node4-152, so first create the Local PVs for these two Zookeeper nodes on those two nodes.

zookeeper-local-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: kafka-zk-pv-0
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/kafka/zkdata-0
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-centos7-node3-151
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: kafka-zk-pv-1
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data/kafka/zkdata-1
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - k8s-centos7-node4-152

Run the command:

kubectl apply -f zookeeper-local-pv.yaml

Likewise, the corresponding directories must be created on each of the nodes; the steps are not repeated here.
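For reference, the directories in question are the `local.path` values of the PVs defined above. A minimal sketch for creating them is shown here; the helper `make_kafka_dirs` and the optional `ROOT` prefix (which only exists so the function can be tried in a scratch directory) are hypothetical.

```shell
# Create the host directories backing the Kafka and Zookeeper Local PVs for
# ordinal $1: use 0 on k8s-centos7-node3-151 and 1 on k8s-centos7-node4-152.
# ROOT is an optional prefix for dry runs; when unset, paths start at /.
make_kafka_dirs() {
  root="${ROOT:-}"
  mkdir -p "$root/data/kafka/data-$1" "$root/data/kafka/zkdata-$1"
}
```

On k8s-centos7-node3-151 this would be run as `make_kafka_dirs 0`, and on k8s-centos7-node4-152 as `make_kafka_dirs 1`. A Local PV does not create its path automatically, so a missing directory typically leaves the pod unable to start.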

[root@k8s-centos7-master-150 kafka]# kubectl get pv,pvc --all-namespaces
NAME                             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                            STORAGECLASS    REASON   AGE
persistentvolume/kafka-pv-0      5Gi        RWO            Retain           Bound    default/data-kafka-zookeeper-1   local-storage            57m
persistentvolume/kafka-pv-1      5Gi        RWO            Retain           Bound    default/data-kafka-zookeeper-0   local-storage            57m
persistentvolume/kafka-zk-pv-0   5Gi        RWO            Retain           Bound    default/datadir-kafka-0          local-storage            56m
persistentvolume/kafka-zk-pv-1   5Gi        RWO            Retain           Bound    default/datadir-kafka-1          local-storage            56m

NAMESPACE   NAME                                           STATUS   VOLUME          CAPACITY   ACCESS MODES   STORAGECLASS    AGE
default     persistentvolumeclaim/data-kafka-zookeeper-0   Bound    kafka-pv-1      5Gi        RWO            local-storage   42m
default     persistentvolumeclaim/data-kafka-zookeeper-1   Bound    kafka-pv-0      5Gi        RWO            local-storage   30m
default     persistentvolumeclaim/datadir-kafka-0          Bound    kafka-zk-pv-0   5Gi        RWO            local-storage   42m
default     persistentvolumeclaim/datadir-kafka-1          Bound    kafka-zk-pv-1   5Gi        RWO            local-storage   24m

Deploying Kafka with kafka-values.yaml

image:
  repository: zenko/kafka-manager
  tag: 1.3.3.22
zkHosts: kafka-zookeeper:2181
basicAuth:
  enabled: true
  username: admin
  password: admin
ingress:
  enabled: true
  hosts:
  - km.hongda.com
  tls:
  - secretName: hongda-com-tls-secret
    hosts:
    - km.hongda.com

helm install kafka -f kafka-values.yaml incubator/kafka

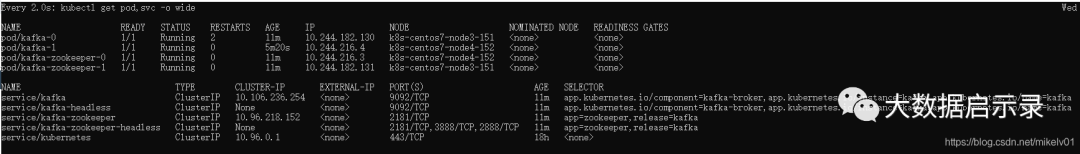

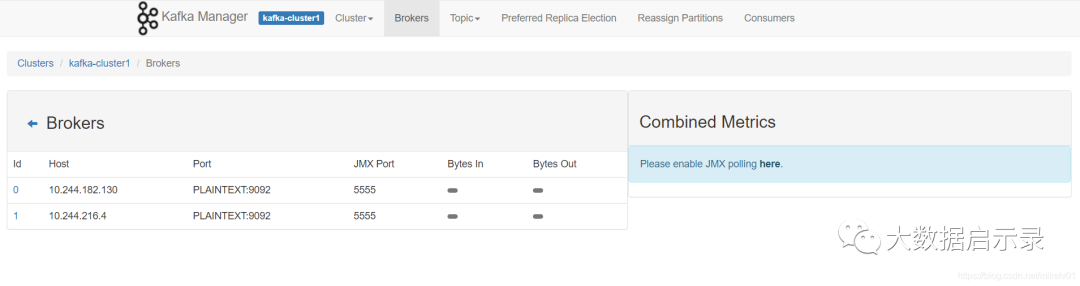

# pod,svc
[root@k8s-centos7-master-150 kafka]# kubectl get po,svc -o wide
NAME                    READY   STATUS    RESTARTS   AGE   IP               NODE                    NOMINATED NODE   READINESS GATES
pod/kafka-0             1/1     Running   2          33m   10.244.182.130   k8s-centos7-node3-151   <none>           <none>
pod/kafka-1             1/1     Running   0          26m   10.244.216.4     k8s-centos7-node4-152   <none>           <none>
pod/kafka-zookeeper-0   1/1     Running   0          33m   10.244.216.3     k8s-centos7-node4-152   <none>           <none>
pod/kafka-zookeeper-1   1/1     Running   0          32m   10.244.182.131   k8s-centos7-node3-151   <none>           <none>

NAME                               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE   SELECTOR
service/kafka                      ClusterIP   10.106.236.254   <none>        9092/TCP                     33m   app.kubernetes.io/component=kafka-broker,app.kubernetes.io/instance=kafka,app.kubernetes.io/name=kafka
service/kafka-headless             ClusterIP   None             <none>        9092/TCP                     33m   app.kubernetes.io/component=kafka-broker,app.kubernetes.io/instance=kafka,app.kubernetes.io/name=kafka
service/kafka-zookeeper            ClusterIP   10.96.218.152    <none>        2181/TCP                     33m   app=zookeeper,release=kafka
service/kafka-zookeeper-headless   ClusterIP   None             <none>        2181/TCP,3888/TCP,2888/TCP   33m   app=zookeeper,release=kafka
service/kubernetes                 ClusterIP   10.96.0.1        <none>        443/TCP                      18h   <none>

# statefulset
[root@k8s-centos7-master-150 kafka]# kubectl get statefulset
NAME              READY   AGE
kafka             2/2     35m
kafka-zookeeper   2/2     35m

Kafka visualization: installing Kafka Manager

image:
  repository: zenko/kafka-manager
  tag: 1.3.3.22
zkHosts: kafka-zookeeper:2181
basicAuth:
  enabled: true
  username: admin
  password: admin
ingress:
  enabled: true
  hosts:
  - km.hongda.com
  tls:
  - secretName: hongda-com-tls-secret
    hosts:
    - km.hongda.com

Run the command:

helm install kafka-manager --set service.type=NodePort -f kafka-manager-values.yaml stable/kafka-manager

After the installation completes, confirm that the kafka-manager Pod has started normally:

[root@k8s-centos7-master-150 kafka]# kubectl get pod -l app=kafka-manager
NAME                             READY   STATUS    RESTARTS   AGE
kafka-manager-5979b5b6c8-spmzz   1/1     Running   0          6m36s

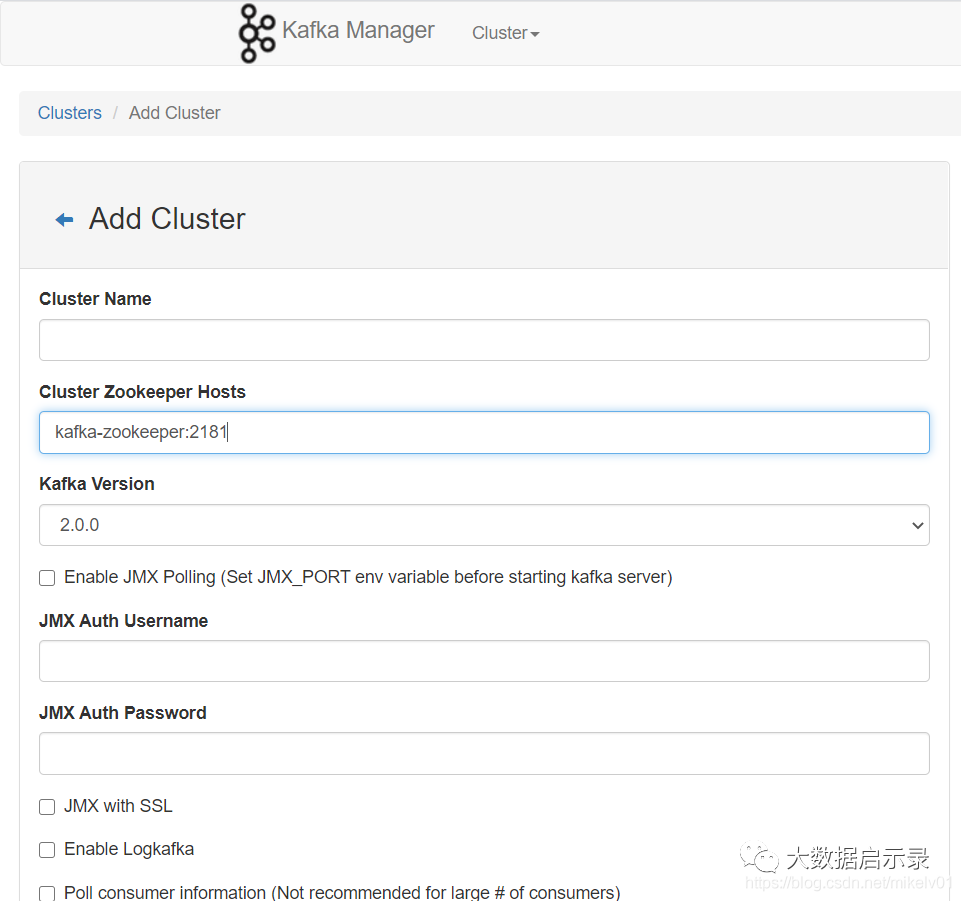

Then set Cluster Zookeeper Hosts to kafka-zookeeper:2181, which brings the Kafka cluster deployed earlier under kafka-manager's management.

See: https://blog.csdn.net/boling_cavalry/article/details/105466163?utm_medium=distribute.pc_aggpage_search_result.none-task-blog-2~aggregatepage~first_rank_ecpm_v1~rank_v31_ecpm-2-105466163-null-null.pc_agg_new_rank&utm_term=kafka+on+k8s&spm=1000.2123.3001.4430