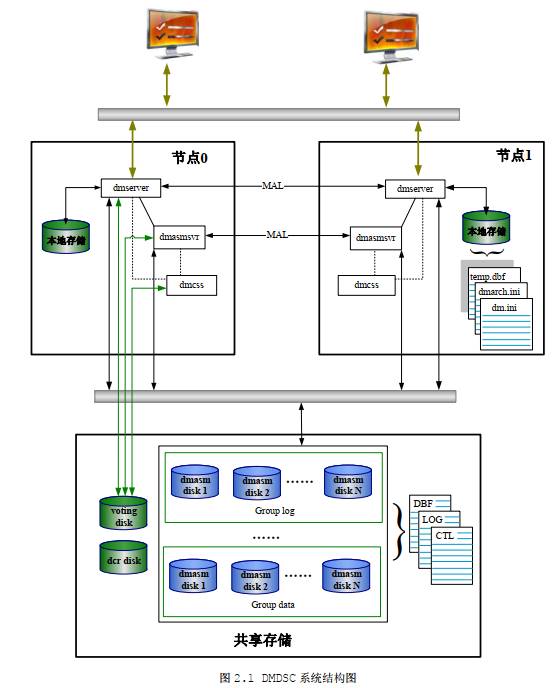

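DM8 Data Shared Cluster (DSC) Setup and Deployment Manual

I. Pre-deployment preparation

1. Adjusting operating system parameters

2. Installing the multipath management packages

3. Writing the multipath configuration file

4. Starting the service

5. Verifying that the storage was deployed successfully

6. Binding raw disks with UDEV

7. Partitioning the raw devices

8. Binding the raw devices

II. Deploying the DM DSC cluster

2. Installing the database software on every node

5. Configuring dmdcr_cfg.ini

6. Initializing the ASM disks

7. Creating the dmasvrmal.ini configuration file

8. Configuring dmdcr.ini

9. Starting the ASM services

10. Creating the DMASM disk groups

11. Creating the database initialization file

12. Initializing the database

13. Test-starting the instances

14. Creating the monitor on any one node

15. Registering the services for each instance

III. Configuring application connections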

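I. Pre-deployment preparation

Operating system requirements

Installing the DM database on Linux requires glibc 2.3 or later and kernel 2.6, with UnixODBC and the system performance monitoring components preinstalled.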

集群规划

A节点 | B节点 | DW节点 | |

节点IP | 192.168.3.99 | 192.168.3.100 | 192.168.3.119 |

内网通信IP | 192.168.3.109 | 192.168.3.110 | 192.168.3.106 |

实例 | DSC0 | DSC1 | DW01 |

实例端口 | 5236 | 5236 | |

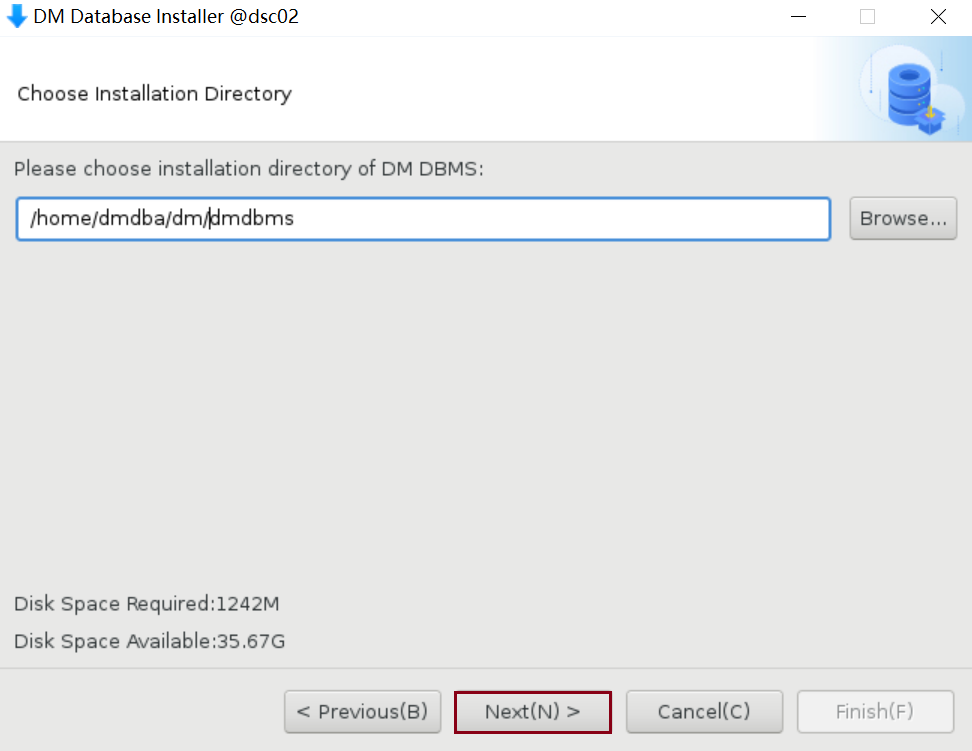

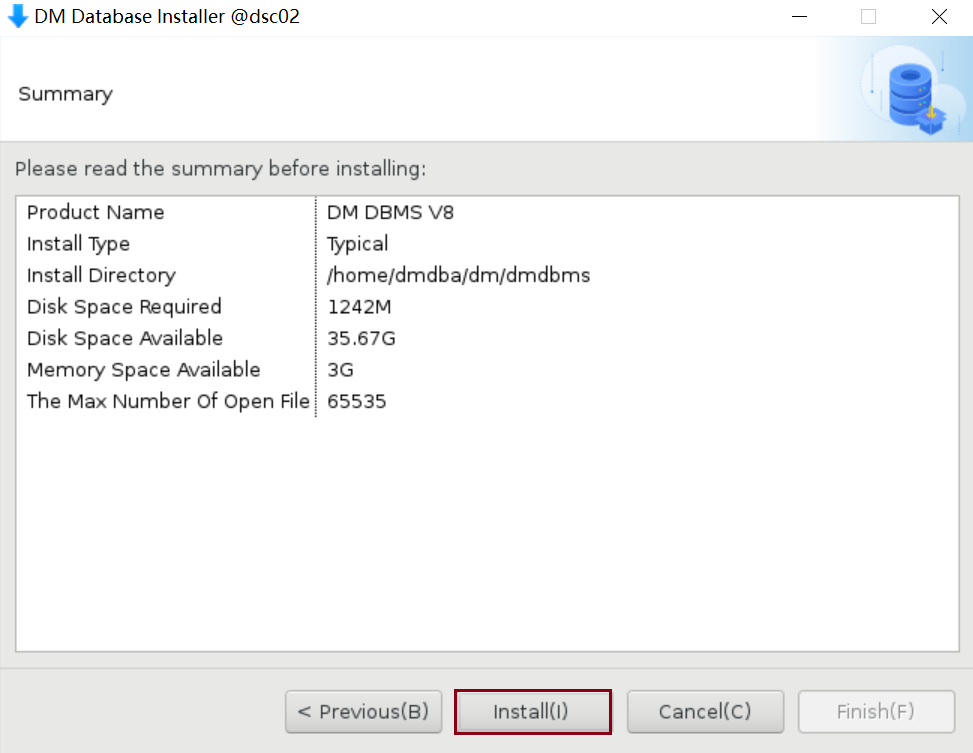

安装路径 | /home/dmdba/dm/dmdbms | /home/dmdba/dm/dmdbms | |

配置文件路径 | /home/dmdba/dm/config | /home/dmdba/dm/config | |

归档路径 | +DMARCH | +DMARCH | 单独挂载磁盘 |

数据存放 | + DMDATA | + DMDATA | 单独挂载磁盘 |

DCR信息 | +DCR | +DCR | 单独挂载磁盘 |

DMVTD信息 | +DMVTD | +DMVTD | 单独挂载磁盘 |

REDO日志 | +DMLOG | +DMLOG | 单独挂载磁盘 |

CSS端口 | 9341 | 9342 | |

DCR检查端口 | 9741 | 9742 | |

DCR_OGUID | 63635 | ||

NTP服务器 | NTP | NTP |

搭建 2 节点共享存储集群,端口规划如下:(实际中可以按需要修改端口号)

防火墙集群之间需开放以上所有端口,集群对客户端只需要开通数据库实例监听端口。
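If firewalld is kept running instead of being disabled, the port plan can be turned into firewall rules. The sketch below only prints the commands rather than running them. The helper name `print_firewall_cmds` is hypothetical, and the ports passed to it are the Node A values from the planning table.

```shell
#!/bin/sh
# Print (do not execute) the firewalld commands that open the given TCP ports.
# print_firewall_cmds is a hypothetical helper, not part of the DM tooling.
print_firewall_cmds() {
    for port in "$@"; do
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
    done
    echo "firewall-cmd --reload"
}

# Node A: instance port, CSS port, and DCR check port from the plan.
print_firewall_cmds 5236 9341 9741
```

Review the printed commands and run them as root on each node. Any further ports defined later in the configuration files would need to be added to the list.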

Adjusting operating system parameters

Edit /etc/hosts so that every node contains the following entries:

    [root@dsc01 ~]# cat /etc/hosts
    127.0.0.1 localhost
    ::1 localhost
    192.168.3.99 dsc01
    192.168.3.100 dsc02

The DM database should not be installed or maintained as root. Before installing, create a dedicated system user (dmdba) and group (dinstall) for the DM database.

Run the following commands to create the dinstall group and the dmdba user:

    groupadd dinstall
    useradd -g dinstall -m -d /home/dmdba -s /bin/bash dmdba
    echo 'dmdba' | passwd --stdin dmdba

Create the directories and set their ownership:

    mkdir -p /home/dmdba/dm/dmdbms
    mkdir -p /home/dmdba/dm/config
    mkdir -p /home/dmdba/dm/config/{dsc1_config,dsc2_config}
    chown -R dmdba:dinstall /home/dmdba/dm

Disable the firewall:

    systemctl status firewalld
    systemctl stop firewalld
    systemctl disable firewalld

Disable SELinux, immediately with setenforce and permanently by setting SELINUX=disabled in /etc/sysconfig/selinux:

    setenforce 0
    cp -rp /etc/sysconfig/selinux /etc/sysconfig/selinuxbak
    sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

Increase the shell history size:

    sed -i 's/^HISTSIZE=1000/HISTSIZE=10000/' /etc/profile
    source /etc/profile

Set the resource limits for the dmdba user:

    cp -rp /etc/security/limits.conf /etc/security/limits.conf.bak
    echo "dmdba soft nice 0" >>/etc/security/limits.conf
    echo "dmdba hard nice 0" >>/etc/security/limits.conf
    echo "dmdba soft as unlimited" >>/etc/security/limits.conf
    echo "dmdba hard as unlimited" >>/etc/security/limits.conf
    echo "dmdba soft fsize unlimited" >>/etc/security/limits.conf
    echo "dmdba hard fsize unlimited" >>/etc/security/limits.conf
    echo "dmdba soft nproc 65536" >>/etc/security/limits.conf
    echo "dmdba hard nproc 65536" >>/etc/security/limits.conf
    echo "dmdba soft nofile 65536" >>/etc/security/limits.conf
    echo "dmdba hard nofile 65536" >>/etc/security/limits.conf
    echo "dmdba soft core unlimited" >>/etc/security/limits.conf
    echo "dmdba hard core unlimited" >>/etc/security/limits.conf
    echo "dmdba soft data unlimited" >>/etc/security/limits.conf
    echo "dmdba hard data unlimited" >>/etc/security/limits.conf
    # vm.dirty_* are kernel parameters, so they go into /etc/sysctl.conf, not limits.conf
    echo "vm.dirty_background_ratio = 3" >>/etc/sysctl.conf
    echo "vm.dirty_ratio = 80" >>/etc/sysctl.conf
    echo "vm.dirty_expire_centisecs = 500" >>/etc/sysctl.conf
    echo "vm.dirty_writeback_centisecs = 100" >>/etc/sysctl.conf
    echo "Modifying /etc/security/limits.conf succeeded."

Enable pam_limits for login sessions (/etc/pam.d/login):

    cp -rp /etc/pam.d/login /etc/pam.d/login.bak
    echo "session required pam_limits.so" >> /etc/pam.d/login
    echo "session required /lib64/security/pam_limits.so" >> /etc/pam.d/login

Raise the default nproc limit:

    cp -rp /etc/security/limits.d/90-nproc.conf /etc/security/limits.d/90-nproc.bak
    echo "* soft nproc 65536" >> /etc/security/limits.d/90-nproc.conf

Set the kernel parameters and apply them:

    cp -rp /etc/sysctl.conf /etc/sysctl.conf.bak
    echo "fs.aio-max-nr = 1048576" >> /etc/sysctl.conf
    echo "kernel.core_pattern = /home/dmdba/dm_coredump/core-%e-%p-%t" >> /etc/sysctl.conf
    echo "fs.file-max = 6815744" >> /etc/sysctl.conf
    echo "kernel.shmall = 2097152" >> /etc/sysctl.conf
    echo "kernel.shmmax = 2074470400" >> /etc/sysctl.conf
    echo "kernel.shmmni = 4096" >> /etc/sysctl.conf
    echo "kernel.sem = 250 32000 100 128" >> /etc/sysctl.conf
    echo "net.ipv4.ip_local_port_range = 9000 65500" >> /etc/sysctl.conf
    echo "net.core.rmem_default = 262144" >> /etc/sysctl.conf
    echo "net.core.rmem_max = 4194304" >> /etc/sysctl.conf
    echo "net.core.wmem_default = 262144" >> /etc/sysctl.conf
    echo "net.core.wmem_max = 1048586" >> /etc/sysctl.conf
    echo "vm.swappiness=0" >> /etc/sysctl.conf
    echo "vm.overcommit_memory=0" >> /etc/sysctl.conf
    # Apply the parameters
    sysctl -p

Set the I/O scheduler and disable transparent huge pages at boot:

    echo "echo deadline > /sys/block/sda/queue/scheduler" >> /etc/rc.local
    echo "echo never > /sys/kernel/mm/redhat_transparent_hugepage/enabled" >> /etc/rc.local
    bash /etc/rc.local

Set the open-file limit and the locale in /etc/profile:

    echo "ulimit -n 65535" >> /etc/profile
    echo "export LANG=en_US.UTF-8" >> /etc/profile
    source /etc/profile

Disable GSSAPI authentication and DNS lookups in sshd:

    sed -i '/^#GSSAPIAuthentication/cGSSAPIAuthentication no' /etc/ssh/sshd_config
    sed -i '/^GSSAPIAuthentication/cGSSAPIAuthentication no' /etc/ssh/sshd_config
    sed -i '/^#UseDNS/cUseDNS no' /etc/ssh/sshd_config
    sed -i '/^UseDNS/cUseDNS no' /etc/ssh/sshd_config

Print the modified files to verify the changes:

    echo "limits.conf"
    cat /etc/security/limits.conf
    echo "limit login"
    cat /etc/pam.d/login
    echo "sysctl"
    cat /etc/sysctl.conf
    echo "rc.local"
    cat /etc/rc.local
    echo "environment variable"
    cat /etc/profile
    echo "nproc limits"
    cat /etc/security/limits.d/90-nproc.conf
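Reading the printed files by eye is error-prone, so the limits check can also be scripted. The sketch below looks for the soft and hard dmdba entries written in the previous steps and reports any that are absent. The helper name `check_dmdba_limits` is hypothetical, and the check does not validate the limit values themselves.

```shell
#!/bin/sh
# Report which expected "dmdba" entries are absent from a limits file.
# check_dmdba_limits is a hypothetical helper; the item list mirrors the
# echo commands used to populate /etc/security/limits.conf.
check_dmdba_limits() {
    limits_file=$1
    missing=0
    for item in nice as fsize nproc nofile core data; do
        for kind in soft hard; do
            if ! grep -Eq "^[[:space:]]*dmdba[[:space:]]+${kind}[[:space:]]+${item}[[:space:]]" "$limits_file"; then
                echo "missing: dmdba ${kind} ${item}"
                missing=1
            fi
        done
    done
    return "$missing"
}

# Usage on a node (read-only):
#   check_dmdba_limits /etc/security/limits.conf
```

The function returns a non-zero status when any entry is missing, so it can gate later steps in a provisioning script.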

Check on every node that the iSCSI initiator packages are installed:

    rpm -qa|grep iscsi-initiator-utils
    iscsi-initiator-utils-6.2.0.874-4.el7.x86_64
    iscsi-initiator-utils-iscsiuio-6.2.0.874-4.el7.x86_64

Discover the storage IQN on every node:

    iscsiadm --mode discovery --type sendtargets --portal 192.168.3.120
    192.168.3.120:3260,1 iqn.2006-01.com.openfiler:tsn.aeb038ba3ada
    192.168.3.121:3260,1 iqn.2006-01.com.openfiler:tsn.aeb038ba3ada
    192.168.3.122:3260,1 iqn.2006-01.com.openfiler:tsn.aeb038ba3ada
    192.168.3.123:3260,1 iqn.2006-01.com.openfiler:tsn.aeb038ba3ada

Login (attach) command. Because of the server version used here, no login was needed, so the command is recorded for reference only:

    iscsiadm --mode node --targetname iqn.2006-01.com.openfiler:tsn.aeb038ba3ada --portal 192.168.3.120:3260 --login

List the attached disks:

    iscsiadm -m session -P 3

The iSCSI service status shows related information as well (for reference only):

    systemctl status iscsi.service

Installing the multipath management packages

    rpm -qa|grep device-mapper-multipath
    device-mapper-multipath-libs-0.4.9-111.el7.x86_64
    device-mapper-multipath-0.4.9-111.el7.x86_64
    # Installing with yum is preferred
    yum install device-mapper-multipath

Installing the multipath packages from RPM files (for reference only; not part of this installation):

    rpm -ivh device-mapper-multipath-libs-0.4.9-111.el7.x86_64.rpm
    rpm -ivh device-mapper-multipath-0.4.9-111.el7.x86_64.rpm

Load the multipath modules into the kernel:

    modprobe dm-multipath
    modprobe dm-round-robin
    # Check that the modules were loaded
    lsmod |grep multipath

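Partitioning the raw devices

According to the plan, the database is installed under /dm8/dmdbms. Install only the database software for now; do not create a database instance yet.

Run fdisk on each disk, enter n, p, 1, Enter, and +XXM in turn to partition disk x, and then enter w to save the partition layout:

    fdisk /dev/sdxxx

On each of the other nodes, run the following command to refresh the partition table:

    partprobe /dev/sdXX

Getting the disk WWIDs on CentOS 7

    for i in $(awk '{print $4}' /proc/partitions | grep sd); do echo "Device: $i WWID: $(/usr/lib/udev/scsi_id --page=0x83 --whitelisted --device=/dev/$i)"; done | sort -k4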

Writing the multipath configuration file

All steps in this section are run as root and must be performed on both instance nodes.

Edit the /etc/multipath.conf configuration file:

    defaults {
        user_friendly_names yes
        find_multipaths yes
    }
    blacklist {
        devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
        devnode "^hd[a-z]"
        # sda is the local disk here, so it is blacklisted
        devnode "^sda"
    }
    multipaths {
        multipath {
            wwid 14f504e46494c45524c71563055302d75556c762d4c715834
            alias asmdb01
            path_grouping_policy multibus
            path_selector "round-robin 0"
            failback immediate
        }
        multipath {
            wwid 14f504e46494c4552335541484b782d5255564c2d43584848
            alias asmredo01
            path_grouping_policy multibus
            path_selector "round-robin 0"
            failback immediate
        }
        multipath {
            wwid 14f504e46494c455248715978684a2d5a456f662d42616144
            alias asmdcr01
            path_grouping_policy multibus
            path_selector "round-robin 0"
            failback immediate
        }
        multipath {
            wwid 14f504e46494c455263306b6b30332d696e564a2d4a767234
            alias asmdvote01
            path_grouping_policy multibus
            path_selector "round-robin 0"
            failback immediate
        }
    }
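Each `multipath` stanza differs only in its WWID and alias, so the stanzas can be generated rather than typed by hand. The following is a minimal sketch. The helper name `multipath_stanza` is hypothetical, and the attribute values are copied from the configuration above.

```shell
#!/bin/sh
# Emit one "multipath { ... }" stanza for /etc/multipath.conf.
# multipath_stanza is a hypothetical helper; it only prints text.
multipath_stanza() {
    wwid=$1
    alias_name=$2
    cat <<EOF
    multipath {
        wwid                  ${wwid}
        alias                 ${alias_name}
        path_grouping_policy  multibus
        path_selector         "round-robin 0"
        failback              immediate
    }
EOF
}

# Example: the data disk from the configuration above.
multipath_stanza 14f504e46494c45524c71563055302d75556c762d4c715834 asmdb01
```

The output is meant to be pasted inside the `multipaths { }` section. Generating it from the WWID list avoids copy errors in the long hexadecimal identifiers.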

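Starting the service

The multipathd service:

    # Check the status
    systemctl status multipathd
    # Start the service
    systemctl start multipathd
    # Enable start at boot
    systemctl enable multipathd
    # Check the boot-time unit list
    systemctl list-unit-files|grep multipathd
    # List the storage disks
    multipath -ll
    # Reload the configuration
    systemctl reload multipathd

Verifying that the storage was deployed successfully

    multipath -ll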

Binding raw disks with UDEV

Check whether udev is installed on the Linux host:

    rpm -qa|grep udev
    system-config-printer-udev-1.4.1-19.el7.x86_64
    libgudev1-219-78.el7_9.5.x86_64
    python-pyudev-0.15-9.el7.noarch
    [root@dsc01 ~]# yum install udev*
    Loaded plugins: fastestmirror, langpacks
    Loading mirror speeds from cached hostfile
     * base: mirrors.ustc.edu.cn
     * extras: mirrors.ustc.edu.cn
     * updates: mirrors.ustc.edu.cn
    Package systemd-219-78.el7_9.5.x86_64 already installed and latest version

Look up the WWID (DM_UUID) as seen by the operating system:

    udevadm info --query=all --name=/dev/mapper/asmdb01 |grep -i DM_UUID

Binding the raw devices

Configure the udev rules:

    vim /etc/udev/rules.d/99-dm-asm.rules
    KERNEL=="dm-*",ENV{DM_UUID}=="mpath-14f504e46494c45524c71563055302d75556c762d4c715834",SYMLINK+="raw/dscdb01",OWNER="dmdba",GROUP="dinstall",MODE="0660"
    KERNEL=="dm-*",ENV{DM_UUID}=="mpath-14f504e46494c4552335541484b782d5255564c2d43584848",SYMLINK+="raw/dscredo01",OWNER="dmdba",GROUP="dinstall",MODE="0660"
    KERNEL=="dm-*",ENV{DM_UUID}=="mpath-14f504e46494c455248715978684a2d5a456f662d42616144",SYMLINK+="raw/dscdcr01",OWNER="dmdba",GROUP="dinstall",MODE="0660"
    KERNEL=="dm-*",ENV{DM_UUID}=="mpath-14f504e46494c455263306b6b30332d696e564a2d4a767234",SYMLINK+="raw/dscvote01",OWNER="dmdba",GROUP="dinstall",MODE="0660"

Copy the finished rules file into /etc/udev/rules.d/ on every DSC node.
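Because every rule follows the same pattern, a small generator helps keep the owner, group, and mode identical across all lines. This sketch prints one rule per call. The helper name `udev_rule` is hypothetical, and the owner, group, and mode are the ones used in this guide.

```shell
#!/bin/sh
# Print one udev rule that links a multipath device to /dev/raw/<link>.
# udev_rule is a hypothetical helper; it only prints text.
udev_rule() {
    wwid=$1
    link=$2
    printf 'KERNEL=="dm-*",ENV{DM_UUID}=="mpath-%s",SYMLINK+="raw/%s",OWNER="dmdba",GROUP="dinstall",MODE="0660"\n' "$wwid" "$link"
}

# Example: the redo disk from the rules above.
udev_rule 14f504e46494c4552335541484b782d5255564c2d43584848 dscredo01
```

Redirecting the output of several calls into 99-dm-asm.rules produces a file that can be copied unchanged to the other nodes.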

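Load the udev rules

Reload the internal state of the udev daemon and re-trigger the device events:

    udevadm control --reload
    udevadm trigger

Check that the device symlinks exist and that their permissions, owner, and group are correct:

    ls -la /dev/raw/dsc*

The following step is run as root and must be performed on both instance nodes.

Edit /etc/rc.local and append the following lines:

    chown dmdba:dinstall /dev/raw/dsc*
    chown dmdba:dinstall /dev/sd*

Use blockdev --getsize64 /dev/raw/dsc* to check that the raw devices are attached. The binding succeeded if the command returns the size of each raw device:

    blockdev --getsize64 /dev/raw/dscdb01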
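Before querying sizes, it can be useful to confirm that all four links exist on each node. The sketch below only tests for existence and does not call `blockdev`. The helper name `missing_links` is hypothetical.

```shell
#!/bin/sh
# List expected device links that do not exist; return non-zero if any is missing.
# missing_links is a hypothetical helper and performs existence checks only.
missing_links() {
    rc=0
    for dev in "$@"; do
        if [ ! -e "$dev" ]; then
            echo "missing: $dev"
            rc=1
        fi
    done
    return "$rc"
}

# Usage on a cluster node:
#   missing_links /dev/raw/dscdb01 /dev/raw/dscredo01 /dev/raw/dscdcr01 /dev/raw/dscvote01
```

If a link is reported missing, recheck the WWID in the udev rule for that device and re-run `udevadm trigger`.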

Installing the DM database software on every node

Create the user, the group, and the directory ownership:

    groupadd dinstall -g 2001
    useradd -g dinstall -m -d /home/dmdba -s /bin/bash dmdba
    mkdir -p /home/dmdba/dm/dmdbms
    chown -R dmdba:dinstall /home/dmdba/dm

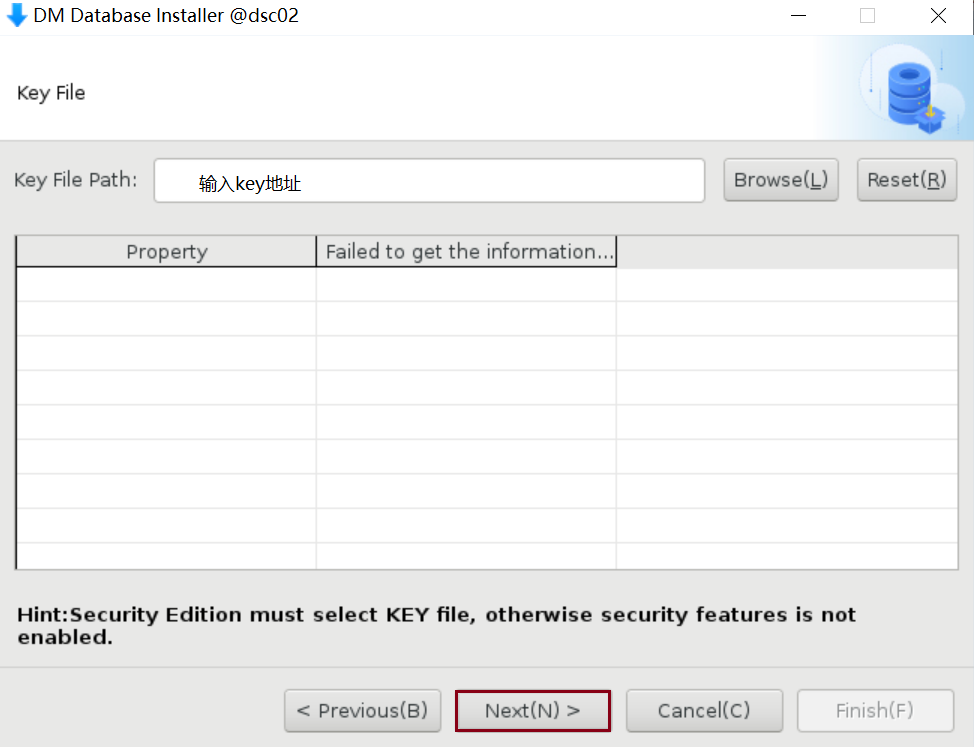

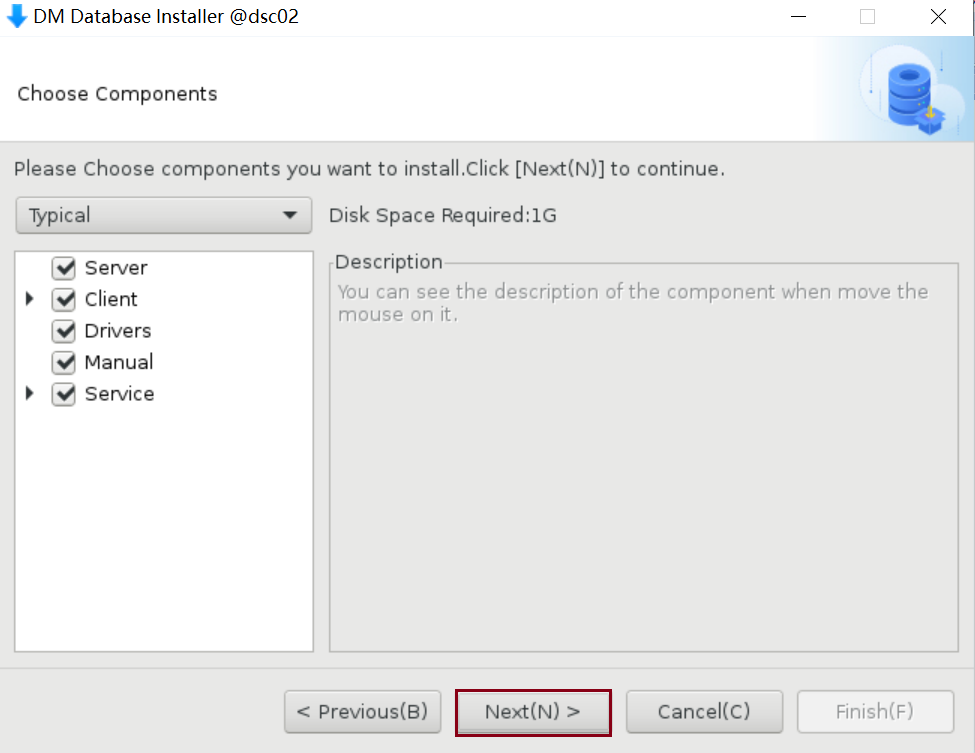

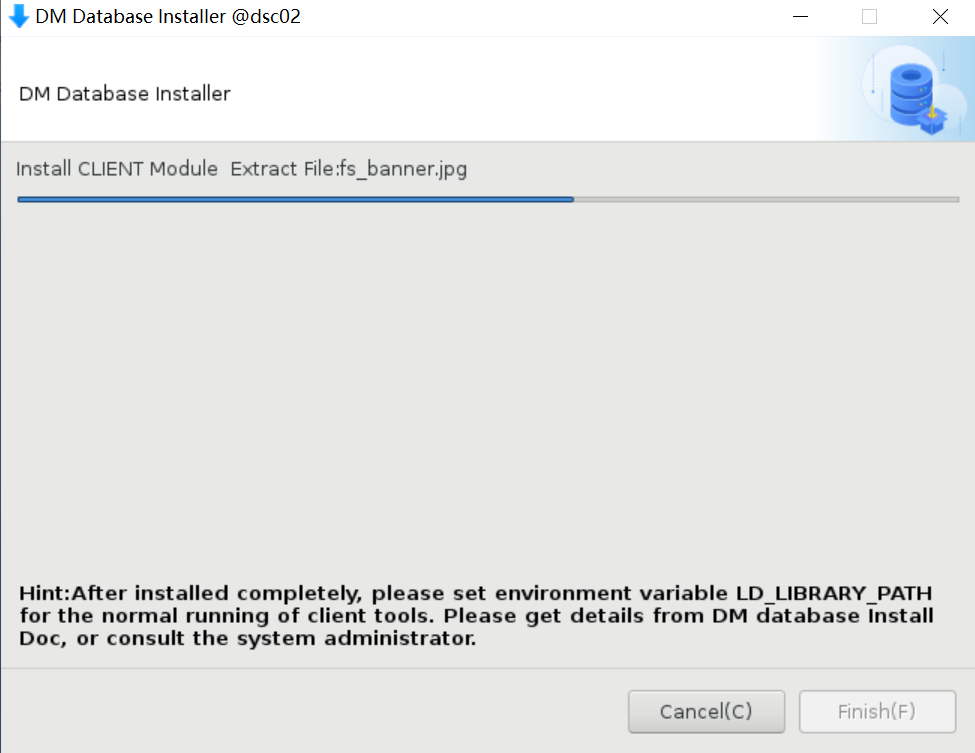

Install the DM database software:

    export DISPLAY=192.168.3.49:0.0
    ./DMInstall.bin

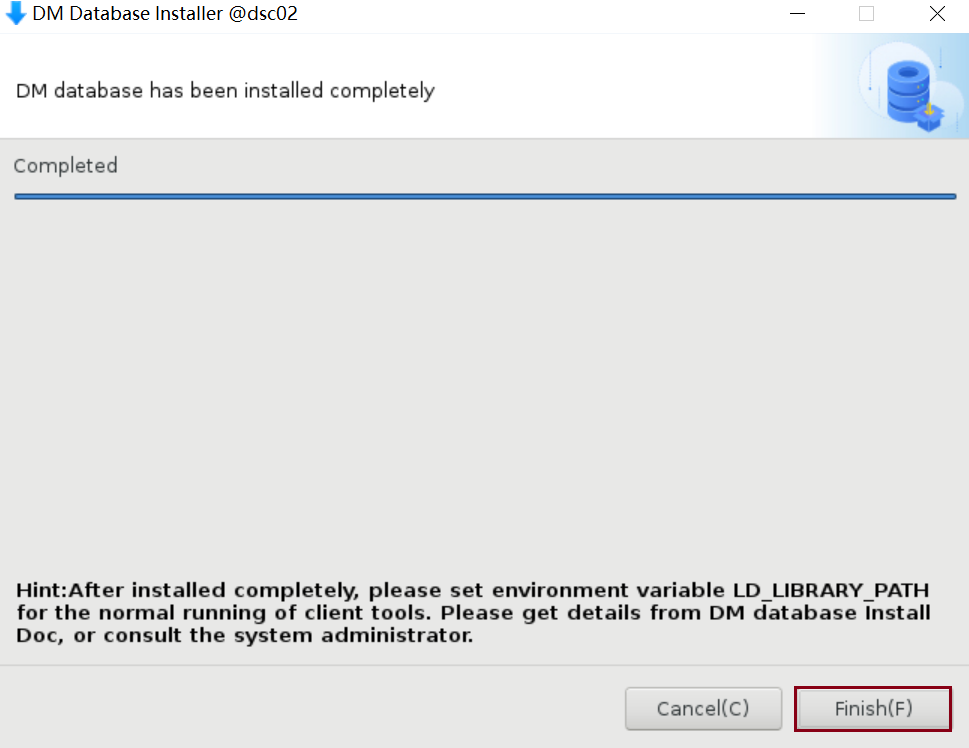

The DM database software must be installed on every node.

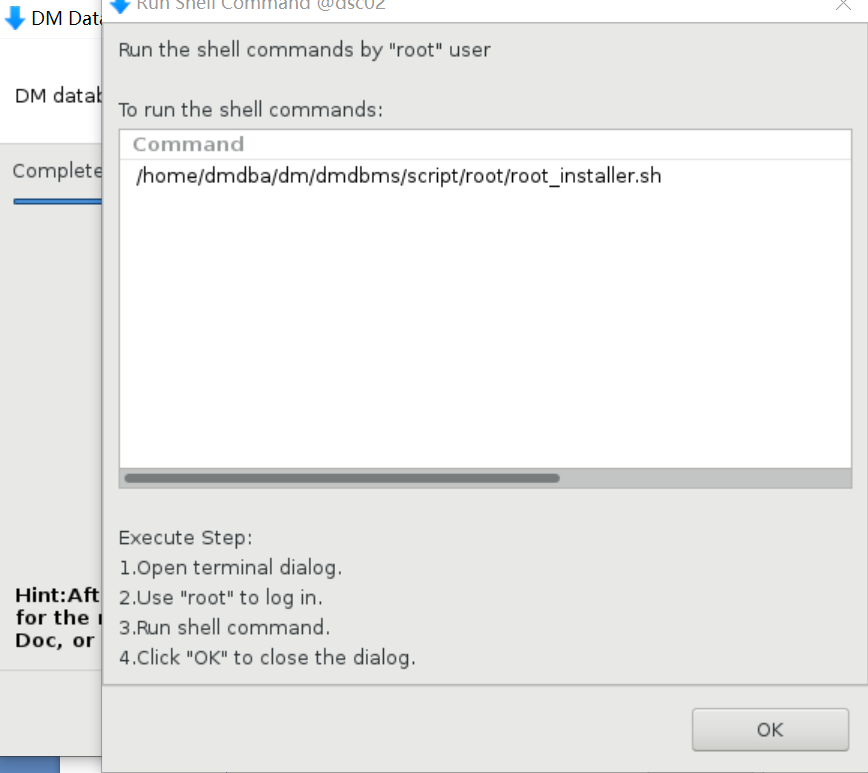

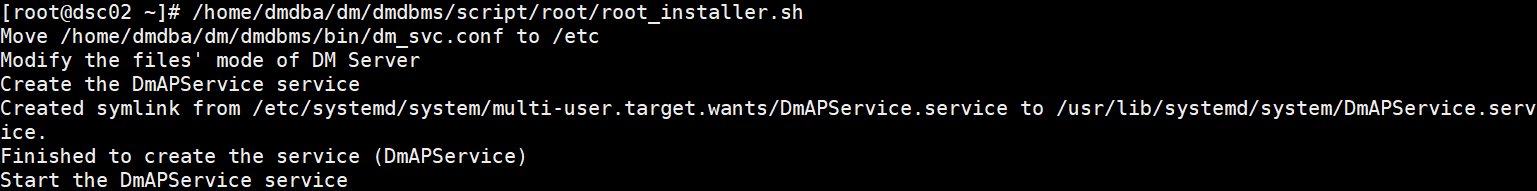

Run the script as root when the installer prompts for it.

Click Finish. Do not create a database at this point.

Configuring dmdcr_cfg.ini (identical on every node)

The file is created under /home/dmdba/dm/config:

    vim /home/dmdba/dm/config/dmdcr_cfg.ini

dmdcr_cfg.ini is the configuration file used to format the DCR disk and the voting disk.

It contains the global cluster information, the cluster group information, the ASM group, and the instance (endpoint) information within each group.

    DCR_N_GRP = 3
    DCR_VTD_PATH = /dev/raw/dscvote01
    DCR_OGUID = 63635

    [GRP]
    DCR_GRP_TYPE = CSS
    DCR_GRP_NAME = GRP_CSS
    DCR_GRP_N_EP = 2
    DCR_GRP_DSKCHK_CNT = 60
    [GRP_CSS]
    DCR_EP_NAME = CSS0
    DCR_EP_HOST = 192.168.3.99
    DCR_EP_PORT = 9341
    [GRP_CSS]
    DCR_EP_NAME = CSS1
    DCR_EP_HOST = 192.168.3.100
    DCR_EP_PORT = 9342

    [GRP]
    DCR_GRP_TYPE = ASM
    DCR_GRP_NAME = GRP_ASM
    DCR_GRP_N_EP = 2
    DCR_GRP_DSKCHK_CNT = 60
    [GRP_ASM]
    DCR_EP_NAME = ASM0
    DCR_EP_SHM_KEY = 93360
    DCR_EP_SHM_SIZE = 1024
    DCR_EP_HOST = 192.168.3.99
    DCR_EP_PORT = 9351
    DCR_EP_ASM_LOAD_PATH = /dev/raw
    [GRP_ASM]
    DCR_EP_NAME = ASM1
    DCR_EP_SHM_KEY = 93361
    DCR_EP_SHM_SIZE = 1024
    DCR_EP_HOST = 192.168.3.100
    DCR_EP_PORT = 9352
    DCR_EP_ASM_LOAD_PATH = /dev/raw

    [GRP]
    DCR_GRP_TYPE = DB
    DCR_GRP_NAME = GRP_DSC
    DCR_GRP_N_EP = 2
    DCR_GRP_DSKCHK_CNT = 60
    [GRP_DSC]
    DCR_EP_NAME = DSC0
    DCR_EP_SEQNO = 0
    DCR_EP_PORT = 5236
    DCR_CHECK_PORT = 9741
    [GRP_DSC]
    DCR_EP_NAME = DSC1
    DCR_EP_SEQNO = 1
    DCR_EP_PORT = 5236
    DCR_CHECK_PORT = 9742
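One easy consistency check on this file is that DCR_N_GRP equals the number of `[GRP]` sections that follow. The sketch below counts both with awk. The helper name `check_dcr_groups` is hypothetical, and it assumes one `KEY = VALUE` pair per line as in the listing above.

```shell
#!/bin/sh
# Compare the declared DCR_N_GRP with the number of [GRP] sections in the file.
# check_dcr_groups is a hypothetical helper; it reads the file and changes nothing.
check_dcr_groups() {
    awk -F'=' '
        /^[ \t]*DCR_N_GRP[ \t]*=/ { declared = $2 + 0 }   # numeric value after "="
        /^[ \t]*\[GRP\][ \t]*$/   { found++ }             # matches [GRP] only, not [GRP_CSS]
        END {
            printf "declared=%d found=%d\n", declared, found
            exit ((declared == found + 0) ? 0 : 1)
        }
    ' "$1"
}

# Usage:
#   check_dcr_groups /home/dmdba/dm/config/dmdcr_cfg.ini
```

The function exits with a non-zero status on a mismatch, so it can be run before formatting the DCR and voting disks.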

Initializing the ASM disks (same operation on every node)

Change to /home/dmdba/dm/dmdbms/bin and start the tool:

    ./dmasmcmd

Initialize the ASM data disks:

    create asmdisk '/dev/raw/dscdb01' 'dmdata01'
    create votedisk '/dev/raw/dscvote01' 'dmvtd01'
    create asmdisk '/dev/raw/dscredo01' 'dmrlog01'
    create dcrdisk '/dev/raw/dscdcr01' 'dmdcr'
    init dcrdisk '/dev/raw/dscdcr01' from '/home/dmdba/dm/config/dmdcr_cfg.ini' identified by 'aaabbb'
    init votedisk '/dev/raw/dscvote01' from '/home/dmdba/dm/config/dmdcr_cfg.ini'

Creating the dmasvrmal.ini configuration file (on every node)

The file is created under /home/dmdba/dm/config:

    MAL_CHECK_INTERVAL = 61
    MAL_CONN_FAIL_INTERVAL = 61
    [MAL_INST1]
    MAL_INST_NAME = ASM0
    MAL_HOST = 192.168.3.99
    MAL_PORT = 9355
    [MAL_INST2]
    MAL_INST_NAME = ASM1
    MAL_HOST = 192.168.3.100
    MAL_PORT = 9366

Archive configuration dmarch.ini (on every node)

Node 1 (DSC0), in /home/dmdba/dm/config/dsc1_config:

    [ARCHIVE_LOCAL1]
    ARCH_TYPE = LOCAL
    ARCH_DEST = +DMLOG/ARCH0
    ARCH_FILE_SIZE = 2048
    ARCH_SPACE_LIMIT = 204800
    [ARCH_REMOTE1]
    ARCH_TYPE = REMOTE
    ARCH_DEST = DSC1
    ARCH_INCOMING_PATH = +DMLOG/ARCH1
    ARCH_FILE_SIZE = 2048
    ARCH_SPACE_LIMIT = 204800
    ARCH_LOCAL_SHARE = 1

Node 2 (DSC1), in /home/dmdba/dm/config/dsc2_config:

    [ARCHIVE_LOCAL1]
    ARCH_TYPE = LOCAL
    ARCH_DEST = +DMLOG/ARCH1
    ARCH_FILE_SIZE = 2048
    ARCH_SPACE_LIMIT = 204800
    [ARCH_REMOTE1]
    ARCH_TYPE = REMOTE
    ARCH_DEST = DSC0
    ARCH_INCOMING_PATH = +DMLOG/ARCH0
    ARCH_FILE_SIZE = 2048
    ARCH_SPACE_LIMIT = 204800
    ARCH_LOCAL_SHARE = 1

dmmal.ini is identical on every node. Two nodes are used in this test.

Node 1: /home/dmdba/dm/config/dsc1_config

Node 2: /home/dmdba/dm/config/dsc2_config

    [mal_inst0]
    mal_inst_name = DSC0
    mal_host = 192.168.3.99
    mal_port = 9255
    [mal_inst1]
    mal_inst_name = DSC1
    mal_host = 192.168.3.100
    mal_port = 9266

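Configuring dmdcr.ini (on every node)

dmdcr.ini records the DCR disk path, the instance sequence number, and related information. If the DCR_INI parameter is not specified, dmasmsvr looks for dmdcr.ini in the current directory by default.

The file is created under /home/dmdba/dm/config:

    DMDCR_PATH = /dev/raw/dscdcr01
    DMDCR_MAL_PATH = /home/dmdba/dm/config/dmasvrmal.ini  # path of the MAL configuration file to use
    DMDCR_SEQNO = 0
    #DMDCR_ASM_RESTART_INTERVAL = 70
    # ASM restart parameter, started from the command line
    #DMDCR_ASM_STARTUP_CMD = /home/dmdba/dm/dmdbms/bin/DmASMSvrService start
    #DMDCR_DB_RESTART_INTERVAL = 70
    # DB restart parameter, started from the command line
    #DMDCR_DB_STARTUP_CMD = /home/dmdba/dm/dmdbms/bin/DmService start
    #DMDCR_AUTO_OPEN_CHECK = 90

On the other nodes only one parameter differs, DMDCR_SEQNO (0----10). On the second node:

    DMDCR_SEQNO = 1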

Starting the ASM services

Start dmcss manually:

    cd /home/dmdba/dm/dmdbms/bin
    nohup ./dmcss DCR_INI=/home/dmdba/dm/config/dmdcr.ini &

Start dmasmsvr manually:

    nohup ./dmasmsvr DCR_INI=/home/dmdba/dm/config/dmdcr.ini &

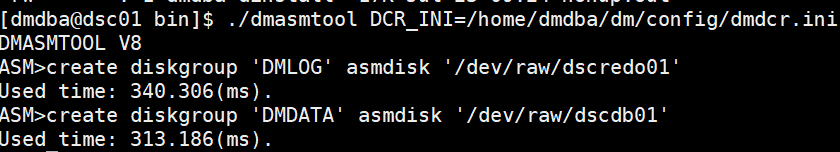

Creating the DMASM disk groups (on one node only, as dmdba)

Run the following in /home/dmdba/dm/dmdbms/bin:

    ./dmasmtool DCR_INI=/home/dmdba/dm/config/dmdcr.ini
    # Create the log disk group
    create diskgroup 'DMLOG' asmdisk '/dev/raw/dscredo01'
    # Create the data disk group
    create diskgroup 'DMDATA' asmdisk '/dev/raw/dscdb01'

Creating the database initialization file

This step is run as dmdba and must be done on both nodes. The file is identical for both instances.

Create and edit dminit.ini under /home/dmdba/dm/config:

    db_name = DSC
    system_path = +DMDATA/
    system = +DMDATA/DAMENG/system.dbf
    system_size = 128
    roll = +DMDATA/DAMENG/roll.dbf
    roll_size = 128
    main = +DMDATA/DAMENG/main.dbf
    main_size = 128
    ctl_path = +DMDATA/DAMENG/dm.ctl
    ctl_size = 8
    dcr_path = /dev/raw/dscdcr01
    dcr_seqno = 0
    auto_overwrite = 1
    [DSC0]
    config_path = /home/dmdba/dm/config/dsc1_config
    port_num = 5236
    mal_host = 192.168.3.99
    mal_port = 9255
    log_path = +DMLOG/dsc0_01.log
    log_path = +DMLOG/dsc0_02.log
    log_size = 2048
    page_size = 32
    extent_size = 16
    case_sensitive = Y
    [DSC1]
    config_path = /home/dmdba/dm/config/dsc2_config
    port_num = 5236
    mal_host = 192.168.3.100
    mal_port = 9266
    log_path = +DMLOG/dsc1_01.log
    log_path = +DMLOG/dsc1_02.log
    log_size = 2048
    page_size = 32
    extent_size = 16
    case_sensitive = Y
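A mistyped octet in `mal_host` is hard to spot and only surfaces when the instances fail to reach each other. The sketch below compares every `mal_host` value in dminit.ini against the planned node IPs. The helper name `check_mal_hosts` is hypothetical, and it assumes one `key = value` pair per line.

```shell
#!/bin/sh
# Flag mal_host values in an ini file that are not in the list of planned IPs.
# check_mal_hosts is a hypothetical helper; arguments: <ini file> <allowed IP>...
check_mal_hosts() {
    ini_file=$1
    shift
    allowed=" $* "
    rc=0
    for host in $(awk -F'=' '/^[ \t]*mal_host[ \t]*=/ { gsub(/[ \t]/, "", $2); print $2 }' "$ini_file"); do
        case "$allowed" in
            *" $host "*) ;;                                   # planned address
            *) echo "unexpected mal_host: $host"; rc=1 ;;     # likely a typo
        esac
    done
    return "$rc"
}

# Usage:
#   check_mal_hosts /home/dmdba/dm/config/dminit.ini 192.168.3.99 192.168.3.100
```

Running it before dminit avoids having to re-initialize the database after a failed start.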

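Initializing the database (in /home/dmdba/dm/dmdbms/bin)

This step is run as dmdba on any one node.

    ./dminit control=/home/dmdba/dm/config/dminit.ini

1. After initialization, the instance files are located under the config_path values set in dminit.ini.

2. After initialization, the configuration directory contains two instance directories, dsc1_config and dsc2_config.

3. Send the one that belongs to the other instance to the other node:

    scp -rp * dmdba@192.168.3.100:/home/dmdba/dm/config/dsc2_config/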

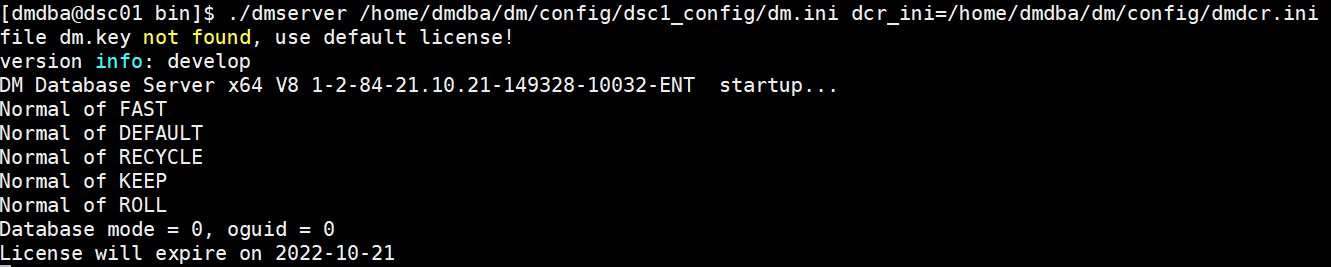

Test-starting the instances (as dmdba, on every node)

    # Instance A
    cd /home/dmdba/dm/dmdbms/bin
    ./dmserver /home/dmdba/dm/config/dsc1_config/dm.ini dcr_ini=/home/dmdba/dm/config/dmdcr.ini
    # Instance B
    cd /home/dmdba/dm/dmdbms/bin
    ./dmserver /home/dmdba/dm/config/dsc2_config/dm.ini dcr_ini=/home/dmdba/dm/config/dmdcr.ini

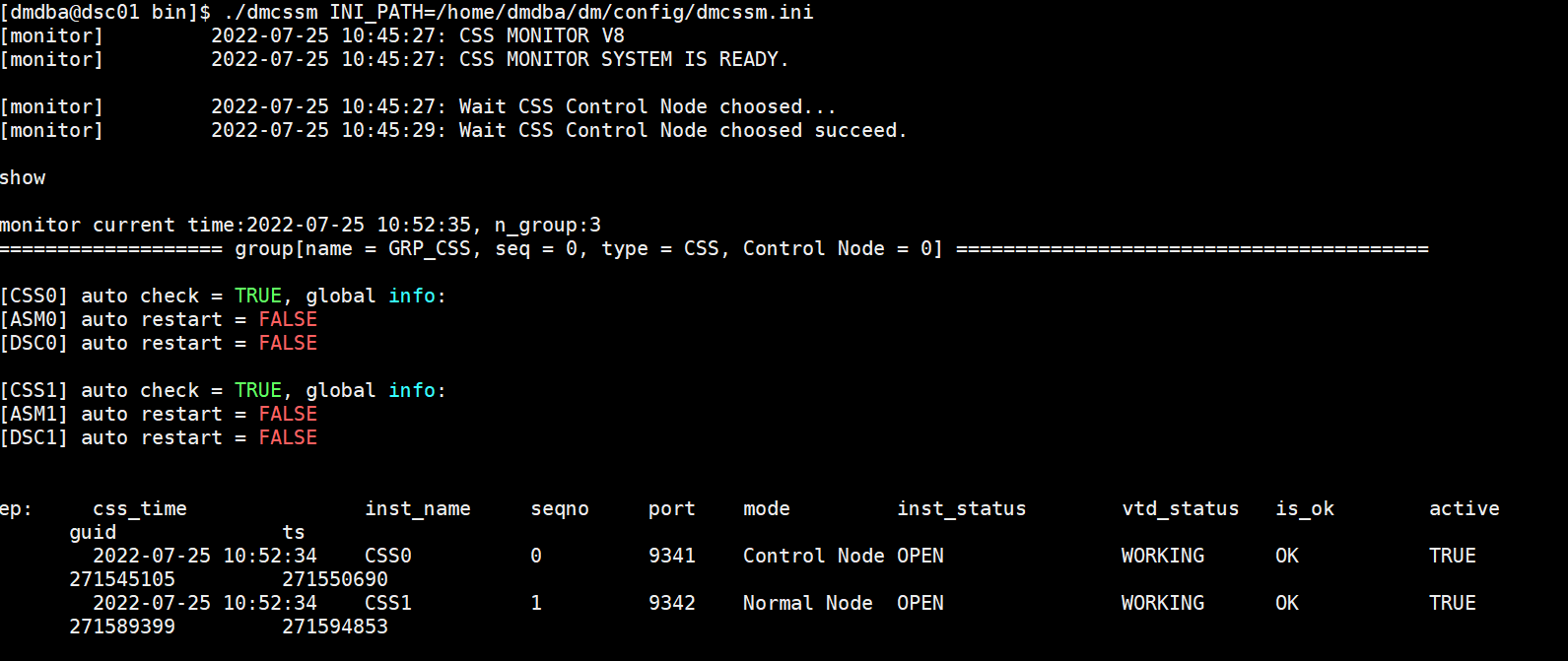

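Creating the monitor configuration dmcssm.ini on any one node

This step is run as dmdba on any one node.

    CSSM_OGUID = 63635
    CSSM_CSS_IP = 192.168.3.99:9341
    CSSM_CSS_IP = 192.168.3.100:9342
    CSSM_LOG_PATH = /home/dmdba/dm/dmdbms/log
    CSSM_LOG_FILE_SIZE = 32
    CSSM_LOG_SPACE_LIMIT = 1024

The monitor makes it possible to observe the overall running state of the cluster.

Start the monitor with the configuration file:

    cd /home/dmdba/dm/dmdbms/bin
    ./dmcssm INI_PATH=/home/dmdba/dm/config/dmcssm.ini

Common commands:

    show            show information for all groups
    show config     show the dmdcr_cfg.ini configuration
    show monitor    show all monitors currently connected to the primary CSS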

Registering the services for each instance

Node 1:

    cd /home/dmdba/dm/dmdbms/script/root/
    /home/dmdba/dm/dmdbms/script/root/dm_service_installer.sh -t dmcss -dcr_ini /home/dmdba/dm/config/dmdcr.ini -p CSS
    /home/dmdba/dm/dmdbms/script/root/dm_service_installer.sh -t dmasmsvr -dcr_ini /home/dmdba/dm/config/dmdcr.ini -y DmCSSServiceCSS.service -p ASM
    /home/dmdba/dm/dmdbms/script/root/dm_service_installer.sh -t dmserver -dm_ini /home/dmdba/dm/config/dsc1_config/dm.ini -dcr_ini /home/dmdba/dm/config/dmdcr.ini -y DmASMSvrServiceASM.service -p DSC

Node 2:

    cd /home/dmdba/dm/dmdbms/script/root/
    /home/dmdba/dm/dmdbms/script/root/dm_service_installer.sh -t dmcss -dcr_ini /home/dmdba/dm/config/dmdcr.ini -p CSS
    /home/dmdba/dm/dmdbms/script/root/dm_service_installer.sh -t dmasmsvr -dcr_ini /home/dmdba/dm/config/dmdcr.ini -y DmCSSServiceCSS.service -p ASM
    /home/dmdba/dm/dmdbms/script/root/dm_service_installer.sh -t dmserver -dm_ini /home/dmdba/dm/config/dsc2_config/dm.ini -dcr_ini /home/dmdba/dm/config/dmdcr.ini -y DmASMSvrServiceASM.service -p DSC
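The two nodes run the same three registrations and differ only in the instance configuration directory. The sketch below prints the commands for a given directory so they can be reviewed before being run as root. The helper name `print_register_cmds` is hypothetical, and the paths and flags are the ones used above.

```shell
#!/bin/sh
# Print the three service registration commands for one node.
# print_register_cmds is a hypothetical helper; argument: dsc1_config or dsc2_config.
print_register_cmds() {
    cfg_dir=$1
    root=/home/dmdba/dm
    inst="$root/dmdbms/script/root/dm_service_installer.sh"
    # CSS first, then ASM depending on CSS, then the database depending on ASM.
    echo "$inst -t dmcss -dcr_ini $root/config/dmdcr.ini -p CSS"
    echo "$inst -t dmasmsvr -dcr_ini $root/config/dmdcr.ini -y DmCSSServiceCSS.service -p ASM"
    echo "$inst -t dmserver -dm_ini $root/config/$cfg_dir/dm.ini -dcr_ini $root/config/dmdcr.ini -y DmASMSvrServiceASM.service -p DSC"
}

# Node 1:
print_register_cmds dsc1_config
```

The dependency chain (CSS, then ASM, then the database server) is expressed through the `-y` options, which is why the order of the three commands matters.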

Removing the services

    ./dm_service_uninstaller.sh -n DmCSSServiceCSS
    ./dm_service_uninstaller.sh -n DmASMSvrServiceASM

disql SYSDBA/SYSDBA@rac

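III. Configuring application connections

The client host needs a dm_svc.conf file (on a machine without the DM database installed, simply create it). The file location is:

- For 32-bit DM installed on a Win32 platform, the file is in the %SystemRoot%\system32 directory;

- For 64-bit DM installed on a Win64 platform, the file is in the %SystemRoot%\system32 directory;

- For 32-bit DM installed on a Win64 platform, the file is in the %SystemRoot%\SysWOW64 directory;

- On Linux, the file is in the /etc directory.

Edit the file with vi /etc/dm_svc.conf:

    TIME_ZONE=(+8:00)
    LANGUAGE=(cn)
    DSC1=(192.168.3.99:5236,192.168.3.100:5236)
    [DSC1]
    LOGIN_ENCRYPT=(0)
    SWITCH_TIME=(20)
    SWITCH_INTERVAL=(2000)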
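When the same file has to be placed on several client hosts, rendering it from the node list keeps the copies consistent. This is a minimal sketch. The helper name `render_dm_svc` is hypothetical, the port is fixed at 5236 as planned, and the option values are the ones shown above.

```shell
#!/bin/sh
# Render a dm_svc.conf for a service name and a list of node IPs.
# render_dm_svc is a hypothetical helper; it prints to stdout and writes no file.
render_dm_svc() {
    svc_name=$1
    shift
    hosts=""
    for h in "$@"; do
        # Join entries with commas, appending the instance port to each IP.
        hosts="${hosts:+$hosts,}${h}:5236"
    done
    cat <<EOF
TIME_ZONE=(+8:00)
LANGUAGE=(cn)
${svc_name}=(${hosts})
[${svc_name}]
LOGIN_ENCRYPT=(0)
SWITCH_TIME=(20)
SWITCH_INTERVAL=(2000)
EOF
}

render_dm_svc DSC1 192.168.3.99 192.168.3.100
```

Redirect the output to /etc/dm_svc.conf on a Linux client once the content has been checked.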

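Disabling automatic DB restart

In the dmcssm console, enter the following command to disable automatic restart for the DB group:

    set GRP_DSC auto restart off

Use the automatic parameter tuning script (AutoParaAdj2-6.sql) to modify dm.ini, and place it in the /home/dmdba/dm/dm_config/ directory. The DM8 cluster setup is now complete.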

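DSC data backup (as dmdba)

Start dmrman, take a full backup, and copy the backup set to the DW machine:

    dmrman
    BACKUP DATABASE '/home/dmdba/dm/config/dsc1_config/dm.ini' FULL BACKUPSET '/backup';
    scp -r /backup/dmrman dmdba@192.168.3.119:/backup/

Initialize the instance and restore the data

On the DW machine, restore the data with dmrman:

    dmrman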

RMAN> restore database '/home/dmdba/dm/dm.ini' from backupset '/backup'

restore database '/home/dmdba/dm/dm.ini' from backupset '/backup'

file dm.key not found, use default license!

Normal of FAST

Normal of DEFAULT

Normal of RECYCLE

Normal of KEEP

Normal of ROLL

[Percent:100.00%][Speed:0.00M/s][Cost:00:00:02][Remaining:00:00:00]

restore successfully.

time used: 00:00:02.671

RMAN> recover database '/home/dmdba/dm/dm.ini' from backupset '/backup'

recover database '/dm8/data/DAMENG/dm.ini' from backupset '/backup'

Database mode = 0, oguid = 0

Normal of FAST

Normal of DEFAULT

Normal of RECYCLE

Normal of KEEP

Normal of ROLL

EP[0]'s cur_lsn[84680], file_lsn[84680]

recover successfully!

time used: 306.495(ms)

RMAN> recover database '/home/dmdba/dm/dm.ini' update db_magic

recover database '/dm8/data/DAMENG/dm.ini' update db_magic

Database mode = 0, oguid = 0

Normal of FAST

Normal of DEFAULT

Normal of RECYCLE

Normal of KEEP

Normal of ROLL

EP[0]'s cur_lsn[84680], file_lsn[84680]

recover successfully!

time used: 00:00:01.042

On the DW machine, create the dmarch.ini file:

    vi /home/dmdba/dm/dmarch.ini
    [ARCHIVE_LOCAL]
    ARCH_TYPE = LOCAL
    ARCH_DEST = /arch
    ARCH_FILE_SIZE = 1024
    ARCH_SPACE_LIMIT = 102400
    [ARCHIVE_REALTIME]
    ARCH_TYPE = REALTIME
    ARCH_DEST = DSC0/DSC1

On the DW machine, create the dmmal.ini file:

    vi /home/dmdba/dm/dmmal.ini
    MAL_CHECK_INTERVAL = 30 # MAL link check interval
    MAL_CONN_FAIL_INTERVAL = 10 # time after which a MAL link is considered broken
    [MAL_INST0]
    MAL_INST_NAME = DSC0 # instance name, same as INSTANCE_NAME in dm.ini
    MAL_HOST = 192.168.3.99 # IP address on which the MAL system listens for TCP connections
    MAL_PORT = 5736 # port on which the MAL system listens for TCP connections
    MAL_INST_HOST = 192.168.3.109 # external service IP address of the instance
    MAL_INST_PORT = 5236 # external service port of the instance, same as PORT_NUM in dm.ini
    MAL_DW_PORT = 5836 # port on which the instance's watcher daemon listens for TCP connections
    MAL_INST_DW_PORT = 5936 # port on which the instance listens for TCP connections from the watcher daemon
    [MAL_INST1]
    MAL_INST_NAME = DSC1
    MAL_HOST = 192.168.3.100
    MAL_PORT = 5737
    MAL_INST_HOST = 192.168.3.110
    MAL_INST_PORT = 5236
    MAL_DW_PORT = 5837
    MAL_INST_DW_PORT = 5937
    [MAL_INST2]
    MAL_INST_NAME = DW01
    MAL_HOST = 192.168.3.102
    MAL_PORT = 5738
    MAL_INST_HOST = 192.168.3.106
    MAL_INST_PORT = 5236
    MAL_DW_PORT = 5838
    MAL_INST_DW_PORT = 5938

Create the dmwatcher.ini file

On machine C:

    [dmdba@dmdw01 ~]$ vi /home/dmdba/dm/dmwatcher.ini
    [GDSCDW1]
    DW_TYPE = GLOBAL
    DW_MODE = MANUAL
    DW_ERROR_TIME = 60
    INST_ERROR_TIME = 35
    INST_RECOVER_TIME = 60
    INST_OGUID = 45332
    INST_INI = /home/dmdba/dm/dm.ini
    INST_STARTUP_CMD = /home/dmdba/dm/bin/dmserver
    INST_AUTO_RESTART = 1 # enable automatic start
    RLOG_SEND_THRESHOLD = 0
    RLOG_APPLY_THRESHOLD = 0

Note: in a DMDSC cluster, the automatic restart of each node's instance is performed by that node's local dmcss, not by the watcher daemon, so INST_AUTO_RESTART is set to 0 for the DSC nodes. A single-node instance must set it to 1 so that the watcher starts it.

Register the services

Machine

    ./dm_service_installer.sh -t dmwatcher -watcher_ini /home/dmdba/dm/dmwatcher.ini -p Watcher
    Created symlink from /etc/systemd/system/multi-user.target.wants/DmWatcherServiceWatcher.service to /usr/lib/systemd/system/DmWatcherServiceWatcher.service.
    创建服务(DmWatcherServiceWatcher)完成
    ./dm_service_installer.sh -t dmserver -p DW1_01 -dm_ini /home/dmdba/dm/dm.ini -m mount

Note: to remove the auto-start service, run the following on the DW machine:

    ./dm_service_uninstaller.sh -n DmWatcherServiceWatcher

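Configure the monitor

    [dmdba@dmdsc01 ~]$ vi /home/dmdba/dm/dmmonitor.ini
    MON_DW_CONFIRM = 0 # 0: non-confirming monitor, 1: confirming monitor
    MON_LOG_PATH = ../log # directory for the monitor log files
    MON_LOG_INTERVAL = 60 # write system information to the log every 60 s
    MON_LOG_FILE_SIZE = 512 # size of a single log file
    MON_LOG_SPACE_LIMIT = 2048 # upper limit for total log space
    [GDSCDW1]
    MON_INST_OGUID = 45332 # unique OGUID of group GDSCDW1
    MON_DW_IP = 192.168.3.109:5836/192.168.3.110:5837 # IP corresponds to MAL_HOST, port to MAL_DW_PORT
    MON_DW_IP = 192.168.3.106:5838

Register the DSC+DW monitor service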

./dm_service_installer.sh -t dmmonitor -monitor_ini /home/dmdba/dm/dmmonitor.ini -p Monitor

Created symlink from /etc/systemd/system/multi-user.target.wants/DmMonitorServiceMonitor.service to /usr/lib/systemd/system/DmMonitorServiceMonitor.service.

创建服务(DmMonitorServiceMonitor)完成


Note: to remove the auto-start service:

./dm_service_uninstaller.sh -n DmMonitorServiceMonitor

Configure the DSC monitor

The configuration is the same on the DW machine.

On machine C:

    [dmdba@dmdw01 bin]$ vi /home/dmdba/dm/dmcssm.ini
    CSSM_OGUID = 45331
    CSSM_CSS_IP = 192.168.3.99:5336
    CSSM_CSS_IP = 192.168.3.109:5337
    CSSM_LOG_PATH = ../log
    CSSM_LOG_FILE_SIZE = 512
    CSSM_LOG_SPACE_LIMIT = 2048

Register the DSC monitor service

On the DW machine:

    ./dm_service_installer.sh -t dmcssm -cssm_ini /home/dmdba/dm/dmcssm.ini -p Monitor
    Created symlink from /etc/systemd/system/multi-user.target.wants/DmCSSMonitorServiceMonitor.service to /usr/lib/systemd/system/DmCSSMonitorServiceMonitor.service.
    创建服务(DmCSSMonitorServiceMonitor)完成

Note: to remove the auto-start service:

    ./dm_service_uninstaller.sh -n DmCSSMonitorServiceMonitor

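Start the database and modify the parameters

On the DW machine:

    DmServiceDW1_01 start

Modify the primary and standby parameters (connect to any one node of the DMDSC cluster to set the OGUID of the DMDSC primary database).

On machine A, set the OGUID and switch to primary mode: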

disql SYSDBA/SYSDBA

服务器[LOCALHOST:5236]:处于普通配置状态

登录使用时间 : 2.473(ms)

disql V8

SQL> SP_SET_PARA_VALUE(1, 'ALTER_MODE_STATUS', 1);

DMSQL 过程已成功完成

已用时间: 11.551(毫秒). 执行号:0.

SQL> sp_set_oguid(45332);

DMSQL 过程已成功完成

已用时间: 16.767(毫秒). 执行号:1.

SQL> alter database primary;

操作已执行

已用时间: 9.642(毫秒). 执行号:0.

SQL> SP_SET_PARA_VALUE(1, 'ALTER_MODE_STATUS', 0);

DMSQL 过程已成功完成

已用时间: 8.306(毫秒). 执行号:2.

SQL>

On machine C, set the OGUID and switch to standby mode:

disql SYSDBA/SYSDBA

服务器[LOCALHOST:5236]:处于普通配置状态

登录使用时间 : 2.473(ms)

disql V8

SQL> SP_SET_PARA_VALUE(1, 'ALTER_MODE_STATUS', 1);

DMSQL 过程已成功完成

已用时间: 11.551(毫秒). 执行号:0.

SQL> sp_set_oguid(45332);

DMSQL 过程已成功完成

已用时间: 16.767(毫秒). 执行号:1.

SQL> alter database standby;

操作已执行

已用时间: 9.642(毫秒). 执行号:0.

SQL> SP_SET_PARA_VALUE(1, 'ALTER_MODE_STATUS', 0);

DMSQL 过程已成功完成

已用时间: 8.306(毫秒). 执行号:2.

SQL>

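Start the watcher daemon

    DmWatcherServiceWatcher start
    Starting DmWatcherServiceWatcher: [ OK ]

Start the DW monitor

On the DW machine:

    dmmonitor dmmonitor.ini

Starting and stopping the cluster

Start order: CSS on machines A/B → watcher daemons on machines A/B/C → (DSC+DW) monitors on machines A/B/C

    DmWatcherServiceWatcher start
    DmMonitorServiceMonitor start
    DmCSSMonitorServiceMonitor start

Note: CSS automatically brings up ASM 30 seconds after it starts, and DMSERVER after 60 seconds.

Stop order: (DSC+DW) monitors on machines A/B/C → DMSERVER on machines A/B → ASM on machines A/B → CSS on machines A/B → watcher daemons on machines A/B/C → DMSERVER on machine C

On the DW machine:

    DmCSSMonitorServiceMonitor stop
    DmMonitorServiceMonitor stop
    DmWatcherServiceWatcher stop
    DmServiceDW1_01 stop

View the DW monitor information:

    dmmonitor dmmonitor.ini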

[monitor] 2022-08-09 11:00:15: DMMONITOR[4.0] V8

[monitor] 2022-08-09 11:00:15: DMMONITOR[4.0] IS READY.

[monitor] 2022-08-09 11:00:15: 收到守护进程(DW1_01)消息

pg WTIME WSTATUS INST_OK INAME ISTATUS IMODE RSTAT N_OPEN FLSN CLSN

2022-08-09 11:00:15 OPEN OK DW1_01 OPEN STANDBY NULL 24 143259 143259

[monitor] 2022-08-09 11:00:15: 收到守护进程(DSC1)消息

WTIME WSTATUS INST_OK INAME ISTATUS IMODE RSTAT N_OPEN FLSN CLSN

2022-08-09 11:00:14 STARTUP OK DSC0 OPEN PRIMARY VALID 24 143259 143259

[monitor] 2022-08-09 11:00:16: 收到守护进程(DSC0)消息

WTIME WSTATUS INST_OK INAME ISTATUS IMODE RSTAT N_OPEN FLSN CLSN

2022-08-09 11:00:16 OPEN OK DSC0 OPEN PRIMARY VALID 24 143259 143260

show

Create dm_svc.conf

vi /etc/dm_svc.conf

TIME_ZONE=(+8:00)

LANGUAGE=(cn)

DSC1=(192.168.3.109:5236,192.168.3.110:5236,192.168.3.106:5236)

[DSC1]

LOGIN_ENCRYPT=(0)

SWITCH_TIME=(20)

SWITCH_INTERVAL=(1000)

Automatic failover

disql SYSDBA/SYSDBA@DSC1

服务器[192.168.3.99:5236]:处于普通打开状态

登录使用时间 : 5.344(ms)

disql V8

SQL>

SQL> select instance_name from v$instance;

行号 INSTANCE_NAME

---------- -------------

1 DSC1

已用时间: 3.172(毫秒). 执行号:100.

--Keep the session above open, and shut down the instance on 101

disql SYSDBA/SYSDBA

服务器[LOCALHOST:5236]:处于普通打开状态

登录使用时间 : 2.765(ms)

disql V8

SQL> stop instance;

服务器[LOCALHOST:5236]:处于普通打开状态

已连接

操作已执行

已用时间: 1.097(毫秒). 执行号:0.

--Check the monitor status

--Continue querying in the session opened above

SQL> select instance_name from v$instance;

服务器[192.168.3.100:5236]:处于普通打开状态

已连接

行号 INSTANCE_NAME

---------- -------------

1 DSC0

已用时间: 2.718(毫秒). 执行号:0.

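The failover is complete, and the session is now connected to instance DSC0.

--Check the monitor: CSS has already restarted the instance that was stopped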

[CSS1] [2022-08-09 14:12:28:857] [CSS]: 重启本地DB实例,命令:[/home/dmdba/dm/dmdbms/bin/dmserver path=/home/dmdba/dm/config/dsc1_config/dm.ini dcr_ini=/dm8/dsc/config/dmdcr.ini]

[CSS1] [2022-08-09 14:12:38:888] [DB]: 设置EP DSC1[1]为故障重加入EP

[CSS1] [2022-08-09 14:12:38:890] [DB]: 设置命令[START NOTIFY], 目标站点 DSC1[1], 命令序号[523]

[CSS1] [2022-08-09 14:12:39:911] [DB]: 设置命令[SUSPEND EP WORKER THREAD], 目标站点 DSC0[0], 命令序号[524]

[CSS1] [2022-08-09 14:12:40:215] [DB]: 暂停工作线程结束

[CSS1] [2022-08-09 14:12:40:235] [DB]: 设置命令[DCR_LOAD], 目标站点 DSC0[0], 命令序号[525]

[CSS1] [2022-08-09 14:12:40:260] [DB]: 设置命令[DCR_LOAD], 目标站点 DSC1[1], 命令序号[526]

[CSS1] [2022-08-09 14:12:41:194] [DB]: 故障EP重新加入DSC结束

[CSS1] [2022-08-09 14:12:41:195] [DB]: 设置命令[ERROR EP ADD], 目标站点 DSC0[0], 命令序号[528]

[CSS1] [2022-08-09 14:12:41:220] [DB]: 设置命令[ERROR EP ADD], 目标站点 DSC1[1], 命令序号[529]

[CSS1] [2022-08-09 14:12:41:348] [DB]: 故障EP重新加入DSC结束

[CSS1] [2022-08-09 14:12:41:348] [DB]: 设置命令[EP RECV], 目标站点 DSC0[0], 命令序号[531]

[CSS1] [2022-08-09 14:12:41:475] [DB]: 故障EP恢复结束

[CSS1] [2022-08-09 14:12:41:478] [DB]: 设置命令[EP START], 目标站点 DSC1[1], 命令序号[533]

[CSS1] [2022-08-09 14:12:41:706] [DB]: 设置命令[EP START2], 目标站点 DSC1[1], 命令序号[535]

[CSS1] [2022-08-09 14:12:43:675] [DB]: 设置命令[EP OPEN], 目标站点 DSC1[1], 命令序号[537]

[CSS1] [2022-08-09 14:12:43:778] [DB]: 设置命令[NONE], 目标站点 DSC1[1], 命令序号[0]

[CSS1] [2022-08-09 14:12:43:778] [DB]: 设置命令[RESUME EP WORKER THREAD], 目标站点 DSC0[0], 命令序号[539]

[CSS1] [2022-08-09 14:12:43:907] [DB]: 继续工作线程结束

[CSS1] [2022-08-09 14:12:43:907] [DB]: 设置命令[NONE], 目标站点 DSC0[0], 命令序号[0]

[CSS1] [2022-08-09 14:12:44:932] [DB]: 设置命令[EP REAL OPEN], 目标站点 DSC1[1], 命令序号[541]

[CSS1] [2022-08-09 14:12:45:971] [DB]: 设置命令[NONE], 目标站点 DSC1[1], 命令序号[0]

show

monitor current time:2022-08-09 14:19:59, n_group:3

=================== group[name = GRP_CSS, seq = 0, type = CSS, Control Node = 1] ========================================

[CSS0] auto check = TRUE, global info:

[ASM0] auto restart = TRUE

[DSC0] auto restart = TRUE

[CSS1] auto check = TRUE, global info:

[ASM1] auto restart = TRUE

[DSC1] auto restart = TRUE

ep: css_time inst_name seqno port mode inst_status vtd_status is_ok active guid ts

2022-08-09 14:19:59 CSS0 0 5336 Normal Node OPEN WORKING OK TRUE 6470 11659

2022-08-09 14:19:59 CSS1 1 5337 Control Node OPEN WORKING OK TRUE 2184748 2193315

=================== group[name = GRP_ASM, seq = 1, type = ASM, Control Node = 1] ========================================

n_ok_ep = 2

ok_ep_arr(index, seqno):

(0, 0)

(1, 1)

sta = OPEN, sub_sta = STARTUP

break ep = NULL

recover ep = NULL

crash process over flag is TRUE

ep: css_time inst_name seqno port mode inst_status vtd_status is_ok active guid ts

2022-08-09 14:19:59 ASM0 0 5436 Normal Node OPEN WORKING OK TRUE 11817 16987

2022-08-09 14:19:59 ASM1 1 5437 Control Node OPEN WORKING OK TRUE 2198072 2206596

=================== group[name = GRP_DSC, seq = 2, type = DB, Control Node = 0] ========================================

n_ok_ep = 2

ok_ep_arr(index, seqno):

(0, 0)

(1, 1)

sta = OPEN, sub_sta = STARTUP

break ep = NULL

recover ep = NULL

crash process over flag is TRUE

ep: css_time inst_name seqno port mode inst_status vtd_status is_ok active guid ts

2022-08-09 14:19:59 DSC0 0 5236 Control Node OPEN WORKING OK TRUE 421057 424896

2022-08-09 14:19:59 DSC1 1 5236 Normal Node OPEN WORKING OK TRUE 4644622 4645064

Community: https://eco.dameng.com