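Two hosts run a pcs + Oracle HA cluster that fails over normally: when either machine is rebooted, the pcs resources switch over to the other node as expected.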

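However, suppose both hosts are shut down at the same time and only one of them is booted again. The other stays down, simulating a node that cannot be repaired and restarted. In that case every resource on the node that comes up is shown as Stopped.

The lone node has no quorum: `pcs status` reports `partition WITHOUT quorum`. Pacemaker blocks all resource starts until quorum is attained, as the `due to no quorum (blocked)` entries in the system log at the end of this post show.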

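At this point the only option is to force-start the resources by hand. First list the resources:

`pcs resource`

Then start them one at a time, following the dependency order shown in that output:

`pcs resource debug-start xxx`

`debug-start` runs the resource agent's start action directly on the local node and ignores the cluster's decisions, so getting the order right is up to you.

In the session below, starting orcl first fails with `sqlplus: required binary not installed`. The Oracle home (`/u01/db/oracle/product/11.2.0/dbhome_1`) lives on the `/u01` filesystem, which is not mounted until vg01 and u01 have been started.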
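The manual procedure can be scripted. The sketch below is a hedged example and not part of the original setup. It makes two assumptions:

- the ordering constraints appear in `pcs config` as `start A then start B ...` lines, as in the session below;
- `pcs resource debug-start` exits non-zero when the agent's start action fails.

`start_order` and `force_start_in_order` are hypothetical helper names. `tsort` (GNU coreutils) does the topological sort.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of pcs): derive a start order from the
# "Ordering Constraints" section of `pcs config`, then force-start each
# resource with `pcs resource debug-start`.

# Reads `pcs config` text on stdin and prints resource IDs in start order.
# Assumes constraints look like "start A then start B (kind:...) (id:...)".
start_order() {
  sed -n 's/^[[:space:]]*start \([^[:space:]]\{1,\}\) then start \([^[:space:]]\{1,\}\).*/\1 \2/p' \
    | tsort
}

# Runs debug-start for each argument in turn and stops at the first failure,
# so no resource is started before its prerequisites are up.
# Assumes `pcs resource debug-start` exits non-zero when the start fails.
force_start_in_order() {
  local r
  for r in "$@"; do
    echo "== pcs resource debug-start $r"
    if ! pcs resource debug-start "$r"; then
      echo "start of $r failed; not starting the remaining resources" >&2
      return 1
    fi
  done
}
```

A possible invocation is `force_start_in_order $(pcs config | start_order)`. Group members without an explicit ordering constraint would not be picked up. In this cluster every member of the `oracle` group has one.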

The recorded session of the simulation follows:

```
*****[2022-08-11 10:49:48]*****
Last login: Thu Aug 11 10:31:59 2022 from 192.168.52.1
[root@pcs01 ~]# pcs status
Cluster name: cluster01
Stack: corosync
Current DC: NONE
Last updated: Thu Aug 11 10:49:51 2022
Last change: Thu Aug 11 10:48:32 2022 by root via cibadmin on pcs01

2 nodes configured
6 resource instances configured

Node pcs01: UNCLEAN (offline)
Node pcs02: UNCLEAN (offline)

Full list of resources:

 Resource Group: oracle
     virtualip (ocf::heartbeat:IPaddr2): Stopped
     vg01 (ocf::heartbeat:LVM): Stopped
     u01 (ocf::heartbeat:Filesystem): Stopped
     listener (ocf::heartbeat:oralsnr): Stopped
     orcl (ocf::heartbeat:oracle): Stopped
 sbd_fencing (stonith:fence_sbd): Stopped

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
  sbd: active/enabled
[root@pcs01 ~]# date
Thu Aug 11 10:50:05 CST 2022
[root@pcs01 ~]# date
Thu Aug 11 10:52:02 CST 2022
[root@pcs01 ~]# pcs status
Cluster name: cluster01
Stack: corosync
Current DC: pcs01 (version 1.1.23-1.0.1.el7_9.1-9acf116022) - partition WITHOUT quorum
Last updated: Thu Aug 11 10:52:07 2022
Last change: Thu Aug 11 10:48:32 2022 by root via cibadmin on pcs01

2 nodes configured
6 resource instances configured

Node pcs02: UNCLEAN (offline)
Online: [ pcs01 ]

Full list of resources:

 Resource Group: oracle
     virtualip (ocf::heartbeat:IPaddr2): Stopped
     vg01 (ocf::heartbeat:LVM): Stopped
     u01 (ocf::heartbeat:Filesystem): Stopped
     listener (ocf::heartbeat:oralsnr): Stopped
     orcl (ocf::heartbeat:oracle): Stopped
 sbd_fencing (stonith:fence_sbd): Stopped

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
  sbd: active/enabled
[root@pcs01 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 3.8G 0 3.8G 0% /dev
tmpfs 3.8G 65M 3.7G 2% /dev/shm
tmpfs 3.8G 8.7M 3.8G 1% /run
tmpfs 3.8G 0 3.8G 0% /sys/fs/cgroup
/dev/mapper/ol-root 26G 7.1G 19G 28% /
/dev/sda1 1014M 184M 831M 19% /boot
tmpfs 768M 0 768M 0% /run/user/0
[root@pcs01 ~]# pcs resource
 Resource Group: oracle
     virtualip (ocf::heartbeat:IPaddr2): Stopped
     vg01 (ocf::heartbeat:LVM): Stopped
     u01 (ocf::heartbeat:Filesystem): Stopped
     listener (ocf::heartbeat:oralsnr): Stopped
     orcl (ocf::heartbeat:oracle): Stopped
[root@pcs01 ~]# pcs config
Cluster Name: cluster01
Corosync Nodes:
 pcs01 pcs02
Pacemaker Nodes:
 pcs01 pcs02

Resources:
 Group: oracle
  Resource: virtualip (class=ocf provider=heartbeat type=IPaddr2)
   Attributes: cidr_netmask=24 ip=192.168.52.190 nic=ens32
   Operations: monitor interval=10s (virtualip-monitor-interval-10s)
               start interval=0s timeout=20s (virtualip-start-interval-0s)
               stop interval=0s timeout=20s (virtualip-stop-interval-0s)
  Resource: vg01 (class=ocf provider=heartbeat type=LVM)
   Attributes: exclusive=true volgrpname=vg01
   Operations: methods interval=0s timeout=5s (vg01-methods-interval-0s)
               monitor interval=10s timeout=30s (vg01-monitor-interval-10s)
               start interval=0s timeout=30s (vg01-start-interval-0s)
               stop interval=0s timeout=30s (vg01-stop-interval-0s)
  Resource: u01 (class=ocf provider=heartbeat type=Filesystem)
   Attributes: device=/dev/vg01/lvol01 directory=/u01 fstype=xfs
   Operations: monitor interval=20s timeout=40s (u01-monitor-interval-20s)
               notify interval=0s timeout=60s (u01-notify-interval-0s)
               start interval=0s timeout=60s (u01-start-interval-0s)
               stop interval=0s timeout=60s (u01-stop-interval-0s)
  Resource: listener (class=ocf provider=heartbeat type=oralsnr)
   Attributes: listener=listener sid=orcl
   Operations: methods interval=0s timeout=5s (listener-methods-interval-0s)
               monitor interval=10s timeout=30s (listener-monitor-interval-10s)
               start interval=0s timeout=120s (listener-start-interval-0s)
               stop interval=0s timeout=120s (listener-stop-interval-0s)
  Resource: orcl (class=ocf provider=heartbeat type=oracle)
   Attributes: sid=orcl
   Operations: methods interval=0s timeout=5s (orcl-methods-interval-0s)
               monitor interval=120s timeout=30s (orcl-monitor-interval-120s)
               start interval=0s timeout=120s (orcl-start-interval-0s)
               stop interval=0s timeout=120s (orcl-stop-interval-0s)

Stonith Devices:
 Resource: sbd_fencing (class=stonith type=fence_sbd)
  Attributes: devices=/dev/sdc
  Operations: monitor interval=60s (sbd_fencing-monitor-interval-60s)
Fencing Levels:

Location Constraints:
Ordering Constraints:
  start virtualip then start vg01 (kind:Mandatory) (id:order-virtualip-vg01-mandatory)
  start vg01 then start u01 (kind:Mandatory) (id:order-vg01-u01-mandatory)
  start u01 then start listener (kind:Mandatory) (id:order-u01-listener-mandatory)
  start listener then start orcl (kind:Mandatory) (id:order-listener-orcl-mandatory)
Colocation Constraints:
  vg01 with virtualip (score:INFINITY) (id:colocation-vg01-virtualip-INFINITY)
  u01 with vg01 (score:INFINITY) (id:colocation-u01-vg01-INFINITY)
  listener with u01 (score:INFINITY) (id:colocation-listener-u01-INFINITY)
  orcl with listener (score:INFINITY) (id:colocation-orcl-listener-INFINITY)
Ticket Constraints:

Alerts:
 No alerts defined

Resources Defaults:
 No defaults set
Operations Defaults:
 No defaults set

Cluster Properties:
 cluster-infrastructure: corosync
 cluster-name: cluster01
 dc-version: 1.1.23-1.0.1.el7_9.1-9acf116022
 have-watchdog: true
 last-lrm-refresh: 1660024694
 stonith-enabled: true

Quorum:
  Options:
[root@pcs01 ~]# pcs resource show
 Resource Group: oracle
     virtualip (ocf::heartbeat:IPaddr2): Stopped
     vg01 (ocf::heartbeat:LVM): Stopped
     u01 (ocf::heartbeat:Filesystem): Stopped
     listener (ocf::heartbeat:oralsnr): Stopped
     orcl (ocf::heartbeat:oracle): Stopped
[root@pcs01 ~]# pcs resource debug-start orcl
Operation start for orcl (ocf:heartbeat:oracle) returned: 'not installed' (5)
 >  stderr: ocf-exit-reason:sqlplus: required binary not installed
[root@pcs01 ~]# pcs resource debug-start virtualip
Operation start for virtualip (ocf:heartbeat:IPaddr2) returned: 'ok' (0)
 >  stderr: Aug 11 10:53:08 INFO: Adding inet address 192.168.52.190/24 with broadcast address 192.168.52.255 to device ens32
 >  stderr: Aug 11 10:53:08 INFO: Bringing device ens32 up
 >  stderr: Aug 11 10:53:08 INFO: /usr/libexec/heartbeat/send_arp -i 200 -r 5 -p /var/run/resource-agents/send_arp-192.168.52.190 ens32 192.168.52.190 auto not_used not_used
[root@pcs01 ~]# ip a|grep 190
    inet 192.168.52.190/24 brd 192.168.52.255 scope global secondary ens32
[root@pcs01 ~]# pcs resource debug-start vg01
Operation start for vg01 (ocf:heartbeat:LVM) returned: 'ok' (0)
 >  stdout: volume_list=[]
 >  stdout: volume_list=[]
 >  stderr: Aug 11 10:53:21 WARNING: Disable lvmetad in lvm.conf. lvmetad should never be enabled in a clustered environment. Set use_lvmetad=0 and kill the lvmetad process
 >  stderr: Aug 11 10:53:21 INFO: Activating volume group vg01
 >  stderr: Aug 11 10:53:21 INFO: Reading volume groups from cache. Found volume group "ol" using metadata type lvm2 Found volume group "vg01" using metadata type lvm2
 >  stderr: Aug 11 10:53:21 INFO: 1 logical volume(s) in volume group "vg01" now active
[root@pcs01 ~]# pcs resource debug-start u01
Operation start for u01 (ocf:heartbeat:Filesystem) returned: 'ok' (0)
 >  stderr: Aug 11 10:53:26 INFO: Running start for /dev/vg01/lvol01 on /u01
[root@pcs01 ~]# pcs resource debug-start listener
Operation start for listener (ocf:heartbeat:oralsnr) returned: 'ok' (0)
 >  stderr: Aug 11 10:53:33 INFO: Listener listener running:
 >  stderr: LSNRCTL for Linux: Version 11.2.0.4.0 - Production on 11-AUG-2022 10:53:31
 >  stderr:
 >  stderr: Copyright (c) 1991, 2013, Oracle.  All rights reserved.
 >  stderr:
 >  stderr: Starting /u01/db/oracle/product/11.2.0/dbhome_1/bin/tnslsnr: please wait...
 >  stderr:
 >  stderr: TNSLSNR for Linux: Version 11.2.0.4.0 - Production
 >  stderr: System parameter file is /u01/db/oracle/product/11.2.0/dbhome_1/network/admin/listener.ora
 >  stderr: Log messages written to /u01/db/oracle/diag/tnslsnr/pcs01/listener/alert/log.xml
 >  stderr: Listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC1521)))
 >  stderr: Listening on: (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.52.190)(PORT=1521)))
 >  stderr:
 >  stderr: Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=EXTPROC1521)))
 >  stderr: STATUS of the LISTENER
 >  stderr: ------------------------
 >  stderr: Alias                     listener
 >  stderr: Version                   TNSLSNR for Linux: Version 11.2.0.4.0 - Production
 >  stderr: Start Date                11-AUG-2022 10:53:33
 >  stderr: Uptime                    0 days 0 hr. 0 min. 0 sec
 >  stderr: Trace Level               off
 >  stderr: Security                  ON: Local OS Authentication
 >  stderr: SNMP                      OFF
 >  stderr: Listener Parameter File   /u01/db/oracle/product/11.2.0/dbhome_1/network/admin/listener.ora
 >  stderr: Listener Log File         /u01/db/oracle/diag/tnslsnr/pcs01/listener/alert/log.xml
 >  stderr: Listening Endpoints Summary...
 >  stderr:   (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=EXTPROC1521)))
 >  stderr:   (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.52.190)(PORT=1521)))
 >  stderr: Services Summary...
 >  stderr: Service "orcl" has 1 instance(s).
 >  stderr:   Instance "orcl", status UNKNOWN, has 1 handler(s) for this service...
 >  stderr: The command completed successfully
 >  stderr: Last login: Thu Aug 11 10:45:54 CST 2022 on pts/0
[root@pcs01 ~]# pcs resource debug-start orcl
Operation start for orcl (ocf:heartbeat:oracle) returned: 'ok' (0)
 >  stderr: Aug 11 10:53:46 INFO: Oracle instance orcl started:
[root@pcs01 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 3.8G 0 3.8G 0% /dev
tmpfs 3.8G 65M 3.7G 2% /dev/shm
tmpfs 3.8G 8.7M 3.8G 1% /run
tmpfs 3.8G 0 3.8G 0% /sys/fs/cgroup
/dev/mapper/ol-root 26G 7.1G 19G 28% /
/dev/sda1 1014M 184M 831M 19% /boot
tmpfs 768M 0 768M 0% /run/user/0
/dev/mapper/vg01-lvol01 10G 6.2G 3.9G 62% /u01
[root@pcs01 ~]# su - oracle
Last login: Thu Aug 11 10:53:46 CST 2022
[oracle@pcs01 ~]$ sqlplus system/oracle@orcl

SQL*Plus: Release 11.2.0.4.0 Production on Thu Aug 11 10:53:58 2022

Copyright (c) 1982, 2013, Oracle.  All rights reserved.

Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> show parameter name;

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
cell_offloadgroup_name               string
db_file_name_convert                 string
db_name                              string      orcl
db_unique_name                       string      orcl
global_names                         boolean     FALSE
instance_name                        string      orcl
lock_name_space                      string
log_file_name_convert                string
processor_group_name                 string
service_names                        string      orcl
SQL> exit
Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
With the Partitioning, OLAP, Data Mining and Real Application Testing options
```

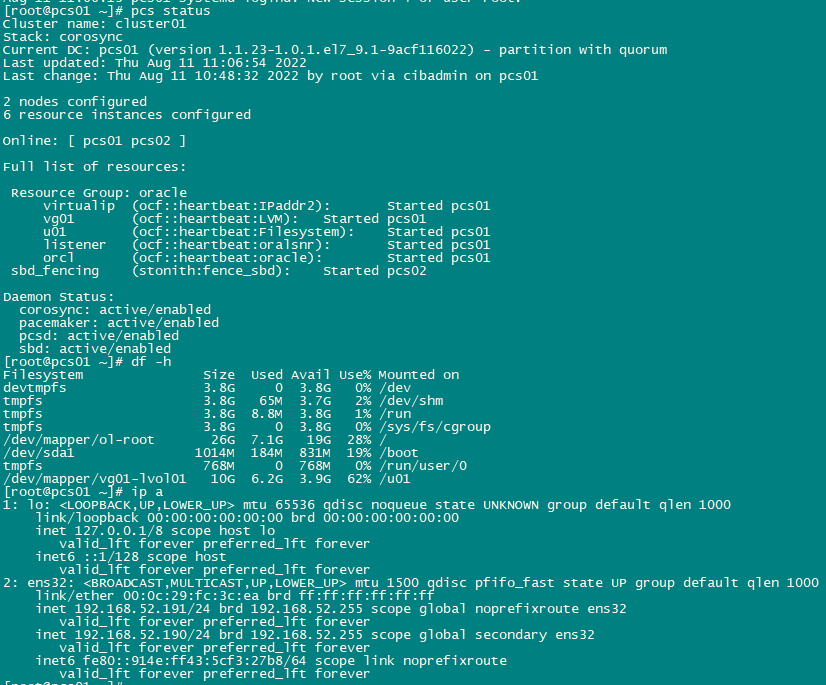
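Before clients are pointed at the database, it is worth confirming that the pieces started by hand are really up. The session above does this with `ip a|grep 190` and `df -h`.

A minimal, stricter sketch follows. It assumes the `ip` (iproute2) and `mountpoint` (util-linux) commands are available. `check_stack` is a hypothetical helper, and the VIP and mount point are copied from this cluster's `pcs config`.

```shell
#!/usr/bin/env bash
# Hypothetical post-start check for the manually started resources.
# Values come from this cluster's pcs config (virtualip and u01 resources).
VIP="192.168.52.190"
MOUNT_DIR="/u01"

check_stack() {
  local rc=0
  # -F: match the dots literally; -w: do not match e.g. 192.168.52.1900
  if ip -o addr show | grep -qwF "$VIP"; then
    echo "VIP $VIP: present"
  else
    echo "VIP $VIP: MISSING"
    rc=1
  fi
  # mountpoint -q exits 0 only if the directory is a mount point
  if mountpoint -q "$MOUNT_DIR"; then
    echo "$MOUNT_DIR: mounted"
  else
    echo "$MOUNT_DIR: NOT mounted"
    rc=1
  fi
  return "$rc"
}
```

`check_stack` returns non-zero if either check fails, so it can gate the next manual step, for example `check_stack && su - oracle`.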

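Next, node 2 is brought back up to simulate its recovery. The first `pcs status` right after it rejoins still shows every resource as Stopped. A few seconds later, all resources are reported as Started again: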

```
[root@pcs01 ~]# pcs status
Cluster name: cluster01
Stack: corosync
Current DC: pcs01 (version 1.1.23-1.0.1.el7_9.1-9acf116022) - partition with quorum
Last updated: Thu Aug 11 10:59:46 2022
Last change: Thu Aug 11 10:48:32 2022 by root via cibadmin on pcs01

2 nodes configured
6 resource instances configured

Online: [ pcs01 pcs02 ]

Full list of resources:

 Resource Group: oracle
     virtualip (ocf::heartbeat:IPaddr2): Stopped
     vg01 (ocf::heartbeat:LVM): Stopped
     u01 (ocf::heartbeat:Filesystem): Stopped
     listener (ocf::heartbeat:oralsnr): Stopped
     orcl (ocf::heartbeat:oracle): Stopped
 sbd_fencing (stonith:fence_sbd): Stopped

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
  sbd: active/enabled
[root@pcs01 ~]# pcs status
Cluster name: cluster01
Stack: corosync
Current DC: pcs01 (version 1.1.23-1.0.1.el7_9.1-9acf116022) - partition with quorum
Last updated: Thu Aug 11 10:59:52 2022
Last change: Thu Aug 11 10:48:32 2022 by root via cibadmin on pcs01

2 nodes configured
6 resource instances configured

Online: [ pcs01 pcs02 ]

Full list of resources:

 Resource Group: oracle
     virtualip (ocf::heartbeat:IPaddr2): Started pcs01
     vg01 (ocf::heartbeat:LVM): Started pcs01
     u01 (ocf::heartbeat:Filesystem): Started pcs01
     listener (ocf::heartbeat:oralsnr): Started pcs01
     orcl (ocf::heartbeat:oracle): Started pcs01
 sbd_fencing (stonith:fence_sbd): Started pcs02

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
  sbd: active/enabled
[root@pcs01 ~]# pcs status
Cluster name: cluster01
Stack: corosync
Current DC: pcs01 (version 1.1.23-1.0.1.el7_9.1-9acf116022) - partition with quorum
Last updated: Thu Aug 11 10:59:54 2022
Last change: Thu Aug 11 10:48:32 2022 by root via cibadmin on pcs01

2 nodes configured
6 resource instances configured

Online: [ pcs01 pcs02 ]

Full list of resources:

 Resource Group: oracle
     virtualip (ocf::heartbeat:IPaddr2): Started pcs01
     vg01 (ocf::heartbeat:LVM): Started pcs01
     u01 (ocf::heartbeat:Filesystem): Started pcs01
     listener (ocf::heartbeat:oralsnr): Started pcs01
     orcl (ocf::heartbeat:oracle): Started pcs01
 sbd_fencing (stonith:fence_sbd): Started pcs02

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
  sbd: active/enabled
[root@pcs01 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 3.8G 0 3.8G 0% /dev
tmpfs 3.8G 65M 3.7G 2% /dev/shm
tmpfs 3.8G 8.7M 3.8G 1% /run
tmpfs 3.8G 0 3.8G 0% /sys/fs/cgroup
/dev/mapper/ol-root 26G 7.1G 19G 28% /
/dev/sda1 1014M 184M 831M 19% /boot
tmpfs 768M 0 768M 0% /run/user/0
/dev/mapper/vg01-lvol01 10G 6.2G 3.9G 62% /u01
[root@pcs01 ~]# su - oracle
Last login: Thu Aug 11 10:59:48 CST 2022
[oracle@pcs01 ~]$ sqlplus system/oracle@orcl

SQL*Plus: Release 11.2.0.4.0 Production on Thu Aug 11 11:00:03 2022

Copyright (c) 1982, 2013, Oracle.  All rights reserved.

Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> exit
```
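The recovery above was observed by re-running `pcs status` by hand. A small filter can report the same two facts so they could be polled from a script:

- whether the partition has quorum;
- which resources are not yet Started.

This sketch assumes the status layout shown in this post (pcs 0.9 with Pacemaker 1.1). `summarize_status` is a hypothetical name, and other versions may format the output differently.

```shell
#!/usr/bin/env bash
# Hypothetical filter: reads `pcs status` text on stdin and prints every
# resource that is not Started, followed by the partition's quorum state.
summarize_status() {
  awk '
    BEGIN { q = "unknown" }
    # "Current DC: ... - partition WITHOUT quorum" or "... - partition with quorum"
    /^Current DC:/ {
      if ($0 ~ /WITHOUT quorum/) q = "no"
      else if ($0 ~ /with quorum/) q = "yes"
    }
    # Resource lines look like "virtualip (ocf::heartbeat:IPaddr2): Stopped"
    /[ \t]\([a-z]+:[^)]*\):/ && !/Started/ { print "not started: " $1 }
    END { print "quorum: " q }
  '
}
```

For example, `until pcs status | summarize_status | grep -qx 'quorum: yes'; do sleep 5; done` would wait for quorum to return before any further action is taken.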

The system log from the manual resource start and from the later return of node 2 is shown below:

```
[root@pcs01 ~]# tail -200 /var/log/messages
Aug 11 10:49:43 pcs01 systemd: Started System Logging Service.
Aug 11 10:49:43 pcs01 rhnsd: Starting Spacewalk Daemon: [ OK ]
Aug 11 10:49:43 pcs01 rhnsd[1169]: Spacewalk Services Daemon starting up, check in interval 240 minutes.
Aug 11 10:49:43 pcs01 rsyslogd: imjournal: fscanf on state file `/var/lib/rsyslog/imjournal.state' failed [v8.24.0-55.el7 try http://www.rsyslog.com/e/2027 ]
Aug 11 10:49:43 pcs01 rsyslogd: imjournal: ignoring invalid state file [v8.24.0-55.el7]
Aug 11 10:49:43 pcs01 systemd: Started LSB: Starts the Spacewalk Daemon.
Aug 11 10:49:43 pcs01 corosync[1184]: [MAIN ] Corosync Cluster Engine ('2.4.5'): started and ready to provide service.
Aug 11 10:49:43 pcs01 corosync[1184]: [MAIN ] Corosync built-in features: dbus systemd xmlconf qdevices qnetd snmp libcgroup pie relro bindnow
Aug 11 10:49:43 pcs01 corosync[1216]: [TOTEM ] Initializing transport (UDP/IP Unicast).
Aug 11 10:49:43 pcs01 corosync[1216]: [TOTEM ] Initializing transmit/receive security (NSS) crypto: none hash: none
Aug 11 10:49:43 pcs01 corosync[1216]: [TOTEM ] The network interface [192.168.52.191] is now up.
Aug 11 10:49:43 pcs01 corosync[1216]: [SERV ] Service engine loaded: corosync configuration map access [0]
Aug 11 10:49:43 pcs01 corosync[1216]: [QB ] server name: cmap
Aug 11 10:49:43 pcs01 corosync[1216]: [SERV ] Service engine loaded: corosync configuration service [1]
Aug 11 10:49:43 pcs01 corosync[1216]: [QB ] server name: cfg
Aug 11 10:49:43 pcs01 corosync[1216]: [SERV ] Service engine loaded: corosync cluster closed process group service v1.01 [2]
Aug 11 10:49:43 pcs01 corosync[1216]: [QB ] server name: cpg
Aug 11 10:49:43 pcs01 corosync[1216]: [SERV ] Service engine loaded: corosync profile loading service [4]
Aug 11 10:49:43 pcs01 corosync[1216]: [QUORUM] Using quorum provider corosync_votequorum
Aug 11 10:49:43 pcs01 corosync[1216]: [VOTEQ ] Waiting for all cluster members. Current votes: 1 expected_votes: 2
Aug 11 10:49:43 pcs01 corosync[1216]: [SERV ] Service engine loaded: corosync vote quorum service v1.0 [5]
Aug 11 10:49:43 pcs01 corosync[1216]: [QB ] server name: votequorum
Aug 11 10:49:43 pcs01 corosync[1216]: [SERV ] Service engine loaded: corosync cluster quorum service v0.1 [3]
Aug 11 10:49:43 pcs01 corosync[1216]: [QB ] server name: quorum
Aug 11 10:49:43 pcs01 corosync[1216]: [TOTEM ] adding new UDPU member {192.168.52.191}
Aug 11 10:49:43 pcs01 corosync[1216]: [TOTEM ] adding new UDPU member {192.168.52.192}
Aug 11 10:49:43 pcs01 corosync[1216]: [TOTEM ] A new membership (192.168.52.191:261) was formed. Members joined: 1
Aug 11 10:49:43 pcs01 corosync[1216]: [VOTEQ ] Waiting for all cluster members. Current votes: 1 expected_votes: 2
Aug 11 10:49:43 pcs01 corosync[1216]: [CPG ] downlist left_list: 0 received
Aug 11 10:49:43 pcs01 corosync[1216]: [VOTEQ ] Waiting for all cluster members. Current votes: 1 expected_votes: 2
Aug 11 10:49:43 pcs01 corosync[1216]: [VOTEQ ] Waiting for all cluster members. Current votes: 1 expected_votes: 2
Aug 11 10:49:43 pcs01 corosync[1216]: [QUORUM] Members[1]: 1
Aug 11 10:49:43 pcs01 corosync[1216]: [MAIN ] Completed service synchronization, ready to provide service.
Aug 11 10:49:44 pcs01 systemd: Started Postfix Mail Transport Agent.
Aug 11 10:49:44 pcs01 systemd: Started Dynamic System Tuning Daemon.
Aug 11 10:49:44 pcs01 corosync: Starting Corosync Cluster Engine (corosync): [ OK ]
Aug 11 10:49:44 pcs01 systemd: Started Corosync Cluster Engine.
Aug 11 10:49:44 pcs01 sbd[895]: notice: watchdog_init: Using watchdog device '/dev/watchdog'
Aug 11 10:49:44 pcs01 sbd[905]: cluster: notice: sbd_get_two_node: Corosync is in 2Node-mode
Aug 11 10:49:44 pcs01 systemd: Started Shared-storage based fencing daemon.
Aug 11 10:49:44 pcs01 systemd: Started Pacemaker High Availability Cluster Manager.
Aug 11 10:49:44 pcs01 pacemakerd[1551]: notice: Additional logging available in /var/log/pacemaker.log
Aug 11 10:49:44 pcs01 sbd[895]: notice: inquisitor_child: Servant cluster is healthy (age: 0)
Aug 11 10:49:44 pcs01 pacemakerd[1551]: notice: Switching to /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 pacemakerd[1551]: notice: Additional logging available in /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 pacemakerd[1551]: notice: Starting Pacemaker 1.1.23-1.0.1.el7_9.1
Aug 11 10:49:44 pcs01 systemd: Started PCS GUI and remote configuration interface.
Aug 11 10:49:44 pcs01 systemd: Reached target Multi-User System.
Aug 11 10:49:44 pcs01 systemd: Starting Update UTMP about System Runlevel Changes...
Aug 11 10:49:44 pcs01 systemd: Started Update UTMP about System Runlevel Changes.
Aug 11 10:49:44 pcs01 pacemakerd[1551]: warning: Quorum lost
Aug 11 10:49:44 pcs01 pacemakerd[1551]: notice: Node pcs01 state is now member
Aug 11 10:49:44 pcs01 stonith-ng[1563]: notice: Additional logging available in /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 stonith-ng[1563]: notice: Connecting to cluster infrastructure: corosync
Aug 11 10:49:44 pcs01 lrmd[1564]: notice: Additional logging available in /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 attrd[1565]: notice: Additional logging available in /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 cib[1562]: notice: Additional logging available in /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 attrd[1565]: notice: Connecting to cluster infrastructure: corosync
Aug 11 10:49:44 pcs01 pengine[1566]: notice: Additional logging available in /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 attrd[1565]: notice: Node pcs01 state is now member
Aug 11 10:49:44 pcs01 crmd[1567]: notice: Additional logging available in /var/log/cluster/corosync.log
Aug 11 10:49:44 pcs01 cib[1562]: notice: Connecting to cluster infrastructure: corosync
Aug 11 10:49:44 pcs01 stonith-ng[1563]: notice: Node pcs01 state is now member
Aug 11 10:49:44 pcs01 cib[1562]: notice: Node pcs01 state is now member
Aug 11 10:49:44 pcs01 kdumpctl: /usr/bin/kdumpctl: line 586: /sys/class/watchdog/watchdog0/device/modalias: No such file or directory
Aug 11 10:49:45 pcs01 crmd[1567]: notice: Connecting to cluster infrastructure: corosync
Aug 11 10:49:45 pcs01 attrd[1565]: notice: Recorded local node as attribute writer (was unset)
Aug 11 10:49:45 pcs01 stonith-ng[1563]: notice: Watchdog will be used via SBD if fencing is required
Aug 11 10:49:45 pcs01 crmd[1567]: warning: Quorum lost
Aug 11 10:49:45 pcs01 crmd[1567]: notice: Node pcs01 state is now member
Aug 11 10:49:45 pcs01 crmd[1567]: notice: The local CRM is operational
Aug 11 10:49:45 pcs01 crmd[1567]: notice: State transition S_STARTING -> S_PENDING
Aug 11 10:49:45 pcs01 kdumpctl: kexec: loaded kdump kernel
Aug 11 10:49:45 pcs01 kdumpctl: Starting kdump: [OK]
Aug 11 10:49:45 pcs01 systemd: Started Crash recovery kernel arming.
Aug 11 10:49:45 pcs01 systemd: Startup finished in 606ms (kernel) + 1.203s (initrd) + 2.762s (userspace) = 4.572s.
Aug 11 10:49:45 pcs01 stonith-ng[1563]: notice: Added 'sbd_fencing' to the device list (1 active devices)
Aug 11 10:49:47 pcs01 systemd: Created slice User Slice of root.
Aug 11 10:49:47 pcs01 systemd: Started Session 1 of user root.
Aug 11 10:49:47 pcs01 systemd-logind: New session 1 of user root.
Aug 11 10:49:47 pcs01 crmd[1567]: notice: Fencer successfully connected
Aug 11 10:49:47 pcs01 chronyd[879]: Selected source 203.107.6.88
Aug 11 10:50:01 pcs01 systemd: Started Session 2 of user root.
Aug 11 10:50:06 pcs01 crmd[1567]: warning: Input I_DC_TIMEOUT received in state S_PENDING from crm_timer_popped
Aug 11 10:50:06 pcs01 crmd[1567]: notice: State transition S_ELECTION -> S_INTEGRATION
Aug 11 10:50:07 pcs01 pengine[1566]: notice: Watchdog will be used via SBD if fencing is required
Aug 11 10:50:07 pcs01 pengine[1566]: warning: Fencing and resource management disabled due to lack of quorum
Aug 11 10:50:07 pcs01 pengine[1566]: warning: Node pcs02 is unclean!
Aug 11 10:50:07 pcs01 pengine[1566]: notice: Cannot fence unclean nodes until quorum is attained (or no-quorum-policy is set to ignore)
Aug 11 10:50:07 pcs01 pengine[1566]: notice: * Start virtualip ( pcs01 ) due to no quorum (blocked)
Aug 11 10:50:07 pcs01 pengine[1566]: notice: * Start vg01 ( pcs01 ) due to no quorum (blocked)
Aug 11 10:50:07 pcs01 pengine[1566]: notice: * Start u01 ( pcs01 ) due to no quorum (blocked)
Aug 11 10:50:07 pcs01 pengine[1566]: notice: * Start listener ( pcs01 ) due to no quorum (blocked)
Aug 11 10:50:07 pcs01 pengine[1566]: notice: * Start orcl ( pcs01 ) due to no quorum (blocked)
Aug 11 10:50:07 pcs01 pengine[1566]: notice: * Start sbd_fencing ( pcs01 ) due to no quorum (blocked)
Aug 11 10:50:07 pcs01 pengine[1566]: warning: Calculated transition 0 (with warnings), saving inputs in /var/lib/pacemaker/pengine/pe-warn-18.bz2
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Initiating monitor operation virtualip_monitor_0 locally on pcs01
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Initiating monitor operation vg01_monitor_0 locally on pcs01
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Initiating monitor operation u01_monitor_0 locally on pcs01
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Initiating monitor operation listener_monitor_0 locally on pcs01
Aug 11 10:50:07 pcs01 Filesystem(u01)[1857]: WARNING: Couldn't find device [/dev/vg01/lvol01]. Expected /dev/??? to exist
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Result of probe operation for virtualip on pcs01: 7 (not running)
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Initiating monitor operation orcl_monitor_0 locally on pcs01
Aug 11 10:50:07 pcs01 LVM(vg01)[1825]: WARNING: LVM Volume vg01 is not available (stopped)
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Result of probe operation for vg01 on pcs01: 7 (not running)
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Initiating monitor operation sbd_fencing_monitor_0 locally on pcs01
Aug 11 10:50:07 pcs01 oralsnr(listener)[1904]: INFO: lsnrctl: required binary not installed
Aug 11 10:50:07 pcs01 oracle(orcl)[1961]: INFO: sqlplus: required binary not installed
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Result of probe operation for listener on pcs01: 7 (not running)
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Result of probe operation for u01 on pcs01: 7 (not running)
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Result of probe operation for orcl on pcs01: 7 (not running)
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Result of probe operation for sbd_fencing on pcs01: 7 (not running)
Aug 11 10:50:07 pcs01 crmd[1567]: notice: Transition 0 (Complete=6, Pending=0, Fired=0, Skipped=0, Incomplete=0, Source=/var/lib/pacemaker/pengine/pe-warn-18.bz2): Complete
Aug 11 10:50:07 pcs01 crmd[1567]: notice: State transition S_TRANSITION_ENGINE -> S_IDLE
Aug 11 10:53:21 pcs01 systemd: Reloading.
Aug 11 10:53:21 pcs01 systemd: [/usr/lib/systemd/system/systemd-pstore.service:22] Unknown lvalue 'StateDirectory' in section 'Service'
Aug 11 10:53:26 pcs01 kernel: XFS (dm-2): Mounting V4 Filesystem
Aug 11 10:53:26 pcs01 kernel: XFS (dm-2): Starting recovery (logdev: internal)
Aug 11 10:53:26 pcs01 kernel: XFS (dm-2): Ending recovery (logdev: internal)
Aug 11 10:53:26 pcs01 kernel: xfs filesystem being mounted at /u01 supports timestamps until 2038 (0x7fffffff)
Aug 11 10:53:31 pcs01 su: (to oracle) root on none
Aug 11 10:53:39 pcs01 su: (to oracle) root on none
Aug 11 10:53:45 pcs01 su: (to oracle) root on none
Aug 11 10:53:45 pcs01 su: (to oracle) root on none
Aug 11 10:53:45 pcs01 su: (to oracle) root on none
Aug 11 10:53:45 pcs01 su: (to oracle) root on none
Aug 11 10:53:46 pcs01 su: (to oracle) root on none
Aug 11 10:53:46 pcs01 su: (to oracle) root on none
Aug 11 10:53:51 pcs01 su: (to oracle) root on pts/0
```

------ Above: log entries from the manual start

------ Below: log entries after node 2 has come back up

```
Aug 11 10:59:42 pcs01 corosync[1216]: [TOTEM ] A new membership (192.168.52.191:265) was formed. Members joined: 2
Aug 11 10:59:42 pcs01 corosync[1216]: [CPG ] downlist left_list: 0 received
Aug 11 10:59:42 pcs01 corosync[1216]: [CPG ] downlist left_list: 0 received
Aug 11 10:59:42 pcs01 corosync[1216]: [QUORUM] This node is within the primary component and will provide service.
Aug 11 10:59:42 pcs01 corosync[1216]: [QUORUM] Members[2]: 1 2
Aug 11 10:59:42 pcs01 corosync[1216]: [MAIN ] Completed service synchronization, ready to provide service.
Aug 11 10:59:42 pcs01 pacemakerd[1551]: notice: Quorum acquired
Aug 11 10:59:42 pcs01 pacemakerd[1551]: notice: Node pcs02 state is now member
Aug 11 10:59:42 pcs01 crmd[1567]: notice: Quorum acquired
Aug 11 10:59:42 pcs01 crmd[1567]: notice: Node pcs02 state is now member
Aug 11 10:59:43 pcs01 attrd[1565]: notice: Node pcs02 state is now member
Aug 11 10:59:43 pcs01 stonith-ng[1563]: notice: Node pcs02 state is now member
Aug 11 10:59:43 pcs01 cib[1562]: notice: Node pcs02 state is now member
Aug 11 10:59:43 pcs01 sbd[895]: notice: inquisitor_child: Servant pcmk is healthy (age: 0)
Aug 11 10:59:44 pcs01 crmd[1567]: notice: State transition S_IDLE -> S_INTEGRATION
Aug 11 10:59:44 pcs01 attrd[1565]: notice: Recorded local node as attribute writer (was unset)
Aug 11 10:59:47 pcs01 pengine[1566]: notice: Watchdog will be used via SBD if fencing is required
Aug 11 10:59:47 pcs01 pengine[1566]: notice: * Start virtualip ( pcs01 )
Aug 11 10:59:47 pcs01 pengine[1566]: notice: * Start vg01 ( pcs01 )
Aug 11 10:59:47 pcs01 pengine[1566]: notice: * Start u01 ( pcs01 )
Aug 11 10:59:47 pcs01 pengine[1566]: notice: * Start listener ( pcs01 )
Aug 11 10:59:47 pcs01 pengine[1566]: notice: * Start orcl ( pcs01 )
Aug 11 10:59:47 pcs01 pengine[1566]: notice: * Start sbd_fencing ( pcs02 )
Aug 11 10:59:47 pcs01 pengine[1566]: notice: Calculated transition 1, saving inputs in /var/lib/pacemaker/pengine/pe-input-89.bz2
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation virtualip_monitor_0 on pcs02
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation vg01_monitor_0 on pcs02
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation u01_monitor_0 on pcs02
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation listener_monitor_0 on pcs02
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating start operation virtualip_start_0 locally on pcs01
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation orcl_monitor_0 on pcs02
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation sbd_fencing_monitor_0 on pcs02
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Result of start operation for virtualip on pcs01: 0 (ok)
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation virtualip_monitor_10000 locally on pcs01
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating start operation vg01_start_0 locally on pcs01
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating start operation sbd_fencing_start_0 on pcs02
Aug 11 10:59:47 pcs01 LVM(vg01)[3468]: WARNING: Disable lvmetad in lvm.conf. lvmetad should never be enabled in a clustered environment. Set use_lvmetad=0 and kill the lvmetad process
Aug 11 10:59:47 pcs01 systemd: Reloading.
Aug 11 10:59:47 pcs01 systemd: [/usr/lib/systemd/system/systemd-pstore.service:22] Unknown lvalue 'StateDirectory' in section 'Service'
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation sbd_fencing_monitor_60000 on pcs02
Aug 11 10:59:47 pcs01 LVM(vg01)[3468]: INFO: Activating volume group vg01
Aug 11 10:59:47 pcs01 LVM(vg01)[3468]: INFO: Reading volume groups from cache. Found volume group "ol" using metadata type lvm2 Found volume group "vg01" using metadata type lvm2
Aug 11 10:59:47 pcs01 LVM(vg01)[3468]: INFO: 1 logical volume(s) in volume group "vg01" now active
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Result of start operation for vg01 on pcs01: 0 (ok)
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation vg01_monitor_10000 locally on pcs01
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating start operation u01_start_0 locally on pcs01
Aug 11 10:59:47 pcs01 Filesystem(u01)[3618]: INFO: Running start for /dev/vg01/lvol01 on /u01
Aug 11 10:59:47 pcs01 Filesystem(u01)[3618]: INFO: Filesystem /u01 is already mounted.
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Result of start operation for u01 on pcs01: 0 (ok)
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation u01_monitor_20000 locally on pcs01
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating start operation listener_start_0 locally on pcs01
Aug 11 10:59:47 pcs01 oralsnr(listener)[3706]: INFO: Listener listener already running
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Result of start operation for listener on pcs01: 0 (ok)
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating monitor operation listener_monitor_10000 locally on pcs01
Aug 11 10:59:47 pcs01 crmd[1567]: notice: Initiating start operation orcl_start_0 locally on pcs01
Aug 11 10:59:48 pcs01 su: (to oracle) root on none
Aug 11 10:59:48 pcs01 systemd: Created slice User Slice of oracle.
Aug 11 10:59:48 pcs01 systemd: Started Session c1 of user oracle.
Aug 11 10:59:48 pcs01 oracle(orcl)[3798]: INFO: Oracle instance orcl already running
Aug 11 10:59:48 pcs01 crmd[1567]: notice: Result of start operation for orcl on pcs01: 0 (ok)
Aug 11 10:59:48 pcs01 crmd[1567]: notice: Initiating monitor operation orcl_monitor_120000 locally on pcs01
Aug 11 10:59:48 pcs01 systemd: Removed slice User Slice of oracle.
Aug 11 10:59:48 pcs01 su: (to oracle) root on none
Aug 11 10:59:48 pcs01 systemd: Created slice User Slice of oracle.
Aug 11 10:59:48 pcs01 systemd: Started Session c2 of user oracle.
Aug 11 10:59:48 pcs01 crmd[1567]: notice: Transition 1 (Complete=20, Pending=0, Fired=0, Skipped=0, Incomplete=0, Source=/var/lib/pacemaker/pengine/pe-input-89.bz2): Complete
Aug 11 10:59:48 pcs01 crmd[1567]: notice: State transition S_TRANSITION_ENGINE -> S_IDLE
Aug 11 10:59:48 pcs01 systemd: Removed slice User Slice of oracle.
Aug 11 11:00:00 pcs01 su: (to oracle) root on pts/0
Aug 11 11:00:01 pcs01 systemd: Started Session 3 of user root.
Aug 11 11:00:13 pcs01 systemd: Started Session 4 of user root.
Aug 11 11:00:13 pcs01 systemd-logind: New session 4 of user root.
```
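The log explains both halves of the test:

- While pcs01 was alone, the policy engine scheduled every start but marked each one `due to no quorum (blocked)`.
- Once pcs02 rejoined and quorum was acquired, the cluster issued the starts itself. The Filesystem, oralsnr and oracle agents found `/u01` mounted and the listener and instance running, so they simply reported success (`already mounted`, `already running`).

The sketch below pulls those two kinds of lines out of a syslog file. `blocked_starts` and `adopted_resources` are hypothetical helpers. They rely on the message wording in this log, which may differ in other Pacemaker or resource-agents versions.

```shell
#!/usr/bin/env bash
# Hypothetical log helpers; the patterns match the message wording in this
# post's /var/log/messages (Pacemaker 1.1 and resource-agents on EL7).

# Resources whose start the policy engine blocked for lack of quorum,
# printed once each, in order of first appearance.
blocked_starts() {
  sed -n 's/.*\* Start[[:space:]]\{1,\}\([^[:space:]]\{1,\}\).*due to no quorum (blocked).*/\1/p' "$1" \
    | awk '!seen[$0]++'
}

# agent(resource) pairs whose start found the resource already active,
# e.g. "oracle(orcl)" from "oracle(orcl)[3798]: INFO: ... already running".
adopted_resources() {
  sed -n 's/.*[[:space:]]\([A-Za-z0-9_]\{1,\}([^)]*)\)\[[0-9]\{1,\}]: INFO: .*already.*/\1/p' "$1"
}
```

For example, `blocked_starts /var/log/messages` lists what was held back while pcs01 was alone. `adopted_resources /var/log/messages` shows which manually started resources the cluster later took over without restarting them.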

Last modified: 2022-08-11 11:08:18


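[Copyright notice] This article is original content by a 墨天轮 (modb.pro) user. When reposting, credit the source (墨天轮), the article link and the author; otherwise the author and 墨天轮 reserve the right to pursue liability. To report suspected plagiarism or infringement on 墨天轮, email contact@modb.pro with supporting evidence; once verified, 墨天轮 will remove the content immediately.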