Test goal: change the IP address of a single-node Ceph host from 192.168.52.193 to 192.168.52.198 while keeping the Ceph cluster healthy.

Ceph version: v15.2.14

OS version: Ubuntu 18.04

References: https://blog.csdn.net/qq_36607860/article/details/115413824

https://www.cnblogs.com/CLTANG/p/4332682.html

1. Update the IP entries in /etc/hosts (the hosts file must be updated on every node in the cluster)

vi /etc/hosts

192.168.1.185 node1

192.168.1.186 node2

192.168.1.187 node3
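The same hosts edit can be scripted so that it is applied identically on every node. This is a minimal sketch and not part of the original procedure: `update_hosts_ip` is a hypothetical helper name, and it assumes every hosts entry carries the IP address in its first field.

```shell
#!/bin/sh
# update_hosts_ip OLD_IP NEW_IP FILE
# Hypothetical helper: rewrites hosts entries whose first field is exactly OLD_IP.
# Assumes the usual hosts layout "IP name [alias...]"; a backup is kept in FILE.bak.
update_hosts_ip() {
    old_ip=$1
    new_ip=$2
    file=$3
    cp "$file" "$file.bak" || return 1
    # Compare the whole first field, so 192.168.1.18 never matches 192.168.1.185.
    # Rewritten lines are re-joined with single spaces by awk.
    awk -v old="$old_ip" -v new="$new_ip" \
        '$1 == old { $1 = new } { print }' "$file.bak" > "$file"
}
```

For the single-node case in these notes it would be called as `update_hosts_ip 192.168.52.193 192.168.52.198 /etc/hosts`, run as root.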

2. Update the IP addresses in /etc/ceph/ceph.conf

vi ceph.conf

[global]

fsid = b679e017-68bd-4f06-83f3-f03be36d97fe

mon_initial_members = node1, node2, node3

mon_host = 192.168.1.185,192.168.1.186,192.168.1.187

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

After editing, run ceph-deploy --overwrite-conf config push node1 node2 node3
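If you prefer not to edit the file by hand, the mon_host line can be rewritten non-interactively. The sketch below is an illustration only: `set_mon_host` is a hypothetical helper name, and it assumes the new value contains no `/` characters (plain IPs and commas are fine).

```shell
#!/bin/sh
# set_mon_host NEW_VALUE FILE
# Hypothetical helper: replaces the value of the mon_host line in a ceph.conf-style file.
# Accepts both the "mon_host" and "mon host" spellings; a backup is kept in FILE.bak.
# Assumption: NEW_VALUE contains no "/" (it is used inside a sed replacement).
set_mon_host() {
    new_value=$1
    file=$2
    cp "$file" "$file.bak" || return 1
    sed -E "s/^([[:space:]]*mon[_ ]host[[:space:]]*=[[:space:]]*).*/\1${new_value}/" \
        "$file.bak" > "$file"
}
```

Example: `set_mon_host 192.168.52.198 /etc/ceph/ceph.conf`, followed by the ceph-deploy config push.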

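3. Obtain the monmap

monmaptool --create --generate -c /etc/ceph/ceph.conf ./monmap generates a monmap file in the current directory.

If the cluster IPs have not been changed yet, you can instead run ceph mon getmap -o ./monmap to write the monmap file to the current directory.

4. Print the cluster monmap with monmaptool --print monmap: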

[root@node1 ~]# monmaptool --print monmap

monmaptool: monmap file monmap

epoch 0

fsid b679e017-68bd-4f06-83f3-f03be36d97fe

last_changed 2021-04-02 15:21:23.495691

created 2021-04-02 15:21:23.495691

0: 192.168.1.185:6789/0 mon.node1 # This is the part to change: the new cluster network IPs go here

1: 192.168.1.186:6789/0 mon.node2

2: 192.168.1.187:6789/0 mon.node3

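5. Remove the entries for the existing mon IDs

Use monmaptool --rm node1 --rm node2 --rm node3 ./monmap to remove the entries for the corresponding mon IDs (node1, node2 and node3 are taken from mon.node1, mon.node2 and mon.node3 above)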

[root@node1 ~]# monmaptool --rm node1 --rm node2 --rm node3 monmap

monmaptool: monmap file monmap

monmaptool: removing node1

monmaptool: removing node2

monmaptool: removing node3

monmaptool: writing epoch 0 to monmap (0 monitors)

6. Print the monmap again; it now contains no monitors:

[root@node1 ~]# monmaptool --print monmap

monmaptool: monmap file monmap

epoch 0

fsid b679e017-68bd-4f06-83f3-f03be36d97fe

last_changed 2021-04-02 15:21:23.495691

created 2021-04-02 15:21:23.495691

7. Add the current IP of each host to the monmap

[root@node1 ~]# monmaptool --add node1 192.168.1.185:6789 --add node2 192.168.1.186:6789 --add node3 192.168.1.187:6789 monmap

monmaptool: monmap file monmap

monmaptool: writing epoch 0 to monmap (3 monitors)

[root@node1 ~]# monmaptool --print monmap

epoch 0

fsid b679e017-68bd-4f06-83f3-f03be36d97fe

last_changed 2021-04-02 15:21:23.495691

created 2021-04-02 15:21:23.495691

0: 192.168.1.185:6789/0 mon.node1

1: 192.168.1.186:6789/0 mon.node2

2: 192.168.1.187:6789/0 mon.node3
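Steps 5 to 7 can be collected into one small wrapper. This is a sketch under stated assumptions, not a definitive script: `rebuild_monmap` and the `MONMAPTOOL` override variable are hypothetical names, and it assumes the monmap already lists each monitor under the name you pass in (a map created with --generate may use placeholder names such as noname-a, as the session log shows, and those must be removed by that name instead). It only uses the --rm, --add and --print options shown above.

```shell
#!/bin/sh
# MONMAPTOOL can be overridden (for example with "echo") for a dry run.
MONMAPTOOL=${MONMAPTOOL:-monmaptool}

# rebuild_monmap MONMAP NAME=ADDR [NAME=ADDR...]
# Hypothetical helper: for every NAME=ADDR pair, remove the old entry for NAME
# and add it back with the new address, then print the resulting map.
rebuild_monmap() {
    monmap=$1
    shift
    for pair in "$@"; do
        name=${pair%%=*}    # text before the first "="
        addr=${pair#*=}     # text after the first "="
        "$MONMAPTOOL" --rm "$name" "$monmap" || return 1
        "$MONMAPTOOL" --add "$name" "$addr" "$monmap" || return 1
    done
    "$MONMAPTOOL" --print "$monmap"
}
```

Example: `rebuild_monmap ./monmap node1=192.168.1.185:6789 node2=192.168.1.186:6789 node3=192.168.1.187:6789`. Setting `MONMAPTOOL=echo` first prints the commands instead of running them.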

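8. The monmap has now been modified. Copy the modified monmap to every node in the cluster with scp.

9. Inject the monmap into the cluster

Before injecting, stop all Ceph services in the cluster (systemctl stop ceph.service), because every component has to reread its configuration; most importantly, the mons must reload the monmap to pick up the new cluster communication IPs.

# Run this on every node; replace {{node1}} with the local mon name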

systemctl stop ceph

ceph-mon -i {{node1}} --inject-monmap ./monmap

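# If ceph-mon -i fails with a LOCK error like the one below, a running ceph-mon process still holds the store; try the following

# rocksdb: IO error: While lock file: /var/lib/ceph/mon/ceph-node1/store.db/LOCK: Resource temporarily unavailable

ps -ef|grep ceph

# Find the ceph processes with ps, then kill -9 <pid> every ceph-related process

kill -9 1379

# Some of the killed processes may be restarted automatically; that is fine, just run ceph-mon -i {{node1}} --inject-monmap ./monmap again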

ceph-mon -i {{node1}} --inject-monmap ./monmap
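The LOCK error can be avoided by checking for a running ceph-mon before injecting. The following is a minimal sketch: `inject_monmap` and the `CEPH_MON` override variable are hypothetical names, and the check relies on `pgrep` being installed. It runs the same ceph-mon -i ... --inject-monmap command as above and nothing more.

```shell
#!/bin/sh
# CEPH_MON can be overridden (for example with "echo") for a dry run.
CEPH_MON=${CEPH_MON:-ceph-mon}

# inject_monmap MON_ID MONMAP
# Hypothetical helper: refuses to inject while a ceph-mon process is running,
# because the running daemon holds the lock on the mon store.
inject_monmap() {
    mon_id=$1
    monmap=$2
    if pgrep -x ceph-mon >/dev/null 2>&1; then
        echo "ceph-mon is still running; stop it first (e.g. systemctl stop ceph-mon@$mon_id)" >&2
        return 1
    fi
    "$CEPH_MON" -i "$mon_id" --inject-monmap "$monmap"
}
```

Example: `inject_monmap ceph01 ./monmap`. Note that when ceph-mon is run as root, files it creates in the mon store may end up owned by root, which is the permission problem described in the troubleshooting notes.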

10. After the injection completes, restart Ceph with systemctl restart ceph.service

systemctl restart ceph.service

Reboot all machines

init 6

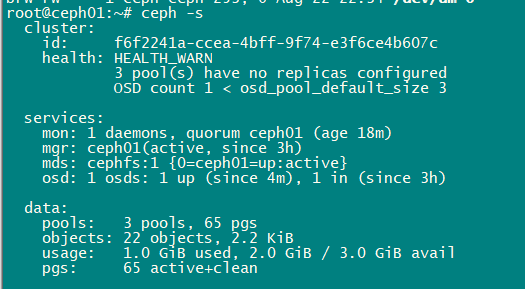

After all machines have rebooted, check the Ceph cluster status

root@node1:~# ceph -s

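Problems encountered (in short, troubleshoot by following the logs):

1. After the change, the mon failed to start; the mon log under /var/log/ceph/ reported a permission error.

Fix: change the owner to ceph: chown -R ceph:ceph /var/lib/ceph/mon/ceph-ceph01/store.db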

systemctl restart ceph-mon@ceph01
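Before running chown, it can help to see exactly which files have the wrong owner. A minimal sketch, with `list_not_owned_by` as a hypothetical helper name; it only wraps `find ... ! -user`.

```shell
#!/bin/sh
# list_not_owned_by OWNER DIR
# Hypothetical helper: prints every path under DIR whose owner is not OWNER.
# OWNER may be a user name or a numeric uid, as accepted by find -user.
list_not_owned_by() {
    owner=$1
    dir=$2
    find "$dir" ! -user "$owner" -print
}
```

Example: `list_not_owned_by ceph /var/lib/ceph/mon/ceph-ceph01`. An empty result means every file under the mon data directory already belongs to ceph.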

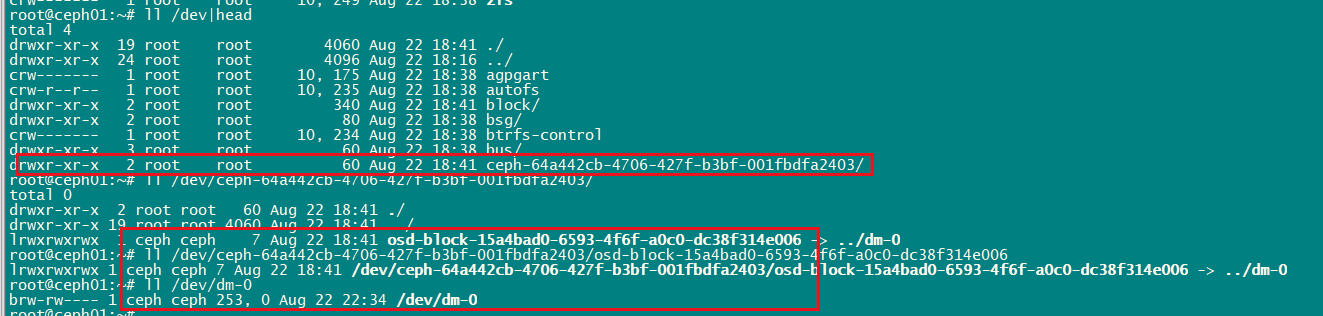

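2. After the change, ceph -s showed the OSD as unknown and it would not start; the osd.0 log under /var/log/ceph/ also reported a permission error.

Fix: change the owner of the disk device to ceph: chown ceph:ceph /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006

systemctl restart ceph-osd@0

Note that permissions differ at each directory level.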

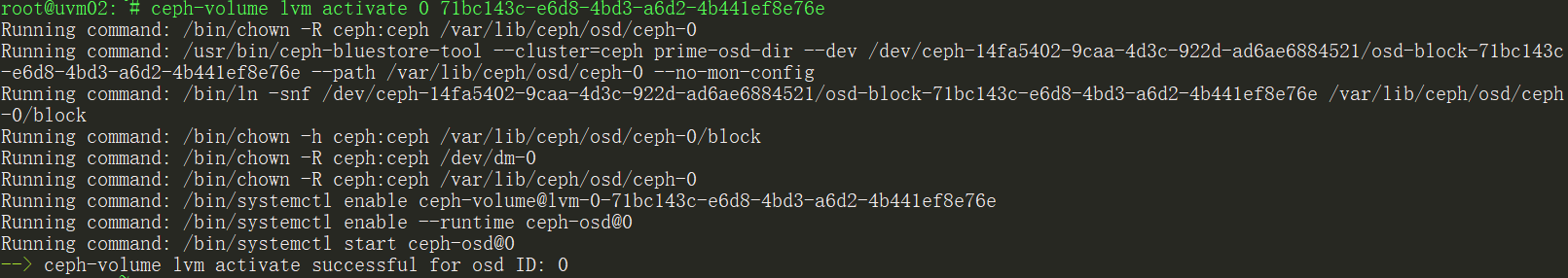

If the problem persists, the OSD may need to be activated manually (a bug):

ceph-volume lvm list

ceph-volume lvm activate 0 15a4bad0-6593-4f6f-a0c0-dc38f314e006

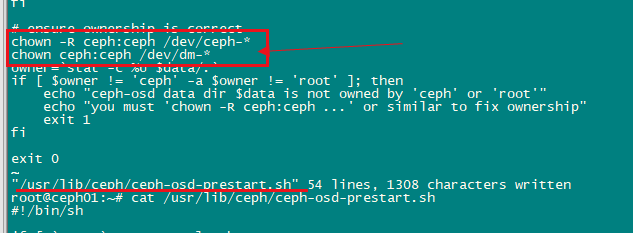

After the steps above, the ownership reverted to root once the system rebooted, and the OSD service could not start.

The final fix for this bug was to add the two chown lines at the top of the excerpt below to /usr/lib/ceph/ceph-osd-prestart.sh, ahead of the existing ownership check:

chown -R ceph:ceph /dev/ceph-*

chown ceph:ceph /dev/dm-*

owner=`stat -c %U $data/.`

if [ $owner != 'ceph' -a $owner != 'root' ]; then

echo "ceph-osd data dir $data is not owned by 'ceph' or 'root'"

echo "you must 'chown -R ceph:ceph ...' or similar to fix ownership"

exit 1

fi

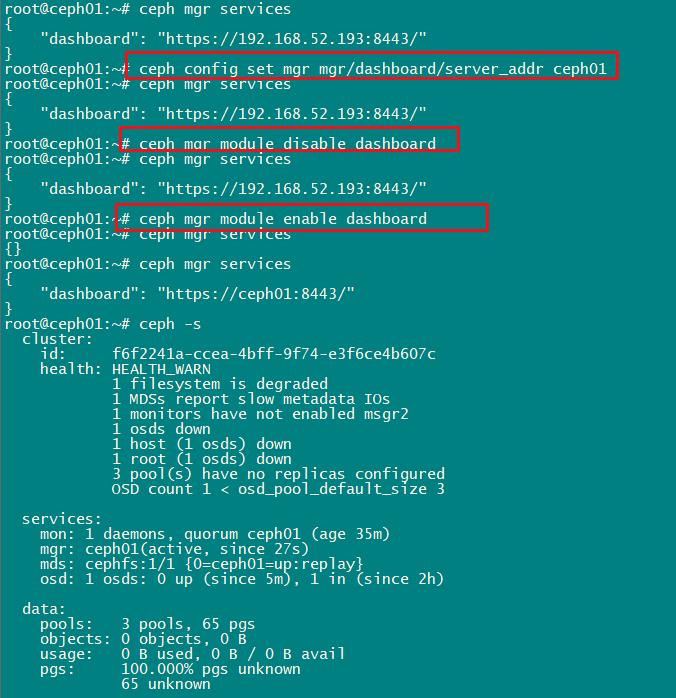

3. After the change, ceph -s reported: Module 'dashboard' has failed: OSError("No socket could be created -- (('192.168.52.193', 8443): [Errno 99] Cannot assign requested address)",)

Fix:

root@ceph01:~# ceph mgr services

{

"dashboard": "https://192.168.52.193:8443/"

}

root@ceph01:~# ceph config set mgr mgr/dashboard/server_addr ceph01

root@ceph01:~# ceph mgr services

{

"dashboard": "https://192.168.52.193:8443/"

}

root@ceph01:~# ceph mgr module disable dashboard

root@ceph01:~# ceph mgr services

{

"dashboard": "https://192.168.52.193:8443/"

}

root@ceph01:~# ceph mgr module enable dashboard

root@ceph01:~# ceph mgr services

{}

root@ceph01:~# ceph mgr services

{

"dashboard": "https://ceph01:8443/"

}

Note: make sure ceph01 in /etc/hosts maps to 192.168.52.198, and remove the 127.0.1.1 line.
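Since server_addr is set to the hostname ceph01, the address the dashboard binds to presumably depends on how that name resolves, so it is worth checking every hosts entry for it. A minimal sketch, with `hosts_lookup` as a hypothetical helper name; it reads only the given hosts file and does not consult DNS.

```shell
#!/bin/sh
# hosts_lookup NAME [FILE]
# Hypothetical helper: prints every IP address that FILE (default /etc/hosts)
# maps to NAME, one per line, in file order.
hosts_lookup() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v n="$name" '
        /^[[:space:]]*#/ { next }            # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break         # stop at a trailing comment
                if ($i == n) { print $1; break }
            }
        }' "$file"
}
```

Example: `hosts_lookup ceph01`. More than one line of output (for instance both 127.0.1.1 and the real address) indicates the leftover entry that should be removed.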

root@ceph01:~# echo "linux123" > 1.txt

root@ceph01:~# ceph mgr services

{

"dashboard": "https://ceph01:8443/"

}

root@ceph01:~# ceph dashboard ac-user-create cephadmin -i 1.txt administrator

{"username": "cephadmin", "password": "$2b$12$qri7YF5NP.DoltHPJE.i/ulY0qkC3koJUDLCJh44yUItds6rB5chm", "roles": ["administrator"], "name": null, "email": null, "lastUpdate": 1661222817, "enabled": true, "pwdExpirationDate": null, "pwdUpdateRequired": false}

root@ceph01:~#

The full operation log follows:

*****[2022-08-23 10:41:50]*****

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-84-generic x86_64)

Last login: Mon Aug 22 18:38:57 2022 from 192.168.52.1

omnisky@ceph01:~$ sudo -i -uroot

[sudo] password for omnisky:

root@ceph01:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:a9:d0:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.52.193/24 brd 192.168.52.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::2eec:1cec:6b06:2a13/64 scope link noprefixroute

valid_lft forever preferred_lft forever

root@ceph01:~# cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 ceph01

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.52.193 ceph01

root@ceph01:~# ll /var/lib/ceph/

total 60

drwxr-x--- 15 ceph ceph 4096 Aug 22 18:36 ./

drwxr-xr-x 66 root root 4096 Aug 22 18:35 ../

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:40 bootstrap-mds/

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:40 bootstrap-mgr/

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:41 bootstrap-osd/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 bootstrap-rbd/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 bootstrap-rbd-mirror/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 bootstrap-rgw/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:35 crash/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 mds/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 mgr/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 mon/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:41 osd/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 radosgw/

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:40 tmp/

root@ceph01:~# ll /var/lib/ceph/mon

total 12

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ./

drwxr-x--- 15 ceph ceph 4096 Aug 22 18:36 ../

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ceph-ceph01/

root@ceph01:~# ll /var/lib/ceph/mon/ceph-ceph01/

total 24

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ./

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ../

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 done

-rw------- 1 ceph ceph 77 Aug 22 18:40 keyring

-rw------- 1 ceph ceph 8 Aug 22 18:40 kv_backend

-rw------- 1 ceph ceph 8 Aug 22 18:40 min_mon_release

drwxr-xr-x 2 ceph ceph 4096 Aug 22 19:41 store.db/

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 systemd

root@ceph01:~# ll /var/lib/ceph/mon/ceph-ceph01/store.db/

total 60724

drwxr-xr-x 2 ceph ceph 4096 Aug 22 19:41 ./

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ../

-rw------- 1 ceph ceph 1444246 Aug 22 19:42 000018.log

-rw------- 1 ceph ceph 21039973 Aug 22 19:41 000020.sst

-rw------- 1 ceph ceph 16 Aug 22 18:40 CURRENT

-rw------- 1 ceph ceph 37 Aug 22 18:40 IDENTITY

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 LOCK

-rw------- 1 ceph ceph 664 Aug 22 19:41 MANIFEST-000005

-rw------- 1 ceph ceph 4943 Aug 22 18:40 OPTIONS-000005

-rw------- 1 ceph ceph 4944 Aug 22 18:40 OPTIONS-000008

root@ceph01:~# ceph mgr services

{

"dashboard": "https://ceph01:8443/"

}

root@ceph01:~# ceph dashboard ac-user-create cephadmin cephpassword administrator

Error EINVAL: Please specify the file containing the password/secret with "-i" option.

root@ceph01:~# ceph dashboard ac-user-create cephadmin -i cephpassword administrator

Can't open input file cephpassword: [Errno 2] No such file or directory: 'cephpassword'

root@ceph01:~# ceph mgr services

{

"dashboard": "https://ceph01:8443/"

}

root@ceph01:~# pwd

/root

root@ceph01:~# echo "123456" > 1.txt

root@ceph01:~# ceph dashboard ac-user-create admin -i 1.txt administrator

Error EINVAL: Password is too weak.

root@ceph01:~# echo "linux123" > 1.txt

root@ceph01:~# ceph dashboard ac-user-create admin -i 1.txt administrator

User 'admin' already exists

root@ceph01:~# ceph mgr services

{

"dashboard": "https://ceph01:8443/"

}

root@ceph01:~# ceph dashboard ac-user-create cephadmin -i 1.txt administrator

{"username": "cephadmin", "password": "$2b$12$qri7YF5NP.DoltHPJE.i/ulY0qkC3koJUDLCJh44yUItds6rB5chm", "roles": ["administrator"], "name": null, "email": null, "lastUpdate": 1661222817, "enabled": true, "pwdExpirationDate": null, "pwdUpdateRequired": false}

root@ceph01:~#

root@ceph01:~# systemctl status ceph-mon@ceph01

● ceph-mon@ceph01.service - Ceph cluster monitor daemon

Loaded: loaded (/lib/systemd/system/ceph-mon@.service; indirect; vendor preset: enabled)

Active: active (running) since Mon 2022-08-22 18:40:31 PDT; 1h 11min ago

Main PID: 1761 (ceph-mon)

Tasks: 27

CGroup: /system.slice/system-ceph\x2dmon.slice/ceph-mon@ceph01.service

└─1761 /usr/bin/ceph-mon -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

Aug 22 18:40:31 ceph01 systemd[1]: Started Ceph cluster monitor daemon.

Aug 22 19:39:48 ceph01 ceph-mon[1761]: 2022-08-22T19:39:48.203-0700 7f2ec6bb5700 -1 mon.ceph01@0(leader).osd e28 no beacon from osd.0

root@ceph01:~# systemctl status ceph-mds@ceph01

● ceph-mds@ceph01.service - Ceph metadata server daemon

Loaded: loaded (/lib/systemd/system/ceph-mds@.service; indirect; vendor preset: enabled)

Active: active (running) since Mon 2022-08-22 18:40:46 PDT; 1h 11min ago

Main PID: 2266 (ceph-mds)

Tasks: 23

CGroup: /system.slice/system-ceph\x2dmds.slice/ceph-mds@ceph01.service

└─2266 /usr/bin/ceph-mds -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

Aug 22 18:40:46 ceph01 systemd[1]: Started Ceph metadata server daemon.

Aug 22 18:40:46 ceph01 ceph-mds[2266]: starting mds.ceph01 at

root@ceph01:~# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_WARN

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 26m)

mgr: ceph01(active, since 70m)

mds: cephfs:1 {0=ceph01=up:active}

osd: 1 osds: 1 up (since 12m), 1 in (since 70m)

data:

pools: 3 pools, 65 pgs

objects: 22 objects, 2.2 KiB

usage: 1.0 GiB used, 2.0 GiB / 3.0 GiB avail

pgs: 65 active+clean

root@ceph01:~# cd /var/log/ceph/

root@ceph01:/var/log/ceph# ll

total 1040

drwxrws--T 2 ceph ceph 4096 Aug 22 18:41 ./

drwxrwxr-x 13 root syslog 4096 Aug 22 19:39 ../

-rw------- 1 ceph ceph 42040 Aug 22 19:52 ceph.audit.log

-rw------- 1 ceph ceph 148339 Aug 22 19:52 ceph.log

-rw-r--r-- 1 ceph ceph 4674 Aug 22 18:50 ceph-mds.ceph01.log

-rw-r--r-- 1 ceph ceph 153154 Aug 22 19:52 ceph-mgr.ceph01.log

-rw-r--r-- 1 ceph ceph 322831 Aug 22 19:52 ceph-mon.ceph01.log

-rw-r--r-- 1 ceph ceph 337307 Aug 22 19:49 ceph-osd.0.log

-rw-r--r-- 1 root ceph 18393 Aug 22 18:41 ceph-volume.log

root@ceph01:/var/log/ceph# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_WARN

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 42m)

mgr: ceph01(active, since 85m)

mds: cephfs:1 {0=ceph01=up:active}

osd: 1 osds: 1 up (since 28m), 1 in (since 86m)

data:

pools: 3 pools, 65 pgs

objects: 22 objects, 2.2 KiB

usage: 1.0 GiB used, 2.0 GiB / 3.0 GiB avail

pgs: 65 active+clean

root@ceph01:/var/log/ceph# vi /etc/hosts

127.0.0.1 localhost

127.0.1.1 ceph01

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.52.193 ceph01

"/etc/hosts" 10 lines, 243 characters written

root@ceph01:/var/log/ceph# cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 ceph01

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.52.198 ceph01

root@ceph01:/var/log/ceph# find / -name ceph.conf

/usr/lib/tmpfiles.d/ceph.conf

find: ‘/run/user/1000/gvfs’: Permission denied

/etc/ceph/ceph.conf

/root/soft/cephFs-20220315/ceph_deploy/ceph.conf

root@ceph01:/var/log/ceph# cat /usr/lib/tmpfiles.d/ceph.conf

d /run/ceph 0770 ceph ceph -

root@ceph01:/var/log/ceph# cat /etc/ceph/ceph.conf

[global]

fsid = f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

public_network = 192.168.52.0/24

cluster_network = 192.168.52.0/24

mon_initial_members = ceph01

mon_host = 192.168.52.193

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon clock drift allowed = 2

mon clock drift warn backoff = 30

root@ceph01:/var/log/ceph# cat /root/soft/cephFs-20220315/ceph_deploy/ceph.conf

[global]

fsid = f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

public_network = 192.168.52.0/24

cluster_network = 192.168.52.0/24

mon_initial_members = ceph01

mon_host = 192.168.52.193

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon clock drift allowed = 2

mon clock drift warn backoff = 30

root@ceph01:/var/log/ceph# cd /etc/ceph/

root@ceph01:/etc/ceph# vi ceph.conf

[global]

fsid = f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

public_network = 192.168.52.0/24

cluster_network = 192.168.52.0/24

mon_initial_members = ceph01

mon_host = 192.168.52.193

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon clock drift allowed = 2

mon clock drift warn backoff = 30

"ceph.conf" 12 lines, 327 characters written

root@ceph01:/etc/ceph# ceph-deploy --overwrite-conf config push ceph01

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy --overwrite-conf config push ceph01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : push

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f874a53b6e0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph01']

[ceph_deploy.cli][INFO ] func : <function config at 0x7f874a5848d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.config][DEBUG ] Pushing config to ceph01

[ceph01][DEBUG ] connected to host: ceph01

[ceph01][DEBUG ] detect platform information from remote host

[ceph01][DEBUG ] detect machine type

[ceph01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

root@ceph01:/etc/ceph# cat /etc/ceph/ceph.conf

[global]

fsid = f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

public_network = 192.168.52.0/24

cluster_network = 192.168.52.0/24

mon_initial_members = ceph01

mon_host = 192.168.52.198

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon clock drift allowed = 2

mon clock drift warn backoff = 30

root@ceph01:/etc/ceph# ceph -s

^CCluster connection aborted

root@ceph01:/etc/ceph# monmaptool --create --generate -c /etc/ceph/ceph.conf ./monmap

monmaptool: monmap file ./monmap

monmaptool: set fsid to f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

monmaptool: writing epoch 0 to ./monmap (1 monitors)

root@ceph01:/etc/ceph# monmaptool --print monmap

monmaptool: monmap file monmap

epoch 0

fsid f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

last_changed 2022-08-22T20:10:02.177085-0700

created 2022-08-22T20:10:02.177085-0700

min_mon_release 0 (unknown)

0: [v2:192.168.52.198:3300/0,v1:192.168.52.198:6789/0] mon.noname-a

root@ceph01:/etc/ceph# monmaptool --rm noname-a ./monmap

monmaptool: monmap file ./monmap

monmaptool: removing noname-a

monmaptool: writing epoch 0 to ./monmap (0 monitors)

root@ceph01:/etc/ceph# monmaptool --print monmap

monmaptool: monmap file monmap

epoch 0

fsid f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

last_changed 2022-08-22T20:10:02.177085-0700

created 2022-08-22T20:10:02.177085-0700

min_mon_release 0 (unknown)

root@ceph01:/etc/ceph# monmaptool --add ceph01 192.168.52.198:6789

monmaptool: must specify monmap filename

monmaptool -h for usage

root@ceph01:/etc/ceph# monmaptool --add ceph01 192.168.52.198:6789 monmap

monmaptool: monmap file monmap

monmaptool: writing epoch 0 to monmap (1 monitors)

root@ceph01:/etc/ceph# monmaptool --print monmap

monmaptool: monmap file monmap

epoch 0

fsid f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

last_changed 2022-08-22T20:10:02.177085-0700

created 2022-08-22T20:10:02.177085-0700

min_mon_release 0 (unknown)

0: v1:192.168.52.198:6789/0 mon.ceph01

root@ceph01:/etc/ceph# systemctl stop ceph

root@ceph01:/etc/ceph# ceph-mon -i ceph01 --inject-monmap ./monmap

2022-08-22T20:12:14.684-0700 7f6d8574e540 -1 rocksdb: IO error: While lock file: /var/lib/ceph/mon/ceph-ceph01/store.db/LOCK: Resource temporarily unavailable

2022-08-22T20:12:14.684-0700 7f6d8574e540 -1 error opening mon data directory at '/var/lib/ceph/mon/ceph-ceph01': (22) Invalid argument

root@ceph01:/etc/ceph# ps -ef|grep ceph

root 746 1 0 19:23 ? 00:00:00 /usr/bin/python3.6 /usr/bin/ceph-crash

avahi 759 1 0 19:23 ? 00:00:00 avahi-daemon: running [ceph01.local]

ceph 1761 1 0 19:25 ? 00:00:25 /usr/bin/ceph-mon -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

ceph 2082 1 0 19:25 ? 00:00:23 /usr/bin/ceph-mgr -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

ceph 2266 1 0 19:25 ? 00:00:03 /usr/bin/ceph-mds -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

ceph 2637 1 0 19:26 ? 00:00:09 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

root 5580 4898 0 20:14 pts/1 00:00:00 grep --color=auto ceph

root@ceph01:/etc/ceph# kill -9 1761 2082 2266 2637

root@ceph01:/etc/ceph# ps -ef|grep ceph

root 746 1 0 19:23 ? 00:00:00 /usr/bin/python3.6 /usr/bin/ceph-crash

avahi 759 1 0 19:23 ? 00:00:00 avahi-daemon: running [ceph01.local]

ceph 5596 1 1 20:15 ? 00:00:00 /usr/bin/ceph-mds -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

root 5606 4898 0 20:15 pts/1 00:00:00 grep --color=auto ceph

root@ceph01:/etc/ceph# kill -9 5596

root@ceph01:/etc/ceph# ps -ef|grep ceph

root 746 1 0 19:23 ? 00:00:00 /usr/bin/python3.6 /usr/bin/ceph-crash

avahi 759 1 0 19:23 ? 00:00:00 avahi-daemon: running [ceph01.local]

ceph 5608 1 0 20:15 ? 00:00:00 /usr/bin/ceph-mgr -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

ceph 5609 1 1 20:15 ? 00:00:00 /usr/bin/ceph-mon -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

ceph 5613 1 0 20:15 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

ceph 5672 1 1 20:15 ? 00:00:00 /usr/bin/ceph-mds -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

root 5682 4898 0 20:15 pts/1 00:00:00 grep --color=auto ceph

root@ceph01:/etc/ceph# kill -9 746 5608 5609 5613 5672

root@ceph01:/etc/ceph# ps -ef|grep ceph

avahi 759 1 0 19:23 ? 00:00:00 avahi-daemon: running [ceph01.local]

ceph 5691 1 0 20:16 ? 00:00:00 /usr/bin/ceph-mds -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

root 5701 4898 0 20:16 pts/1 00:00:00 grep --color=auto ceph

root@ceph01:/etc/ceph# ceph-mon -i ceph01 --inject-monmap ./monmap

root@ceph01:/etc/ceph# ps -ef|grep ceph

avahi 759 1 0 19:23 ? 00:00:00 avahi-daemon: running [ceph01.local]

ceph 5691 1 0 20:16 ? 00:00:00 /usr/bin/ceph-mds -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

ceph 5727 1 1 20:16 ? 00:00:00 /usr/bin/ceph-mgr -f --cluster ceph --id ceph01 --setuser ceph --setgroup ceph

root 5729 1 1 20:16 ? 00:00:00 /usr/bin/python3.6 /usr/bin/ceph-crash

ceph 5733 1 0 20:16 ? 00:00:00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

root 5760 4898 0 20:16 pts/1 00:00:00 grep --color=auto ceph

root@ceph01:/etc/ceph# systemctl restart ceph

root@ceph01:/etc/ceph# systemctl status ceph

● ceph.service - LSB: Start Ceph distributed file system daemons at boot time

Loaded: loaded (/etc/init.d/ceph; generated)

Active: active (exited) since Mon 2022-08-22 20:16:26 PDT; 4s ago

Docs: man:systemd-sysv-generator(8)

Process: 5773 ExecStart=/etc/init.d/ceph start (code=exited, status=0/SUCCESS)

Aug 22 20:16:26 ceph01 systemd[1]: Starting LSB: Start Ceph distributed file system daemons at boot time...

Aug 22 20:16:26 ceph01 systemd[1]: Started LSB: Start Ceph distributed file system daemons at boot time.

root@ceph01:/etc/ceph# systemctl status ceph-mon@ceph01

● ceph-mon@ceph01.service - Ceph cluster monitor daemon

Loaded: loaded (/lib/systemd/system/ceph-mon@.service; indirect; vendor preset: enabled)

Active: failed (Result: exit-code) since Mon 2022-08-22 20:16:55 PDT; 24s ago

Process: 5827 ExecStart=/usr/bin/ceph-mon -f --cluster ${CLUSTER} --id ceph01 --setuser ceph --setgroup ceph (code=exited, status=1

Main PID: 5827 (code=exited, status=1/FAILURE)

Aug 22 20:16:45 ceph01 systemd[1]: ceph-mon@ceph01.service: Main process exited, code=exited, status=1/FAILURE

Aug 22 20:16:45 ceph01 systemd[1]: ceph-mon@ceph01.service: Failed with result 'exit-code'.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Service hold-off time over, scheduling restart.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Scheduled restart job, restart counter is at 6.

Aug 22 20:16:55 ceph01 systemd[1]: Stopped Ceph cluster monitor daemon.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Start request repeated too quickly.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Failed with result 'exit-code'.

Aug 22 20:16:55 ceph01 systemd[1]: Failed to start Ceph cluster monitor daemon.

root@ceph01:/etc/ceph# tail -50 /var/log/ceph/ceph-mon.ceph01.log

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.new_table_reader_for_compaction_inputs: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.random_access_max_buffer_size: 1048576

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.use_adaptive_mutex: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.rate_limiter: (nil)

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.sst_file_manager.rate_bytes_per_sec: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.wal_recovery_mode: 2

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.enable_thread_tracking: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.enable_pipelined_write: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.allow_concurrent_memtable_write: 1

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.enable_write_thread_adaptive_yield: 1

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.write_thread_max_yield_usec: 100

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.write_thread_slow_yield_usec: 3

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.row_cache: None

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.wal_filter: None

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.avoid_flush_during_recovery: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.allow_ingest_behind: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.preserve_deletes: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.two_write_queues: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.manual_wal_flush: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.atomic_flush: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.avoid_unnecessary_blocking_io: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.max_background_jobs: 2

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.max_background_compactions: -1

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.avoid_flush_during_shutdown: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.writable_file_max_buffer_size: 1048576

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.delayed_write_rate : 16777216

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.max_total_wal_size: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.delete_obsolete_files_period_micros: 21600000000

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.stats_dump_period_sec: 600

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.stats_persist_period_sec: 600

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.stats_history_buffer_size: 1048576

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.max_open_files: -1

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.bytes_per_sync: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.wal_bytes_per_sync: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.compaction_readahead_size: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Compression algorithms supported:

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kZSTDNotFinalCompression supported: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kZSTD supported: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kXpressCompression supported: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kLZ4HCCompression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kLZ4Compression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kBZip2Compression supported: 0

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kZlibCompression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kSnappyCompression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: Fast CRC32 supported: Supported on x86

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: [db/db_impl_readonly.cc:22] Opening the db in read only mode

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: [db/db_impl.cc:390] Shutdown: canceling all background work

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: [db/db_impl.cc:563] Shutdown complete

2022-08-22T20:16:45.097-0700 7f88130ec540 -1 rocksdb: IO error: While opening a file for sequentially reading: /var/lib/ceph/mon/ceph-ceph01/store.db/CURRENT: Permission denied

2022-08-22T20:16:45.097-0700 7f88130ec540 -1 error opening mon data directory at '/var/lib/ceph/mon/ceph-ceph01': (22) Invalid argument

root@ceph01:/etc/ceph# ll /var/lib/ceph/mon

total 12

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ./

drwxr-x--- 15 ceph ceph 4096 Aug 22 18:36 ../

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ceph-ceph01/

root@ceph01:/etc/ceph# ll /var/lib/ceph/mon/ceph-ceph01/

total 24

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ./

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ../

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 done

-rw------- 1 ceph ceph 77 Aug 22 18:40 keyring

-rw------- 1 ceph ceph 8 Aug 22 18:40 kv_backend

-rw------- 1 ceph ceph 8 Aug 22 18:40 min_mon_release

drwxr-xr-x 2 ceph ceph 4096 Aug 22 20:16 store.db/

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 systemd

root@ceph01:/etc/ceph# ll /var/lib/ceph/mon/ceph-ceph01/store.db/

total 70508

drwxr-xr-x 2 ceph ceph 4096 Aug 22 20:16 ./

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ../

-rw------- 1 ceph ceph 25493929 Aug 22 20:12 000062.sst

-rw------- 1 ceph ceph 5314674 Aug 22 20:15 000064.sst

-rw------- 1 root root 242005 Aug 22 20:16 000067.sst

-rw------- 1 root root 410 Aug 22 20:16 000069.log

-rw------- 1 root root 16 Aug 22 20:16 CURRENT

-rw------- 1 ceph ceph 37 Aug 22 18:40 IDENTITY

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 LOCK

-rw------- 1 root root 219 Aug 22 20:16 MANIFEST-000068

-rw------- 1 ceph ceph 4944 Aug 22 20:15 OPTIONS-000068

-rw------- 1 root root 4944 Aug 22 20:16 OPTIONS-000071

root@ceph01:/etc/ceph# chown -R ceph:ceph /var/lib/ceph/mon/ceph-ceph01/store.db

root@ceph01:/etc/ceph# ll /var/lib/ceph/mon/ceph-ceph01/store.db/

total 70508

drwxr-xr-x 2 ceph ceph 4096 Aug 22 20:16 ./

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ../

-rw------- 1 ceph ceph 25493929 Aug 22 20:12 000062.sst

-rw------- 1 ceph ceph 5314674 Aug 22 20:15 000064.sst

-rw------- 1 ceph ceph 242005 Aug 22 20:16 000067.sst

-rw------- 1 ceph ceph 410 Aug 22 20:16 000069.log

-rw------- 1 ceph ceph 16 Aug 22 20:16 CURRENT

-rw------- 1 ceph ceph 37 Aug 22 18:40 IDENTITY

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 LOCK

-rw------- 1 ceph ceph 219 Aug 22 20:16 MANIFEST-000068

-rw------- 1 ceph ceph 4944 Aug 22 20:15 OPTIONS-000068

-rw------- 1 ceph ceph 4944 Aug 22 20:16 OPTIONS-000071

root@ceph01:/etc/ceph# systemctl restart ceph-mon@ceph01

Job for ceph-mon@ceph01.service failed because the control process exited with error code.

See "systemctl status ceph-mon@ceph01.service" and "journalctl -xe" for details.

root@ceph01:/etc/ceph# systemctl restart ceph

root@ceph01:/etc/ceph# systemctl status ceph-mon@ceph01

● ceph-mon@ceph01.service - Ceph cluster monitor daemon

Loaded: loaded (/lib/systemd/system/ceph-mon@.service; indirect; vendor preset: enabled)

Active: failed (Result: exit-code) since Mon 2022-08-22 20:16:55 PDT; 1min 53s ago

Process: 5827 ExecStart=/usr/bin/ceph-mon -f --cluster ${CLUSTER} --id ceph01 --setuser ceph --setgroup ceph (code=exited, status=1

Main PID: 5827 (code=exited, status=1/FAILURE)

Aug 22 20:16:45 ceph01 systemd[1]: ceph-mon@ceph01.service: Failed with result 'exit-code'.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Service hold-off time over, scheduling restart.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Scheduled restart job, restart counter is at 6.

Aug 22 20:16:55 ceph01 systemd[1]: Stopped Ceph cluster monitor daemon.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Start request repeated too quickly.

Aug 22 20:16:55 ceph01 systemd[1]: ceph-mon@ceph01.service: Failed with result 'exit-code'.

Aug 22 20:16:55 ceph01 systemd[1]: Failed to start Ceph cluster monitor daemon.

Aug 22 20:18:32 ceph01 systemd[1]: ceph-mon@ceph01.service: Start request repeated too quickly.

Aug 22 20:18:32 ceph01 systemd[1]: ceph-mon@ceph01.service: Failed with result 'exit-code'.

Aug 22 20:18:32 ceph01 systemd[1]: Failed to start Ceph cluster monitor daemon.

root@ceph01:/etc/ceph# tail -20 /var/log/ceph/ceph-mon.ceph01.log

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.stats_history_buffer_size: 1048576

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.max_open_files: -1

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.bytes_per_sync: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.wal_bytes_per_sync: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Options.compaction_readahead_size: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: Compression algorithms supported:

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kZSTDNotFinalCompression supported: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kZSTD supported: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kXpressCompression supported: 0

2022-08-22T20:16:45.093-0700 7f88130ec540 4 rocksdb: kLZ4HCCompression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kLZ4Compression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kBZip2Compression supported: 0

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kZlibCompression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: kSnappyCompression supported: 1

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: Fast CRC32 supported: Supported on x86

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: [db/db_impl_readonly.cc:22] Opening the db in read only mode

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: [db/db_impl.cc:390] Shutdown: canceling all background work

2022-08-22T20:16:45.097-0700 7f88130ec540 4 rocksdb: [db/db_impl.cc:563] Shutdown complete

2022-08-22T20:16:45.097-0700 7f88130ec540 -1 rocksdb: IO error: While opening a file for sequentially reading: /var/lib/ceph/mon/ceph-ceph01/store.db/CURRENT: Permission denied

2022-08-22T20:16:45.097-0700 7f88130ec540 -1 error opening mon data directory at '/var/lib/ceph/mon/ceph-ceph01': (22) Invalid argument
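
Note: the last log entries are stamped 20:16:45, and the journal above reports "Start request repeated too quickly". This suggests that the later restart never actually launched ceph-mon: systemd had hit the start rate limit for the unit and refused further starts, so the Permission denied lines are left over from the earlier attempts. Assuming that is the cause, clearing the failed state of the unit should allow it to start again. A sketch; the function name is hypothetical, systemctl reset-failed is a standard systemd command:

```shell
# Clear systemd's failed state (and its start rate-limit counter) for a unit,
# then start it again.
# clear_start_limit_and_start is a hypothetical helper name.
clear_start_limit_and_start() {
    unit="$1"
    systemctl reset-failed "$unit" &&
    systemctl start "$unit"
}
```

For the monitor in this transcript the call would be: clear_start_limit_and_start ceph-mon@ceph01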

root@ceph01:/etc/ceph# ll /var/lib/ceph/mon/ceph-ceph01/store.db/

total 70508

drwxr-xr-x 2 ceph ceph 4096 Aug 22 20:16 ./

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 ../

-rw------- 1 ceph ceph 25493929 Aug 22 20:12 000062.sst

-rw------- 1 ceph ceph 5314674 Aug 22 20:15 000064.sst

-rw------- 1 ceph ceph 242005 Aug 22 20:16 000067.sst

-rw------- 1 ceph ceph 410 Aug 22 20:16 000069.log

-rw------- 1 ceph ceph 16 Aug 22 20:16 CURRENT

-rw------- 1 ceph ceph 37 Aug 22 18:40 IDENTITY

-rw-r--r-- 1 ceph ceph 0 Aug 22 18:40 LOCK

-rw------- 1 ceph ceph 219 Aug 22 20:16 MANIFEST-000068

-rw------- 1 ceph ceph 4944 Aug 22 20:15 OPTIONS-000068

-rw------- 1 ceph ceph 4944 Aug 22 20:16 OPTIONS-000071

root@ceph01:/etc/ceph# nmtui

*****[2022-08-23 11:20:24]*****

root@ceph01:~# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_ERR

1 filesystem is degraded

1 MDSs report slow metadata IOs

Module 'dashboard' has failed: OSError("No socket could be created -- (('192.168.52.193', 8443): [Errno 99] Cannot assign requested address)",)

1 monitors have not enabled msgr2

Reduced data availability: 65 pgs inactive

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 4m)

mgr: ceph01(active, since 4m)

mds: cephfs:1/1 {0=ceph01=up:replay}

osd: 1 osds: 1 up (since 45m), 1 in (since 103m)

data:

pools: 3 pools, 65 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

65 unknown

root@ceph01:~# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_ERR

1 filesystem is degraded

1 MDSs report slow metadata IOs

Module 'dashboard' has failed: OSError("No socket could be created -- (('192.168.52.193', 8443): [Errno 99] Cannot assign requested address)",)

1 monitors have not enabled msgr2

1 osds down

1 host (1 osds) down

1 root (1 osds) down

Reduced data availability: 65 pgs inactive

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 32m)

mgr: ceph01(active, since 31m)

mds: cephfs:1/1 {0=ceph01=up:replay}

osd: 1 osds: 0 up (since 2m), 1 in (since 2h)

data:

pools: 3 pools, 65 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

65 unknown

root@ceph01:~# ceph mgr services

{

"dashboard": "https://192.168.52.193:8443/"

}

root@ceph01:~# ceph config set mgr mgr/dashboard/server_addr ceph01

root@ceph01:~# ceph mgr services

{

"dashboard": "https://192.168.52.193:8443/"

}

root@ceph01:~# ceph mgr module disable dashboard

root@ceph01:~# ceph mgr services

{

"dashboard": "https://192.168.52.193:8443/"

}

root@ceph01:~# ceph mgr module enable dashboard

root@ceph01:~# ceph mgr services

{}

root@ceph01:~# ceph mgr services

{

"dashboard": "https://ceph01:8443/"

}
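
Note: the dashboard error in ceph -s came from the mgr still trying to bind the old address 192.168.52.193. The steps above (set server_addr, then disable and re-enable the module) can be collected into one helper. A sketch that uses only the three ceph commands already shown in the transcript; the function name rebind_dashboard is made up:

```shell
# Point the mgr dashboard at a new bind address and reload the module.
# rebind_dashboard is a hypothetical helper name.
rebind_dashboard() {
    addr="$1"   # hostname or IP the dashboard should bind to
    ceph config set mgr mgr/dashboard/server_addr "$addr" &&
    ceph mgr module disable dashboard &&
    ceph mgr module enable dashboard
}
```

As seen above, ceph mgr services can return {} for a short while after the module is re-enabled, so check it again a few seconds later.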

root@ceph01:~# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_WARN

1 filesystem is degraded

1 MDSs report slow metadata IOs

1 monitors have not enabled msgr2

1 osds down

1 host (1 osds) down

1 root (1 osds) down

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 35m)

mgr: ceph01(active, since 27s)

mds: cephfs:1/1 {0=ceph01=up:replay}

osd: 1 osds: 0 up (since 5m), 1 in (since 2h)

data:

pools: 3 pools, 65 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

65 unknown

root@ceph01:~# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_WARN

1 filesystem is degraded

1 MDSs report slow metadata IOs

1 monitors have not enabled msgr2

1 osds down

1 host (1 osds) down

1 root (1 osds) down

Reduced data availability: 65 pgs inactive

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 36m)

mgr: ceph01(active, since 66s)

mds: cephfs:1/1 {0=ceph01=up:replay}

osd: 1 osds: 0 up (since 6m), 1 in (since 2h)

data:

pools: 3 pools, 65 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

65 unknown

root@ceph01:/var/log/ceph# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_WARN

1 filesystem is degraded

1 MDSs report slow metadata IOs

1 monitors have not enabled msgr2

1 osds down

1 host (1 osds) down

1 root (1 osds) down

Reduced data availability: 65 pgs inactive

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 47m)

mgr: ceph01(active, since 47m)

mds: cephfs:1/1 {0=ceph01=up:replay}

osd: 1 osds: 0 up (since 61m), 1 in (since 3h)

data:

pools: 3 pools, 65 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

65 unknown

root@ceph01:/var/log/ceph# ll /var/lib/ceph/

total 60

drwxr-x--- 15 ceph ceph 4096 Aug 22 18:36 ./

drwxr-xr-x 66 root root 4096 Aug 22 18:35 ../

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:40 bootstrap-mds/

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:40 bootstrap-mgr/

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:41 bootstrap-osd/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 bootstrap-rbd/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 bootstrap-rbd-mirror/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 bootstrap-rgw/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:35 crash/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 mds/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 mgr/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:40 mon/

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:41 osd/

drwxr-xr-x 2 ceph ceph 4096 Aug 5 2021 radosgw/

drwxr-xr-x 2 ceph ceph 4096 Aug 22 18:40 tmp/

root@ceph01:/var/log/ceph# ll /var/lib/ceph/osd

total 8

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:41 ./

drwxr-x--- 15 ceph ceph 4096 Aug 22 18:36 ../

drwxrwxrwt 2 ceph ceph 180 Aug 22 21:04 ceph-0/

root@ceph01:/var/log/ceph# ll /var/lib/ceph/osd/ceph-0/

total 28

drwxrwxrwt 2 ceph ceph 180 Aug 22 21:04 ./

drwxr-xr-x 3 ceph ceph 4096 Aug 22 18:41 ../

lrwxrwxrwx 1 ceph ceph 93 Aug 22 21:04 block -> /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006

-rw------- 1 ceph ceph 37 Aug 22 21:04 ceph_fsid

-rw------- 1 ceph ceph 37 Aug 22 21:04 fsid

-rw------- 1 ceph ceph 55 Aug 22 21:04 keyring

-rw------- 1 ceph ceph 6 Aug 22 21:04 ready

-rw------- 1 ceph ceph 10 Aug 22 21:04 type

-rw------- 1 ceph ceph 2 Aug 22 21:04 whoami

root@ceph01:/var/log/ceph# ll /var/lib/ceph/osd/ceph-0/block

lrwxrwxrwx 1 ceph ceph 93 Aug 22 21:04 /var/lib/ceph/osd/ceph-0/block -> /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006

root@ceph01:/var/log/ceph# ll /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006

lrwxrwxrwx 1 root root 7 Aug 22 21:04 /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006 -> ../dm-0

root@ceph01:/var/log/ceph# chown ceph:ceph /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006

root@ceph01:/var/log/ceph# ll /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006

lrwxrwxrwx 1 root root 7 Aug 22 21:04 /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006 -> ../dm-0

root@ceph01:/var/log/ceph# ll /dev/dm-0

brw-rw---- 1 ceph ceph 253, 0 Aug 22 21:04 /dev/dm-0
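
Note: chown follows symlinks by default, which is why the link itself still shows root root after the chown while the target /dev/dm-0 shows ceph ceph. The transcript does not show the owner of /dev/dm-0 before the chown, so it is unclear whether the command changed anything. To check the owner of the real device behind a link in one step, something like the following can be used. A sketch assuming GNU coreutils (readlink -f, stat -c); the function name target_owner is made up:

```shell
# Resolve a (possibly chained) symlink and print owner:group of the final target.
# target_owner is a hypothetical helper name.
target_owner() {
    target=$(readlink -f "$1") || return 1
    stat -c '%U:%G %n' "$target"
}
```

Usage on this host would be: target_owner /var/lib/ceph/osd/ceph-0/block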

root@ceph01:/var/log/ceph# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_WARN

1 filesystem is degraded

1 MDSs report slow metadata IOs

1 monitors have not enabled msgr2

1 osds down

1 host (1 osds) down

1 root (1 osds) down

Reduced data availability: 65 pgs inactive

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 49m)

mgr: ceph01(active, since 48m)

mds: cephfs:1/1 {0=ceph01=up:replay}

osd: 1 osds: 0 up (since 63m), 1 in (since 3h)

data:

pools: 3 pools, 65 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs: 100.000% pgs unknown

65 unknown

root@ceph01:/var/log/ceph# systemctl restart ceph-osd@ceph01

Job for ceph-osd@ceph01.service failed because the control process exited with error code.

See "systemctl status ceph-osd@ceph01.service" and "journalctl -xe" for details.

root@ceph01:/var/log/ceph# systemctl restart ceph-osd@0

root@ceph01:/var/log/ceph# systemctl status ceph-osd@0

● ceph-osd@0.service - Ceph object storage daemon osd.0

Loaded: loaded (/lib/systemd/system/ceph-osd@.service; indirect; vendor preset: enabled)

Active: active (running) since Mon 2022-08-22 21:54:09 PDT; 5s ago

Process: 2648 ExecStartPre=/usr/lib/ceph/ceph-osd-prestart.sh --cluster ${CLUSTER} --id 0 (code=exited, status=0/SUCCESS)

Main PID: 2652 (ceph-osd)

Tasks: 58

CGroup: /system.slice/system-ceph\x2dosd.slice/ceph-osd@0.service

└─2652 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

Aug 22 21:54:09 ceph01 systemd[1]: Starting Ceph object storage daemon osd.0...

Aug 22 21:54:09 ceph01 systemd[1]: Started Ceph object storage daemon osd.0.

Aug 22 21:54:10 ceph01 ceph-osd[2652]: 2022-08-22T21:54:10.053-0700 7f5ff3471d80 -1 osd.0 31 log_to_monitors {default=true}

Aug 22 21:54:10 ceph01 ceph-osd[2652]: 2022-08-22T21:54:10.813-0700 7f5fe789d700 -1 osd.0 31 set_numa_affinity unable to identify public interface '' numa node: (2) No such file or director
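
Note: ceph-osd@ceph01 fails because OSD units are instantiated by numeric OSD id, not by hostname (see --id 0 in the process line above), whereas the mon unit uses the name ceph01. The ids present on a host can be derived from the data directories under /var/lib/ceph/osd. A sketch assuming the default cluster name "ceph"; the function name osd_units is made up:

```shell
# Print the systemd unit name for every OSD data directory found.
# Assumes directories are named ceph-<id> (default cluster name "ceph").
# osd_units is a hypothetical helper name.
osd_units() {
    base="${1:-/var/lib/ceph/osd}"
    for dir in "$base"/ceph-*; do
        [ -d "$dir" ] || continue          # skip if the glob matched nothing
        echo "ceph-osd@${dir##*/ceph-}"    # keep only the numeric id
    done
}
```

On this host it would print ceph-osd@0, which is the unit restarted above.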

root@ceph01:/var/log/ceph# ceph -s

cluster:

id: f6f2241a-ccea-4bff-9f74-e3f6ce4b607c

health: HEALTH_WARN

1 monitors have not enabled msgr2

3 pool(s) have no replicas configured

OSD count 1 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph01 (age 49m)

mgr: ceph01(active, since 49m)

mds: cephfs:1 {0=ceph01=up:active}

osd: 1 osds: 1 up (since 10s), 1 in (since 3h)

data:

pools: 3 pools, 65 pgs

objects: 22 objects, 2.2 KiB

usage: 1.0 GiB used, 2.0 GiB / 3.0 GiB avail

pgs: 65 active+clean
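
Note: with the OSD back up, three warnings remain. The two about replicas and OSD count are expected on a single-OSD test host. "1 monitors have not enabled msgr2" probably means the monitor is registered with only its v1 address (port 6789); that cause is an assumption, the transcript does not confirm it. ceph mon enable-msgr2 is the Ceph command intended for this warning. A sketch that runs it only when the warning is present; the function name is made up:

```shell
# Enable msgr2 on the monitors only if the health output reports the warning.
# enable_msgr2_if_warned is a hypothetical helper name.
enable_msgr2_if_warned() {
    if ceph health detail | grep -q 'have not enabled msgr2'; then
        ceph mon enable-msgr2
    fi
}
```

Run ceph -s again afterwards to confirm the warning is gone.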

root@ceph01:/var/log/ceph# ll /var/lib/ceph/osd/ceph-0/block

lrwxrwxrwx 1 ceph ceph 93 Aug 22 21:04 /var/lib/ceph/osd/ceph-0/block -> /dev/ceph-64a442cb-4706-427f-b3bf-001fbdfa2403/osd-block-15a4bad0-6593-4f6f-a0c0-dc38f314e006

root@ceph01:/var/log/ceph# pwd

/var/log/ceph

root@ceph01:/var/log/ceph# ll

total 6276

drwxrws--T 2 ceph ceph 4096 Aug 22 20:20 ./

drwxrwxr-x 13 root syslog 4096 Aug 22 21:04 ../

-rw------- 1 ceph ceph 356827 Aug 22 21:54 ceph.audit.log

-rw------- 1 ceph ceph 925743 Aug 22 21:54 ceph.log

-rw-r--r-- 1 ceph ceph 118345 Aug 22 21:54 ceph-mds.ceph01.log

-rw-r--r-- 1 ceph ceph 791284 Aug 22 21:54 ceph-mgr.ceph01.log

-rw-r--r-- 1 ceph ceph 3666612 Aug 22 21:54 ceph-mon.ceph01.log

-rw-r--r-- 1 ceph ceph 490669 Aug 22 21:54 ceph-osd.0.log

-rw-r--r-- 1 root ceph 25775 Aug 22 21:04 ceph-volume.log

-rw-r--r-- 1 root ceph 3650 Aug 22 21:04 ceph-volume-systemd.log