动机

完成第一篇文章后,我意识到通过CockroachDB支持的FerretDB代理MongoDB集合有很多可能性。CockroachDB具有独特的数据定位功能,可通过多区域抽象、反转索引和部分索引、计算列,当然还有强大的一致性。今天,我们将通过FerretDB将MongoDB集合恢复为CockroachDB,并扩展我们之前的学习。

高级步骤

-

启动9节点多区域集群(CockroachDB专用)

-

启动FerretDB(Docker)

-

使用mongorestore恢复MongoDB数据集

-

考虑

-

结论

逐步说明

启动9节点多区域集群(CockroachDB专用)

在本教程中,我将使用CockroachDB专用集群,因为我需要访问多区域集群。您可以通过cockroach demo使用本地多区域集群——没有示例数据库——全局——不安全——节点9,但由于我将摄入大量数据,这可能不可行。这也是一个演示FerretDB如何使用CockroachDB证书身份验证的机会。我将我的集群称为artem mr,并使用我们全新的云CLI访问集群。

ccloud cluster sql artem-mr

Retrieving cluster info: succeeded

Downloading cluster cert to /Users/artem/Library/CockroachCloud/certs/artem-mr-ca.crt: succeeded

Retrieving SQL user list: succeeded

SQL username: artem

SQL user password: *****

Starting CockroachDB SQL shell...

#

# Welcome to the CockroachDB SQL shell.

# All statements must be terminated by a semicolon.

# To exit, type: \q.

#

artem@artem-mr-7xw.aws-us-east-1.cockroachlabs.cloud:26257/defaultdb>

让我们快速浏览一下参与集群的地区:

SHOW REGIONS FROM CLUSTER;

region | zones

----------------+-------------------------------------------------

aws-us-east-1 | {aws-us-east-1a,aws-us-east-1b,aws-us-east-1c}

aws-us-east-2 | {aws-us-east-2a,aws-us-east-2b,aws-us-east-2c}

aws-us-west-2 | {aws-us-west-2a,aws-us-west-2b,aws-us-west-2c}

让我们验证访问集群的区域:

select gateway_region();

gateway_region

------------------

aws-us-east-1

让我们创建一个数据库,用于托管MongoDB数据。

CREATE DATABASE ferretdb;

USE ferretdb;

启动FerretDB(Docker)

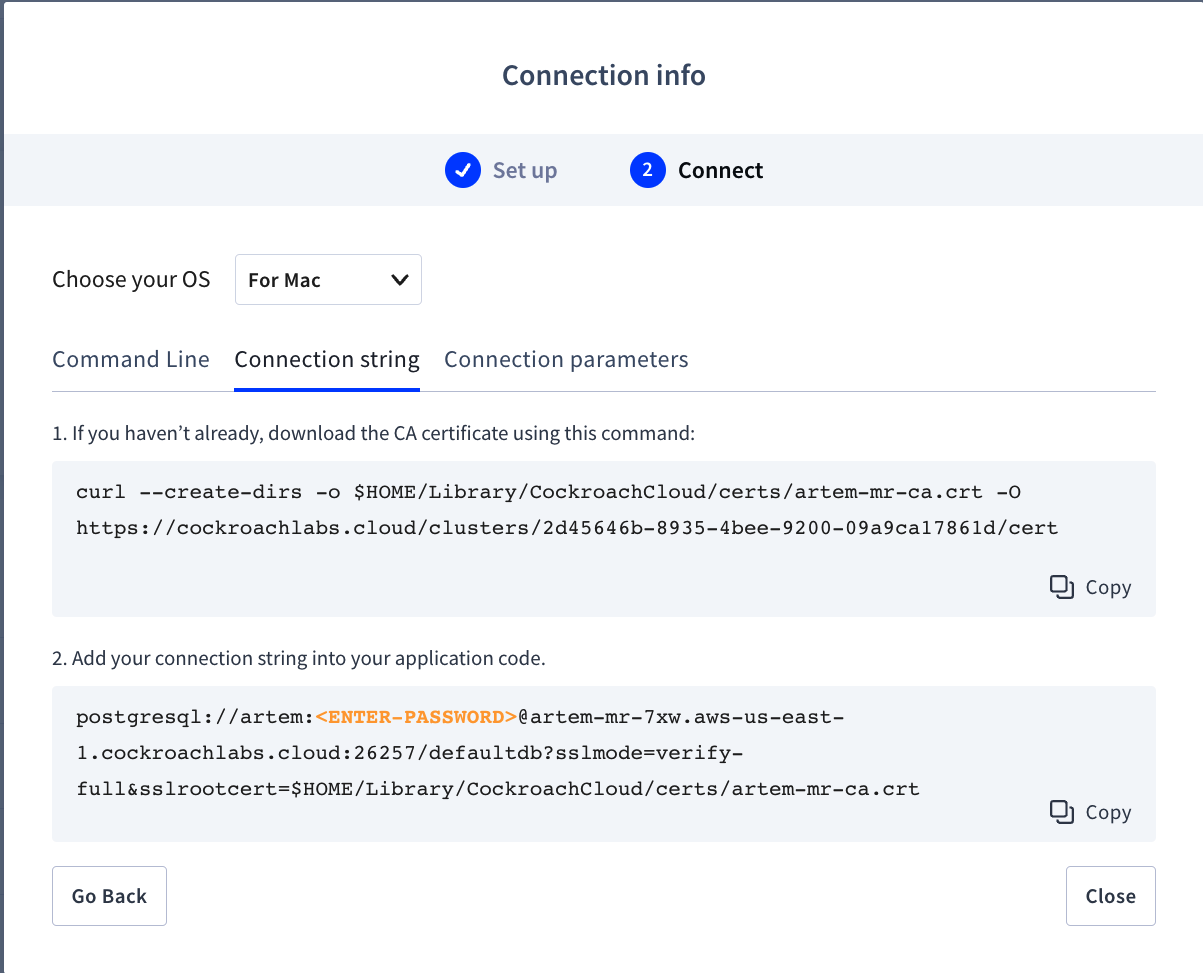

我将在本地启动FerretDB的Docker Compose实例。对于连接字符串,我们需要导航到Cockroach Cloud控制台并切换连接模式。在连接字符串下,我们可以找到填写组合文件所需的所有必要信息。

version: "3"

services:

ferretdb:

image: ghcr.io/ferretdb/ferretdb:latest

hostname: 'ferretdb'

container_name: 'ferretdb'

restart: 'on-failure'

command:

[

'-listen-addr=:27017',

## Dedicated multiregion cluster

'-postgresql-url=postgresql://artem:password@artem-mr-7xw.aws-us-east-1.cockroachlabs.cloud:26257/ferretdb?sslmode=verify-full&sslrootcert=/certs/artem-mr-ca.crt'

]

ports:

- 27017:27017

volumes:

- /Users/artem/Library/CockroachCloud/certs/artem-mr-ca.crt:/certs/artem-mr-ca.crt

请记住,我们需要在连接字符串中指定适当的数据库。因为我使用的是ferretdb,所以我将其包含在我的撰写文件中。

我们还需要安装连接到CockroachDB所需的CA证书。它是我第一次通过CLI连接时自动下载的。

将文件保存为docker compose。yml并发出docker compose-up-d命令来启动容器。

docker compose up -d

[+] Running 1/1

⠿ Container ferretdb Started

检查FerretDB容器日志可能是个好主意,以确保一切正常。

docker logs ferretdb

2022-09-08T13:51:35.456Z INFO pgdb v4@v4.16.1/conn.go:354 Exec {"sql": ";", "args": [], "time": "17.351386ms", "commandTag": "", "pid": 1849370}

2022-09-08T13:51:35.456Z DEBUG // 172.24.0.1:63788 -> 172.24.0.2:27017 clientconn/conn.go:437Response header: length: 190, id: 49, response_to: 5362, opcode: OP_MSG

2022-09-08T13:51:35.457Z DEBUG // 172.24.0.1:63788 -> 172.24.0.2:27017 clientconn/conn.go:438Response message:

{

"Checksum": 0,

"FlagBits": 0,

"Sections": [

{

"Document": {

"$k": [

"ismaster",

"maxBsonObjectSize",

"maxMessageSizeBytes",

"maxWriteBatchSize",

"localTime",

"minWireVersion",

"maxWireVersion",

"readOnly",

"ok"

],

"ismaster": true,

"maxBsonObjectSize": 16777216,

"maxMessageSizeBytes": 48000000,

"maxWriteBatchSize": 100000,

"localTime": {

"$d": 1662645095456

},

"minWireVersion": 13,

"maxWireVersion": 13,

"readOnly": false,

"ok": {

"$f": 1

}

},

"Kind": 0

}

]

}

既然我们没有看到任何明显的错误,让我们继续。

本地安装mongosh或在Docker Compose中为mongosh添加另一个容器。请参阅我上一篇文章中的示例。

我在本地安装了mongosh。我们可以通过在终端发布mongosh来访问集装箱:

mongosh

或以下:

mongosh mongodb://localhost/

Current Mongosh Log ID: 631a77e81460be94daef222e

Connecting to: mongodb://localhost/?directConnection=true&serverSelectionTimeoutMS=2000&appName=mongosh+1.5.4

Using MongoDB: 6.0.42

Using Mongosh: 1.5.4

For mongosh info see: https://docs.mongodb.com/mongodb-shell/

------

The server generated these startup warnings when booting

2022-09-08T23:16:58.426Z: Powered by FerretDB v0.5.3 and PostgreSQL 13.0.0.

2022-09-08T23:16:58.426Z: Please star us on GitHub: https://github.com/FerretDB/FerretDB

------

test>

按照说明在主机上安装Mongo工具。您也可以在安装了这些工具的情况下添加另一个容器,但我将把它作为作业留给您。

brew tap mongodb/brew

brew install mongodb-database-tools

让我们将数据加载到数据库中。我们将使用Mflix数据集,但任何数据集都可以。

在新终端窗口中:

wget https://atlas-education.s3.amazonaws.com/sampledata.archive

使用mongorestore恢复MongoDB数据集

使用我们之前安装的mongodb数据库工具包将存档恢复到FerretDB中。

mongorestore --archive=sampledata.archive

2022-09-08T09:57:55.587-0400 preparing collections to restore from

2022-09-08T09:57:55.595-0400 reading metadata for sample_mflix.comments from archive 'sampledata.archive'

2022-09-08T09:57:55.596-0400 reading metadata for sample_mflix.users from archive 'sampledata.archive'

2022-09-08T09:57:55.596-0400 reading metadata for sample_training.grades from archive 'sampledata.archive'

2022-09-08T09:57:55.596-0400 reading metadata for sample_airbnb.listingsAndReviews from archive 'sampledata.archive'

2022-09-08T09:57:55.596-0400 reading metadata for sample_analytics.customers from archive 'sampledata.archive'

2022-09-08T09:57:55.596-0400 reading metadata for sample_restaurants.restaurants from archive 'sampledata.archive'

2022-09-08T09:57:55.596-0400 reading metadata for sample_restaurants.neighborhoods from archive 'sampledata.archive'

...

2022-09-08T09:59:40.537-0400 sample_mflix.comments 278KB

2022-09-08T09:59:43.536-0400 sample_mflix.comments 278KB

2022-09-08T09:59:46.536-0400 sample_mflix.comments 278KB

2022-09-08T09:59:49.536-0400 sample_mflix.comments 278KB

2022-09-08T09:59:52.537-0400 sample_mflix.comments 278KB

我的FerretDB位于新泽西州北部的某个地方,我们的CockroachDB网关区域是aws-us-east-1。回想一下,我们在设置Docker Compose时指定了它。它可能有一些网络延迟。

下面是我们可以使用Cockroach CLI进行的快速测试:

SELECT 1;

?column?

------------

1

(1 row)

Time: 20ms total (execution 1ms / network 19ms)

我们正在处理19ms的往返时间,以及通过FerretDB Docker容器代理MongoDB的事实。让我们做一个快速的检查。在运行mongosh的终端中,发出以下命令:

use sample_mflix

show collections

test> use sample_mflix

switched to db sample_mflix

sample_mflix> show collections

comments

让我们快速计算行数:

db.comments.count()

4257

查看数据集的描述,我们可以看到这些行是否存在:

db.comments.findOne({ "name": "Andrea Le" })

{

_id: ObjectId("5a9427648b0beebeb6957ef5"),

name: 'Andrea Le',

email: 'andrea_le@fakegmail.com',

movie_id: ObjectId("573a1392f29313caabcda653"),

text: 'Odio expedita impedit sed provident at. Mollitia distinctio laborum optio earum voluptates recusandae ad. Voluptates quas et placeat atque.',

date: ISODate("1973-08-07T04:00:34.000Z")

}

示例数据集为304MB。我不确定记录总数,但我知道恢复需要一段时间。您的里程可能会有所不同,因此请继续。

2022-09-08T10:34:07.610-0400 sample_mflix.comments 2.17MB

2022-09-08T10:34:09.218-0400 sample_mflix.comments 2.17MB

2022-09-08T10:34:09.218-0400 finished restoring sample_mflix.comments (7000 documents, 0 failures)

2022-09-08T10:34:09.218-0400 Failed: sample_mflix.comments: error restoring from archive 'sampledata.archive': (InternalError) [pool.go:361 pgdb.(*Pool).InTransaction] read tcp 172.24.0.2:55922->54.208.245.52:26257: read: connection reset by peer

2022-09-08T10:34:09.219-0400 7000 document(s) restored successfully. 0 document(s) failed to restore.

好,让我暂停一下,只说几句话,忽略由于网络错误而中断的恢复:我感到震惊的是,首先,FerretDB无缝且毫不费力地代理MongoDB集合,并将其恢复到CockroachDB的边缘!我敢说,这是迄今为止我从事的最简单的迁移!

我想我在评论集里有足够的记录。让我们分别从数据集中恢复其他集合。

mongorestore --archive=sampledata.archive --nsInclude=sample_mflix.movies

mongorestore --archive=sampledata.archive --nsInclude=sample_mflix.sessions

mongorestore --archive=sampledata.archive --nsInclude=sample_mflix.theaters

mongorestore --archive=sampledata.archive --nsInclude=sample_mflix.users

幸运的是,其余的集合足够小,可以在短时间内加载。让我向您展示成功恢复的情况。

2022-09-08T14:53:57.086-0400 sample_mflix.users 28.9KB

2022-09-08T14:54:00.086-0400 sample_mflix.users 28.9KB

2022-09-08T14:54:01.347-0400 sample_mflix.users 28.9KB

2022-09-08T14:54:01.347-0400 finished restoring sample_mflix.users (185 documents, 0 failures)

2022-09-08T14:54:01.373-0400 restoring indexes for collection sample_mflix.users from metadata

2022-09-08T14:54:01.373-0400 index: &idx.IndexDocument{Options:primitive.M{"name":"email_1", "unique":true, "v":2}, Key:primitive.D{primitive.E{Key:"email", Value:1}}, PartialFilterExpression:primitive.D(nil)}

2022-09-08T14:54:01.376-0400 185 document(s) restored successfully. 0 document(s) failed to restore.

让我们将mongosh中的文档计数与CockroachDB中的计数进行比较。

sample_mflix> db.comments.count()

7340

sample_mflix> db.movies.count()

3599

sample_mflix> db.sessions.count()

1

sample_mflix> db.theaters.count()

1564

sample_mflix> db.users.count()

185

show tables;

schema_name | table_name | type | owner | estimated_row_count | locality

---------------+--------------------+-------+-------+---------------------+-----------

sample_mflix | _ferretdb_settings | table | artem | 1 | NULL

sample_mflix | comments_5886d2d7 | table | artem | 6027 | NULL

sample_mflix | movies_257fbbf4 | table | artem | 3599 | NULL

sample_mflix | sessions_130573cc | table | artem | 1 | NULL

sample_mflix | theaters_cf846063 | table | artem | 1493 | NULL

sample_mflix | users_5e7cc513 | table | artem | 185 | NULL

我不信任estimated_row_count字段。让我们快速浏览一下表计数。

select count(*) from sample_mflix.comments_5886d2d7;

count

---------

7340

select count(*) from sample_mflix.movies_257fbbf4;

count

---------

3599

select count(*) from sample_mflix.sessions_130573cc;

count

---------

1

select count(*) from sample_mflix.theaters_cf846063;

count

---------

1564

select count(*) from sample_mflix.users_5e7cc513;

count

---------

185

看起来一切都匹配。让我们检查模式。

SHOW CREATE TABLE sample_mflix.comments_5886d2d7;

table_name | create_statement

---------------------------------+----------------------------------------------------------------

sample_mflix.comments_5886d2d7 | CREATE TABLE sample_mflix.comments_5886d2d7 (

| _jsonb JSONB NULL,

| rowid INT8 NOT VISIBLE NOT NULL DEFAULT unique_rowid(),

| CONSTRAINT comments_5886d2d7_pkey PRIMARY KEY (rowid ASC)

让我们提取CREATETABLE语句并进行一些修改。

SELECT create_statement FROM [SHOW CREATE TABLE sample_mflix.comments_5886d2d7];

CREATE TABLE sample_mflix.comments_5886d2d7 (

_jsonb JSONB NULL,

rowid INT8 NOT VISIBLE NOT NULL DEFAULT unique_rowid(),

CONSTRAINT comments_5886d2d7_pkey PRIMARY KEY (rowid ASC)

)

我们看到一个rowid列,如果没有明确指定,它是CockroachDB中的主键,还有一个包含文档的_jsonb列。我们将用存储在JSON文档中的id替换rowid。我们将使用计算列来提取id。Mongo ObjectID看起来像一个散列。我们将把它传递给一个md5()函数。

select md5(_jsonb->>'_id') from sample_mflix.comments_5886d2d7 limit 5;

md5

------------------------------------

e15a7dcdd0e149effb3305129a924195

以下步骤是灵活CockroachDB模式模型的一部分,它是MongoDB无模式模型的补充。

考虑到这是一个多区域集群,在后台执行以下架构更改可能需要很长时间。为了加快这些更改,以下查询将系统数据库固定到本地区域,并使模式更改更快。

ALTER DATABASE system CONFIGURE ZONE USING constraints = '{"+region=aws-us-east-1": 1}', lease_preferences = '[[+region=aws-us-east-1]]';

向现有表中添加新列:

ALTER TABLE sample_mflix.comments_5886d2d7 ADD COLUMN id STRING NOT NULL AS (md5(_jsonb->>'_id')::STRING) VIRTUAL;

现在,我们可以将主键从内部行ID更改为新的计算列,但在此之前,我们先截断表。这不是必要的,但我们还是这样做吧,因为在将数据加载到CockroachDB时还有其他因素需要考虑。我将很快谈论它。

TRUNCATE TABLE sample_mflix.comments_5886d2d7;

让我们将主键从内部rowid列更改为新添加的列。

ALTER TABLE sample_mflix.comments_5886d2d7 ALTER PRIMARY KEY USING COLUMNS (id);

最后,让我们删除rowid列,因为我们不再需要它。

set sql_safe_updates = false;

ALTER TABLE sample_mflix.comments_5886d2d7 DROP COLUMN rowid;

set sql_safe_updates = true;

让我们再次查看模式:

SELECT create_statement FROM [SHOW CREATE TABLE sample_mflix.comments_5886d2d7];

create_statement

---------------------------------------------------------------------

CREATE TABLE sample_mflix.comments_5886d2d7 (

_jsonb JSONB NULL,

id STRING NOT NULL AS (md5(_jsonb->>'_id':::STRING)) VIRTUAL,

CONSTRAINT comments_5886d2d7_pkey PRIMARY KEY (id ASC)

)

我们返回了MongoDB中的行为,并引用了ObjectID。现在剩下的是恢复comments集合。

基于我们现在基于MongoDB ObjectID强制执行主键的事实,我们可以利用CockroachDB一致性并防止以下错误:

2022-09-01T14:28:11.291-0400 demux finishing when there are still outs (1)

2022-09-01T14:28:11.291-0400 Failed: sample_mflix.comments: error restoring from archive 'sampledata.archive': (InternalError) [pool.go:356 pgdb.(*Pool).InTransaction] [msg_insert.go:108 pg.(*Handler).insert.func1] ERROR: duplicate key value violates unique constraint "comments_5886d2d7_pkey" (SQLSTATE 23505)

2022-09-01T14:28:11.291-0400 0 document(s) restored successfully. 0 document(s) failed to restore.

我们有散列主键的事实也有助于负载分布。当您从空表开始时,您只有一个范围,所有读写请求都将命中该范围,从而创建热点。为了实现均匀分布和更好的性能,我们需要在多个范围内平衡负载。现在,表是空的,但它由一个范围支持。

SELECT start_key, end_key, range_id, range_size_mb FROM [SHOW RANGES FROM TABLE sample_mflix.comments_5886d2d7];

start_key | end_key | range_id | range_size_mb

------------+---------+----------+----------------

NULL | NULL | 85 | 0

让我们在重新加载数据之前预拆分表。由于我们的主键现在是一个基于md5的ObjectID哈希,因此我们可以预先划分为16个范围。

ALTER TABLE sample_mflix.comments_5886d2d7 SPLIT AT

SELECT

(

first_letter

|| md5(random()::STRING)

)::STRING

FROM

(

SELECT

CASE

WHEN i < 10 THEN i::STRING

WHEN i = 10 THEN 'a'

WHEN i = 11 THEN 'b'

WHEN i = 12 THEN 'c'

WHEN i = 13 THEN 'd'

WHEN i = 14 THEN 'e'

ELSE 'f'

END

AS first_letter

FROM

generate_series(0, 15) AS g (i)

);

下一个命令将在集群中扩展范围:

ALTER TABLE sample_mflix.comments_5886d2d7 SCATTER;

让我们看看新的范围:

SELECT start_key, end_key, range_id, range_size_mb FROM [SHOW RANGES FROM TABLE sample_mflix.comments_5886d2d7];

start_key | end_key | range_id | range_size_mb

---------------------------------------+--------------------------------------+----------+----------------

NULL | /"0d545f8e17e030f0c5181e1afc5de6224" | 85 | 0

/"0d545f8e17e030f0c5181e1afc5de6224" | /"1cab56eb5ce49a61e99ccf31c5ae19c67" | 86 | 0

/"1cab56eb5ce49a61e99ccf31c5ae19c67" | /"29f6b8beefa6573ec41470968e54bca42" | 106 | 0

/"29f6b8beefa6573ec41470968e54bca42" | /"33a54c6c02a9989a978aa8e5a5f7e9d73" | 107 | 0

/"33a54c6c02a9989a978aa8e5a5f7e9d73" | /"4618f2a8a2cc498d98943c6a19b9c6c7b" | 108 | 0

/"4618f2a8a2cc498d98943c6a19b9c6c7b" | /"5946ca647ea8270a37574c1a898056961" | 109 | 0

/"5946ca647ea8270a37574c1a898056961" | /"6f2112e4bdad3a2237cb00d50acd0e05e" | 125 | 0

/"6f2112e4bdad3a2237cb00d50acd0e05e" | /"793517eb63ea208ad2e5baea51c23ad05" | 126 | 0

/"793517eb63ea208ad2e5baea51c23ad05" | /"8d81118af05e64980c10e5a1625ea250a" | 127 | 0

/"8d81118af05e64980c10e5a1625ea250a" | /"9c293b5d497d94c029f34e288961415b9" | 128 | 0

/"9c293b5d497d94c029f34e288961415b9" | /"a497c26e97b1136fb0b7ae1174cf575e6" | 129 | 0

/"a497c26e97b1136fb0b7ae1174cf575e6" | /"b4fe34ea032cbded1b4004a9548ff1883" | 130 | 0

/"b4fe34ea032cbded1b4004a9548ff1883" | /"c420a9bf42e53d5c980fc18931bf1dc79" | 131 | 0

/"c420a9bf42e53d5c980fc18931bf1dc79" | /"d32739d1ed370496ccee3f23f87c36e01" | 132 | 0

/"d32739d1ed370496ccee3f23f87c36e01" | /"e587ad032ca91e63f1a72999acb6baec5" | 133 | 0

/"e587ad032ca91e63f1a72999acb6baec5" | /"fbb21b41dbeaf54b21a19f4b0b204195c" | 134 | 0

/"fbb21b41dbeaf54b21a19f4b0b204195c" | NULL | 135 | 0

我们现在可以将数据重新加载到comments表中,并查看是否有任何差异。

mongorestore --archive=sampledata.archive --nsInclude=sample_mflix.comments

我们可以重新运行show ranges命令以查看正在进行的拆分:

start_key | end_key | range_id | range_size_mb

---------------------------------------+--------------------------------------+----------+-------------------------

NULL | /"05544a6f1a9e2c370d61e80b08bfc9914" | 450 | 0.39971900000000000000

/"05544a6f1a9e2c370d61e80b08bfc9914" | /"145af2f7d21c2737757f48b85beaac411" | 382 | 1.0964470000000000000

/"145af2f7d21c2737757f48b85beaac411" | /"22f8086dd66f929f2a9f02213d8eb2880" | 383 | 1.0559140000000000000

/"22f8086dd66f929f2a9f02213d8eb2880" | /"340e8a0bbb4340a680a45b6f4d041f338" | 395 | 1.2896460000000000000

/"340e8a0bbb4340a680a45b6f4d041f338" | /"4cd3f28b0d95bcd3be3be03a47cc3e7bf" | 396 | 1.8605330000000000000

/"4cd3f28b0d95bcd3be3be03a47cc3e7bf" | /"500650bb144971c1138805e235a7275b3" | 397 | 0.26009000000000000000

/"500650bb144971c1138805e235a7275b3" | /"6777895c82eab8059a7bbbc3f0953fde3" | 398 | 1.7841590000000000000

/"6777895c82eab8059a7bbbc3f0953fde3" | /"73a828d7661f43b8696cf15103a061b06" | 401 | 0.85725000000000000000

/"73a828d7661f43b8696cf15103a061b06" | /"894c50ca8d93d101ae665c14427bf35ce" | 402 | 1.5699020000000000000

/"894c50ca8d93d101ae665c14427bf35ce" | /"9cdda5fe73ed53904d41ab67a47737a25" | 403 | 1.4688710000000000000

/"9cdda5fe73ed53904d41ab67a47737a25" | /"a94b701dd474c23494ca834eaf9ed8fb6" | 404 | 0.94120900000000000000

/"a94b701dd474c23494ca834eaf9ed8fb6" | /"bfb04e1b2ff37d4188c7722575504ca27" | 575 | 1.6290150000000000000

/"bfb04e1b2ff37d4188c7722575504ca27" | /"cfc85f2b55491a6a480552b135c26d34f" | 576 | 1.1618950000000000000

/"cfc85f2b55491a6a480552b135c26d34f" | /"db4f0c8dfad0c9c478e15b78ddc91834a" | 577 | 0.84471700000000000000

/"db4f0c8dfad0c9c478e15b78ddc91834a" | /"e8a406deefeff94205f867661bfbcd269" | 578 | 1.0015310000000000000

/"e8a406deefeff94205f867661bfbcd269" | /"f7a6e34db298c3e4204ddf43842e06cf1" | 579 | 1.1321730000000000000

/"f7a6e34db298c3e4204ddf43842e06cf1" | NULL | 580 | 0.60192500000000000000

数据在所有范围内都是平衡的,因此整体性能更好。我们仍然依赖于FerretDB的单个Docker实例,因此在您的环境中,您可以更好地使用承载FerretDB的实例。更好的是,因为CockroachDB可以扩展读写,所以可以并行运行多个FerretDB实例并加载数据。

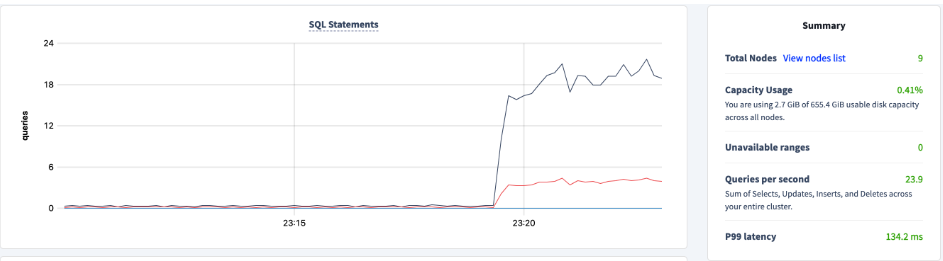

25 qps没有什么好写的,延迟也相当高。我发现以下标志–numInsertionWorkersPerCollection=50有助于提高mongorestore的性能。玩这个数字直到你达到一个很好的平衡。

mongorestore --archive=sampledata.archive --nsInclude=sample_mflix.comments --numInsertionWorkersPerCollection=100

如果在还原注释集合时碰巧收到以下错误:

2022-09-09T15:08:16.551-0400 sample_mflix.comments 6.79MB

2022-09-09T15:08:16.551-0400 finished restoring sample_mflix.comments (17017 documents, 0 failures)

2022-09-09T15:08:16.551-0400 demux finishing when there are still outs (22)

2022-09-09T15:08:16.551-0400 Failed: sample_mflix.comments: error restoring from archive 'sampledata.archive': (InternalError) [pool.go:288 pgdb.(*Pool).InTransaction] ERROR: restart transaction: TransactionRetryWithProtoRefreshError: TransactionAbortedError(ABORT_REASON_NEW_LEASE_PREVENTS_TXN): "sql txn" meta={id=cc2e0dc4 key=/Table/121/5/"2ee7d65280b51e55fb4701fdb5787ab1"/0 pri=0.02374602 epo=0 ts=1662749935.838933077,1 min=1662749935.635443487,0 seq=2} lock=true stat=PENDING rts=1662749935.838933077,1 wto=false gul=1662749935.885443487,0 (SQLSTATE 40001)

2022-09-09T15:08:16.551-0400 17017 document(s) restored successfully. 0 document(s) failed to restore.

这意味着该区域的租约已转移到另一个区域,CockroachDB重试了该交易。不幸的是,它迫使mongorestore退出。我们可以将租约锁定到本地区域并防止这种行为。

让我们看看当前的首选项是什么:

SHOW ZONE CONFIGURATION FROM TABLE sample_mflix.comments_5886d2d7;

target | raw_config_sql

--------------------+-----------------------------------------------

DATABASE ferretdb | ALTER DATABASE ferretdb CONFIGURE ZONE USING

| range_min_bytes = 134217728,

| range_max_bytes = 536870912,

| gc.ttlseconds = 600,

| num_replicas = 3,

| constraints = '[]',

| lease_preferences = '[]'

让我们使用CockroachDB多区域抽象来实现期望的结果:

ALTER DATABASE ferretdb PRIMARY REGION "aws-us-east-1";

ALTER DATABASE ferretdb ADD REGION "aws-us-east-2";

ALTER DATABASE ferretdb ADD REGION "aws-us-west-2";

SHOW ZONE CONFIGURATION FROM TABLE sample_mflix.comments_5886d2d7;

target | raw_config_sql

--------------------+------------------------------------------------------------------------------------------------------

DATABASE ferretdb | ALTER DATABASE ferretdb CONFIGURE ZONE USING

| range_min_bytes = 134217728,

| range_max_bytes = 536870912,

| gc.ttlseconds = 600,

| num_replicas = 5,

| num_voters = 3,

| constraints = '{+region=aws-us-east-1: 1, +region=aws-us-east-2: 1, +region=aws-us-west-2: 1}',

| voter_constraints = '[+region=aws-us-east-1]',

| lease_preferences = '[[+region=aws-us-east-1]]'

总之,我们现在保证在us-east-1区域有一个副本,us-east-1有一个lease_holder,负责协调读写操作。

考虑

在写这篇文章时,我很难完全恢复评论集。由于各种问题,如错误、移动租约、重复主键冲突和连接中断,评论集合恢复很可能会失败。在过去的两天里,我一直在与它斗争,但并没有丢失所有数据,已经加载的数据是可访问和可查询的。我从这个练习中得到的最难的教训是阅读文档。推动性能提升的最大影响是numInsertionWorkersPerCollection=100。我们没有推动足够的并行工作。我认为分开表有点帮助,但在这一点上,我认为这是转移注意力。也许我会尝试另一个负载而不分裂。lease_holder钉扎是必要的,因为它发生在启用–numInsertionWorkersPerCollection=100标志的恢复过程中。作为我的最后一次尝试,我已经打开了所有的调整,并达到了40044行。这是我所能做到的。在达到生产级之前,还有很多工作要做。

2022-09-09T15:49:34.466-0400 sample_mflix.comments 11.1MB

2022-09-09T15:49:36.940-0400 sample_mflix.comments 11.1MB

2022-09-09T15:49:36.940-0400 finished restoring sample_mflix.comments (33079 documents, 0 failures)

2022-09-09T15:49:36.940-0400 Failed: sample_mflix.comments: error restoring from archive 'sampledata.archive': (InternalError) [pool.go:288 pgdb.(*Pool).InTransaction] read tcp 172.29.0.2:52130->3.217.93.138:26257: read: connection reset by peer

2022-09-09T15:49:36.940-0400 33079 document(s) restored successfully. 0 document(s) failed to restore.

2022-09-09T15:49:36.940-0400 demux finishing when there are still outs (21)

这是最后一次恢复的输出,CockroachDB和mongosh中的计数对应。

sample_mflix> db.comments.count()

40044

count

---------

40044

结论

最后,我想指出,这是一个过于简化的示例,毫无疑问,我认为所有迁移都很容易。尽管如此,这只是一个实验,目前还没有工程工作在进行,以使 CockroachDB和FerretDB更好地工作,除非以下问题获得足够的投票。未来还有机会将FerretDB添加为MongoDB副本集,谁知道呢,也许我们可以通过所谓的在线迁移将数据移动到CockroachDB?此外,我还要重申:MongoDB和CockroachDB不是可比技术。这两种产品都有一个箱子。为工作选择最好的工具!我只是给你另一个选择。综上所述,我们还有很多路要走,但我喜欢这条路的方向!

原文标题:Migrating MongoDB Collections to CockroachDB With FerretDB

原文作者:Artem Ervits

原文链接:https://dzone.com/articles/migrating-mongodb-collections-to-cockroachdb-with