1 Deploying Spark Thrift

Download URL:

http://mirrors.hust.edu.cn/apache/spark/spark-2.1.0/spark-2.1.0-bin-hadoop2.6.tgz

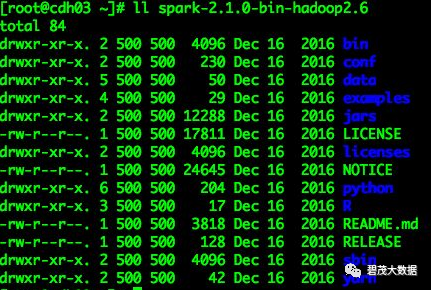

Extract the archive

[root@cdh03 ~]# tar -zxvf spark-2.1.0-bin-hadoop2.6.tgz

Copy the Hive and Thrift Server jars into the /opt/cloudera/parcels/SPARK2/lib/spark2/jars directory

Run these steps on every node in the cluster

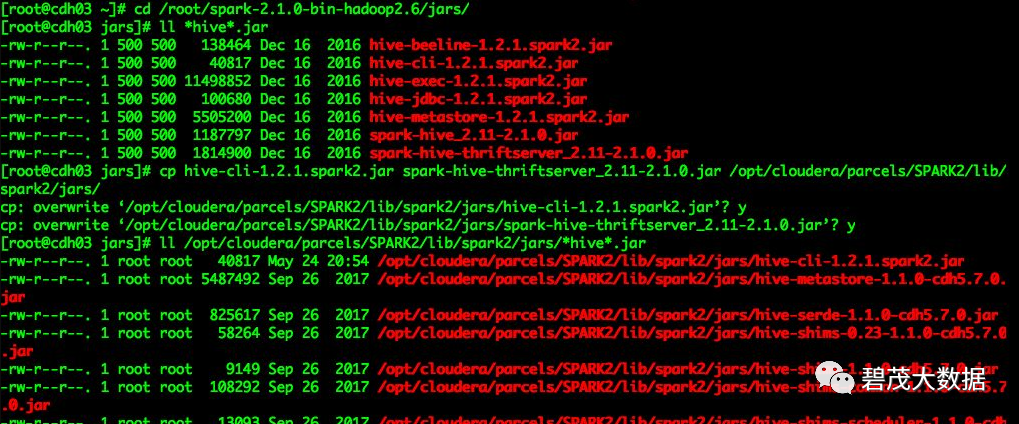

[root@cdh03 ~]# cd /root/spark-2.1.0-bin-hadoop2.6/jars/

[root@cdh03 jars]# ll *hive*.jar

[root@cdh03 jars]# cp hive-cli-1.2.1.spark2.jar spark-hive-thriftserver_2.11-2.1.0.jar /opt/cloudera/parcels/SPARK2/lib/spark2/jars/

[root@cdh03 jars]# ll /opt/cloudera/parcels/SPARK2/lib/spark2/jars/*hive*.jar
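Because the copy has to be repeated on every node, a small loop can save some typing. The following is only a sketch: it assumes passwordless SSH as root, and the host list in NODES is hypothetical (only cdh01 and cdh03 appear in this article), so replace it with your own nodes. With DRY_RUN=1, the default here, it prints the scp commands instead of running them.

```shell
#!/bin/sh
# Sketch: push the two Thrift Server jars to every node.
# NODES is a hypothetical host list; replace it with your own cluster nodes.
NODES="${NODES:-cdh01.fayson.com cdh02.fayson.com cdh03.fayson.com}"
SRC_DIR="${SRC_DIR:-/root/spark-2.1.0-bin-hadoop2.6/jars}"
DEST_DIR="${DEST_DIR:-/opt/cloudera/parcels/SPARK2/lib/spark2/jars}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands only, 0 = actually run scp

distribute_jars() {
    for node in $NODES; do
        for jar in hive-cli-1.2.1.spark2.jar spark-hive-thriftserver_2.11-2.1.0.jar; do
            if [ "$DRY_RUN" = "1" ]; then
                echo "scp $SRC_DIR/$jar $node:$DEST_DIR/"
            else
                scp "$SRC_DIR/$jar" "$node:$DEST_DIR/" || return 1
            fi
        done
    done
}

distribute_jars
```

Review the printed commands first, then rerun with DRY_RUN=0 once the host list is correct.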

Upload all jars in the /opt/cloudera/parcels/SPARK2/lib/spark2/jars directory to HDFS

[root@cdh03 jars]# kinit spark/admin

Password for spark/admin@FAYSON.COM:

[root@cdh03 jars]# cd /opt/cloudera/parcels/SPARK2/lib/spark2/jars/

[root@cdh03 jars]# hadoop fs -mkdir -p /user/spark/share/spark2-jars

[root@cdh03 jars]# hadoop fs -put *.jar /user/spark/share/spark2-jars

[root@cdh03 jars]# hadoop fs -ls /user/spark/share/spark2-jars

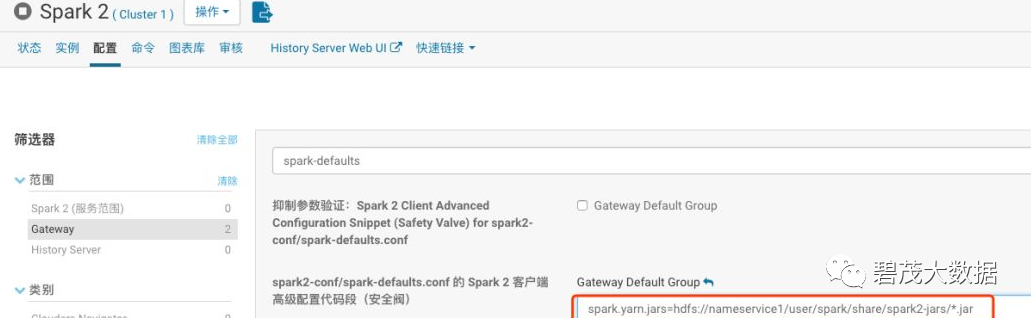

Update the Spark configuration so that it points at the jars uploaded to HDFS

spark.yarn.jars=hdfs://nameservice1/user/spark/share/spark2-jars/*.jar
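After changing the setting, it can be worth confirming that the value actually reached the client configuration. The helper below is a minimal sketch for reading one property from a spark-defaults.conf style file; the function name get_spark_conf is made up for illustration, and the path /etc/spark2/conf/spark-defaults.conf is an assumption about where the SPARK2 client configuration lives on your hosts.

```shell
#!/bin/sh
# Sketch: read a property from a spark-defaults.conf style file.
# CONF is an assumed location; adjust it to where your client configuration lives.
CONF="${CONF:-/etc/spark2/conf/spark-defaults.conf}"

get_spark_conf() {
    # $1 = property name, $2 = configuration file
    # Accepts both "key=value" and "key value"; prints the last value found.
    awk -v key="$1" '
        {
            line = $0
            sub(/^[ \t]+/, "", line)
            if (index(line, key) == 1) {
                rest = substr(line, length(key) + 1)
                if (rest ~ /^[ \t=]/) {
                    sub(/^[ \t=]+/, "", rest)
                    val = rest
                }
            }
        }
        END { if (val != "") print val }
    ' "$2"
}

if [ -f "$CONF" ]; then
    echo "spark.yarn.jars = $(get_spark_conf spark.yarn.jars "$CONF")"
else
    echo "$CONF not found; set CONF to your spark-defaults.conf" >&2
fi
```

An empty value means the property is not set in that file, in which case the configuration change probably has not been deployed to this host yet.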

Copy the Spark Thrift start and stop scripts

[root@cdh03 jars]# cd /root/spark-2.1.0-bin-hadoop2.6/sbin/

[root@cdh03 sbin]# ll *thrift*.sh

[root@cdh03 sbin]# cp *thrift*.sh /opt/cloudera/parcels/SPARK2/lib/spark2/sbin/

[root@cdh03 sbin]# ll /opt/cloudera/parcels/SPARK2/lib/spark2/sbin/*thriftserver*

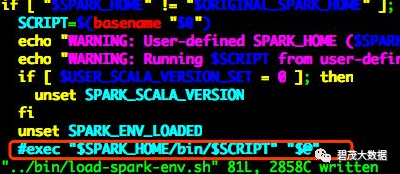

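Modify the load-spark-env.sh script

[root@cdh03 sbin]# cd /opt/cloudera/parcels/SPARK2/lib/spark2/bin

[root@cdh03 bin]# vim load-spark-env.sh

This script loads the environment that the Spark services depend on at startup.

Comment out the following line in it:

exec "$SPARK_HOME/bin/$SCRIPT" "$@"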
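If you prefer not to edit the file by hand on every node, the same change can be scripted. This is a minimal sketch, assuming the exec line appears in the file exactly as quoted in this section; the function name comment_out_exec is made up for illustration. It keeps a .bak copy next to the file and is safe to run twice, because an already commented line no longer matches.

```shell
#!/bin/sh
# Sketch: comment out the exec line in load-spark-env.sh, keeping a backup.
TARGET="${TARGET:-/opt/cloudera/parcels/SPARK2/lib/spark2/bin/load-spark-env.sh}"

comment_out_exec() {
    # $1 = path to load-spark-env.sh; a copy is saved as "$1.bak"
    cp "$1" "$1.bak" &&
    sed 's|^\([[:space:]]*\)\(exec "\$SPARK_HOME/bin/\$SCRIPT" "\$@"\)|\1# \2|' "$1.bak" > "$1"
}

if [ -f "$TARGET" ]; then
    comment_out_exec "$TARGET" && grep -n 'exec ' "$TARGET"
else
    echo "$TARGET not found; set TARGET to your load-spark-env.sh" >&2
fi
```

The final grep prints the affected line so you can confirm that it now starts with a # character.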

Deploy the Spark SQL client

[root@cdh03 ~]# cd spark-2.1.0-bin-hadoop2.6/bin/

[root@cdh03 bin]# pwd

[root@cdh03 bin]# cp /root/spark-2.1.0-bin-hadoop2.6/bin/spark-sql /opt/cloudera/parcels/SPARK2/lib/spark2/bin

[root@cdh03 bin]# ll /opt/cloudera/parcels/SPARK2/lib/spark2/bin/spark-sql

2 Starting and Stopping Spark Thrift

Create a Kerberos principal for the Thrift Server and export its keytab file (hive-cdh03.keytab)

[root@cdh01 ~]# kadmin.local

kadmin.local: addprinc -randkey hive/cdh03.fayson.com@FAYSON.COM

kadmin.local: xst -norandkey -k hive-cdh03.keytab hive/cdh03.fayson.com@FAYSON.COM

Inspect the exported keytab

[root@cdh01 ~]# klist -e -kt hive-cdh03.keytab

Copy hive-cdh03.keytab to the server that hosts the Spark 2.1 ThriftServer

[root@cdh01 ~]# scp hive-cdh03.keytab cdh03.fayson.com:/opt/cloudera/parcels/SPARK2/lib/spark2/sbin/

Start the Thrift Server

cd /opt/cloudera/parcels/SPARK2/lib/spark2/sbin

./start-thriftserver.sh --hiveconf hive.server2.authentication.kerberos.principal=hive/cdh03.fayson.com@FAYSON.COM \
--hiveconf hive.server2.authentication.kerberos.keytab=hive-cdh03.keytab \
--hiveconf hive.server2.thrift.port=10002 \
--hiveconf hive.server2.thrift.bind.host=0.0.0.0 \
--principal hive/cdh03.fayson.com@FAYSON.COM --keytab hive-cdh03.keytab
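The principal and keytab each appear twice in the start command, so it is easy for the copies to drift apart. One way to avoid that is a small wrapper that keeps them in variables. This is a sketch only; the THRIFT_* variable names and the two functions are made up for illustration, while the option values are the ones used in this section.

```shell
#!/bin/sh
# Sketch: keep the Thrift Server settings in one place.
THRIFT_SBIN="${THRIFT_SBIN:-/opt/cloudera/parcels/SPARK2/lib/spark2/sbin}"
THRIFT_PRINCIPAL="${THRIFT_PRINCIPAL:-hive/cdh03.fayson.com@FAYSON.COM}"
THRIFT_KEYTAB="${THRIFT_KEYTAB:-hive-cdh03.keytab}"
THRIFT_PORT="${THRIFT_PORT:-10002}"

thrift_args() {
    # Prints one argument per line; values must not contain whitespace.
    printf '%s\n' \
        --hiveconf "hive.server2.authentication.kerberos.principal=$THRIFT_PRINCIPAL" \
        --hiveconf "hive.server2.authentication.kerberos.keytab=$THRIFT_KEYTAB" \
        --hiveconf "hive.server2.thrift.port=$THRIFT_PORT" \
        --hiveconf "hive.server2.thrift.bind.host=0.0.0.0" \
        --principal "$THRIFT_PRINCIPAL" \
        --keytab "$THRIFT_KEYTAB"
}

# The body runs in a subshell so the caller's working directory is untouched.
start_thrift() (
    cd "$THRIFT_SBIN" || exit 1
    if [ ! -r "$THRIFT_KEYTAB" ]; then
        echo "keytab $THRIFT_KEYTAB is not readable in $THRIFT_SBIN" >&2
        exit 1
    fi
    # Unquoted on purpose: each printed line becomes one argument.
    ./start-thriftserver.sh $(thrift_args)
)

thrift_args   # shows the arguments; call start_thrift to actually launch
```

Running the script as written only prints the arguments. Call start_thrift on the Thrift Server host once the output looks right.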

Check that the port is listening

[root@cdh03 sbin]# netstat -apn |grep 10002

Stop the Spark ThriftServer

[root@cdh03 sbin]# ./stop-thriftserver.sh

3 Verification

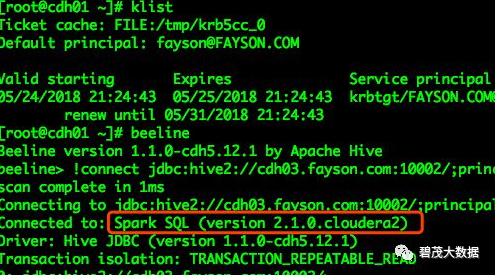

Test with beeline

[root@cdh01 ~]# kinit fayson

[root@cdh01 ~]# klist

[root@cdh01 ~]# beeline

beeline> !connect jdbc:hive2://cdh03.fayson.com:10002/;principal=hive/cdh03.fayson.com@FAYSON.COM
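For scripted checks, the same connection can be made without the interactive prompt by using beeline's -u (JDBC URL) and -e (statement) options. The sketch below assumes a valid ticket from kinit; the helper names build_jdbc_url and run_sql are made up for illustration.

```shell
#!/bin/sh
# Sketch: run one statement through beeline non-interactively.
build_jdbc_url() {
    # $1 = host, $2 = port, $3 = Kerberos principal of the Thrift Server
    printf 'jdbc:hive2://%s:%s/;principal=%s\n' "$1" "$2" "$3"
}

run_sql() {
    # $1 = SQL statement; requires a valid Kerberos ticket (kinit) beforehand
    beeline -u "$(build_jdbc_url cdh03.fayson.com 10002 hive/cdh03.fayson.com@FAYSON.COM)" -e "$1"
}

build_jdbc_url cdh03.fayson.com 10002 hive/cdh03.fayson.com@FAYSON.COM
# run_sql "show tables;"   # uncomment on a host where beeline and a ticket are available
```

Quoting the URL matters here, because an unquoted semicolon would be treated by the shell as a command separator.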

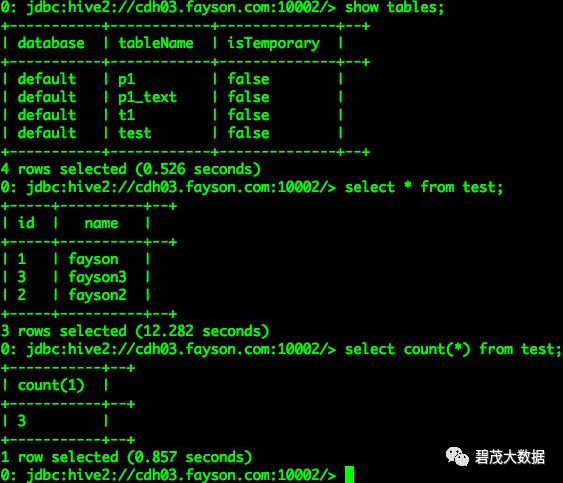

SQL test

0: jdbc:hive2://cdh03.fayson.com:10002/> show tables;

0: jdbc:hive2://cdh03.fayson.com:10002/> select * from test;

0: jdbc:hive2://cdh03.fayson.com:10002/> select count(*) from test;

0: jdbc:hive2://cdh03.fayson.com:10002/>

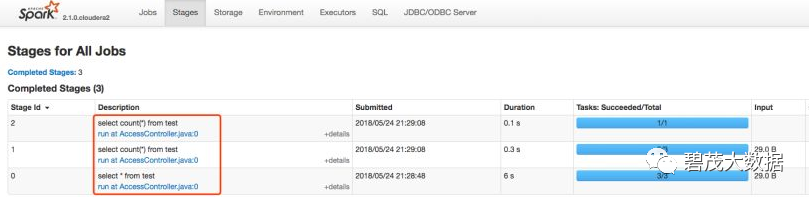

Check whether the SQL statements were executed through Spark

Verify with spark-sql

[root@cdh03 ~]# kinit fayson

[root@cdh03 ~]# /opt/cloudera/parcels/SPARK2/lib/spark2/bin/spark-sql


Reposted from 碧茂大数据.