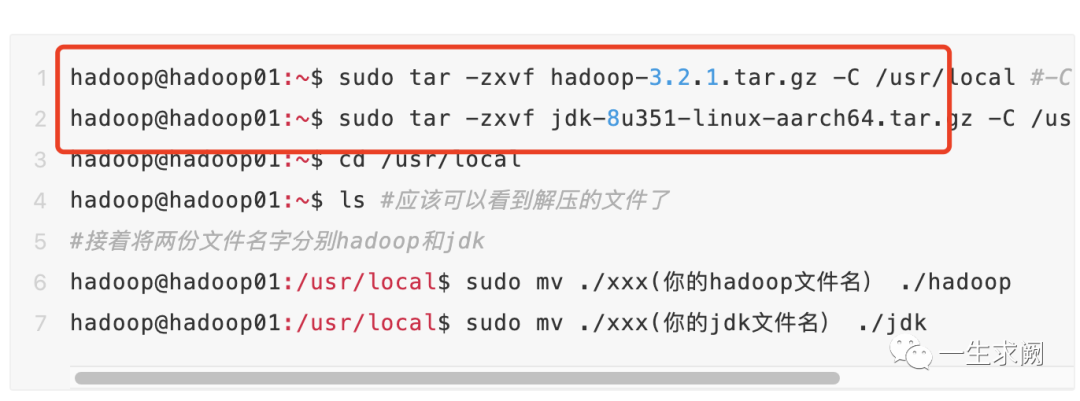

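First, a correction to an error in my earlier article on setting up the Hadoop cluster:

My installation packages are in the ~/Downloads folder, and the command I wrote there was wrong. It should actually be:

hadoop@hadoop01:~/Downloads$ sudo tar -zxvf hadoop-3.2.1.tar.gz -C /usr/local   # -C sets the directory to extract into
hadoop@hadoop01:~/Downloads$ sudo tar -zxvf jdk-8u351-linux-aarch64.tar.gz -C /usr/local

Now on to the main content: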

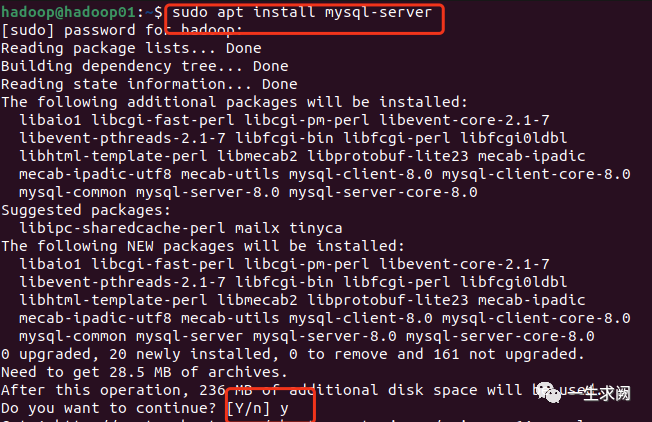

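1. Install MySQL

1.1 Start the hadoop01 node and open a terminal

hadoop@hadoop01:~$ sudo apt install mysql-server

This installs MySQL through apt, which by default picks the newest version available in your Ubuntu package repositories.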

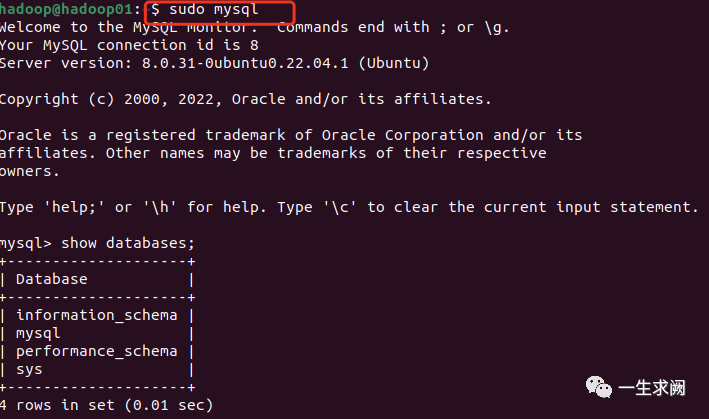

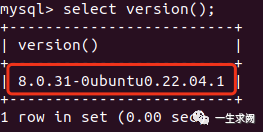

1.2 Enter MySQL with the command below. The version installed for me is mysql-8.0.31.

hadoop@hadoop01:~$ sudo mysql

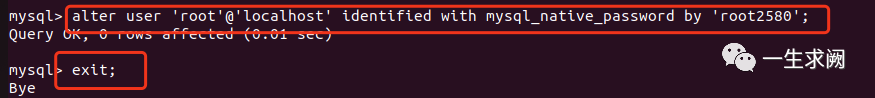

1.3 At the mysql prompt, enter the following statement to change the root password (choose your own password), then exit:

alter user 'root'@'localhost' identified with mysql_native_password by 'root2580';
exit;

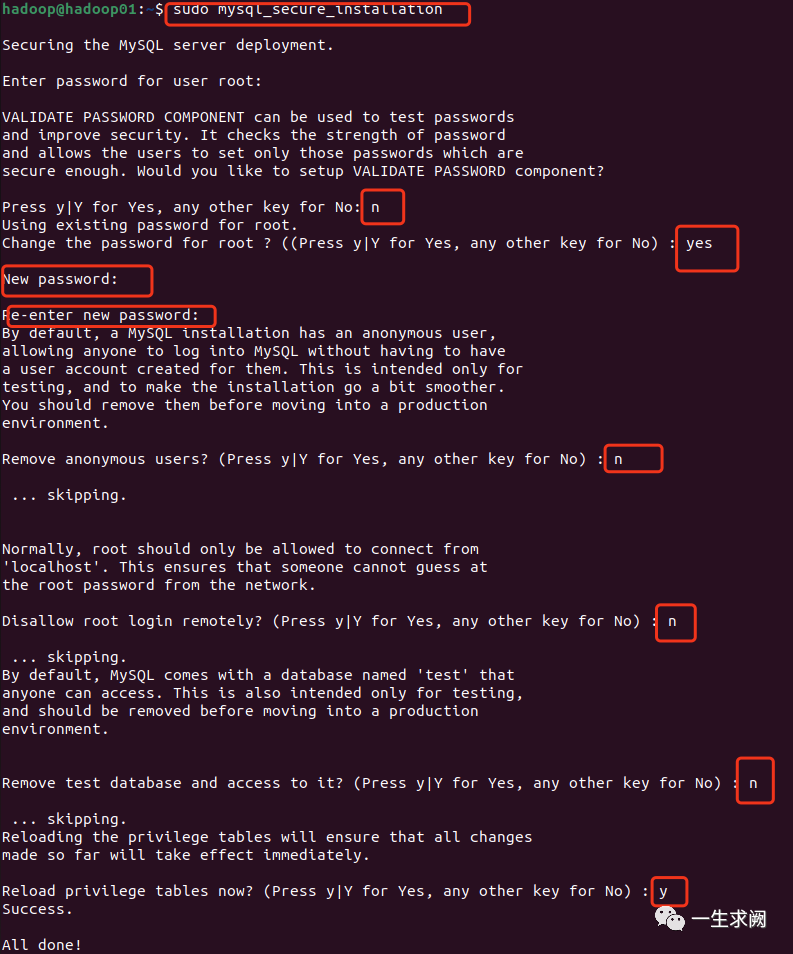

After exiting, run:

hadoop@hadoop01:~$ sudo mysql_secure_installation
VALIDATE PASSWORD COMPONENT... (enable the password-strength validation component?): n
Change the password for root ? : yes
New password: (enter your new password)
Re-enter password: (enter it again)
Remove anonymous users? : n
Disallow root login remotely? : n
Remove test database and access to it? : n
Reload privilege tables now? : y

When it finishes, MySQL is configured and the root password has been changed.

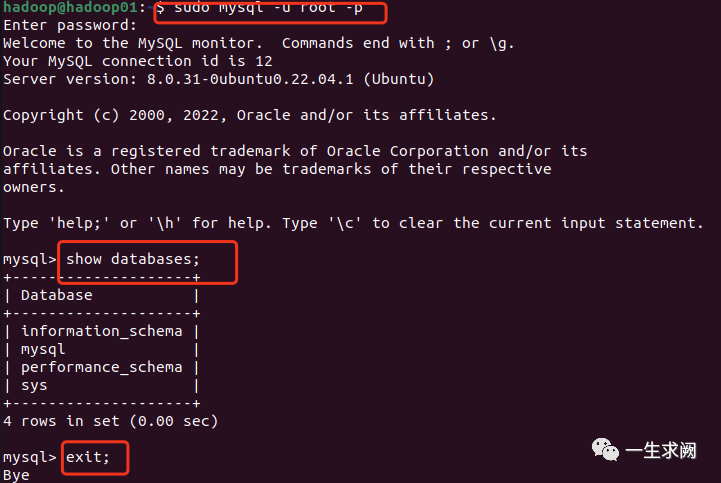

1.4 Log in to MySQL with the new password to check that it works

hadoop@hadoop01:~$ sudo mysql -u root -p

MySQL is now installed and tested.

2. Configure Hive

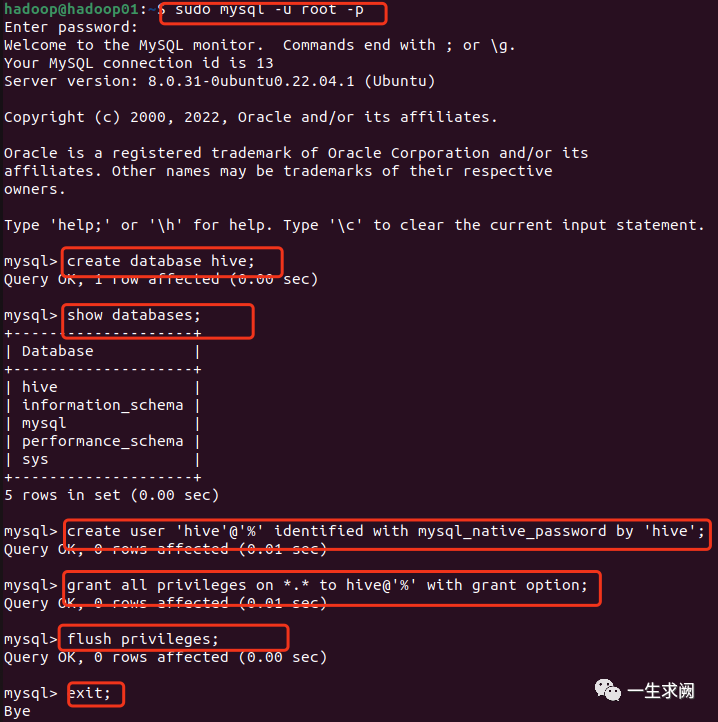

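2.1 First log in to MySQL, create the hive database, and create a hive user with a password

# Create the hive database
create database hive;
# Create the hive user and its password (choose your own password)
create user 'hive'@'%' identified with mysql_native_password by 'hive';
# Grant privileges
grant all privileges on *.* to hive@'%' with grant option;
# Reload the privilege tables
flush privileges;
# Exit
exit;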

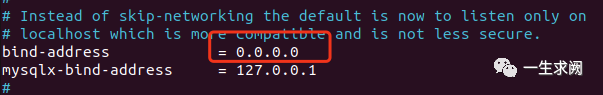

Edit /etc/mysql/mysql.conf.d/mysqld.cnf so that MySQL listens on all interfaces rather than only on localhost:

hadoop@hadoop01:~$ sudo vim /etc/mysql/mysql.conf.d/mysqld.cnf
bind-address = 0.0.0.0            # change 127.0.0.1 to 0.0.0.0
mysqlx-bind-address = 127.0.0.1
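If you would rather not edit the file in vim, sed can make the same change. Below is a minimal sketch. The function name set_mysql_bind_address is hypothetical, and the sketch assumes GNU sed and the stock Ubuntu layout, where bind-address starts its own line. Anchoring the pattern at the start of the line keeps mysqlx-bind-address unchanged.

```bash
# Hypothetical helper: rewrite the bind-address line of a mysqld.cnf file.
# Only lines that START with "bind-address" (after optional whitespace) match,
# so "mysqlx-bind-address = 127.0.0.1" is left untouched.
set_mysql_bind_address() {
  cnf_file=$1
  address=${2:-0.0.0.0}
  # -i edits in place (GNU sed); \1 keeps any leading indentation
  sed -i "s/^\([[:space:]]*\)bind-address[[:space:]]*=.*/\1bind-address = ${address}/" "$cnf_file"
}
```

The file belongs to root, so define and call the function in a root shell (sudo -i) as set_mysql_bind_address /etc/mysql/mysql.conf.d/mysqld.cnf. After MySQL is restarted, sudo ss -tlnp | grep 3306 should list 0.0.0.0:3306.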

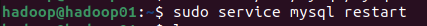

Restart the MySQL service:

hadoop@hadoop01:~$ sudo service mysql restart

2.2 Install Hive

Hive download page: https://dlcdn.apache.org/hive/

I installed hive-3.1.2. Pick whichever version you want to install.

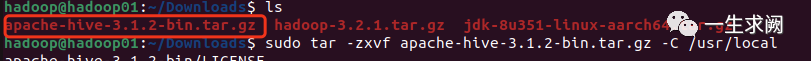

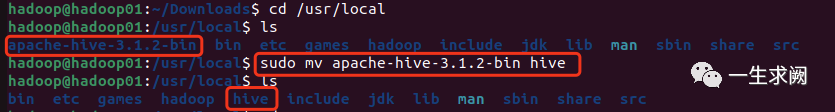

2.2.1 Extract the archive into the target directory and rename the folder to hive

hadoop@hadoop01:~/Downloads$ sudo tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /usr/local
hadoop@hadoop01:~/Downloads$ cd /usr/local
hadoop@hadoop01:/usr/local$ sudo mv apache-hive-3.1.2-bin hive
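One helper can handle both the extraction and the rename. This is a sketch with a hypothetical function name, install_tarball. It assumes the archive has a single top-level folder, which holds for apache-hive-3.1.2-bin.tar.gz. It also refuses relative destinations, which rules out the usr/local vs. /usr/local mistake corrected at the start of this post.

```bash
# Hypothetical helper: unpack a release tarball into DEST and rename its
# top-level folder (e.g. apache-hive-3.1.2-bin -> hive).
install_tarball() {
  archive=$1
  dest=$2
  name=$3
  case $dest in
    /*) ;;  # absolute path: fine
    *) echo "destination must be an absolute path: $dest" >&2; return 1 ;;
  esac
  if [ -e "$dest/$name" ]; then
    echo "$dest/$name already exists" >&2
    return 1
  fi
  # The first path component of the first archive entry is the top-level folder
  top=$(tar -tzf "$archive" | awk -F/ 'NR == 1 { print $1 }')
  tar -xzf "$archive" -C "$dest" || return 1
  mv "$dest/$top" "$dest/$name"
}
```

/usr/local is owned by root, so run this from a root shell and pass the archive's full path, for example install_tarball /home/hadoop/Downloads/apache-hive-3.1.2-bin.tar.gz /usr/local hive. The /home/hadoop home directory is an assumption.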

2.2.2 Configure environment variables

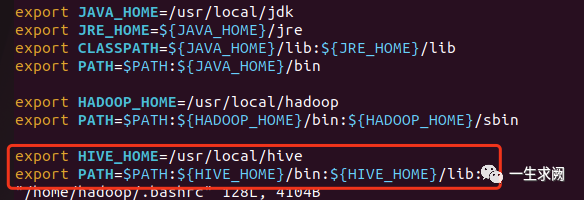

hadoop@hadoop01:~$ vim ~/.bashrc
Add:
export HIVE_HOME=/usr/local/hive   # your Hive installation path
export PATH=$PATH:${HIVE_HOME}/bin
Then:
hadoop@hadoop01:~$ source ~/.bashrc   # make the variables take effect
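Repeating this edit would leave duplicate lines in ~/.bashrc. Below is a minimal sketch of an idempotent version. The function name add_hive_env is hypothetical. It appends the two lines only when HIVE_HOME is not already exported in the file.

```bash
# Hypothetical helper: append the Hive environment variables to an rc file
# (normally ~/.bashrc) only if they are not there yet, so running it twice
# does not duplicate the lines.
add_hive_env() {
  rc_file=$1
  hive_home=${2:-/usr/local/hive}
  if ! grep -q '^export HIVE_HOME=' "$rc_file" 2>/dev/null; then
    {
      echo "export HIVE_HOME=$hive_home"
      # Single quotes keep $PATH and ${HIVE_HOME} literal in the rc file
      echo 'export PATH=$PATH:${HIVE_HOME}/bin'
    } >> "$rc_file"
  fi
}
```

Usage: add_hive_env ~/.bashrc && source ~/.bashrc. No sudo is needed because ~/.bashrc belongs to the hadoop user.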

2.2.3 Go to the ${HIVE_HOME}/conf directory (${HIVE_HOME} is your Hive installation path)

hadoop@hadoop01:~$ cd /usr/local/hive/conf

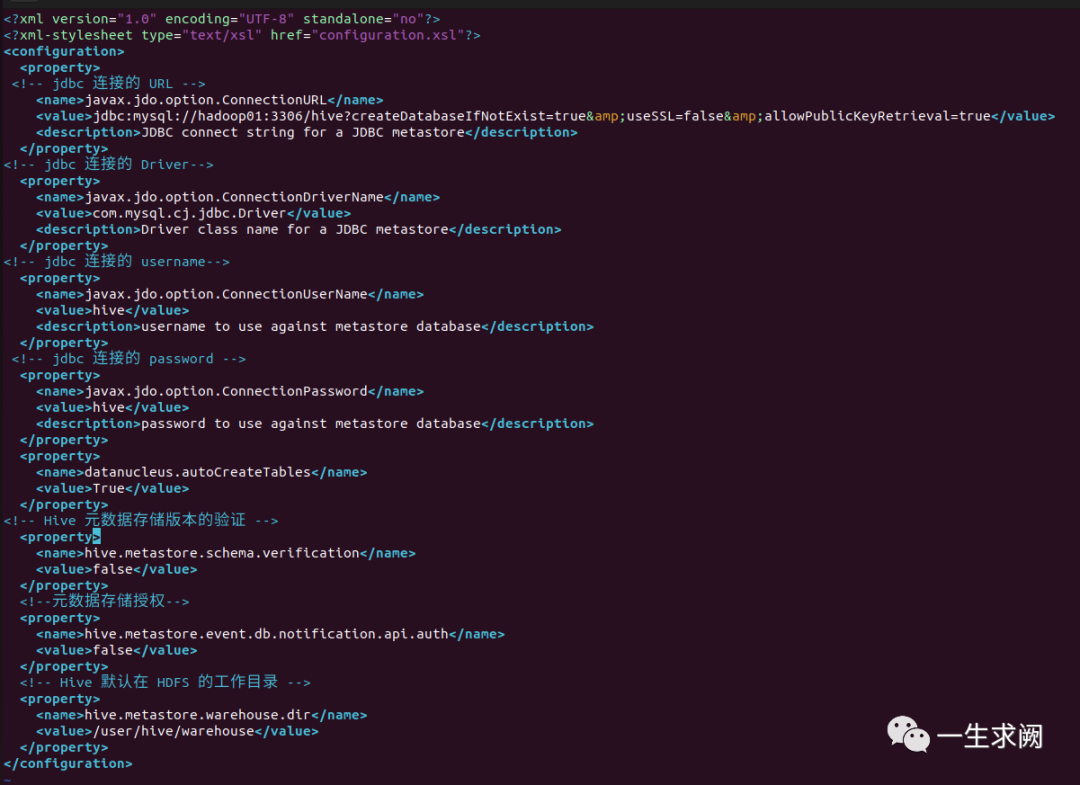

Create the hive-site.xml file with the following content:

hadoop@hadoop01:/usr/local/hive/conf$ sudo vim hive-site.xml
Add the following content and adjust the values to your own setup. Inside XML, each & separator in the JDBC URL must be written as &amp;, otherwise the file is not valid XML:
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <!-- JDBC connection URL -->
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://hadoop01:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false&amp;allowPublicKeyRetrieval=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <!-- JDBC driver -->
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <!-- JDBC username -->
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
    <description>username to use against metastore database</description>
  </property>
  <!-- JDBC password -->
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
    <description>password to use against metastore database</description>
  </property>
  <property>
    <name>datanucleus.autoCreateTables</name>
    <value>True</value>
  </property>
  <!-- Metastore schema version verification -->
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <!-- Metastore event notification API authorization -->
  <property>
    <name>hive.metastore.event.db.notification.api.auth</name>
    <value>false</value>
  </property>
  <!-- Hive's default warehouse directory on HDFS -->
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
  </property>
</configuration>
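If you generate hive-site.xml from a script instead of typing it, the & escaping is easy to get wrong. Below is a sketch of two hypothetical helpers, xml_escape and hive_property. Together they print one property element with its value escaped. Only &, < and > are handled, which covers values like the JDBC URL above.

```bash
# Hypothetical helper: escape the XML special characters &, < and >.
# & is replaced first so the entities added afterwards are not escaped again.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Hypothetical helper: hive_property NAME VALUE prints one <property> element
hive_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
    "$1" "$(xml_escape "$2")"
}
```

For example, hive_property hive.metastore.warehouse.dir /user/hive/warehouse prints the last property element of the file above.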

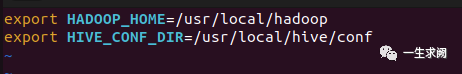

Create hive-env.sh and add the following content:

hadoop@hadoop01:/usr/local/hive/conf$ sudo vim hive-env.sh
Add:
export HADOOP_HOME=/usr/local/hadoop        # your Hadoop installation path
export HIVE_CONF_DIR=/usr/local/hive/conf   # your Hive conf directory
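You can also write these two lines non-interactively. Below is a sketch with a hypothetical write_hive_env function. Its defaults are the paths used in this post, so pass your own if yours differ. Note that it overwrites the target file.

```bash
# Hypothetical helper: write a hive-env.sh that exports HADOOP_HOME and
# HIVE_CONF_DIR. The unquoted EOF lets the shell variables expand.
write_hive_env() {
  target=$1
  hadoop_home=${2:-/usr/local/hadoop}
  hive_conf_dir=${3:-/usr/local/hive/conf}
  cat > "$target" <<EOF
export HADOOP_HOME=$hadoop_home
export HIVE_CONF_DIR=$hive_conf_dir
EOF
}
```

/usr/local/hive/conf belongs to root, so one option is write_hive_env /tmp/hive-env.sh && sudo mv /tmp/hive-env.sh /usr/local/hive/conf/hive-env.sh.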

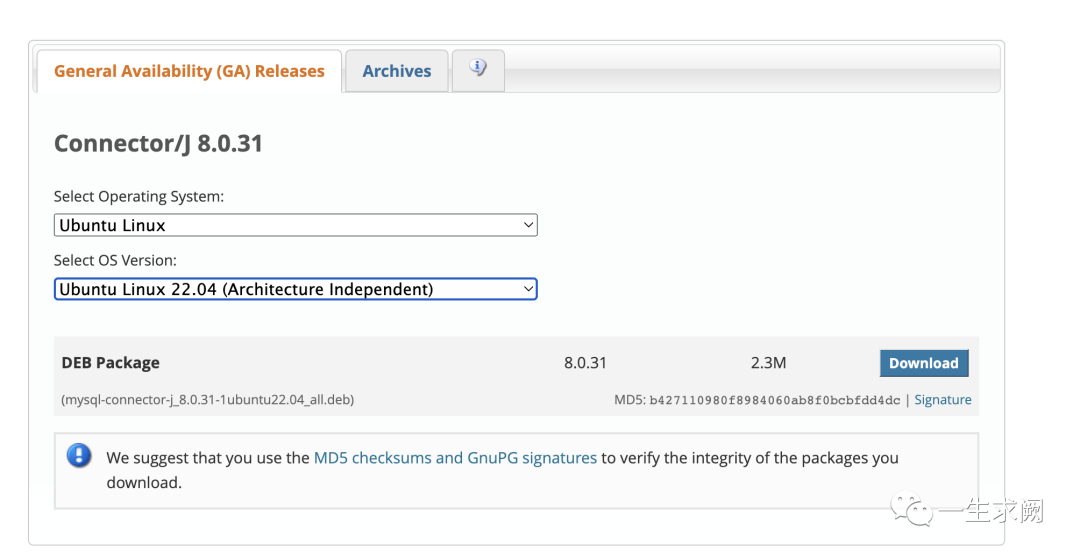

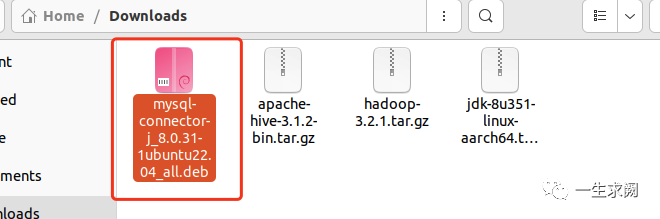

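2.2.4 Download and extract the MySQL JDBC driver jar, then move it into ${HIVE_HOME}/lib

MySQL Connector/J download page: https://dev.mysql.com/downloads/connector/j/

Choose the package that matches your OS and MySQL version.

If you download it with Ubuntu's built-in Firefox, it is saved to ~/Downloads by default.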

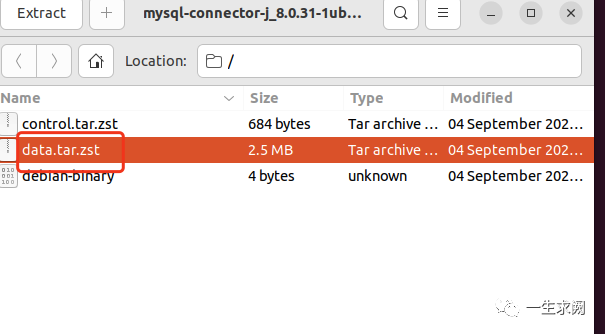

Open the package:

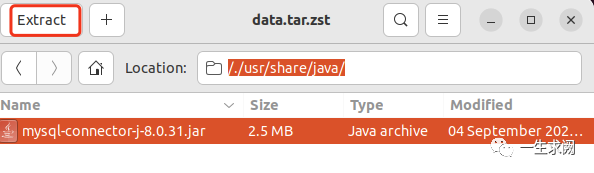

Go into the ./usr/share/java/ folder inside the package.

Click Extract to extract the jar into ~/Downloads.

Move the jar into ${HIVE_HOME}/lib:
hadoop@hadoop01:~/Downloads$ sudo mv mysql-connector-j-8.0.31.jar /usr/local/hive/lib
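Before initializing the schema, it is worth confirming that the driver actually landed in the lib directory. schematool needs the com.mysql.cj.jdbc.Driver class configured in hive-site.xml to be on Hive's classpath. Below is a sketch of a hypothetical check, has_mysql_connector. The jar name patterns are assumptions based on Connector/J naming: 8.0.31 ships mysql-connector-j-<version>.jar, while older releases used mysql-connector-java-<version>.jar.

```bash
# Hypothetical check: succeed only if a MySQL Connector/J jar sits in the
# given Hive lib directory (default /usr/local/hive/lib).
has_mysql_connector() {
  lib_dir=${1:-/usr/local/hive/lib}
  for jar in "$lib_dir"/mysql-connector-j-*.jar "$lib_dir"/mysql-connector-java-*.jar; do
    # An unmatched glob stays literal, so check that the file really exists
    if [ -f "$jar" ]; then
      echo "found: $jar"
      return 0
    fi
  done
  echo "no MySQL Connector/J jar in $lib_dir" >&2
  return 1
}
```

Usage: has_mysql_connector /usr/local/hive/lib.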

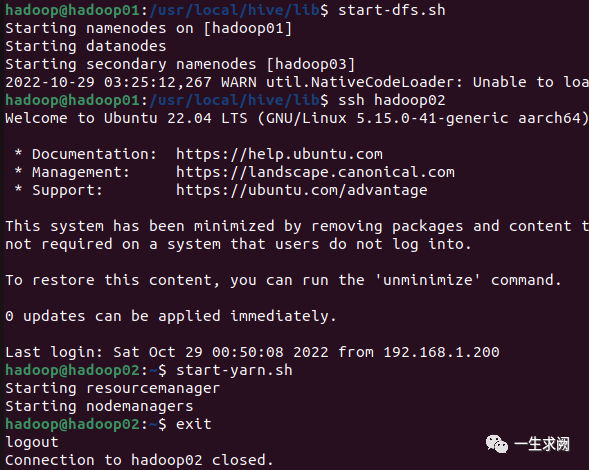

2.2.5 Start the Hadoop cluster (HDFS from hadoop01, then YARN from hadoop02)

hadoop@hadoop01:/usr/local/hive/lib$ start-dfs.sh
hadoop@hadoop01:/usr/local/hive/lib$ ssh hadoop02
hadoop@hadoop02:~$ start-yarn.sh
hadoop@hadoop02:~$ exit
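To check that the daemons came up, use jps, which ships with the JDK. It lists running Java processes as "<pid> <class name>". Below is a sketch of a hypothetical missing_daemons helper. It reads jps output on stdin and names any expected daemon that is absent. Which daemons run on which node depends on the cluster layout from the previous article, so the names in the usage line are assumptions.

```bash
# Hypothetical helper: read `jps` output on stdin ("<pid> <MainClass>" per
# line) and print each expected daemon that does not appear.
# Returns 0 when all are present, 1 otherwise.
missing_daemons() {
  jps_output=$(cat)
  missing=0
  for daemon in "$@"; do
    # Compare against the class-name column exactly
    if ! printf '%s\n' "$jps_output" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return $missing
}
```

For example, run jps | missing_daemons NameNode DataNode on hadoop01. After logging in to hadoop02, run jps | missing_daemons ResourceManager.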

2.2.6 Then switch to ${HIVE_HOME}/bin and initialize the Hive metastore schema

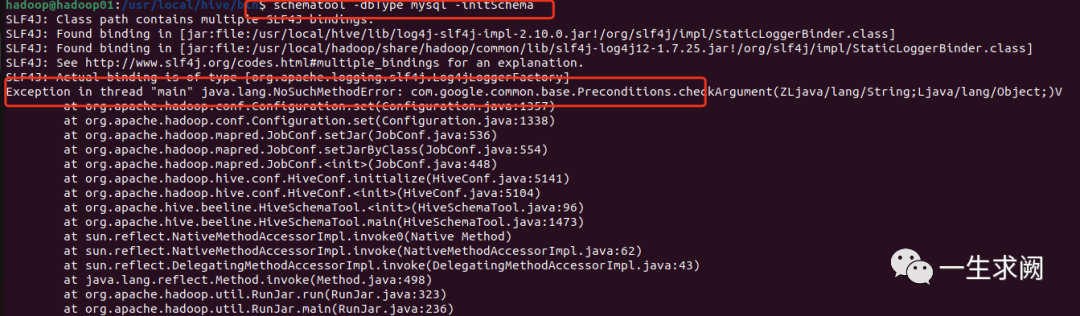

hadoop@hadoop01:/usr/local/hive/bin$ schematool -dbType mysql -initSchema

Running it produced an error.

The cause is that Hadoop and Hive bundle different versions of the Guava jar (guava-27.0-jre.jar vs. guava-19.0.jar here). The fix is as follows:

# First find the guava-xxx.jar in Hive's lib directory and delete it
hadoop@hadoop01:/usr/local/hive/bin$ cd /usr/local/hive/lib
hadoop@hadoop01:/usr/local/hive/lib$ sudo rm guava-19.0.jar
# Then find the guava-xxx.jar in Hadoop's lib directory and copy it into Hive's lib directory
hadoop@hadoop01:/usr/local/hive/lib$ cd /usr/local/hadoop/share/hadoop/common/lib
hadoop@hadoop01:/usr/local/hadoop/share/hadoop/common/lib$ sudo cp guava-27.0-jre.jar /usr/local/hive/lib
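You can script the same swap without hard-coding the two version numbers. Below is a sketch with a hypothetical sync_guava function. Its default paths are the ones used above, and it expects exactly one guava-*.jar in Hadoop's common/lib. Both directories are root-owned, so run it from a root shell.

```bash
# Hypothetical helper: replace Hive's bundled Guava with the jar Hadoop ships,
# so Hive and Hadoop load the same Guava version.
sync_guava() {
  hive_lib=${1:-/usr/local/hive/lib}
  hadoop_lib=${2:-/usr/local/hadoop/share/hadoop/common/lib}
  # Expand the glob into the positional parameters to count the matches
  set -- "$hadoop_lib"/guava-*.jar
  if [ ! -f "$1" ] || [ $# -ne 1 ]; then
    echo "expected exactly one guava-*.jar in $hadoop_lib" >&2
    return 1
  fi
  hadoop_guava=$1
  # Remove every Guava jar Hive bundles, then copy Hadoop's in
  rm -f "$hive_lib"/guava-*.jar
  cp "$hadoop_guava" "$hive_lib"/
}
```

Afterwards, ls /usr/local/hive/lib | grep guava should list only guava-27.0-jre.jar.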

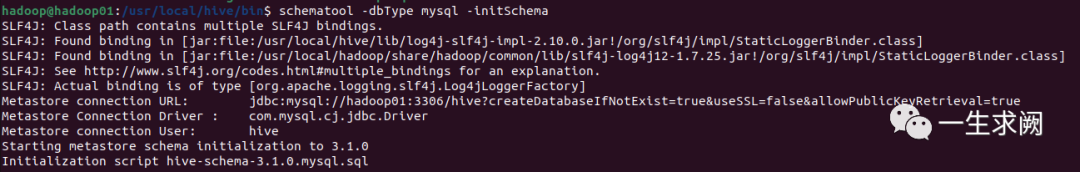

Then initialize Hive again:

hadoop@hadoop01:/usr/local/hive/bin$ schematool -dbType mysql -initSchema

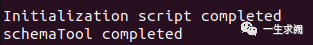

Initialization is complete.

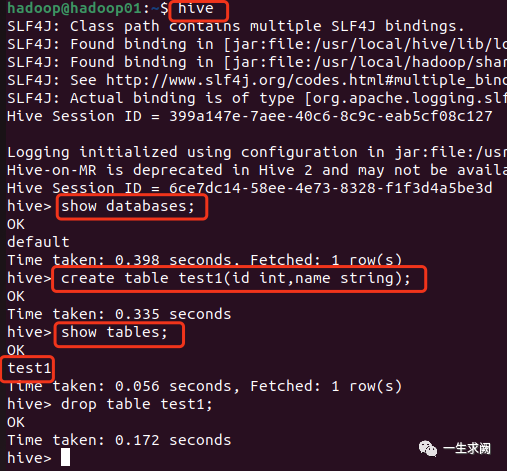

Start Hive and test it:

hadoop@hadoop01:~$ hive

And with that, Hive is installed and tested successfully!