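Amid the shift to cloud native, deploying stateful applications with an operator has become a common approach, and many databases already support it, including Oracle Database (23c is expected to introduce RAC on Kubernetes), MySQL, PostgreSQL, TiDB, OceanBase, StarRocks, Apache Doris, and PolarDB.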

0.1 Environment

Kubernetes v1.23.8;

mysql-operator 8.0.31-2.0.7;

mysql-server 8.0.31.

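0.2 Hosts

At least three Kubernetes worker nodes are recommended, plus one NFS server. This walkthrough uses NFS as dynamically provisioned storage for the MySQL data; local file storage or other backends such as Ceph would also work.

| No. | Address | Role | Notes |
|---|---|---|---|
| 1 | 192.168.80.18 | NFS server | 1 host |
| 2 | 192.168.80.[15-17] | K8s node | 3 hosts |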

0.3 Namespaces used

mysql-operator and mysql-cluster

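Four providers commonly offer a MySQL operator for deploying highly available MySQL clusters on Kubernetes:

Oracle MySQL Operator;

Percona MySQL Operator;

Bitpoke MySQL Operator;

GrdsCloud MySQL Operator.

This article uses the official Oracle MySQL Operator for the implementation and for basic verification.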

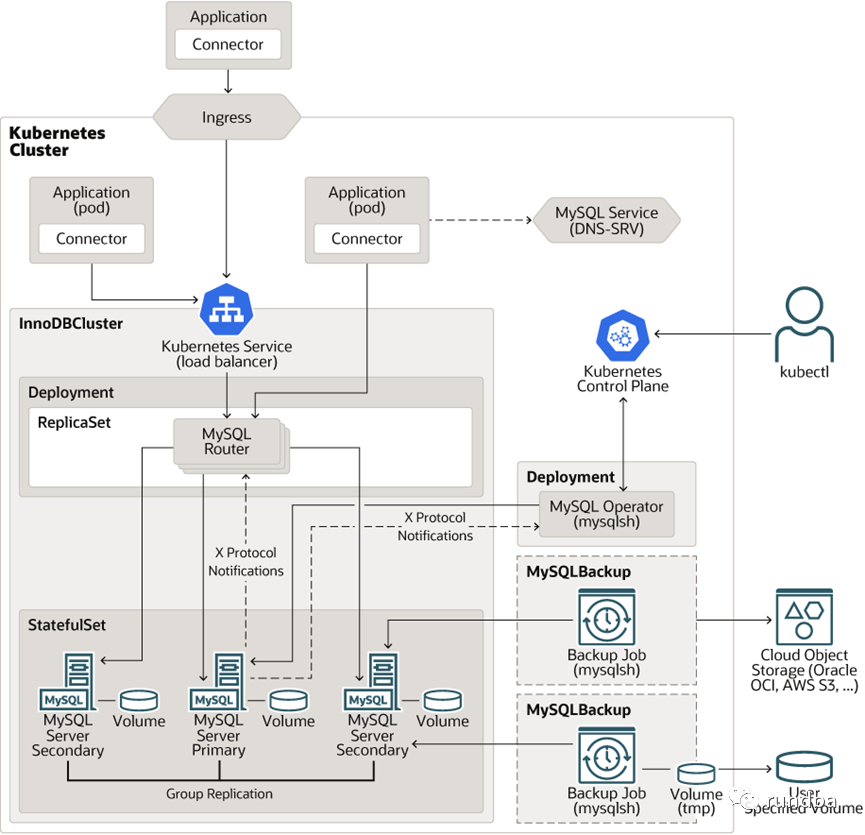

Figure 1: MySQL Operator for Kubernetes architecture

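MySQL Operator for Kubernetes

MySQL Operator for Kubernetes is an operator focused on managing one or more MySQL InnoDB Clusters, each consisting of a group of MySQL Servers and MySQL Routers. The Operator itself runs inside the Kubernetes cluster and is controlled by a Kubernetes Deployment, which keeps it available and running.

By default the Operator is deployed in the "mysql-operator" Kubernetes namespace and watches all InnoDB Clusters and related resources in the Kubernetes cluster.

To perform these tasks, the Operator subscribes to the Kubernetes API server for update events and connects to the managed MySQL Server instances as needed.

On top of the Kubernetes controllers, the Operator configures the MySQL servers, replication through MySQL Group Replication, and MySQL Router.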

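MySQL InnoDB Cluster

Once an InnoDBCluster resource is deployed to the Kubernetes API server, the Operator creates resources including:

A Kubernetes StatefulSet for the MySQL Server instances:

This manages the Pods and assigns the corresponding storage volumes. Each Pod managed by this StatefulSet runs multiple containers. Several of them perform a sequence of initialization steps that prepare the MySQL Server configuration and data directory; two containers then stay active for normal operation. One of these (named "mysql") runs MySQL Server itself, and the other is a Kubernetes sidecar that manages the node locally in coordination with the Operator.

A Kubernetes Deployment for the MySQL Routers:

MySQL Routers are stateless services that route the application to the current primary or to a replica, depending on the application's choice. The Operator can scale the number of routers up or down as the cluster's workload requires.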
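To see this Pod layout on a running cluster, the container names of one server Pod can be listed. The following is a minimal sketch, assuming a cluster named mycluster in the mysql-cluster namespace as used later in this article; the function name list_pod_containers is hypothetical and not part of the Operator.

```shell
# Hypothetical helper: print the init containers (first line) and the
# long-running containers (second line) of one Pod.
# Assumes kubectl is already configured for the target Kubernetes cluster.
list_pod_containers() {
  local pod="$1"
  local ns="${2:-mysql-cluster}"
  kubectl get pod "$pod" --namespace "$ns" \
    -o jsonpath='{.spec.initContainers[*].name}{"\n"}{.spec.containers[*].name}{"\n"}'
}
```

Calling `list_pod_containers mycluster-0` should show the initialization containers on the first line and the two containers that stay active on the second.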

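A MySQL InnoDB Cluster deployment creates these Kubernetes Services:

One Service carries the name of the InnoDB Cluster. It is the primary entry point for applications and sends incoming connections to MySQL Router. It provides a stable name of the form "{clustername}.svc.cluster.local" and exposes specific ports.

A second Service named "{clustername}-instances" provides stable names for the individual servers. These should normally not be used directly; use the main Service to reliably reach the current primary or a secondary as needed. For maintenance or monitoring, however, direct access to an instance may be required. MySQL Shell is installed on every instance Pod.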
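The per-instance names follow a fixed pattern, which matches the MEMBER_HOST values reported by Group Replication in the verification step of this article. A small sketch of that pattern; the function name instance_fqdn is hypothetical, and the cluster domain cluster.local is the Kubernetes default and assumed here.

```shell
# Build the stable DNS name of one server instance behind the
# "{clustername}-instances" Service.
# Assumption: the Kubernetes cluster domain is the default "cluster.local".
instance_fqdn() {
  local cluster="$1" index="$2" namespace="$3"
  printf '%s-%s.%s-instances.%s.svc.cluster.local\n' \
    "$cluster" "$index" "$cluster" "$namespace"
}

instance_fqdn mycluster 0 mysql-cluster
# prints mycluster-0.mycluster-instances.mysql-cluster.svc.cluster.local
```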

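MySQL Operator for Kubernetes also creates and manages further resources that should not be modified manually, including:

A Kubernetes ConfigMap named "{clustername}-initconf", which contains configuration information for the MySQL servers.

A series of Kubernetes Secrets holding credentials for different parts of the system; names include "{clustername}.backup", "{clustername}.privsecrets", and "{clustername}.router".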

MySQL Operator can be installed with Helm charts or with manifest files. By default it is deployed into the mysql-operator namespace.

2.1 Installing MySQL Operator with Helm charts (not used here)

Add the Helm repository:

    $> helm repo add mysql-operator https://mysql.github.io/mysql-operator/
    $> helm repo update

Install MySQL Operator for Kubernetes. This example creates a release named my-mysql-operator in the mysql-operator namespace:

    $> helm install my-mysql-operator mysql-operator/mysql-operator \
         --namespace mysql-operator --create-namespace

2.2 Installing MySQL Operator with manifest files (used here)

MySQL Operator for Kubernetes can be installed by applying the raw manifest files with kubectl.

1) First install the custom resource definitions (CRDs) used by MySQL Operator for Kubernetes:

    # Create a working directory
    mkdir -p /root/mysql && cd /root/mysql

    # Download deploy-crds.yaml
    wget https://raw.githubusercontent.com/mysql/mysql-operator/trunk/deploy/deploy-crds.yaml

    # Create the custom resource definitions
    [root@k8s3-master mysql]# kubectl apply -f deploy-crds.yaml
    customresourcedefinition.apiextensions.k8s.io/innodbclusters.mysql.oracle.com created
    customresourcedefinition.apiextensions.k8s.io/mysqlbackups.mysql.oracle.com created
    customresourcedefinition.apiextensions.k8s.io/clusterkopfpeerings.zalando.org created
    customresourcedefinition.apiextensions.k8s.io/kopfpeerings.zalando.org created

2) Next, deploy the Operator together with its RBAC definitions:

    # Download deploy-operator.yaml
    wget https://raw.githubusercontent.com/mysql/mysql-operator/trunk/deploy/deploy-operator.yaml

    # Create the RBAC objects and the Operator Deployment
    [root@k8s3-master mysql]# kubectl apply -f deploy-operator.yaml
    clusterrole.rbac.authorization.k8s.io/mysql-operator created
    clusterrole.rbac.authorization.k8s.io/mysql-sidecar created
    clusterrolebinding.rbac.authorization.k8s.io/mysql-operator-rolebinding created
    clusterkopfpeering.zalando.org/mysql-operator created
    namespace/mysql-operator created
    serviceaccount/mysql-operator-sa created
    deployment.apps/mysql-operator created
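Both manifests above are fetched from the trunk branch, so their content can change between runs. One way to make the download repeatable is to build the URL from an explicit Git ref. The sketch below is only an illustration: the function name manifest_url is hypothetical, and using a release tag such as 8.0.31-2.0.7 assumes that a tag with that name exists in the upstream repository, which should be checked first.

```shell
# Build the raw GitHub URL of an operator manifest for a given Git ref.
# "trunk" reproduces the URLs used above; any other ref (for example a
# release tag) is an assumption that must be verified upstream.
manifest_url() {
  local ref="$1" file="$2"
  printf 'https://raw.githubusercontent.com/mysql/mysql-operator/%s/deploy/%s\n' \
    "$ref" "$file"
}

manifest_url trunk deploy-crds.yaml
manifest_url trunk deploy-operator.yaml
```

Once a suitable ref is confirmed, the download becomes `wget "$(manifest_url <ref> deploy-crds.yaml)"`.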

3) Check the Operator

Verify that the Operator is running by checking the Deployment that manages it in the mysql-operator namespace (a configurable namespace defined in deploy-operator.yaml):

    # MySQL Operator for Kubernetes is ready when READY shows 1/1
    [root@k8s3-master mysql]# kubectl get deployment mysql-operator --namespace mysql-operator
    NAME             READY   UP-TO-DATE   AVAILABLE   AGE
    mysql-operator   1/1     1            1           55s
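In a script, the manual check above can be replaced by blocking until the Deployment has rolled out. A minimal sketch using kubectl rollout status; the function name wait_for_operator and the 120s default timeout are illustrative choices, not values from the Operator documentation.

```shell
# Hypothetical helper: block until the Operator Deployment is rolled out,
# or fail once the timeout expires.
wait_for_operator() {
  local ns="${1:-mysql-operator}"
  local timeout="${2:-120s}"
  kubectl rollout status deployment/mysql-operator \
    --namespace "$ns" --timeout="$timeout"
}
```

`wait_for_operator` returns a non-zero exit code if the rollout does not finish in time, so a deployment script can stop before it tries to create an InnoDBCluster.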

A MySQL InnoDB Cluster can likewise be deployed in two ways: with Helm or with kubectl.

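3.1 Create a namespace (optional)

By default the InnoDB Cluster is installed into the default namespace. To install it into a custom namespace, mysql-cluster, create that namespace in advance and make it the default namespace of the current kubectl context.

Create the mysql-cluster namespace:

    kubectl create namespace mysql-cluster

Set the default namespace of the current context to mysql-cluster:

    kubectl config set-context --current --namespace=mysql-cluster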
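The later kubectl commands in this article omit --namespace, so they depend on the context default set above. A small guard can confirm the setting before anything is created. This is a sketch; the function names current_namespace and require_namespace are hypothetical.

```shell
# Hypothetical helper: print the namespace of the current kubectl context
# (empty output means the "default" namespace is in effect).
current_namespace() {
  kubectl config view --minify --output 'jsonpath={..namespace}'
}

# Hypothetical guard: succeed only if the context points at the expected namespace.
require_namespace() {
  local expected="$1" actual
  actual="$(current_namespace)"
  if [ "$actual" != "$expected" ]; then
    echo "current namespace is '${actual:-default}', expected '$expected'" >&2
    return 1
  fi
}
```

Running `require_namespace mysql-cluster` at the top of a script avoids creating the Secret, PVCs, and cluster in the wrong namespace.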

3.2 Deploying a MySQL InnoDB Cluster with Helm (omitted; not used here)

See the official documentation:

https://dev.mysql.com/doc/mysql-operator/en/mysql-operator-innodbcluster-simple-helm.html

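3.3 Deploying a MySQL InnoDB Cluster with kubectl

1) Create the Secret

To create an InnoDB Cluster with kubectl, first create a Secret that holds the credentials of the new MySQL root user. In this example the Secret is named "mypwds":

    kubectl create secret generic mypwds \
      --from-literal=rootUser=root \
      --from-literal=rootHost=% \
      --from-literal=rootPassword="sakila"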

The Secret can also be created from a YAML file:

    cat > mypwds-secret.yaml << EOF
    apiVersion: v1
    kind: Secret
    metadata:
      name: mypwds
    stringData:
      rootUser: root
      rootHost: '%'
      rootPassword: "sakila"
    EOF

Create the Secret:

    kubectl apply -f mypwds-secret.yaml
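Whichever way the Secret is created, Kubernetes stores the values base64-encoded under .data, including those supplied through stringData. To confirm what the Operator will read, a single key can be decoded. A minimal sketch; the function name secret_value is hypothetical, and it reads from the namespace of the current context.

```shell
# Hypothetical helper: decode one key of a Secret in the current namespace.
# Values are stored base64-encoded under .data.
secret_value() {
  local name="$1" key="$2"
  kubectl get secret "$name" -o "jsonpath={.data.$key}" | base64 -d
}
```

For example, `secret_value mypwds rootUser` should print the user name stored in the Secret created above.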

2) Prepare storage

Before the MySQL InnoDB Cluster is deployed, the PVCs for its data must already exist; otherwise the InnoDB Cluster deployment fails. The PVC names must follow the pattern datadir-{clustername}-[0-9].

This walkthrough uses nfs-subdir-external-provisioner as an external dynamic provisioner for the MySQL data.

Install nfs-subdir-external-provisioner with Helm:

    helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
      --set image.repository=registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner \
      --set nfs.server=nfs158 \
      --set nfs.path=/vm/pv/dev-mysql-cluster \
      --set storageClass.name=nfs-client \
      --set nfs.volumeName=mycluster-nfs-provisioner \
      -n nfs-provisioner
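The helm install above refers to a chart repository alias and a namespace that the article does not show being created. The sketch below fills in those two pre-steps under stated assumptions: the chart is served from the kubernetes-sigs GitHub Pages repository, and the nfs-provisioner namespace does not exist yet. The function name prepare_nfs_provisioner is hypothetical.

```shell
# Hypothetical pre-steps for the helm install above.
# Assumptions: the chart repository URL below is the one in use, and the
# nfs-provisioner namespace has not been created yet.
prepare_nfs_provisioner() {
  helm repo add nfs-subdir-external-provisioner \
    https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/ &&
  helm repo update &&
  kubectl create namespace nfs-provisioner
}
```

If the namespace already exists, the last step fails and can be skipped.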

In the nfs-client StorageClass, create three dynamically provisioned PVCs named datadir-mycluster-0, datadir-mycluster-1, and datadir-mycluster-2. They hold the data of the one-primary, two-secondary MySQL group.

Create the PVC file:

    cat > pvc-mysql.yaml << EOF
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: datadir-mycluster-0
      namespace: mysql-cluster      # your namespace
    spec:
      storageClassName: nfs-client  # name of the StorageClass
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 5Gi
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: datadir-mycluster-1
      namespace: mysql-cluster      # your namespace
    spec:
      storageClassName: nfs-client
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 5Gi
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: datadir-mycluster-2
      namespace: mysql-cluster      # your namespace
    spec:
      storageClassName: nfs-client
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 5Gi
    EOF

Create the PVCs:

    [root@k8s3-master mysql]# kubectl apply -f pvc-mysql.yaml
    persistentvolumeclaim/datadir-mycluster-0 created
    persistentvolumeclaim/datadir-mycluster-1 created
    persistentvolumeclaim/datadir-mycluster-2 created

Make sure the StorageClass, PVs, and PVCs have all been created:

    [root@k8s3-master mysql]# kubectl get sc,pv,pvc
    NAME                                     PROVISIONER                                     RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    storageclass.storage.k8s.io/nfs-client   cluster.local/nfs-subdir-external-provisioner   Delete          Immediate           true                   131m

    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                               STORAGECLASS   REASON   AGE
    persistentvolume/pvc-80bbd59d-a860-47f3-bad7-ecfaa0caa718   5Gi        RWX            Delete           Bound    mysql-cluster/datadir-mycluster-1   nfs-client              12s
    persistentvolume/pvc-88569241-1490-4614-8019-1a7b1804c67f   5Gi        RWX            Delete           Bound    mysql-cluster/datadir-mycluster-2   nfs-client              12s
    persistentvolume/pvc-88eed9e1-504c-4a1a-965c-52a95cd1ce07   5Gi        RWX            Delete           Bound    mysql-cluster/datadir-mycluster-0   nfs-client              12s

    NAME                                        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    persistentvolumeclaim/datadir-mycluster-0   Bound    pvc-88eed9e1-504c-4a1a-965c-52a95cd1ce07   5Gi        RWX            nfs-client     12s
    persistentvolumeclaim/datadir-mycluster-1   Bound    pvc-80bbd59d-a860-47f3-bad7-ecfaa0caa718   5Gi        RWX            nfs-client     12s
    persistentvolumeclaim/datadir-mycluster-2   Bound    pvc-88569241-1490-4614-8019-1a7b1804c67f   5Gi        RWX            nfs-client     12s
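The three claims differ only in their index, so they can also be generated in a loop that follows the datadir-{clustername}-[0-9] naming rule. A minimal sketch using the names and sizes from this article; the function name gen_pvcs is hypothetical.

```shell
# Emit one PersistentVolumeClaim per server instance, named
# datadir-<cluster>-<index> to match the naming rule described above.
gen_pvcs() {
  local cluster="$1" count="$2" namespace="$3" storage_class="$4" size="$5"
  local i=0
  while [ "$i" -lt "$count" ]; do
    cat <<EOF
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: datadir-${cluster}-${i}
  namespace: ${namespace}
spec:
  storageClassName: ${storage_class}
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
EOF
    i=$((i + 1))
  done
}

gen_pvcs mycluster 3 mysql-cluster nfs-client 5Gi
```

Piping the output into `kubectl apply -f -` creates the same three claims as pvc-mysql.yaml, and changing the count keeps the claims in step with the instances value of the cluster.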

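3) Create the MySQL InnoDB Cluster

The InnoDBCluster definition in this example creates three MySQL Server instances and one MySQL Router instance.

Create the mycluster.yaml configuration file:

    cat > mycluster.yaml << EOF
    apiVersion: mysql.oracle.com/v2
    kind: InnoDBCluster
    metadata:
      name: mycluster
    spec:
      secretName: mypwds
      tlsUseSelfSigned: true
      instances: 3
      version: 8.0.31
      router:
        instances: 1
        version: 8.0.31
      # initDB.clone seeds the new cluster from an existing donor instance;
      # donorUrl must point at a reachable donor
      initDB:
        clone:
          donorUrl: mycluster-0.mycluster-instances.another.svc.cluster.local:3306
          rootUser: root
          secretKeyRef:
            name: mypwds
      # extra my.cnf settings applied to every server instance
      mycnf: |
        [mysqld]
        max_connections=162
    EOF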

Apply the manifest to create this simple MySQL InnoDB Cluster:

    [root@k8s3-master mysql]# kubectl apply -f mycluster.yaml
    innodbcluster.mysql.oracle.com/mycluster created

Watch the progress through the innodbcluster resource type:

    [root@k8s3-master mysql]# kubectl get innodbcluster --watch
    NAME        STATUS           ONLINE   INSTANCES   ROUTERS   AGE
    mycluster   PENDING          0        3           1         6s
    ...
    mycluster   INITIALIZING     0        3           1         3m37s
    mycluster   ONLINE_PARTIAL   1        3           1         3m37s
    ...
    mycluster   ONLINE_PARTIAL   2        3           1         3m50s
    mycluster   ONLINE_PARTIAL   2        3           1         19m
    mycluster   ONLINE           2        3           1         37m
    mycluster   ONLINE           3        3           1         37m    # finally all 3 instances and the 1 router are ONLINE

Query the status once:

    [root@k8s3-master mysql]# kubectl get innodbcluster
    NAME        STATUS   ONLINE   INSTANCES   ROUTERS   AGE
    mycluster   ONLINE   3        3           1         42m
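The watch output above shows that STATUS can already read ONLINE while only two of the three instances have joined, so a script should compare the ONLINE and INSTANCES columns as well. A minimal sketch that parses the tabular output; the function name all_clusters_online is hypothetical, and the column positions are taken from the output shown in this article.

```shell
# Read `kubectl get innodbcluster` output on stdin and succeed only if every
# listed cluster reports STATUS ONLINE with ONLINE equal to INSTANCES.
# Columns: NAME STATUS ONLINE INSTANCES ROUTERS AGE
all_clusters_online() {
  awk '
    NR == 1 { next }                        # skip the header row
    { seen = 1 }                            # at least one cluster was listed
    $2 != "ONLINE" || $3 != $4 { bad = 1 }  # not fully online yet
    END { exit (seen && !bad) ? 0 : 1 }
  '
}
```

Usage: `kubectl get innodbcluster | all_clusters_online && echo "cluster ready"`.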

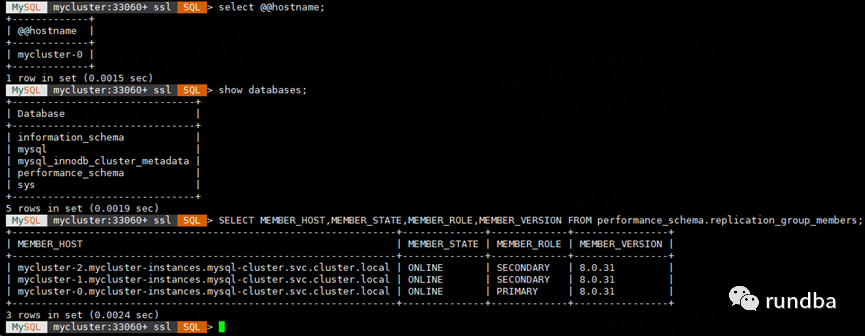

This example connects with MySQL Shell and displays the host name:

    [root@k8s3-master ~]# kubectl run --rm -it myshell --image=mysql/mysql-operator -- mysqlsh root@mycluster --sql
    If you don't see a command prompt, try pressing enter.
    Please provide the password for 'root@mycluster': ******    # enter the root password set in the Secret: sakila
    MySQL Shell 8.0.25-operator
    Copyright (c) 2016, 2021, Oracle and/or its affiliates.
    Oracle is a registered trademark of Oracle Corporation and/or its affiliates.
    Other names may be trademarks of their respective owners.
    Type '\help' or '\?' for help; '\quit' to exit.
    Creating a session to 'root@mycluster'
    Fetching schema names for autocompletion... Press ^C to stop.
    Your MySQL connection id is 104623 (X protocol)
    Server version: 8.0.31 MySQL Community Server - GPL
    No default schema selected; type \use <schema> to set one.

    # Query the current host name
    MySQL mycluster:33060+ ssl SQL > select @@hostname;
    +-------------+
    | @@hostname  |
    +-------------+
    | mycluster-0 |
    +-------------+
    1 row in set (0.0012 sec)

This shows a successful connection that was routed to the mycluster-0 Pod in the MySQL InnoDB Cluster.

    # List the databases
    MySQL mycluster:33060+ ssl SQL > show databases;
    +-------------------------------+
    | Database                      |
    +-------------------------------+
    | information_schema            |
    | mysql                         |
    | mysql_innodb_cluster_metadata |
    | performance_schema            |
    | sys                           |
    +-------------------------------+
    5 rows in set (0.0022 sec)

    # Check the state of the Group Replication (MGR) members
    MySQL mycluster:33060+ ssl SQL > SELECT MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION FROM performance_schema.replication_group_members;
    +-----------------------------------------------------------------+--------------+-------------+----------------+
    | MEMBER_HOST                                                     | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |
    +-----------------------------------------------------------------+--------------+-------------+----------------+
    | mycluster-2.mycluster-instances.mysql-cluster.svc.cluster.local | ONLINE       | SECONDARY   | 8.0.31         |
    | mycluster-1.mycluster-instances.mysql-cluster.svc.cluster.local | ONLINE       | SECONDARY   | 8.0.31         |
    | mycluster-0.mycluster-instances.mysql-cluster.svc.cluster.local | ONLINE       | PRIMARY     | 8.0.31         |
    +-----------------------------------------------------------------+--------------+-------------+----------------+
    3 rows in set (0.0023 sec)
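For a scripted check, the current primary can be extracted from a saved copy of the result table above. A minimal sketch that assumes the boxed, pipe-delimited layout and the four-column query shown in this article; the function name find_primary is hypothetical. If the client prints a different layout, for example tab-separated output in batch mode, the field separator needs adjusting.

```shell
# Read the boxed result table of the replication_group_members query on stdin
# and print the MEMBER_HOST of the member whose MEMBER_ROLE is PRIMARY.
# With "|" as separator: $2 = MEMBER_HOST, $3 = MEMBER_STATE, $4 = MEMBER_ROLE.
find_primary() {
  awk -F'|' '$4 ~ /PRIMARY/ { gsub(/[[:space:]]/, "", $2); print $2 }'
}
```

Feeding the table above into `find_primary` prints the mycluster-0 host name, the same instance that answered the @@hostname query through the router.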

Figure 2: Query results
