
Preface

Environment Dependencies

Hadoop Authentication Configuration

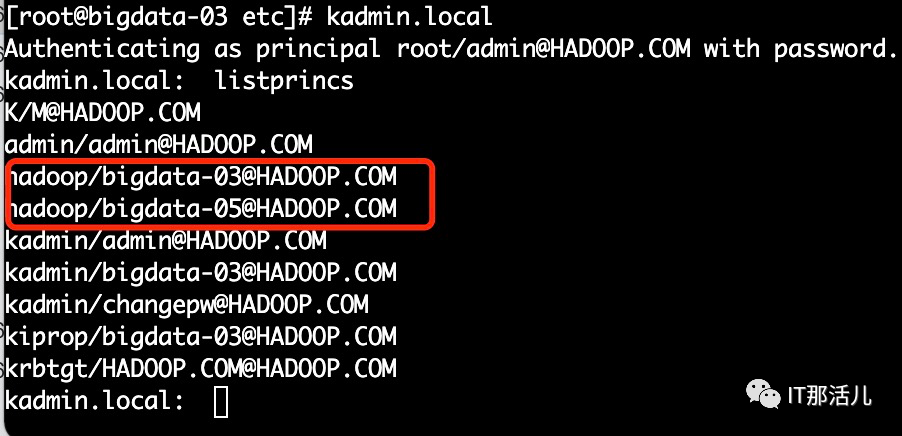

kadmin.local -q "addprinc -randkey hadoop/bigdata-03@HADOOP.COM"

kadmin.local -q "addprinc -randkey hadoop/bigdata-05@HADOOP.COM"

kadmin.local -q "xst -k /root/keytabs/kerberos/hadoop.keytab hadoop/bigdata-03@HADOOP.COM"

kadmin.local -q "xst -k /root/keytabs/kerberos/hadoop.keytab hadoop/bigdata-05@HADOOP.COM"

klist -kt /root/keytabs/kerberos/hadoop.keytab

klist -kt /home/gpadmin/hadoop.keytab
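The kadmin.local commands above follow one pattern per host: create a service principal with a random key, then export it into a shared keytab. As a minimal sketch, the loop below prints those commands for a list of hosts instead of executing them, so the output can be reviewed before it is run on the KDC. The function name print_principal_cmds and the variables REALM and KEYTAB are illustrative; their values are copied from the commands above.

```shell
#!/bin/sh
# Dry run: print the kadmin.local commands for each host, do not execute them.
# REALM and KEYTAB are taken from the commands shown in this article.
REALM="HADOOP.COM"
KEYTAB="/root/keytabs/kerberos/hadoop.keytab"

# print_principal_cmds HOST...
# Emits one addprinc and one xst (keytab export) command per host.
print_principal_cmds() {
  for host in "$@"; do
    principal="hadoop/${host}@${REALM}"
    printf 'kadmin.local -q "addprinc -randkey %s"\n' "$principal"
    printf 'kadmin.local -q "xst -k %s %s"\n' "$KEYTAB" "$principal"
  done
}

print_principal_cmds bigdata-03 bigdata-05
```

The configuration that follows reads the keytab from /home/gpadmin/hadoop.keytab, so the exported file presumably has to be copied to that path on every node and made readable by the user that runs Hadoop.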

<property>

<name>hadoop.security.authentication</name>

<value>kerberos</value>

</property>

<property>

<name>hadoop.security.authorization</name>

<value>true</value>

</property>

<!-- Require Kerberos-backed authentication (block access tokens) when accessing DataNode blocks -->

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- Kerberos principal of the NameNode service; _HOST is automatically resolved to the hostname of the machine running the service -->

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>hadoop/_HOST@EXAMPLE.COM</value>

</property>

<!-- Path to the Kerberos keytab file of the NameNode service -->

<property>

<name>dfs.namenode.keytab.file</name>

<value>/home/gpadmin/hadoop.keytab</value>

</property>

<!-- Kerberos principal of the Secondary NameNode service -->

<property>

<name>dfs.secondary.namenode.kerberos.principal</name>

<value>hadoop/_HOST@EXAMPLE.COM</value>

</property>

<!-- Path to the Kerberos keytab file of the Secondary NameNode service -->

<property>

<name>dfs.secondary.namenode.keytab.file</name>

<value>/home/gpadmin/hadoop.keytab</value>

</property>

<!-- Kerberos principal of the WebHDFS REST service -->

<property>

<name>dfs.web.authentication.kerberos.principal</name>

<value>hadoop/_HOST@EXAMPLE.COM</value>

</property>

<!-- Path to the Kerberos keytab file of the Hadoop Web UI -->

<property>

<name>dfs.web.authentication.kerberos.keytab</name>

<value>/home/gpadmin/hadoop.keytab</value>

</property>

<!-- Kerberos principal of the DataNode service -->

<property>

<name>dfs.datanode.kerberos.principal</name>

<value>hadoop/_HOST@EXAMPLE.COM</value>

</property>

<!-- Path to the Kerberos keytab file of the DataNode service -->

<property>

<name>dfs.datanode.keytab.file</name>

<value>/home/gpadmin/hadoop.keytab</value>

</property>

<!-- Set the DataNode data transfer protection policy to authentication only -->

<property>

<name>dfs.data.transfer.protection</name>

<value>authentication</value>

</property>

<!-- Use the HTTPS protocol -->

<property>

<name>dfs.http.policy</name>

<value>HTTPS_ONLY</value>

<description>All enabled web pages use HTTPS; the details are configured in the SSL server and client configuration files</description>

</property>

<!-- Kerberos principal of the ResourceManager service -->

<property>

<name>yarn.resourcemanager.principal</name>

<value>hadoop/_HOST@EXAMPLE.COM</value>

</property>

<!-- Kerberos keytab file of the ResourceManager service -->

<property>

<name>yarn.resourcemanager.keytab</name>

<value>/home/gpadmin/hadoop.keytab</value>

</property>

<!-- Kerberos principal of the NodeManager service -->

<property>

<name>yarn.nodemanager.principal</name>

<value>hadoop/_HOST@EXAMPLE.COM</value>

</property>

<!-- Kerberos keytab file of the NodeManager service -->

<property>

<name>yarn.nodemanager.keytab</name>

<value>/home/gpadmin/hadoop.keytab</value>

</property>

<!-- Kerberos principal of the JobHistory Server -->

<property>

<name>mapreduce.jobhistory.principal</name>

<value>hadoop/_HOST@EXAMPLE.COM</value>

</property>

<!-- Kerberos keytab file of the JobHistory Server -->

<property>

<name>mapreduce.jobhistory.keytab</name>

<value>/home/gpadmin/hadoop.keytab</value>

</property>
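The principal values above all use the _HOST placeholder, which Hadoop replaces at startup with the lowercased fully qualified hostname of the node, so one configuration file can be shared by every host. The sketch below imitates that substitution so the expanded name can be compared with the entries listed by klist -kt. The function name expand_host_principal is hypothetical, and the logic is a simplified imitation rather than Hadoop's own code.

```shell
#!/bin/sh
# expand_host_principal PRINCIPAL FQDN
# Imitates the _HOST substitution: only principals of the form
# primary/_HOST@REALM are rewritten, anything else is printed unchanged.
expand_host_principal() {
  case "$1" in
    */_HOST@*)
      # Lowercase the hostname before inserting it into the principal.
      fqdn_lower=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
      # ${1%%/*} is the primary (before "/"), ${1##*@} is the realm (after "@").
      printf '%s/%s@%s\n' "${1%%/*}" "$fqdn_lower" "${1##*@}"
      ;;
    *)
      printf '%s\n' "$1"
      ;;
  esac
}

# Example (run on a cluster node):
#   expand_host_principal "hadoop/_HOST@EXAMPLE.COM" "$(hostname -f)"
```

The expanded name, realm included, has to match a principal that exists in the keytab. The kadmin.local commands earlier create principals in the HADOOP.COM realm while the values above show EXAMPLE.COM, so the realm should be adjusted to whatever the KDC actually serves.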

<property>

<name>ssl.server.truststore.location</name>

<value>/home/gpadmin/kerberos_https/keystore</value>

<description>Truststore to be used by NN and DN. Must be specified.

</description>

</property>

<property>

<name>ssl.server.truststore.password</name>

<value>password</value>

<description>Optional. Default value is "".

</description>

</property>

<property>

<name>ssl.server.truststore.type</name>

<value>jks</value>

<description>Optional. The keystore file format, default value is "jks".

</description>

</property>

<property>

<name>ssl.server.truststore.reload.interval</name>

<value>10000</value>

<description>Truststore reload check interval, in milliseconds.

Default value is 10000 (10 seconds).

</description>

</property>

<property>

<name>ssl.server.keystore.location</name>

<value>/home/gpadmin/kerberos_https/keystore</value>

<description>Keystore to be used by NN and DN. Must be specified.

</description>

</property>

<property>

<name>ssl.server.keystore.password</name>

<value>password</value>

<description>Must be specified.

</description>

</property>

<property>

<name>ssl.server.keystore.keypassword</name>

<value>password</value>

<description>Must be specified.

</description>

</property>

<property>

<name>ssl.server.keystore.type</name>

<value>jks</value>

<description>Optional. The keystore file format, default value is "jks".

</description>

</property>

<property>

<name>ssl.server.exclude.cipher.list</name>

<value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,

SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,

SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,

SSL_RSA_WITH_RC4_128_MD5</value>

<description>Optional. The weak security cipher suites that you want excluded

from SSL communication.</description>

</property>

keytool -genkey -alias hadoop -keyalg RSA -keysize 2048 -validity 365000 \
  -keystore /home/gpadmin/kerberos_https/keystore/keystore \
  -dname "CN=hadoop, OU=shsnc, O=snc, L=hunan, ST=changsha, C=CN"
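The keystore written by keytool has to be the same file that ssl.server.keystore.location points to. The property value shown earlier ends in kerberos_https/keystore while the keytool command writes to kerberos_https/keystore/keystore, so the two are worth comparing on a real cluster. As a rough sketch, the function below reads a single-line property value out of a Hadoop XML file. The name get_hadoop_prop is hypothetical, and ssl-server.xml under $HADOOP_CONF_DIR is assumed to be the file that holds the ssl.server.* properties.

```shell
#!/bin/sh
# get_hadoop_prop FILE NAME
# Prints the <value> that follows <name>NAME</name> in a Hadoop XML file.
# Only values written on a single line are handled (not the multi-line
# cipher list, for example).
get_hadoop_prop() {
  awk -v key="$2" '
    /<name>/ {
      name = $0
      gsub(/.*<name>|<\/name>.*/, "", name)    # keep only the text inside <name>
    }
    /<value>/ && name == key {
      value = $0
      gsub(/.*<value>|<\/value>.*/, "", value)  # keep only the text inside <value>
      print value
      exit
    }
  ' "$1"
}

# Example (paths are assumptions):
#   ks=$(get_hadoop_prop "$HADOOP_CONF_DIR/ssl-server.xml" ssl.server.keystore.location)
#   [ -f "$ks" ] || echo "keystore not found at: $ks"
```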

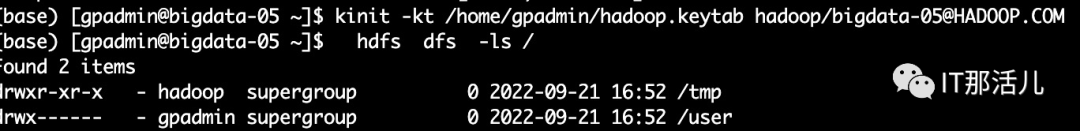

kinit -kt /home/gpadmin/hadoop.keytab hadoop/bigdata-05@HADOOP.COM
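The kinit call above obtains a ticket from the keytab. In scripts it can be convenient to request a ticket only when the credential cache has none that is still valid. The sketch below assumes MIT Kerberos, where klist -s produces no output and reports the cache state through its exit status; the function name ensure_ticket is hypothetical.

```shell
#!/bin/sh
# ensure_ticket KEYTAB PRINCIPAL
# Runs kinit only when the current credential cache holds no valid ticket.
ensure_ticket() {
  if klist -s; then
    echo "valid ticket already present"
  else
    kinit -kt "$1" "$2" && echo "ticket obtained for $2"
  fi
}

# Example:
#   ensure_ticket /home/gpadmin/hadoop.keytab hadoop/bigdata-05@HADOOP.COM
```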

Flink Authentication Configuration

<property>

<name>dfs.permissions.enabled</name>

<value>true</value>

</property>

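When dfs.permissions.enabled is true, the new credential must belong to the Hadoop installation user. For example, if Hadoop was installed as the gpadmin user:

kadmin.local -q "xst -k /root/keytabs/kerberos/hadoop.keytab gpadmin@HADOOP.COM"

When it is false, the new credential does not have to belong to the Hadoop installation user:

kadmin.local -q "xst -k /root/keytabs/kerberos/hadoop.keytab xx@HADOOP.COM"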

klist -kt /root/keytabs/kerberos/hadoop.keytab

security.kerberos.login.use-ticket-cache: true

security.kerberos.login.keytab: /home/gpadmin/hadoop.keytab

security.kerberos.login.principal: gpadmin@HADOOP.COM

security.kerberos.login.contexts: Client
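The keytab and principal settings above work as a pair, so a missing key is a likely cause of login failures. As a minimal sketch, the functions below read flat key: value lines from the Flink configuration file and report which of the two keys is absent. The function names are hypothetical, and the file is assumed to be flink-conf.yaml in the Flink conf directory.

```shell
#!/bin/sh
# flink_conf_get FILE KEY
# Prints the value of a flat "key: value" line; prints nothing if KEY is absent.
flink_conf_get() {
  awk -v key="$2" '
    index($0, key ":") == 1 {       # line starts with "KEY:"
      sub(/^[^:]*:[ \t]*/, "")      # drop the key, the colon and leading blanks
      print
      exit
    }
  ' "$1"
}

# check_flink_kerberos_conf FILE
# Lists missing Kerberos login keys and returns non-zero if any is missing.
check_flink_kerberos_conf() {
  status=0
  for key in security.kerberos.login.keytab security.kerberos.login.principal; do
    if [ -z "$(flink_conf_get "$1" "$key")" ]; then
      echo "missing: $key"
      status=1
    fi
  done
  return "$status"
}

# Example (path is an assumption):
#   check_flink_kerberos_conf "$FLINK_HOME/conf/flink-conf.yaml"
```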

flink run -m yarn-cluster \
  -p 1 \
  -yjm 1024 \
  -ytm 1024 \
  -ynm amp_zabbix \
  -c com.shsnc.fk.task.tokafka.ExtratMessage2KafkaTask \
  -yt /home/gpadmin/jar_repo/config/krb5.conf \
  -yD env.java.opts.jobmanager=-Djava.security.krb5.conf=krb5.conf \
  -yD env.java.opts.taskmanager=-Djava.security.krb5.conf=krb5.conf \
  -yD security.kerberos.login.keytab=/home/gpadmin/hadoop.keytab \
  -yD security.kerberos.login.principal=gpadmin@HADOOP.COM \
  $jarname

Author: Changyan Architecture Group (Wang Jian team, Shanghai Xinju)

Source: the "IT那活儿" WeChat official account
