实践练习二:手动部署 三节点OceanBase 集群

实验说明

1.1 实验目标

- 手动部署一个 OB 三副本集群和一个 OBProxy 代理节点。

- 创建一个业务租户、一个业务数据库,以及一些表,插入一些数据测试等。

1.2 实验环境信息

本次部署使用环境如下:

- 宿主机Oracle Linux Server release 6.9 48核,320G内存 600G硬盘

- 虚拟机软件vbox VirtualBox-6.0.6

- 虚拟主机4台 8c32g,80G硬盘,/根目录50G

- centos7.6 iso 系统镜像(CentOS-7-x86_64-DVD-1810.iso)

1.3机器角色划分

Zone | IP | hostname | RPC Port | Connect Port |

Zone1 | 192.168.56.41 | ob32-2 | 2882 | 2881 |

Zone2 | 192.168.56.42 | ob32-3 | 2882 | 2881 |

Zone3 | 192.168.56.43 | ob32-4 | 2882 | 2881 |

obproxy | 192.168.56.40 | ob32-1 | 2884 | 2883 |

mysql or obclient客户端 | 192.168.56.40 | ob32-1 |

机器环境准备

三台机器上相同的操作

配置本地yum源

因为四台LINUX服务器采用最小化安装的,又不能连接外网,需要配置本地ISO的yum源。

cd /etc/yum.repos.d

mkdir bk

mv *.repo bk/

echo "[EL]" > /etc/yum.repos.d/centos-media.repo

echo "name =Linux 7.x DVD" >> /etc/yum.repos.d/centos-media.repo

echo "baseurl=file:///mnt" >> /etc/yum.repos.d/centos-media.repo

echo "gpgcheck=1" >> /etc/yum.repos.d/centos-media.repo

echo "enabled=1" >> /etc/yum.repos.d/centos-media.repo

echo "gpgkey=file:///mnt/RPM-GPG-KEY-CentOS-7" >> /etc/yum.repos.d/centos-media.repo

echo "/dev/sr0 /mnt iso9660 defaults,ro,loop 0 0" >>/etc/fstab

cat /etc/fstab

mount -a

[root@ob32-1 soft]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos_ob32--1-root 81G 12G 69G 15% /

devtmpfs 16G 0 16G 0% /dev

tmpfs 16G 0 16G 0% /dev/shm

tmpfs 16G 8.7M 16G 1% /run

tmpfs 16G 0 16G 0% /sys/fs/cgroup

/dev/loop0 4.3G 4.3G 0 100% /mnt

/dev/sda1 1014M 133M 882M 14% /boot

tmpfs 3.2G 0 3.2G 0% /run/user/0

2.2安装必要的工具包

yum install -y wget net-tools vim tree

2.3关闭透明大页

echo never > /sys/kernel/mm/transparent_hugepage/enabled

cat /sys/kernel/mm/transparent_hugepage/enabled

2.4创建用户

useradd admin

echo 'admin:adminPWD123' | chpasswd

赋予admin用户sudo权限

chmod u+w /etc/sudoers

vi /etc/sudoers

admin ALL=(ALL) ALL

chmod u-w /etc/sudoers

2.5 用户会话设置

使用命令ulimit可以查看用户会话默认限制。修改下面文件可以默认会话限制修改永久生效。

sudo vi /etc/security/limits.conf

* soft nofile 655350

* hard nofile 655350

* soft stack 20480

* hard stack 20480

* soft nproc 655360

* hard nproc 655360

* soft core unlimited

* hard core unlimited

退出登陆,检查设置生效

Ulimit -a

2.6内核参数配置

内核参数主要是网络、内存相关的设置

sudo vi /etc/sysctl.conf

fs.aio-max-nr = 65536

net.core.somaxconn = 2048

net.core.netdev_max_backlog = 10000

net.core.rmem_default = 16777216

net.core.wmem_default = 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.ipv4.ip_local_port_range = 3500 65535

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_syncookies = 0

net.ipv4.tcp_rmem = 4096 87380 16777216

net.ipv4.tcp_wmem = 4096 65536 16777216

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_fin_timeout = 15

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_slow_start_after_idle=0

vm.swappiness = 0

fs.aio-max-nr=1048576

vm.min_free_kbytes = 2097152

vm.max_map_count=655360

修改后使用下面命令生效,并检查

sysctl -p

2.7关闭防火墙和Selinux

Systemctl disable firewalld

Systemctl stop firewalld

Systemctl status firewalld

Vi /etc/selinux/config

SELINUX=disabled

立即生效:

setenforce 0

2.8 节点时间同步设置

安装chrony

# yum install chrony

#systemctl enable chronyd

#systemctl start chronyd

在服务端主机192.168.56.40上配制/etc/chrony.conf

修改如下:

server 127.0.0.1 iburst

Allow 192.168.56.0/24

local stratum 10

重启chronyd服务

Systemctl enable chronyd

Systemctl restart chronyd

在客户端主机192.168.56.41、192.168.56.42和192.168.56.43 配制chrony.conf 文件

Server 192.168.56.40 iburst

Allow 192.168.56.0/24

local stratum 10

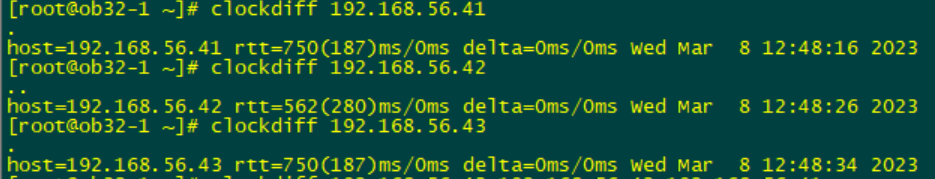

检查时间误差使用clockdiff命令

clockdiff 192.168.56.41

clockdiff 192.168.56.42

2.9 配置代理节点到集群三节点的SSH互信

ssh-keygen -t rsa

ssh-copy-id 192.168.56.41

ssh-copy-id 192.168.56.42

ssh-copy-id 192.168.56.43

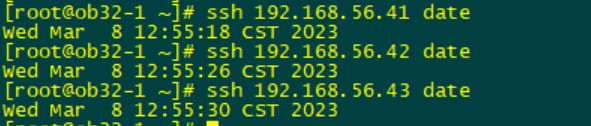

测试互信:

ssh 192.168.56.41 date

ssh 192.168.56.42 date

ssh 192.168.56.43 date

2.10 建立目录及权限

observer 工作目录:~/oceanbase-{version},安装软件时自动创建

·数据文件目录:/data/或/data/1

事务日志目录:/redo 或/data/log1

mkdir -p /data

mkdir -p /redo

目录权限

chown -R admin.admin /data /redo

chown -R admin.admin /data /redo

observer的安装

3.1上传rpm包

上传rpm包到中控代理节点,因为SSH免登录已经配置,再用脚本SCP分发到各个集群子节点

-rw-r--r--. 1 root root 10970208 Mar 5 21:51 obclient-2.2.1-4.el7.x86_64.rpm

-rw-r--r--. 1 root root 9907040 Mar 5 21:51 obproxy-ce-3.2.3.5-2.el7.x86_64.rpm

-rw-r--r--. 1 root root 49777272 Mar 5 21:51 oceanbase-ce-3.1.4-103000102023020719.el7.x86_64.rpm

-rw-r--r--. 1 root root 158392 Mar 5 21:51 oceanbase-ce-libs-3.1.4-103000102023020719.el7.x86_64.rpm

-rw-r--r--. 1 root root 1337808 Mar 5 21:51 libobclient-2.2.1-2.el7.x86_64.rpm

其中 libobclient和obclient是ob客户端和lib的安装包,oceanbase-ce和oceanbase-ce-libs是ob社区版数据库和lib的安装包,obproxy-ce是ob集群代理的安装包

3.2分发和部署软件

IPS="192.168.56.41 192.168.56.42 192.168.56.43"

for ip in $IPS; do echo $ip; \

scp libobclient-2.2.1-2.el7.x86_64.rpm obclient-2.2.1-4.el7.x86_64.rpm \

oceanbase-ce-3.1.4-103000102023020719.el7.x86_64.rpm oceanbase-ce-libs-3.1.4-103000102023020719.el7.x86_64.rpm admin@$ip:~/;done

for ip in $IPS; do echo $ip; ssh admin@$ip "sudo rpm -Uvh oceanbase-ce*.rpm" ;done

3.3准备目录

for ip in $IPS; do echo $ip; ssh admin@$ip "mkdir -p /home/admin/oceanbase/store/obdemo \

/data/obdemo/sstable /redo/obdemo/{clog,ilog,slog}";done

for ip in $IPS; do echo $ip; ssh admin@$ip "ln -s /data/obdemo/sstable /home/admin/oceanbase/store/obdemo/sstable";done

for ip in $IPS; do echo $ip; ssh admin@$ip "ln -s /redo/obdemo/clog /home/admin/oceanbase/store/obdemo/clog";done

for ip in $IPS; do echo $ip; ssh admin@$ip "ln -s /redo/obdemo/slog /home/admin/oceanbase/store/obdemo/slog";done

for ip in $IPS; do echo $ip; ssh admin@$ip "ln -s /redo/obdemo/ilog /home/admin/oceanbase/store/obdemo/ilog";done

for ip in $IPS; do echo $ip; ssh admin@$ip "echo 'export LD_LIBRARY_PATH= $LD_LIBRARY_PATH:~/oceanbase/lib'>> ~/.bash_profile";done

3.4查检目录结构

[admin@ob32-2 ~]$ tree ~/oceanbase

oceanbase

├── bin

│ ├── import_time_zone_info.py

│ └── observer

├── etc

│ ├── observer.config.bin

│ ├── observer.config.bin.history

│ ├── oceanbase_upgrade_dep.yml

│ ├── priv_checker.py

│ ├── timezone_V1.log

│ ├── upgrade_checker.py

│ ├── upgrade_cluster_health_checker.py

│ ├── upgrade_post_checker.py

│ ├── upgrade_post.py

│ ├── upgrade_pre.py

│ ├── upgrade_rolling_post.py

│ └── upgrade_rolling_pre.py

├── etc2

│ ├── observer.conf.bin

│ └── observer.conf.bin.history

├── etc3

│ ├── observer.conf.bin

│ └── observer.conf.bin.history

├── lib

│ ├── libaio.so -> libaio.so.1.0.1

│ ├── libaio.so.1 -> libaio.so.1.0.1

│ ├── libaio.so.1.0.1

│ ├── libmariadb.so -> libmariadb.so.3

│ └── libmariadb.so.3

├── log

│ ├── election.log

│ ├── election.log.wf

│ ├── observer.log

│ ├── observer.log.20230308104057

│ ├── observer.log.20230308114015

│ ├── observer.log.20230308123925

│ ├── observer.log.wf

│ ├── observer.log.wf.20230308104057

│ ├── observer.log.wf.20230308114015

│ ├── observer.log.wf.20230308123925

│ ├── rootservice.log

│ ├── rootservice.log.20230307062623

│ ├── rootservice.log.20230307183321

│ ├── rootservice.log.20230308064029

│ ├── rootservice.log.wf

│ ├── rootservice.log.wf.20230307062623

│ ├── rootservice.log.wf.20230307183321

│ └── rootservice.log.wf.20230308064029

├── run

│ ├── obclient.sock

│ └── observer.pid

└── store

└── obdemo

├── clog -> /redo/obdemo/clog

├── ilog -> /redo/obdemo/ilog

├── slog -> /redo/obdemo/slog

└── sstable -> /data/obdemo/sstable

13 directories, 43 files

[admin@ob32-2 ~]$ tree /data

/data

└── obdemo

└── sstable

└── block_file

2 directories, 1 file

[admin@ob32-2 ~]$ tree /redo

/redo

└── obdemo

├── clog

│ ├── 1

│ ├── 10

│ ├── 11

│ ├── 12

│ ├── 2

│ ├── 3

│ ├── 4

│ ├── 5

│ ├── 6

│ ├── 7

│ ├── 8

│ └── 9

├── ilog

│ ├── 1

│ └── 2

└── slog

└── 1

4 directories, 15 files

observer的启动

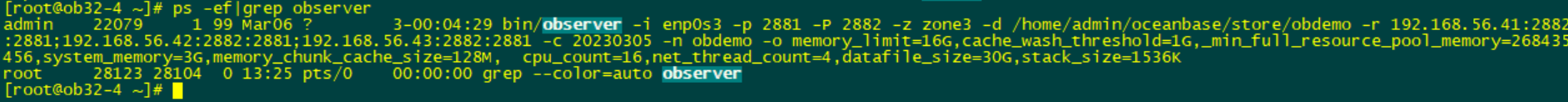

在主机192.168.56.41上:

[admin@ob32-2 ~]$cd ~/oceanbase && bin/observer -i enp0s3 -p 2881 -P 2882 -z zone1 -d ~/oceanbase/store/obdemo \

-r '192.168.56.41:2882:2881;192.168.56.42:2882:2881;192.168.56.43:2882:2881' \

-c 20230305 -n obdemo -o "memory_limit=8G,cache_wash_threshold=1G, \

__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M, \

cpu_count=16,net_thread_count=4,datafile_size=50G,stack_size=1536K"

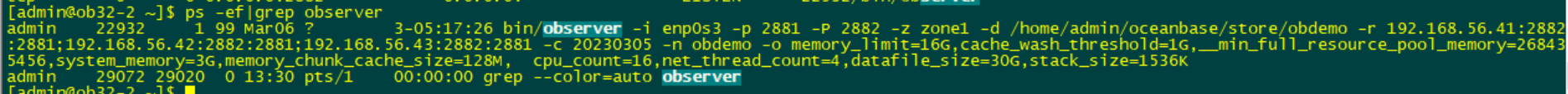

查看进程:

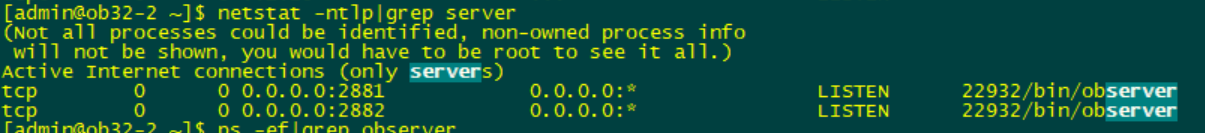

[admin@ob32-2 ~]$ netstat -ntlp|grep server

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

tcp 0 0 0.0.0.0:2881 0.0.0.0:* LISTEN 22932/bin/observer

tcp 0 0 0.0.0.0:2882 0.0.0.0:* LISTEN 22932/bin/observer

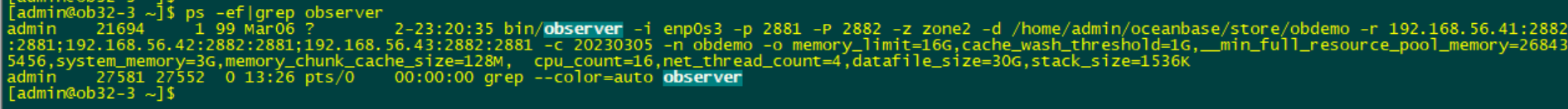

[admin@ob32-3 ~]$ cd ~/oceanbase && bin/observer -i enp0s3 -p 2881 -P 2882 -z zone2 -d ~/oceanbase/store/obdemo \

-r '192.168.56.41:2882:2881;192.168.56.42:2882:2881;192.168.56.43:2882:2881' \

-c 20230305 -n obdemo -o "memory_limit=20G,cache_wash_threshold=1G, \

__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M, \

cpu_count=16,net_thread_count=4,datafile_size=50G,stack_size=1536K"

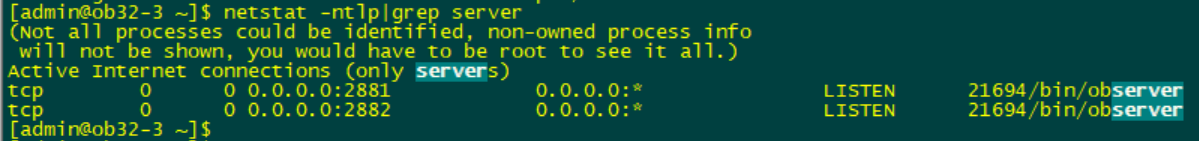

查看监听端口:

[admin@ob32-3 ~]$ netstat -ntlp|grep server

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

tcp 0 0 0.0.0.0:2881 0.0.0.0:* LISTEN 21694/bin/observer

tcp 0 0 0.0.0.0:2882 0.0.0.0:* LISTEN 21694/bin/observer

在主机192.168.56.43上:

[admin@ob32-4 ~]$ cd ~/oceanbase && bin/observer -i enp0s3 -p 2881 -P 2882 -z zone3 -d ~/oceanbase/store/obdemo \

-r '192.168.56.41:2882:2881;192.168.56.42:2882:2881;192.168.56.43:2882:2881' \

-c 20230305 -n obdemo -o "memory_limit=20G,cache_wash_threshold=1G, \

__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M, \

cpu_count=16,net_thread_count=4,datafile_size=50G,stack_size=1536K"

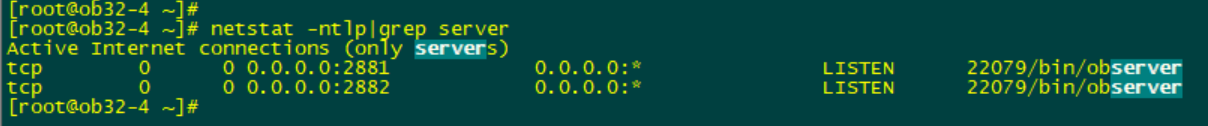

查看进程:

查看监听端口:

[root@ob32-4 ~]# netstat -ntlp|grep server

Active Internet connections (only servers)

tcp 0 0 0.0.0.0:2881 0.0.0.0:* LISTEN 22079/bin/observer

tcp 0 0 0.0.0.0:2882 0.0.0.0:* LISTEN 22079/bin/observer

observer集群初始化

5.1集群自举初始化

在三台集群节点任意一台机器执行,执行前先安装下客户端和依赖的lib rpm包,我在192.168.56.43上:

obclient -h 192.168.56.43 -u root -P 2881 -p -c -A (首次登陆密码为空)

set session ob_query_timeout=1000000000;

alter system bootstrap ZONE 'zone1' SERVER '192.168.56.41:2882', ZONE 'zone2' SERVER '192.168.56.42:2882', ZONE 'zone3' SERVER '192.168.56.43:2882';

5.2常见bootstrap失败原因

·集群里observer节点之间网络延时超过200ms(建议在30ms以内)。

·集群里observer节点之间时间同步误差超过100ms(建议在5ms以内)。

主机 ulimit 会话限制没有修改或生效。

主机内核参数 sysctl.conf没有修改或生效。

observer启动用户不对,建议admin用户。

observer启动目录不对,必须是~/oceanbase。具体版本号会有变化。

·observer的可用内存低于8G。

·observer 的事务日志目录可用空间低于5%。

observer启动参数不对(zone名称对不上,rootservice_list地址或格式不对,集群名不统一等)。

修改root密码,重新登录:

alter user root identified by 'rootPWD123' ;

obclient -h 192.168.56.43 -u root@sys -P 2881 -prootPWD123 -c -A oceanbase

obclient [oceanbase]> show processlist;

+------------+------+---------------------+-----------+---------+------+--------+------------------+

| Id | User | Host | db | Command | Time | State | Info |

+------------+------+---------------------+-----------+---------+------+--------+------------------+

| 3222011977 | root | 192.168.56.43:26586 | oceanbase | Query | 0 | ACTIVE | show processlist |

+------------+------+---------------------+-----------+---------+------+--------+------------------+

obclient [oceanbase]> select * from __all_virtual_disk_stat;

+---------------+----------+-------------+-----------+-------------+---------------+---------------------+

| svr_ip | svr_port | total_size | used_size | free_size | is_disk_valid | disk_error_begin_ts |

+---------------+----------+-------------+-----------+-------------+---------------+---------------------+

| 192.168.56.43 | 2882 | 32212254720 | 222298112 | 31989956608 | 1 | 0 |

| 192.168.56.41 | 2882 | 32212254720 | 155189248 | 32057065472 | 1 | 0 |

| 192.168.56.42 | 2882 | 32212254720 | 155189248 | 32057065472 | 1 | 0 |

+---------------+----------+-------------+-----------+-------------+---------------+---------------------+

obclient [oceanbase]> select * from __all_zone where name = 'merge_status';

+----------------------------+----------------------------+-------+--------------+-------+------+

| gmt_create | gmt_modified | zone | name | value | info |

+----------------------------+----------------------------+-------+--------------+-------+------+

| 2023-03-06 20:51:10.911802 | 2023-03-07 02:02:51.228916 | | merge_status | 0 | IDLE |

| 2023-03-06 20:51:10.916343 | 2023-03-07 02:02:41.060628 | zone1 | merge_status | 0 | IDLE |

| 2023-03-06 20:51:10.918664 | 2023-03-07 02:02:09.447780 | zone2 | merge_status | 0 | IDLE |

| 2023-03-06 20:51:10.920967 | 2023-03-07 02:01:59.256145 | zone3 | merge_status | 0 | IDLE |

+----------------------------+----------------------------+-------+--------------+-------+------+

OBProxy安装和配置启动

6.1在主机192.168.56.40上安装obproxy和obclient:

root@ob32-1 soft]# rpm -ivh obproxy-ce-3.2.3.5-2.el7.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:obproxy-ce-3.2.3.5-2.el7 ################################# [100%]

[root@ob32-1 soft]# rpm -ivh libobclient-2.2.1-2.el7.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:libobclient-2.2.1-2.el7 ################################# [100%]

[root@ob32-1 soft]# rpm -ivh obclient-2.2.1-4.el7.x86_64.rpm

Preparing... ################################# [100%]

Updating / installing...

1:obclient-2.2.1-4.el7 ################################# [100%]

6.2 查看目录

[admin@ob32-1 ~]$ tree

.

├── obproxy-3.2.3.5

│ └── bin

│ ├── obproxy

│ └── obproxyd.sh

└── oceanbase-ce

6.3启动obproxy代理进程

su - admin

cd ~/obproxy-3.2.3.5/ && bin/obproxy -r "192.168.56.41:2881;192.168.56.42:2881;192.168.56.43:2881" -p 2883 -o "enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false" -c obdemo

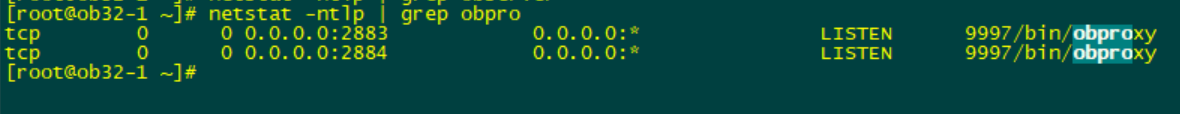

查看代理监听端口

[root@ob32-1 ~]# netstat -ntlp | grep obpro

tcp 0 0 0.0.0.0:2883 0.0.0.0:* LISTEN 9997/bin/obproxy

查看进程:

ps -ef|grep obproxy

[root@ob32-1 ~]# ps -ef|grep obproxy

admin 9997 1 2 Mar07 ? 00:32:43 bin/obproxy -r 192.168.56.41:2881;192.168.56.42:2881;192.168.56.43:2881 -p 2883 -o enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false -c obdemo

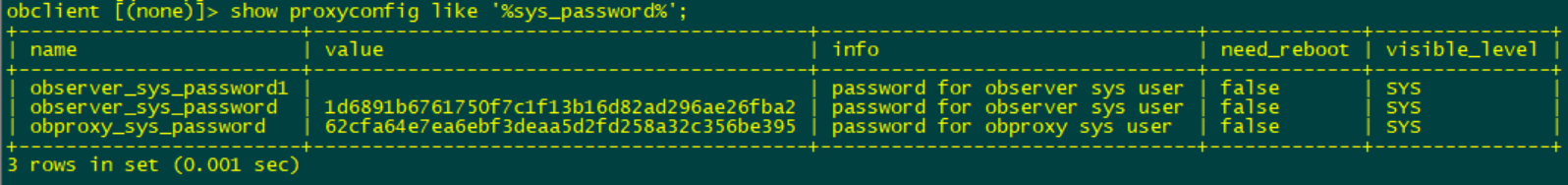

登录 OBProxy 修改密码

登录 OBProxy

登录用户名:root@proxysys,端口:2883,初始密码:空。

obclient -h 192.168.56.40 -u root@proxysys -P 2883 -p --proxy-mode

show proxyconfig like '%sys_password%';

6.4修改 OBProxy 用户密码

可通过修改参数的方式来修改 OBProxy 用户密码,使用命令为 alter proxyconfig set。

alter proxyconfig set obproxy_sys_password = 'proxySYSPWD123' ;

修改 OBProxy 连接 OceanBase 集群用户 proxyro 的密码

在修改 OBProxy 用户密码的同时还需要修改 OBProxy 连接 OceanBase 集群用户

proxyro 的密码,这样 OBProxy 才能和 OceanBase 集群正常连接。

OBProxy 连接 OceanBase 集群用户 proxyro 的密码就是前面 OceanBase 集群初始化

后创建的用户 proxyro 的密码。

alter proxyconfig set observer_sys_password = 'proxyROPWD123' ;

退出来重新用密码登陆:

obclient -h192.168.56.40 -uroot@proxysys -P2883 -pproxySYSPWD123 --proxy-mode

·创建obproxy和observer通讯用户

obclient -h192.168.56.41 -uroot@sys -P2881 -prootPWD123 -c -A oceanbase

grant select on oceanbase.* to proxyro identified by 'proxyROPWD123';

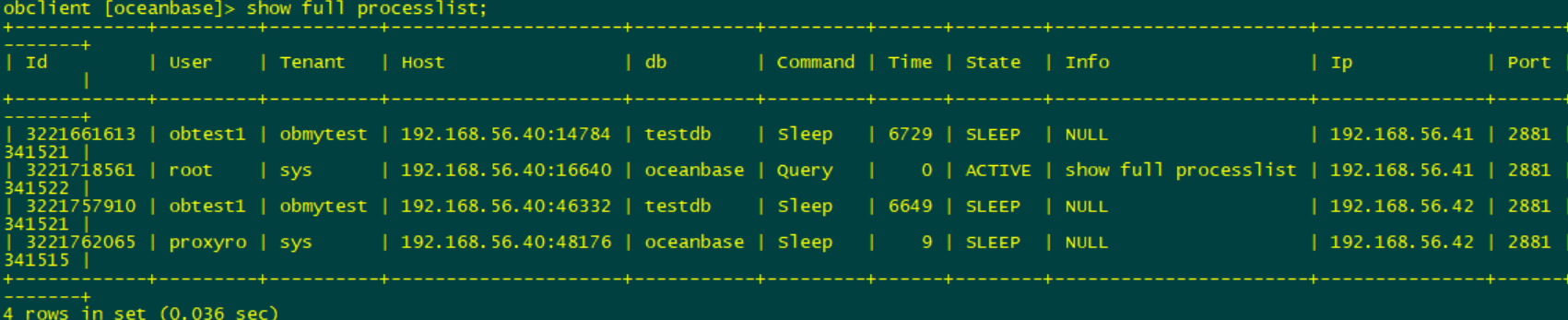

通过obproxy连接OceanBase集群·

obclient -h192.168.56.40 -uroot@sys#obdemo -P2883 -prootPWD123 -c -A oceanbase

show full processlist;

注意区分三个用户和密码的区别:

root@sys root@proxysys proxyro@sys

6.5 obproxy配置失败问题

·启动用户不对,建议admin用户。

·启动目录不对,必须是~/obproxy-3.2.0。具体版本号会有变化。

·proxyro的用户需要在OceanBase集群里创建,并建议设置密码。

·proxyro 的密码也必须在obproxy进程里通过参数observer_sys_password设置。

·obproxy启动时指定的集群名(-c)跟OceanBase集群里的cluster不一致。

资源、资源池、租户的创建

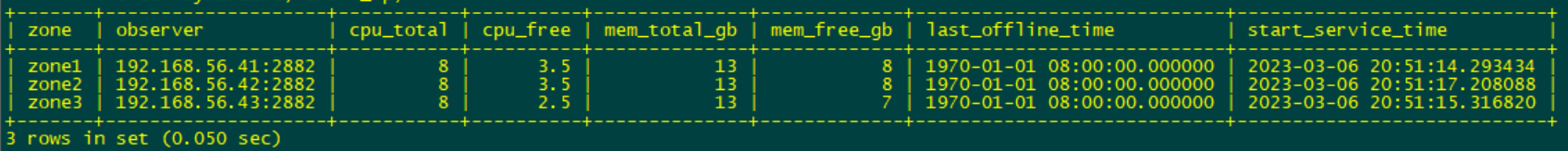

查看集群资源

[admin@ob32-1 ~]$ obclient -h192.168.56.40 -uroot@sys -P2883 -prootPWD123 -c -A oceanbase

Welcome to the OceanBase. Commands end with ; or \g.

Your OceanBase connection id is 524295

Server version: OceanBase 3.1.4 (r103000102023020719-16544a206f00dd3ceb4ca3011a625fbb24568154) (Built Feb 7 2023 19:32:02)

Copyright (c) 2000, 2018, OceanBase and/or its affiliates. All rights reserved.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

obclient> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time

from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)

order by a.zone, a.svr_ip;

7.1创建资源单元规格UNIT

CREATE resource unit u1 max_cpu=2, min_cpu=2, max_memory='2G', min_memory='2G', max_iops=10000, min_iops=1000, max_session_num=1000000, max_disk_size='2G';

CREATE resource unit u2 max_cpu=3, min_cpu=3, max_memory='3G', min_memory='3G', max_iops=10000, min_iops=1000, max_session_num=1000000, max_disk_size='3G';

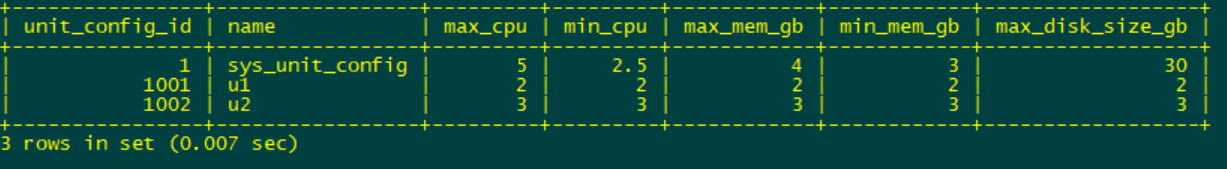

查看UNIT

obclient> select unit_config_id,name,max_cpu,min_cpu,round(max_memory/1024/1024/1024) max_mem_gb, round(min_memory/1024/1024/1024) min_mem_gb, round(max_disk_size/1024/1024/1024) max_disk_size_gb

from __all_unit_config

order by unit_config_id;

7.2创建资源池

create resource pool pool_1 unit='u1' , unit_num=1, zone_list=('zone1' ,'zone2');

create resource pool pool_2 unit='u2' , unit_num=1, zone_list=('zone3');

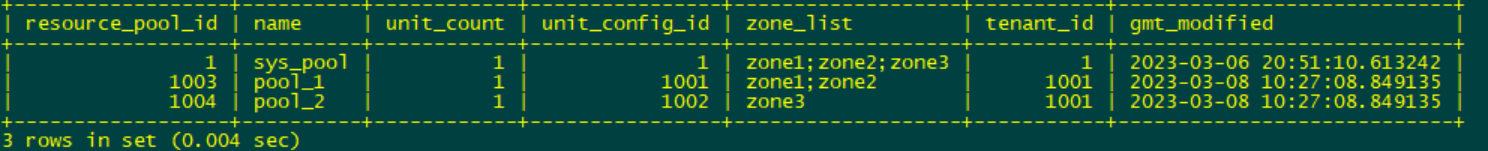

查看资源池

obclient> select resource_pool_id, name,unit_count, unit_config_id, zone_list, tenant_id, gmt_modified

from __all_resource_pool order by resource_pool_id ;

7.3创建租户

create tenant obmytest resource_pool_list=('pool_1','pool_2'), primary_zone='RANDOM',comment 'obclient tenant/instance', charset='utf8' set ob_tcp_invited_nodes='%';

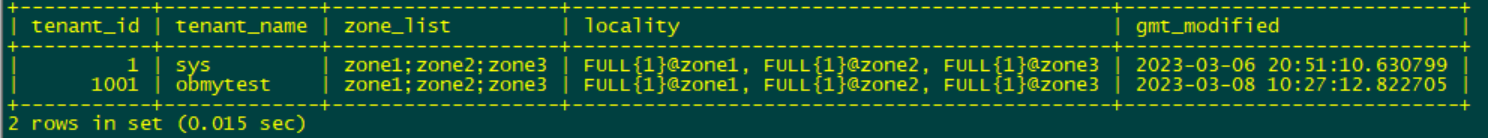

查看租户

obclient> select tenant_id, tenant_name, zone_list, locality ,gmt_modified from __all_tenant;

新租户下创建数据库表及用户

8.1创建数据库

obclient -h 192.168.56.40 -uroot@obmytest#obdemo -P2883 -p -c -A oceanbase(默认root密码为空)

obclient [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| oceanbase |

| information_schema |

| obclient |

| test |

+--------------------+

4 rows in set (0.003 sec)

obclient [oceanbase]> create database testdb;

Query OK, 1 row affected (0.044 sec)

obclient [oceanbase]> show databases;

+--------------------+

| Database |

+--------------------+

| oceanbase |

| information_schema |

| obclient |

| test |

| testdb |

+--------------------+

5 rows in set (0.011 sec)

8.2创建用户并授权

create user obtest1 identified by 'obtest1';

grant all privileges on testdb.* to obtest1 identified by 'obtest1';

8.3创建表及插入测试数据

[admin@ob32-1 ~]$ obclient -h 192.168.56.40 -uobtest1@obmytest#obdemo -P2883 -pobtest1 -c -A testdb

Welcome to the OceanBase. Commands end with ; or \g.

Your OceanBase connection id is 524291

Server version: OceanBase 3.1.4 (r103000102023020719-16544a206f00dd3ceb4ca3011a625fbb24568154) (Built Feb 7 2023 19:32:02)

Copyright (c) 2000, 2018, OceanBase and/or its affiliates. All rights reserved.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

obclient [testdb]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| testdb |

+--------------------+

2 rows in set (0.034 sec)

obclient [testdb]> create table ware(w_id int

, w_ytd decimal(12,2)

, w_tax decimal(4,4)

, w_name varchar(10)

, w_street_1 varchar(20)

, w_street_2 varchar(20)

, w_city varchar(20)

, w_state char(2)

, w_zip char(9)

, unique(w_name, w_city)

, primary key(w_id)

);

Query OK, 0 rows affected (0.376 sec)

obclient [testdb]> create table cust (c_w_id int NOT NULL

, c_d_id int NOT null

, c_id int NOT null

, c_discount decimal(4, 4)

, c_credit char(2)

, c_last varchar(16)

, c_first varchar(16)

, c_middle char(2)

, c_balance decimal(12, 2)

, c_ytd_payment decimal(12, 2)

, c_payment_cnt int

, c_credit_lim decimal(12, 2)

, c_street_1 varchar(20)

, c_street_2 varchar(20)

, c_city varchar(20)

, c_state char(2)

, c_zip char(9)

, c_phone char(16)

, c_since date

, c_delivery_cnt int

, c_data varchar(500)

, index icust(c_last, c_d_id, c_w_id, c_first, c_id)

, FOREIGN KEY (c_w_id) REFERENCES ware(w_id)

, primary key (c_w_id, c_d_id, c_id)

);

Query OK, 0 rows affected (0.353 sec)

obclient [testdb]> show tables;

+------------------+

| Tables_in_testdb |

+------------------+

| cust |

| ware |

+------------------+

2 rows in set (0.044 sec)

obclient [testdb]> create table t1(c1 varchar(50));

Query OK, 0 rows affected (0.219 sec)

obclient [testdb]> insert into t1 values('a'),('中');

Query OK, 2 rows affected (0.052 sec)

Records: 2 Duplicates: 0 Warnings: 0

obclient [testdb]> insert into t1 values('c'),('国');

Query OK, 2 rows affected (0.006 sec)

Records: 2 Duplicates: 0 Warnings: 0

obclient [testdb]> insert into t1 values('b'),('人');

Query OK, 2 rows affected (0.005 sec)

Records: 2 Duplicates: 0 Warnings: 0

obclient [testdb]> select * from t1;

+------+

| c1 |

+------+

| a |

| 中 |

| c |

| 国 |

| b |

| 人 |

+------+

6 rows in set (0.008 sec)

配置过程中遇到的坑

observer进程启动失败

1、首次启动OBserver进程时,前台无提示,但是进程起不来,后台observer.log出现如下错误:

报错:

[2023-03-05 22:35:48.194918] ERROR [STORAGE] open (ob_local_file_system.cpp:401) [20628][0][Y0-0000000000000000] [lt=7] [dc=0] data file size is too large, (ret=-4184, free_space=52002975744, data_file_size_=53687091200) BACKTRACE:0x9ad6cfe 0x98aac21 0x22ffff6 0x22ffc3b 0x22ff9b3 0x22f3b39 0x831b5bc 0x831a6b5 0x88f8d49 0x7de9d21 0x93cd421 0x93c2bb7 0x22bf8c5 0x2aec94edd3d5 0x22be5a9

[2023-03-05 22:35:48.417232] ERROR [SERVER] init_storage (ob_server.cpp:1712) [20628][0][Y0-0000000000000000] [lt=4] [dc=0] init partition service fail(ret=-4184, storage_env_={data_dir:"/home/admin/oceanbase/store/obdemo", default_block_size:2097152, disk_avail_space:53687091200, datafile_disk_percentage:90, redundancy_level:1, log_spec:{log_dir:"/home/admin/oceanbase/store/obdemo/slog", max_log_size:268435456, log_sync_type:0}, clog_dir:"/home/admin/oceanbase/store/obdemo/clog", ilog_dir:"/home/admin/oceanbase/store/obdemo/ilog", clog_shm_path:"/home/admin/oceanbase/store/obdemo/clog_shm", ilog_shm_path:"/home/admin/oceanbase/store/obdemo/ilog_shm", index_cache_priority:10, user_block_cache_priority:1, user_row_cache_priority:1, fuse_row_cache_priority:1, bf_cache_priority:1, clog_cache_priority:1, index_clog_cache_priority:1, bf_cache_miss_count_threshold:100, ethernet_speed:131072000}) BACKTRACE:0x9ad6cfe 0x98aac21 0x22fdf5f 0x22fdbab 0x22fd972 0x8060a4b 0x93cd64e 0x93c2bb7 0x22bf8c5 0x2aec94edd3d5 0x22be5a9

[2023-03-05 22:45:35.752787] WARN [COMMON] get_warning_disks (ob_io_disk.cpp:930) [21072][38][Y0-0000000000000000] [lt=0] [dc=0] not init(ret=-4006)

[2023-03-05 22:45:35.752803] WARN [COMMON] check_disk_error (ob_io_manager.cpp:699) [21072][38][Y0-0000000000000000] [lt=15] [dc=0] fail to get warning disks(ret=-4006)

[2023-03-05 22:45:35.752860] INFO ob_page_manager.cpp:43 [21072][0][Y0-0000000000000000] [lt=66] [dc=0] unregister pm finish(&pm=0x2aee7b483900, pm.get_tid()=21072)

[2023-03-05 22:45:35.855123] WARN [COMMON] get_all_tenant_id (ob_tenant_mgr.cpp:581) [21103][100][Y0-0000000000000000] [lt=9] [dc=0] tenant manager not init(ret=-4006)

[2023-03-05 22:45:35.855150] WARN [COMMON] compute_tenant_wash_size (ob_kvcache_store.cpp:659) [21103][100][Y0-0000000000000000] [lt=26] [dc=0] Fail to get all tenant ids, (ret=-4006)

--

[2023-03-05 22:45:36.772959] INFO ob_page_manager.cpp:43 [21059][0][Y0-0000000000000000] [lt=0] [dc=0] unregister pm finish(&pm=0x2aee6f315900, pm.get_tid()=21059)

[2023-03-05 22:45:36.773805] WARN [SERVER] destroy (ob_server.cpp:442) [21052][0][Y0-0000000000000000] [lt=9] [dc=0] log compressor destroyed

[2023-03-05 22:45:36.773832] WARN [SERVER] destroy (ob_server.cpp:443) [21052][0][Y0-0000000000000000] [lt=25] [dc=0] destroy observer end

[2023-03-05 22:45:36.773841] ERROR [SERVER] main (main.cpp:494) [21052][0][Y0-0000000000000000] [lt=6] [dc=0] observer init fail(ret=-4009) BACKTRACE:0x9ad6cfe 0x98aac21 0x22c3274 0x22c2d5b 0x22c2ac1 0x22c1758 0x22bfbca 0x2aee5c27e3d5 0x22be5a9

原因是指定的参数datafile_size=50G太大,超过了目录最大可用free可用空间,实际划分/根目录最大才50G,改为30G后,重启拉起observer进程还是报错

[root@ob32-2 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 50G 33G 18G 65% /

[2023-03-06 18:51:13.181324] WARN [STORAGE] inner_get_super_block_version (ob_local_file_system.cpp:912) [22441][0][Y0-0000000000000000] [lt=23] [dc=0] read superblock error.(ret=-4009, offset=0, read_size=0, errno=2, super_block_buf_holder={buf:0x2ba898772000, len:65536}, fd={fd:790, disk_id:{disk_idx:0, install_seq:0}}, errmsg="No such file or directory")

[2023-03-06 18:51:13.181381] WARN [STORAGE] inner_get_super_block_version (ob_local_file_system.cpp:912) [22441][0][Y0-0000000000000000] [lt=10] [dc=0] read superblock

ERROR [SERVER] init_storage (ob_server.cpp:1712) [22441][0][Y0-0000000000000000] [lt=3] [dc=0] init partition service fail()

原因是拉起进程失败后没有清理目录导致的,

tree /data

└── obdemo

└── sstable

└── block_file

需要清理这个产生的无效的block_file,重新拉起进程正常。

rm -f /data/obdemon/sstable/block_file

obproxy连接报错

obclient -h192.168.56.40 -uroot@proxysys -P2883 -p

obproxy 登录的时候, ERROR 2027 (HY000): received malformed packet

联系社区问答群的老师说 obclient连接@proxysys租户时,连接的需要加上 --proxy-mode参数

obclient -h192.168.56.40 -uroot@proxysys -P2883 -p --proxy-mode

连接@sys租户时就不用了。