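Introduction

The AWCloud (Haiyun Jiexun) private cloud platform team installed, deployed, and adapted OpenStack Yoga on OpenCloudOS 8.5, the release published by the OpenCloudOS community. This article walks through the adaptation step by step to give a clear picture of the overall process.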

1

Pre-installation Preparation

1.1 Prerequisites

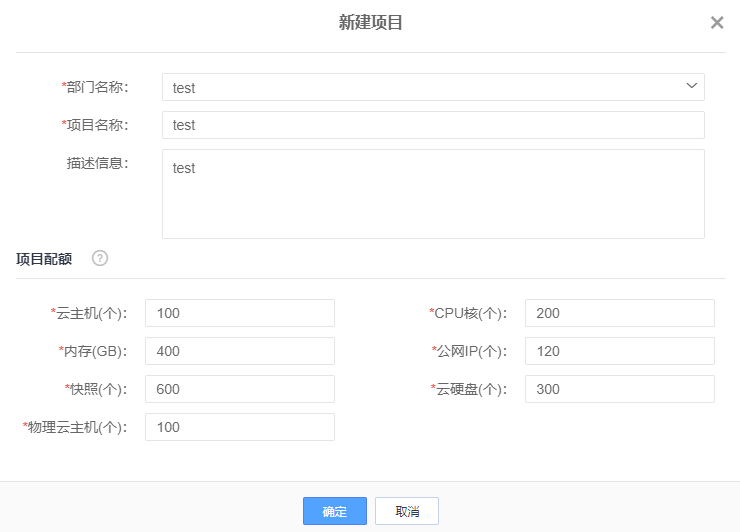

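Step 1. Log in to the AWCloud cloud management platform as the admin user.

Step 2. Use the quick actions to create a department, a project, and a user.

Step 3. Create a private network. Do not specify a gateway when creating it.

Step 4. Create a security group that allows all TCP ports.

Step 5. Create three cloud hosts from the previously uploaded image opencloudos-8.5-x86_64.iso.

Step 6. Log in to each cloud host and modify the configuration of its private-network NIC.

If the private network is a VxLAN network, use a static IP and set the MTU to 1450.

vim /etc/sysconfig/network-scripts/ifcfg-ens3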

TYPE=Ethernet

BOOTPROTO=static

NAME=ens3

DEVICE=ens3

ONBOOT=yes

IPADDR=10.10.10.4

NETMASK=255.255.255.0

MTU=1450
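The three hosts' ifcfg-ens3 files differ only in IPADDR, so they can also be generated per node. The following is a minimal sketch, not part of the original procedure: the helper name write_ifcfg and its argument order are hypothetical, and the target directory is a parameter so the output can be checked in a scratch directory before writing to /etc/sysconfig/network-scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a static ifcfg file for the VxLAN private network.
# Usage: write_ifcfg <device> <ip> [netmask] [mtu] [dir]
write_ifcfg() {
  local dev=$1 ip=$2
  local netmask=${3:-255.255.255.0}
  local mtu=${4:-1450}   # VxLAN private network: 1450, as in this guide
  local dir=${5:-/etc/sysconfig/network-scripts}

  if [[ -z "$dev" || -z "$ip" ]]; then
    echo "usage: write_ifcfg <device> <ip> [netmask] [mtu] [dir]" >&2
    return 1
  fi

  # Same keys as the hand-written file above, with the per-node values filled in.
  cat > "$dir/ifcfg-$dev" <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=$dev
DEVICE=$dev
ONBOOT=yes
IPADDR=$ip
NETMASK=$netmask
MTU=$mtu
EOF
}
```

For example, run write_ifcfg ens3 10.10.10.5 on node-2; the netmask and MTU default to the values shown above.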

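Regular users of the cloud platform cannot add an external-network NIC. Use the command nova interface-attach --net-id <external network ID> <VM UUID> to add one. Reference configuration for the external-network NIC:

vi /etc/sysconfig/network-scripts/ifcfg-ens10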

TYPE=Ethernet

BOOTPROTO=static

DEFROUTE=yes

# Change the NIC name to match the name actually generated on the host

NAME=ens10

DEVICE=ens10

ONBOOT=yes

IPADDR=192.168.133.92

NETMASK=255.255.240.0

GATEWAY=192.168.128.1

DNS1=114.114.114.114

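Note on NIC configuration: after modifying the configuration file of ens10, run the following commands:

systemctl restart NetworkManager

nmcli c reload

nmcli c up ens10

# Only after this does ip a show the IP information of the new NIC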

Step 7. After the NICs on all three cloud hosts are configured, ping between the hosts over both NICs to check network connectivity.

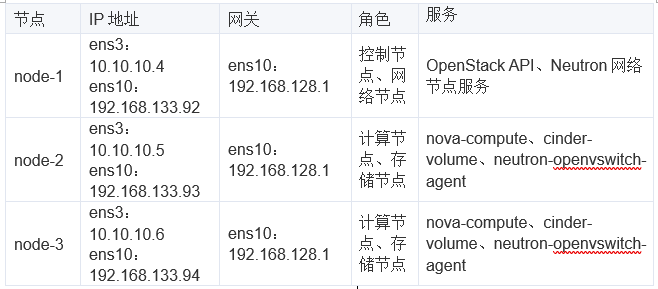

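1.2 Environment Information

This environment is deployed manually. The environment information is listed in the following table:

1.3 Configuring the Operating System

Note: Perform the following steps on all three nodes.

Step 1. Configure the hostname (set each host's own hostname).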

hostnamectl set-hostname node-1

hostnamectl set-hostname node-2

hostnamectl set-hostname node-3

Step 2. Stop and disable the firewalld service.

systemctl stop firewalld

systemctl disable firewalld

Step 3. Disable SELinux.

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

setenforce 0

Step 4. Add the following entries to /etc/hosts:

10.10.10.4 node-1

10.10.10.5 node-2

10.10.10.6 node-3
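Because these entries are needed on all three nodes, a re-runnable way to add them may help. A minimal sketch, assuming each entry is passed as an "IP hostname" string; the add_hosts helper is hypothetical and skips any hostname that already appears on a non-comment line of the file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" entries to a hosts file,
# skipping hostnames that already have an entry (safe to re-run).
# Usage: add_hosts <hosts_file> "<ip> <name>"...
add_hosts() {
  local hosts_file=$1 entry ip name
  shift
  for entry in "$@"; do
    ip=${entry%% *}
    name=${entry##* }
    # Exit 0 if the name is already a hostname field on a non-comment line.
    if awk -v n="$name" '
         !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
         END { exit !found }' "$hosts_file" 2>/dev/null; then
      continue
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  done
}
```

For example: add_hosts /etc/hosts "10.10.10.4 node-1" "10.10.10.5 node-2" "10.10.10.6 node-3".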

Step 5. Enable the network service and disable the NetworkManager service.

yum install network-scripts -y

systemctl stop NetworkManager

systemctl disable NetworkManager

systemctl enable network

systemctl start network

2

Installing the OpenStack yum Repository and Related Packages

Step 1. Install the OpenStack yum repository.

yum install wget -y

wget https://mirrors.cloud.tencent.com/centos/8-stream/extras/x86_64/extras-common/Packages/c/centos-release-openstack-yoga-1-1.el8s.noarch.rpm

yum install centos-release-openstack-yoga-1-1.el8s.noarch.rpm

Step 2. After installation, the following repo files are added to the /etc/yum.repos.d/ directory:

CentOS-Advanced-Virtualization.repo

CentOS-Ceph-Pacific.repo

CentOS-Messaging-rabbitmq.repo

CentOS-NFV-OpenvSwitch.repo

CentOS-OpenStack-yoga.repo

CentOS-Storage-common.repo

Replace the repository URLs in the following five repo files: CentOS-Advanced-Virtualization.repo, CentOS-Ceph-Pacific.repo, CentOS-Messaging-rabbitmq.repo, CentOS-NFV-OpenvSwitch.repo, and CentOS-Storage-common.repo.

sed -i -e "s|mirrorlist=|#mirrorlist=|g" -e "s|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g" -e 's|http://vault.centos.org/[^/]*/[^/]*/|https://mirrors.cloud.tencent.com/centos/8.5.2111/|g' /etc/yum.repos.d/CentOS-Advanced-Virtualization.repo

sed -i -e "s|mirrorlist=|#mirrorlist=|g" -e "s|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g" -e 's|http://vault.centos.org/[^/]*/[^/]*/|https://mirrors.cloud.tencent.com/centos/8.5.2111/|g' /etc/yum.repos.d/CentOS-Ceph-Pacific.repo

# Run the same command for the remaining 3 files

In CentOS-OpenStack-yoga.repo, point the repository at centos/8-stream/:

[centos-openstack-yoga]

name=CentOS-$releasever - OpenStack yoga

baseurl=https://mirrors.cloud.tencent.com/centos/8-stream/cloud/$basearch/openstack-yoga/

#mirrorlist=http://mirrorlist.centos.org/?release=8-stream&arch=$basearch&repo=cloud-openstack-yoga

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Cloud

module_hotfixes=1

[centos-openstack-yoga-source]

name=CentOS-$releasever - OpenStack yoga - Source

baseurl=https://mirrors.cloud.tencent.com/centos/8-stream/cloud/Source/openstack-yoga/

gpgcheck=1

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Cloud

module_hotfixes=1
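The per-file sed commands for the five repo files can also be applied in one loop. A minimal sketch that reuses exactly the three substitutions shown above; the fix_repos function name is hypothetical, and the directory argument lets you try it on copies of the repo files first:

```shell
#!/usr/bin/env bash
# Apply the three sed substitutions from this guide to all five repo files.
# Usage: fix_repos [repo_dir]   (defaults to /etc/yum.repos.d)
fix_repos() {
  local dir=${1:-/etc/yum.repos.d} repo file
  local repos=(
    CentOS-Advanced-Virtualization.repo
    CentOS-Ceph-Pacific.repo
    CentOS-Messaging-rabbitmq.repo
    CentOS-NFV-OpenvSwitch.repo
    CentOS-Storage-common.repo
  )
  for repo in "${repos[@]}"; do
    file=$dir/$repo
    if [[ ! -f "$file" ]]; then
      echo "skip (not found): $file" >&2
      continue
    fi
    # 1) comment out mirrorlist, 2) enable baseurl on vault.centos.org,
    # 3) swap the vault host and the first two path parts for the Tencent mirror.
    sed -i \
      -e 's|mirrorlist=|#mirrorlist=|g' \
      -e 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' \
      -e 's|http://vault.centos.org/[^/]*/[^/]*/|https://mirrors.cloud.tencent.com/centos/8.5.2111/|g' \
      "$file" || return 1
  done
}
```

Run fix_repos with no argument to edit /etc/yum.repos.d in place. Running it a second time adds an extra # in front of the mirrorlist lines, which is harmless but untidy.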

After configuring one node, copy its yum configuration to the other nodes.

Step 3. Install the base components.

yum config-manager --set-enabled powertools

yum install -y python3-openstackclient

yum install -y openstack-selinux

3

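Configuring NTP Time Synchronization

Note: Perform the following steps on node-1.

Step 1. Install the package.

yum install chrony -y

Step 2. Make node-1 the NTP server, using the Aliyun NTP server as its upstream source, and configure the client address range allowed to query it.

vim /etc/chrony.conf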

pool ntp1.aliyun.com iburst

pool node-1 iburst

# 10.10.10.0/24 is the private NIC subnet

allow 10.10.10.0/24

Step 3. Enable and start the service.

systemctl enable chronyd

systemctl start chronyd

Note: Perform the following steps on node-2 and node-3.

Step 1. Install the package.

yum install chrony -y

Step 2. Modify /etc/chrony.conf.

echo > /etc/chrony.conf

# Use node-1 as the time server

cat <<EOF | tee /etc/chrony.conf
server node-1 iburst
EOF

Step 3. Enable and start the service.

systemctl enable chronyd

systemctl start chronyd

4

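Configuring the Database

Note: High availability is not configured in this deployment, so this only needs to be configured on node-1.

Step 1. Install the packages.

yum install mariadb mariadb-server python3-PyMySQL -y

Step 2. Create the file /etc/my.cnf.d/openstack.cnf: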

[mysqld]

bind-address = 10.10.10.4

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

Step 3. Enable and start the service.

systemctl enable mariadb.service

systemctl start mariadb.service

Step 4. Secure the database installation: press Enter at each prompt, and enter a password where prompted (the password used here is awcloud).

mysql_secure_installation

5

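Configuring the Message Queue

Note: High availability is not configured in this deployment, so this only needs to be configured on node-1.

Step 1. Install the package.

yum install rabbitmq-server -y

Step 2. Enable and start the rabbitmq-server service.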

systemctl enable rabbitmq-server.service

systemctl start rabbitmq-server.service

Step 3. Add the openstack user and set its permissions.

rabbitmqctl add_user openstack awcloud

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

6

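Configuring Memcached

Note: High availability is not configured in this deployment, so this only needs to be configured on node-1.

Step 1. Install the packages.

yum install memcached python3-memcached -y

Step 2. Modify the memcached configuration file.

vim /etc/sysconfig/memcached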

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS="-l 127.0.0.1,::1,node-1"

Step 3. Enable and start the service.

systemctl enable memcached.service

systemctl start memcached.service

7

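Deploying the Identity Service (Keystone)

Note: Perform the following steps on node-1.

Step 1. Configure the database: create the keystone database and grant the keystone user access to it (the password here is awcloud).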

mysql -u root -pawcloud

MariaDB [(none)]> CREATE DATABASE keystone;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'awcloud';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'awcloud';
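The same CREATE DATABASE and GRANT pattern repeats later for Glance, Placement, Nova, Neutron, and Cinder. A sketch of a hypothetical generator, gen_db_sql, that prints the statements for one database user and any number of databases; IF NOT EXISTS is an addition so that re-running does not fail on an existing database:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print CREATE DATABASE / GRANT statements for a service.
# Usage: gen_db_sql <db_user> <password> <database>...
gen_db_sql() {
  local user=$1 pass=$2 db host
  shift 2
  for db in "$@"; do
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    # Grant both local and remote access, as in this guide.
    for host in localhost '%'; do
      printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
        "$db" "$user" "$host" "$pass"
    done
  done
}
```

For example, gen_db_sql keystone awcloud keystone | mysql -u root -pawcloud, or, for Nova's three databases, gen_db_sql nova awcloud nova_api nova nova_cell0 | mysql -u root -pawcloud.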

Step 2. Install the RPM packages.

yum install openstack-keystone httpd python3-mod_wsgi -y

Step 3. Modify the keystone configuration file.

vim /etc/keystone/keystone.conf

[database]

connection = mysql+pymysql://keystone:awcloud@node-1/keystone

[token]

provider = fernet
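Most steps in this guide edit INI-style files by hand in vim. Tools such as crudini are often used to script these edits; the following dependency-free awk sketch does the same for a single key. The ini_set helper is hypothetical: it replaces an uncommented key in the given section, adds the key at the end of that section if it is missing, and appends the section if the file has none. Values containing backslashes are not supported, because awk -v interprets escape sequences.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "key = value" in [section] of an INI-style file.
# Usage: ini_set <file> <section> <key> <value>
ini_set() {
  local file=$1 section=$2 key=$3 value=$4 tmp
  [[ -f "$file" ]] || : > "$file" || return 1
  tmp=$(mktemp) || return 1
  awk -v sec="[$section]" -v key="$key" -v val="$value" '
    function emit() { print key " = " val; done = 1 }
    {
      t = $0
      gsub(/^[ \t]+|[ \t]+$/, "", t)        # trimmed copy used for matching
      if (t ~ /^\[.*\]$/) {                 # section header
        if (insec && !done) emit()          # key missing: add at end of section
        insec = (t == sec)
        if (insec) seen = 1
        print; next
      }
      if (insec && !done && t ~ /=/) {
        k = t
        sub(/[ \t]*=.*/, "", k)
        if (k == key) { emit(); next }      # replace the existing value
      }
      print
    }
    END {
      if (insec && !done) emit()            # target section was the last one
      else if (!seen) { print ""; print sec; emit() }
    }' "$file" > "$tmp" && cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return $rc
}
```

For example, ini_set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:awcloud@node-1/keystone followed by ini_set /etc/keystone/keystone.conf token provider fernet. Writing the result back with cat, rather than moving the temporary file into place, keeps the original file's ownership and permissions.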

Step 4. Populate the keystone database.

su -s /bin/sh -c "keystone-manage db_sync" keystone

Step 5. Initialize the Fernet key repositories.

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Step 6. Bootstrap the Identity service.

keystone-manage bootstrap --bootstrap-password awcloud \
  --bootstrap-admin-url http://node-1:5000/v3/ \
  --bootstrap-internal-url http://node-1:5000/v3/ \
  --bootstrap-public-url http://node-1:5000/v3/ \
  --bootstrap-region-id RegionOne

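Step 7. Configure the httpd service.

Edit /etc/httpd/conf/httpd.conf and set:

ServerName node-1

Create a symbolic link:

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Step 8. Enable and start the service.

systemctl enable httpd.service

systemctl start httpd.service

Step 9. Create the keystone_admin file with the following content: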

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=awcloud

export OS_AUTH_URL=http://node-1:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
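The file can also be written non-interactively. A minimal sketch; write_admin_rc is a hypothetical helper, and restricting the file to mode 600 is an addition, since the file stores the admin password in plain text:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the keystone_admin credentials file used in this guide.
# Usage: write_admin_rc <path> <password> [auth_url]
write_admin_rc() {
  local path=$1 pass=$2 url=${3:-http://node-1:5000/v3}
  cat > "$path" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=$pass
export OS_AUTH_URL=$url
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
  # The file contains a plaintext password: owner read/write only.
  chmod 600 "$path"
}
```

For example, write_admin_rc keystone_admin awcloud, then source keystone_admin as in Step 10.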

Step 10. Create the service project.

source keystone_admin

openstack project create --domain default --description "Service Project" service

8

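Deploying the Image Service (Glance)

Note: Perform the following steps on node-1.

Step 1. Configure the database: create the glance database and grant the glance user access to it (the password here is awcloud).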

mysql -u root -pawcloud

MariaDB [(none)]> CREATE DATABASE glance;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'awcloud';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'awcloud';

Step 2. Create the Glance service credentials and API endpoints.

source keystone_admin

openstack user create --domain default --password awcloud glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

openstack endpoint create --region RegionOne image public http://node-1:9292

openstack endpoint create --region RegionOne image internal http://node-1:9292

openstack endpoint create --region RegionOne image admin http://node-1:9292
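Glance, Placement, Nova, Neutron, and Cinder each repeat this user, role, service, and three-endpoint sequence. A sketch of a hypothetical wrapper, register_service, that issues the same openstack commands; it assumes keystone_admin has been sourced, uses RegionOne as in this guide, and is not idempotent (re-running fails once the user exists):

```shell
#!/usr/bin/env bash
# Hypothetical wrapper for the per-service Keystone registration in this guide.
# Usage: register_service <user> <password> <service_name> <service_type> <description> <url>
register_service() {
  local user=$1 pass=$2 name=$3 type=$4 desc=$5 url=$6 iface
  openstack user create --domain default --password "$pass" "$user" || return 1
  openstack role add --project service --user "$user" admin || return 1
  openstack service create --name "$name" --description "$desc" "$type" || return 1
  # One endpoint per interface, all pointing at the same URL.
  for iface in public internal admin; do
    openstack endpoint create --region RegionOne "$type" "$iface" "$url" || return 1
  done
}
```

For example, register_service glance awcloud glance image "OpenStack Image" http://node-1:9292, or register_service nova awcloud nova compute "OpenStack Compute" http://node-1:8774/v2.1.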

Step 3. Install the package.

yum install -y openstack-glance

Step 4. Modify the glance configuration file.

vim /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:awcloud@node-1/glance

[keystone_authtoken]

www_authenticate_uri = http://node-1:5000

auth_url = http://node-1:5000

memcached_servers = node-1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = awcloud

[paste_deploy]

flavor = keystone

# Store images locally by default for now; change this later if integrating with Ceph

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

Step 5. Populate the database.

su -s /bin/sh -c "glance-manage db_sync" glance

Step 6. Enable and start the service.

systemctl enable openstack-glance-api.service

systemctl start openstack-glance-api.service

9

Deploying the Placement Service

Note: Perform the following steps on node-1.

Step 1. Configure the database.

mysql -u root -pawcloud

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'awcloud';

Step 2. Create the Placement service credentials and API endpoints.

source keystone_admin

openstack user create --domain default --password awcloud placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://node-1:8778

openstack endpoint create --region RegionOne placement internal http://node-1:8778

openstack endpoint create --region RegionOne placement admin http://node-1:8778

Step 3. Install the RPM package.

yum install openstack-placement-api -y

Step 4. Configure the placement service.

vim /etc/placement/placement.conf

[placement_database]

connection = mysql+pymysql://placement:awcloud@node-1/placement

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://node-1:5000/v3

memcached_servers = node-1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = awcloud

Step 5. Populate the database.

su -s /bin/sh -c "placement-manage db sync" placement

Step 6. Modify the placement configuration file /etc/httpd/conf.d/00-placement-api.conf to avoid a permission error that prevents the nova-compute service from accessing the Placement API.
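The step above does not show the exact edit. On RDO-based installations, a commonly used fix is to grant Apache access to /usr/bin, where the placement-api WSGI script is installed. The following is a sketch under that assumption, not necessarily the change the author made; fix_placement_httpd is a hypothetical helper that appends the <Directory> block only once:

```shell
#!/usr/bin/env bash
# Hypothetical helper: allow Apache to serve the placement-api WSGI script
# from /usr/bin by appending a <Directory> block (skipped if already present).
# Usage: fix_placement_httpd [conf_file]
fix_placement_httpd() {
  local conf=${1:-/etc/httpd/conf.d/00-placement-api.conf}
  [[ -f "$conf" ]] || { echo "not found: $conf" >&2; return 1; }
  if grep -q '<Directory /usr/bin>' "$conf"; then
    return 0
  fi
  cat >> "$conf" <<'EOF'

<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>
EOF
}
```

After running it, restart httpd as in Step 7 so the change takes effect.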

Step 7. Restart the httpd service.

systemctl restart httpd

10

Deploying the Compute Service (Nova)

Note: Perform the following steps on node-1.

Step 1. Configure the database.

mysql -u root -pawcloud

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'awcloud';

Step 2. Create the Nova service credentials and API endpoints.

source keystone_admin

openstack user create --domain default --password awcloud nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://node-1:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://node-1:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://node-1:8774/v2.1

Step 3. Install the RPM packages.

yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

Step 4. Configure the Nova service (/etc/nova/nova.conf).

[DEFAULT]

use_syslog = True

verbose = True

syslog_log_facility=LOG_USER

log_dir=/var/log/nova

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:awcloud@node-1:5672/

# Replace my_ip to match your environment

my_ip = 10.10.10.4

[api_database]

connection = mysql+pymysql://nova:awcloud@node-1/nova_api

[database]

connection = mysql+pymysql://nova:awcloud@node-1/nova

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://node-1:5000/

auth_url = http://node-1:5000/

memcached_servers = node-1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = awcloud

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

api_servers = http://node-1:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://node-1:5000/v3

username = placement

password = awcloud

[neutron]

auth_url = http://node-1:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = awcloud

service_metadata_proxy = true

metadata_proxy_shared_secret = awcloud

Step 5. Populate the nova-api database.

su -s /bin/sh -c "nova-manage api_db sync" nova

Step 6. Register the cell0 database.

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Step 7. Create the cell1 cell.

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

Step 8. Populate the Nova database.

su -s /bin/sh -c "nova-manage db sync" nova

Step 9. Verify that cell0 and cell1 are registered correctly.

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+--------------------------------------+---------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+--------------------------------------+---------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@node-1/nova_cell0 | False |

| cell1 | 470941ea-37ae-4f50-a865-150ca07f7144 | rabbit://openstack:****@node-1:5672/ | mysql+pymysql://nova:****@node-1/nova | False |

+-------+--------------------------------------+--------------------------------------+---------------------------------------------+----------+
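Instead of reading the table by eye, the check can be scripted. A minimal sketch; check_cells is a hypothetical helper that parses the name column of the list_cells table from standard input and fails if any requested cell is missing:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every named cell appears in the
# "nova-manage cell_v2 list_cells" table read from stdin.
# Usage: ... list_cells ... | check_cells cell0 cell1
check_cells() {
  local names want missing=0
  # Second "|"-separated field of each table row, trimmed; border lines have no "|".
  names=$(awk -F'|' 'NF > 2 { n = $2; gsub(/^[ \t]+|[ \t]+$/, "", n); print n }')
  for want in "$@"; do
    if ! grep -qxF "$want" <<< "$names"; then
      echo "missing cell: $want" >&2
      missing=1
    fi
  done
  return $missing
}
```

For example: su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova | check_cells cell0 cell1.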

Step 10. Enable and start the Nova services.

systemctl enable openstack-nova-api.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

Note: Perform the following steps on the compute nodes (node-2 and node-3).

Step 1. Install the nova-compute package.

yum install openstack-nova-compute bridge-utils -y

Step 2. Modify the configuration file.

vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:awcloud@node-1

# On each compute node, only my_ip needs to be changed

my_ip = 10.10.10.5

compute_driver=libvirt.LibvirtDriver

state_path=/var/lib/nova

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://node-1:5000/

auth_url = http://node-1:5000/

memcached_servers = node-1:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = awcloud

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

# 192.168.133.92 is the IP of node-1's second (external) NIC, used later for direct VNC access to VMs

novncproxy_base_url = http://192.168.133.92:6080/vnc_auto.html

[glance]

api_servers = http://node-1:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://node-1:5000/v3

username = placement

password = awcloud

[neutron]

auth_url = http://node-1:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = awcloud

service_metadata_proxy = true

metadata_proxy_shared_secret = awcloud

[libvirt]

# When the compute node is itself a virtual machine, use qemu

virt_type=qemu
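On each compute node, only my_ip (and later local_ip in openvswitch_agent.ini) needs to change. A sketch of a hypothetical lookup, node_ip, that reads the address from the /etc/hosts entries configured earlier, so the value can be derived from the hostname instead of being typed by hand:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the IP for a hostname from a hosts file.
# Defaults to this machine's short hostname and /etc/hosts.
# Usage: node_ip [hostname] [hosts_file]
node_ip() {
  local name=${1:-$(hostname -s)} hosts=${2:-/etc/hosts}
  awk -v n="$name" '
    !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; found = 1; exit } }
    END { exit !found }' "$hosts"
}
```

With the /etc/hosts entries from section 1.3, running node_ip on node-2 prints 10.10.10.5; it exits non-zero if the name has no entry.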

Step 3. Enable and start the services.

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service
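If a compute node does not show up later, the first thing to confirm is that both services actually started. A minimal sketch; check_services is a hypothetical helper built on systemctl is-active --quiet, which exits non-zero when a unit is not active:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given systemd units are not active.
# Returns non-zero if any unit is inactive.
check_services() {
  local svc rc=0
  for svc in "$@"; do
    if systemctl is-active --quiet "$svc"; then
      echo "active:     $svc"
    else
      echo "NOT active: $svc (see: journalctl -u $svc)"
      rc=1
    fi
  done
  return $rc
}
```

For example: check_services libvirtd openstack-nova-compute.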

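Step 4. Register the compute nodes in the cell database (run on node-1).

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Alternatively, set the following in the nova configuration file and restart the scheduler service, so that new compute nodes are discovered automatically every 300 seconds:

[scheduler]

discover_hosts_in_cells_interval = 300

Verifying the Services

Check the status of the Nova services (run on node-1):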

openstack compute service list

openstack hypervisor list

If openstack hypervisor list shows nothing, check whether the openstack-nova-compute and libvirtd services are running properly.

11

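Deploying the Networking Service (Neutron)

Note: The official documentation uses Linux bridge as the L2 mechanism driver; this deployment uses Open vSwitch instead.

Note: Perform the following steps on node-1:

Step 1. Configure the database.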

mysql -u root -pawcloud

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'awcloud';

Step 2. Create the Neutron service credentials and API endpoints.

source keystone_admin

openstack user create --domain default --password awcloud neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://node-1:9696

openstack endpoint create --region RegionOne network internal http://node-1:9696

openstack endpoint create --region RegionOne network admin http://node-1:9696

Step 3. Install the packages.

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch -y

Step 4. Modify /etc/neutron/neutron.conf.

vim /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

transport_url = rabbit://openstack:awcloud@node-1

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[database]

connection = mysql+pymysql://neutron:awcloud@node-1/neutron

[keystone_authtoken]

www_authenticate_uri = http://node-1:5000

auth_url = http://node-1:5000

memcached_servers = node-1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = awcloud

[nova]

auth_url = http://node-1:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = awcloud

Step 5. Configure the ML2 plug-in.

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = *

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

Step 6. Configure the Neutron OVS agent.

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

[ovs]

bridge_mappings = physnet1:br_ex

# Replace local_ip to match your environment

local_ip = 10.10.10.4

[agent]

tunnel_types = vxlan

l2_population = True

[securitygroup]

firewall_driver = iptables_hybrid

Step 7. Configure the L3 agent.

vim /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = openvswitch

external_network_bridge =

Step 8. Configure the DHCP agent.

vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Step 9. Configure the metadata agent.

vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = node-1

metadata_proxy_shared_secret = awcloud

Step 10. Create the symbolic link.

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Step 11. Populate the database.

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Step 12. Check whether the OVS service is running.

systemctl status openvswitch

# If it is not running, enable and start it

systemctl enable openvswitch

systemctl start openvswitch

Step 13. Add the OVS bridge.

ovs-vsctl add-br br_ex

Step 14. Enable and start the services.

systemctl enable neutron-server.service neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl start neutron-server.service neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

Note: Perform the following steps on the compute nodes (node-2 and node-3).

Step 1. Install the packages.

yum install openstack-neutron-openvswitch ipset -y

Step 2. Modify the configuration file.

vim /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:awcloud@node-1

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://node-1:5000

auth_url = http://node-1:5000

memcached_servers = node-1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = awcloud

Step 3. Configure the Neutron Open vSwitch agent.

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

[ovs]

bridge_mappings = physnet1:br_ex

# Replace local_ip to match your environment

local_ip = 10.10.10.5

[agent]

tunnel_types = vxlan

l2_population = True

[securitygroup]

firewall_driver = iptables_hybrid

Step 4. Check whether the OVS service is running.

systemctl status openvswitch

# If it is not running, enable and start it

systemctl enable openvswitch

systemctl start openvswitch

Step 5. Add the OVS bridge.

ovs-vsctl add-br br_ex

Step 6. Enable and start the Neutron Open vSwitch agent service.

systemctl enable neutron-openvswitch-agent

systemctl start neutron-openvswitch-agent

12

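Deploying the Block Storage Service (Cinder)

This installation uses the LVM driver as the storage back end; it does not integrate with Ceph.

Note: Perform the following steps on node-1:

Step 1. Configure the database.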

mysql -u root -pawcloud

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'awcloud';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'awcloud';

Step 2. Create the Cinder service credentials and API endpoints.

source keystone_admin

openstack user create --domain default --password awcloud cinder

openstack role add --project service --user cinder admin

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

openstack endpoint create --region RegionOne volumev3 public http://node-1:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 internal http://node-1:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne volumev3 admin http://node-1:8776/v3/%\(project_id\)s
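The backslashes in %\(project_id\)s only stop the shell from parsing the parentheses; Keystone stores the literal template %(project_id)s and substitutes the caller's project ID when it builds the service catalog. A small sketch showing that single quotes produce the same string:

```shell
#!/usr/bin/env bash
# The backslash-escaped and single-quoted forms yield the same literal
# URL template that is passed to "openstack endpoint create".
escaped=http://node-1:8776/v3/%\(project_id\)s
quoted='http://node-1:8776/v3/%(project_id)s'
printf '%s\n' "$escaped" "$quoted"
```

Both lines print http://node-1:8776/v3/%(project_id)s, so openstack endpoint create --region RegionOne volumev3 public "$quoted" is equivalent to the commands above.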

Step 3. Install the package.

yum install openstack-cinder -y

Step 4. Modify the configuration file.

vim /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:awcloud@node-1

auth_strategy = keystone

my_ip = 10.10.10.4

glance_api_servers = http://node-1:9292

[database]

connection = mysql+pymysql://cinder:awcloud@node-1/cinder

[keystone_authtoken]

www_authenticate_uri = http://node-1:5000

auth_url = http://node-1:5000

memcached_servers = node-1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = awcloud

Step 5. Populate the Cinder database.

su -s /bin/sh -c "cinder-manage db sync" cinder

Step 6. Configure Nova to use Cinder.

vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

Step 7. Restart the openstack-nova-api service.

systemctl restart openstack-nova-api

Step 8. Enable and start the services.

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

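Note: Perform the following steps on node-2 and node-3:

Step 1. In the cloud management platform, attach a 50 to 100 GB data disk to each of the node-2 and node-3 VMs.

Step 2. Install the packages.

yum install lvm2 device-mapper-persistent-data -y

Step 3. Create a physical volume (PV) on the newly attached data disk (for example /dev/vdb).

pvcreate /dev/vdb

Step 4. Create the volume group (VG).

vgcreate cinder-volumes /dev/vdb

Step 5. Install the packages.

yum install openstack-cinder targetcli -y

Step 6. Modify the cinder configuration file.

vim /etc/cinder/cinder.conf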

[DEFAULT]

transport_url = rabbit://openstack:awcloud@node-1

auth_strategy = keystone

# Change to match your environment

my_ip = 10.10.10.5

glance_api_servers = http://node-1:9292

enabled_backends = lvm

[database]

connection = mysql+pymysql://cinder:awcloud@node-1/cinder

[keystone_authtoken]

www_authenticate_uri = http://node-1:5000

auth_url = http://node-1:5000

memcached_servers = node-1:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = awcloud

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = lioadm

Step 7. Enable and start cinder-volume.

systemctl enable openstack-cinder-volume.service target.service

systemctl start openstack-cinder-volume.service target.service

13

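Deploying the Horizon Dashboard (Optional)

Note: This component only needs to be installed on node-1.

Step 1. Install the package.

yum install openstack-dashboard -y

Step 2. Modify the Horizon configuration file.

vim /etc/openstack-dashboard/local_settings

# Set to the IP of node-1's external NIC.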

OPENSTACK_HOST = "192.168.133.92"

ALLOWED_HOSTS = ['*']

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'node-1:11211',

}

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

TIME_ZONE = "Asia/Shanghai"

# Only the settings above need to be modified; the following are new additions.

# Enable multi-domain support.

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

# Configure the API versions.

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

# Configure the default domain.

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

# Configure the default role.

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

WEBROOT = '/dashboard/'

Step 3. Regenerate the dashboard Apache configuration.

cd /usr/share/openstack-dashboard

python3 manage.py make_web_conf --apache > /etc/httpd/conf.d/openstack-dashboard.conf

Step 4. Create the symbolic link.

ln -s /etc/openstack-dashboard /usr/share/openstack-dashboard/openstack_dashboard/conf

Step 5. Modify /etc/httpd/conf.d/openstack-dashboard.conf as follows:

<VirtualHost *:80>
    ServerAdmin webmaster@openstack.org
    ServerName openstack_dashboard
    DocumentRoot /usr/share/openstack-dashboard/
    LogLevel warn
    ErrorLog /var/log/httpd/openstack_dashboard-error.log
    CustomLog /var/log/httpd/openstack_dashboard-access.log combined
    WSGIScriptReloading On
    WSGIDaemonProcess openstack_dashboard_website processes=10
    WSGIProcessGroup openstack_dashboard_website
    WSGIApplicationGroup %{GLOBAL}
    WSGIPassAuthorization On
    #WSGIScriptAlias / /usr/share/openstack-dashboard/openstack_dashboard/wsgi.py
    #WSGIScriptAlias /dashboard /usr/share/openstack-dashboard/openstack_dashboard/wsgi/django.wsgi
    WSGIScriptAlias /dashboard /usr/share/openstack-dashboard/openstack_dashboard/wsgi.py
    <Location "/">
        Require all granted
    </Location>
    #Alias /static /usr/share/openstack-dashboard/static
    Alias /dashboard/static /usr/share/openstack-dashboard/static
    <Location "/static">
        SetHandler None
    </Location>
</VirtualHost>

Step 6. Restart the httpd and memcached services.

systemctl restart httpd.service && systemctl restart memcached.service

Step 7. Access the dashboard at:

http://192.168.133.92/dashboard

14

Follow-up Operations

Step 1. Adjust the number of nova-api and neutron-server workers (run on node-1).

For nova (/etc/nova/nova.conf), add the following to the [DEFAULT] section:

[DEFAULT]

metadata_workers = 2

osapi_compute_workers = 2

Then run:

systemctl restart openstack-nova-api

For neutron (/etc/neutron/neutron.conf), add the following to the [DEFAULT] section:

[DEFAULT]

api_workers = 2

rpc_workers = 2

Then run:

systemctl restart neutron-server

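Step 2. Modify the MTU of Neutron networks.

Because the VxLAN networks are created inside OpenStack VMs whose NICs already have an MTU of 1450, the Neutron path MTU must be lowered accordingly so that the nested VMs can communicate properly.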

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

path_mtu=1450
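Neutron derives a tenant network's MTU by subtracting the encapsulation overhead from path_mtu; for VXLAN over IPv4 that overhead is 50 bytes, so path_mtu=1450 yields VXLAN networks with an MTU of 1400. A small arithmetic sketch (vxlan_mtu is a hypothetical name). Note that neutron-server must be restarted for the ml2_conf.ini change to take effect.

```shell
#!/usr/bin/env bash
# Tenant network MTU for VXLAN over IPv4, derived from path_mtu.
# Overhead inside the outer IP MTU: 20 (outer IPv4) + 8 (UDP)
# + 8 (VXLAN) + 14 (inner Ethernet header) = 50 bytes.
vxlan_mtu() {
  local path_mtu=$1 overhead=50
  echo $(( path_mtu - overhead ))
}

echo "path_mtu=1450 -> VXLAN network MTU $(vxlan_mtu 1450)"
echo "path_mtu=1500 -> VXLAN network MTU $(vxlan_mtu 1500)"
```

This prints 1400 for the nested setup in this guide and 1450 for a standard 1500-byte underlay.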

Step 3. Download an image (run on node-1). If the download fails, download it to your local machine first and then upload it to node-1.

Reference:

https://docs.openstack.org/glance/yoga/install/verify.html

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

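Upload the image:

glance image-create --name "cirros" --file cirros-0.4.0-x86_64-disk.img --disk-format qcow2 \
  --container-format bare --visibility=public

Step 4. Create a flavor with 128 MB of memory, a 1 GB disk, and 1 vCPU.

nova flavor-create tinysmall 1 128 1 1

Step 5. Create VMs either from the Horizon dashboard or from the command line, and verify booting a VM both from an image and from a volume.

Step 6. External network operations.

Reconfigure the network on node-1: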

vim /etc/sysconfig/network-scripts/ifcfg-br_ex

DEVICE=br_ex

BOOTPROTO=static

ONBOOT=yes

IPADDR=192.168.131.7

NETMASK=255.255.240.0

GATEWAY=192.168.128.1

TYPE=OVSBridge

DEVICETYPE=ovs

Change the external-network NIC configuration to:

vim /etc/sysconfig/network-scripts/ifcfg-ens10

DEVICE=ens10

ONBOOT=yes

TYPE=OVSPort

DEVICETYPE=ovs

OVS_BRIDGE=br_ex

Set the MAC address of br_ex to the MAC address of ens10:

ovs-vsctl set bridge br_ex other-config:hwaddr=<MAC address of ens10>

After making these changes, restart the neutron-server and neutron-openvswitch-agent services.

systemctl restart neutron-server

systemctl restart neutron-openvswitch-agent

Create the external network:

openstack network create --provider-physical-network physnet1 --provider-network-type flat --external public

# To avoid conflicts, confirm that the addresses 192.168.133.50 to 192.168.133.100 are not already in use

openstack subnet create public-subnet --network public --subnet-range 192.168.128.0/20 --allocation-pool start=192.168.133.50,end=192.168.133.100 --gateway 192.168.128.1 --no-dhcp
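Before creating the subnet, it can help to sanity-check its parameters: the allocation pool should lie inside 192.168.128.0/20, and the gateway should not fall inside the pool. A pure-bash sketch with hypothetical helper names (ip2int, in_cidr, check_pool); it does not check whether the addresses are already in use, which still has to be confirmed on the network itself:

```shell
#!/usr/bin/env bash
# Convert dotted-quad IPv4 to an integer.
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr <ip> <network/prefix>: true if ip is inside the network.
in_cidr() {
  local ip net prefix mask
  ip=$(ip2int "$1")
  net=$(ip2int "${2%/*}")
  prefix=${2#*/}
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# check_pool <cidr> <pool_start> <pool_end> <gateway>
check_pool() {
  local cidr=$1 start=$2 end=$3 gw=$4
  in_cidr "$start" "$cidr" && in_cidr "$end" "$cidr" || { echo "pool outside $cidr" >&2; return 1; }
  (( $(ip2int "$start") <= $(ip2int "$end") )) || { echo "pool start > end" >&2; return 1; }
  if (( $(ip2int "$gw") >= $(ip2int "$start") && $(ip2int "$gw") <= $(ip2int "$end") )); then
    echo "gateway $gw is inside the pool" >&2
    return 1
  fi
}
```

For the values above: check_pool 192.168.128.0/20 192.168.133.50 192.168.133.100 192.168.128.1 && echo ok.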

Create a router, set its gateway, and attach the private network subnet:

neutron router-create test-router

neutron router-gateway-set test-router public

neutron net-create net1

openstack subnet create net1_subnet --network net1 --subnet-range 10.10.10.0/24

neutron router-interface-add test-router net1_subnet

Create a VM on net1 and verify that it can reach 114.114.114.114 from inside the VM.
