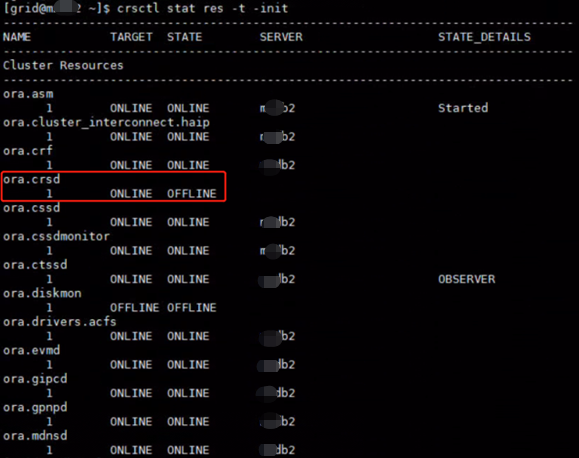

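Problem Overview

Around 12:00 a.m. on June 6, 2023, we received a report that the crsd resource on node 2 of a customer's database cluster could not start.

Note: the node 1 host could not boot because of a hardware fault; that problem is outside the scope of this case.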

Problem Analysis

(1) GI alert log analysis

The GI alert log shows that the CRSD resource failed to start with error CRS-1006: The OCR location +ocr is inaccessible.

```text
2023-06-05 11:51:23.702:
[ohasd(54330)]CRS-2112:The OLR service started on node xxxdb2.
2023-06-05 11:51:23.713:
[ohasd(54330)]CRS-1301:Oracle High Availability Service started on node xxxdb2.
2023-06-05 11:51:23.713:
[ohasd(54330)]CRS-8017:location: /etc/oracle/lastgasp has 2 reboot advisory log files, 0 were announced and 0 errors occurred
2023-06-05 11:51:27.208:
[/u01/app/11.2.0/grid/bin/orarootagent.bin(54373)]CRS-2302:Cannot get GPnP profile. Error CLSGPNP_NO_DAEMON (GPNPD daemon is not running).
2023-06-05 11:51:31.642:
[gpnpd(54467)]CRS-2328:GPNPD started on node xxxdb2.
2023-06-05 11:51:34.106:
[cssd(54536)]CRS-1713:CSSD daemon is started in clustered mode
2023-06-05 11:51:35.905:
[ohasd(54330)]CRS-2767:Resource state recovery not attempted for 'ora.diskmon' as its target state is OFFLINE
2023-06-05 11:51:35.905:
[ohasd(54330)]CRS-2769:Unable to failover resource 'ora.diskmon'.
2023-06-05 11:51:54.120:
[cssd(54536)]CRS-1707:Lease acquisition for node xxxdb2 number 2 completed
2023-06-05 11:51:55.396:
[cssd(54536)]CRS-1605:CSSD voting file is online: /dev/asm-disk3; details in /u01/app/11.2.0/grid/log/xxxdb2/cssd/ocssd.log.
2023-06-05 11:51:55.403:
[cssd(54536)]CRS-1605:CSSD voting file is online: /dev/asm-disk1; details in /u01/app/11.2.0/grid/log/xxxdb2/cssd/ocssd.log.
2023-06-05 11:51:55.416:
[cssd(54536)]CRS-1605:CSSD voting file is online: /dev/asm-disk2; details in /u01/app/11.2.0/grid/log/xxxdb2/cssd/ocssd.log.
2023-06-05 11:52:04.545:
[cssd(54536)]CRS-1601:CSSD Reconfiguration complete. Active nodes are xxxdb2 .
2023-06-05 11:52:06.114:
[ctssd(54780)]CRS-2403:The Cluster Time Synchronization Service on host xxxdb2 is in observer mode.
2023-06-05 11:52:06.386:
[ctssd(54780)]CRS-2407:The new Cluster Time Synchronization Service reference node is host xxxdb2.
2023-06-05 11:52:06.388:
[ctssd(54780)]CRS-2401:The Cluster Time Synchronization Service started on host xxxdb2.
2023-06-05 11:52:08.109:
[ohasd(54330)]CRS-2767:Resource state recovery not attempted for 'ora.diskmon' as its target state is OFFLINE
2023-06-05 11:52:08.109:
[ohasd(54330)]CRS-2769:Unable to failover resource 'ora.diskmon'.
[client(54852)]CRS-10001:05-Jun-23 11:52 ACFS-9391: Checking for existing ADVM/ACFS installation.
[client(54857)]CRS-10001:05-Jun-23 11:52 ACFS-9392: Validating ADVM/ACFS installation files for operating system.
[client(54859)]CRS-10001:05-Jun-23 11:52 ACFS-9393: Verifying ASM Administrator setup.
[client(54862)]CRS-10001:05-Jun-23 11:52 ACFS-9308: Loading installed ADVM/ACFS drivers.
[client(54865)]CRS-10001:05-Jun-23 11:52 ACFS-9154: Loading 'oracleoks.ko' driver.
[client(54893)]CRS-10001:05-Jun-23 11:52 ACFS-9154: Loading 'oracleadvm.ko' driver.
[client(54962)]CRS-10001:05-Jun-23 11:52 ACFS-9154: Loading 'oracleacfs.ko' driver.
[client(55017)]CRS-10001:05-Jun-23 11:52 ACFS-9327: Verifying ADVM/ACFS devices.
[client(55020)]CRS-10001:05-Jun-23 11:52 ACFS-9156: Detecting control device '/dev/asm/.asm_ctl_spec'.
[client(55024)]CRS-10001:05-Jun-23 11:52 ACFS-9156: Detecting control device '/dev/ofsctl'.
[client(55029)]CRS-10001:05-Jun-23 11:52 ACFS-9322: completed
2023-06-05 11:52:33.053:
[crsd(55199)]CRS-1012:The OCR service started on node xxxdb2.
2023-06-05 11:52:33.191:
[evmd(54804)]CRS-1401:EVMD started on node xxxdb2.
2023-06-05 11:52:34.347:
[crsd(55199)]CRS-1201:CRSD started on node xxxdb2.
2023-06-05 11:52:34.795:
[crsd(55199)]CRS-1006:The OCR location +ocr is inaccessible. Details in /u01/app/11.2.0/grid/log/xxxdb2/crsd/crsd.log.
2023-06-05 11:52:44.957:
[ohasd(54330)]CRS-2765:Resource 'ora.crsd' has failed on server 'xxxdb2'.
```
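To pull the relevant entries out of a long alert log, a small filter can help. The following is a minimal sketch, assuming the 11.2 alert log layout shown above (a timestamp line ending in a colon, followed by the message line, possibly with blank lines in between); `alert_errors` is a hypothetical helper name, not an Oracle tool. On 11.2 the GI alert log is normally `$GRID_HOME/log/<hostname>/alert<hostname>.log`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print alert log messages matching an extended regex,
# each prefixed with the timestamp line that precedes it.
# Usage: alert_errors PATTERN [FILE]   (reads stdin when FILE is omitted)
alert_errors() {
  local pattern=$1 file=${2:--}
  awk -v pat="$pattern" '
    # Timestamp line, e.g. "2023-06-05 11:52:34.795:" -> remember it without the colon
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] [0-9:.]+:$/ {
      ts = substr($0, 1, length($0) - 1)
      next
    }
    # Skip blank separator lines
    NF == 0 { next }
    # Message line matching the requested CRS codes
    $0 ~ pat { print ts " " $0 }
  ' "$file"
}
```

For example, `alert_errors 'CRS-1006|CRS-2765' /u01/app/11.2.0/grid/log/xxxdb2/alertxxxdb2.log` would list the OCR access failure and the resulting `ora.crsd` failure with their timestamps.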

(2) CRSD log analysis

The CRSD log shows two errors: an OCR device header check failure and an error accessing physical storage.

```text
2023-06-05 11:52:34.363: [ CRSPE][622757632]{2:38983:2} PE MASTER NAME: xxxdb2
2023-06-05 11:52:34.363: [ CRSPE][622757632]{2:38983:2} Starting to read configuration
2023-06-05 11:52:34.373: [ CRSPE][622757632]{2:38983:2} Reading (2) servers
2023-06-05 11:52:34.376: [ CRSPE][622757632]{2:38983:2} DM: set global config version to: 73
2023-06-05 11:52:34.376: [ CRSPE][622757632]{2:38983:2} DM: set pool freeze timeout to: 60000
2023-06-05 11:52:34.376: [ CRSPE][622757632]{2:38983:2} DM: Set event seq number to: 15400000
2023-06-05 11:52:34.376: [ CRSPE][622757632]{2:38983:2} DM: Set threshold event seq number to: 15480000
2023-06-05 11:52:34.376: [ CRSPE][622757632]{2:38983:2} Sent request to write event sequence number 15500000 to repository
2023-06-05 11:52:34.385: [ CRSPE][622757632]{2:38983:2} Wrote new event sequence to repository
2023-06-05 11:52:34.437: [ CRSPE][622757632]{2:38983:2} Reading (16) types
2023-06-05 11:52:34.446: [ CRSPE][622757632]{2:38983:2} Reading (16) resources
2023-06-05 11:52:34.447: [ CRSPE][622757632]{2:38983:2} Reading (3) server pools
2023-06-05 11:52:34.474: [ OCRRAW][624858880]proprior: Header check from OCR device 0 offset 7421952 failed (26). ----> OCR device header check failed
2023-06-05 11:52:34.474: [ OCRRAW][624858880]proprior: Retrying buffer read from another mirror for disk group [+ocr] for block at offset [7421952]
2023-06-05 11:52:34.475: [ OCRASM][624858880]proprasmres: Block from mirror #1 is same as buffer passed
2023-06-05 11:52:34.476: [ OCRASM][624858880]proprasmres: Block from mirror #2 is same as buffer passed
2023-06-05 11:52:34.487: [ OCRASM][624858880]proprasmres: Total 2 mirrors detected
2023-06-05 11:52:34.487: [ OCRASM][624858880]proprasmres: Block from mirror #1 same as block from mirror #2
2023-06-05 11:52:34.487: [ OCRASM][624858880]proprasmres: 2 mirrors found in this disk group.
2023-06-05 11:52:34.487: [ OCRASM][624858880]proprasmres: The buffer passed matches the buffers read from all 2 mirrors.
2023-06-05 11:52:34.487: [ OCRASM][624858880]proprasmres: Need to invoke checkdg. The buffer passed matches with buffer from all mirrors.
2023-06-05 11:52:34.794: [ OCRASM][624858880]proprasmres: Successfully returned after calling Check DG.
2023-06-05 11:52:34.795: [ OCRRAW][624858880]proprior: ASM re silver returned [22]
2023-06-05 11:52:34.795: [ OCRRAW][624858880]proprseterror: Error in accessing physical storage [26] Marking context invalid. ----> physical storage access error
2023-06-05 11:52:34.795: [ OCRRAW][624858880]proprdc: backend_ctx->prop_ctx_tag=PROPCTXT
2023-06-05 11:52:34.795: [ OCRRAW][624858880]proprdc: backend_ctx->prop_valid=0
2023-06-05 11:52:34.795: [ OCRRAW][624858880]proprdc: backend_ctx->prop_boot_mode=1
```
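Both OCRRAW errors carry the same code (26), and the header check also reports the failing offset. A sketch that extracts those fields from a crsd.log, assuming the message wording shown above (other versions may word it differently); `ocr_raw_errors` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize OCRRAW header-check and storage-access errors.
# Usage: ocr_raw_errors [FILE]   (reads stdin when FILE is omitted)
ocr_raw_errors() {
  local file=${1:--}
  awk '
    /Header check from OCR device [0-9]+ offset [0-9]+ failed/ {
      # "offset 7421952" -> 7421952
      match($0, /offset [0-9]+/); off = substr($0, RSTART + 7, RLENGTH - 7)
      # "failed (26)" -> 26
      match($0, /failed \([0-9]+\)/); code = substr($0, RSTART + 8, RLENGTH - 9)
      # The first 23 characters are the log timestamp
      print substr($0, 1, 23) " header_check_failed offset=" off " code=" code
    }
    /Error in accessing physical storage \[[0-9]+\]/ {
      # "storage [26]" -> 26
      match($0, /storage \[[0-9]+\]/); code = substr($0, RSTART + 9, RLENGTH - 10)
      print substr($0, 1, 23) " physical_storage_error code=" code
    }
  ' "$file"
}
```

Run against the excerpt above, it prints `2023-06-05 11:52:34.474 header_check_failed offset=7421952 code=26` followed by `2023-06-05 11:52:34.795 physical_storage_error code=26`.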

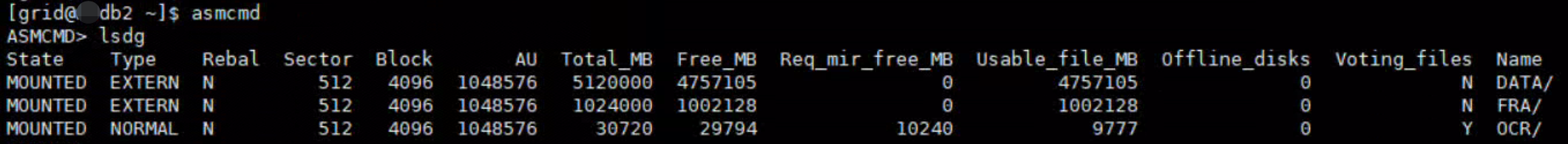

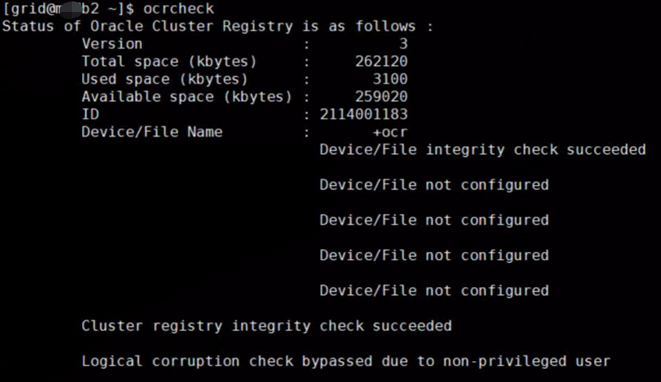

(3) OCR checks

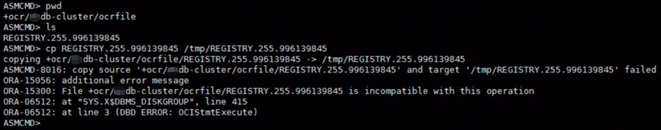

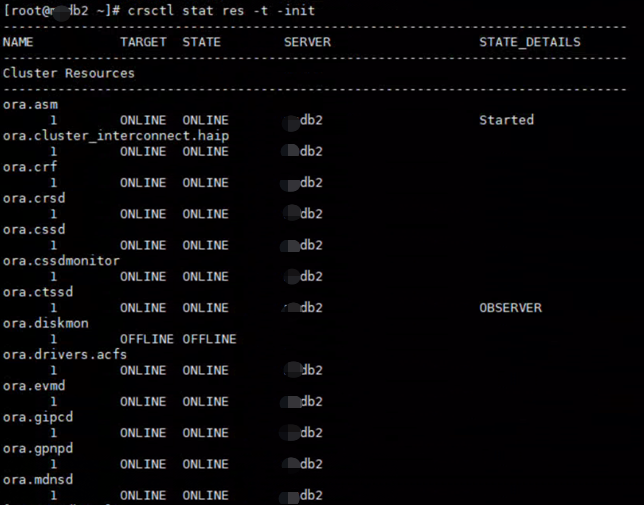

The OCR disk group checked out normal.

Running ocrcheck also returned a normal result.
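When this check is scripted, the `ocrcheck` output can be tested for its integrity line. A minimal sketch, assuming the 11.2 wording `Cluster registry integrity check succeeded`; `ocrcheck_ok` is a hypothetical helper. As this case shows, a passing result here did not by itself rule out a problem with CRSD reading the OCR.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read ocrcheck output on stdin and succeed only if the
# integrity check is reported as succeeded.
# Usage: ocrcheck | ocrcheck_ok
ocrcheck_ok() {
  if grep -q 'Cluster registry integrity check succeeded'; then
    echo "ocrcheck: integrity check succeeded"
  else
    echo "ocrcheck: integrity check NOT reported as succeeded" >&2
    return 1
  fi
}
```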

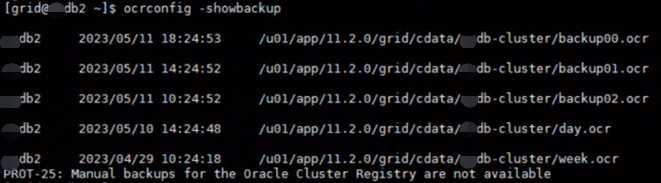

The OCR backup information shows no automatic backups after May 11. Going further back in the CRS log, the errors had already started at 2023-05-11 20:34.
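Oracle Clusterware normally takes an automatic OCR backup every four hours, so a backup directory whose newest file is weeks old, as here, is an early warning sign. A sketch of that check, assuming the automatic backups are the `*.ocr` files in the directory that holds `backup00.ocr` in the restore step (`/u01/app/11.2.0/grid/cdata/mzdb-cluster` on this system), and keeping in mind that they are written on whichever node was the CRSD master. The function name and the 480-minute default are assumptions; `ocrconfig -showbackup` remains the authoritative listing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail if no *.ocr file in DIR is newer than MAX_AGE_MIN.
# Default threshold: 480 minutes, i.e. two missed 4-hour backup cycles (assumed).
# Usage: latest_ocr_backup_age_ok DIR [MAX_AGE_MIN]
latest_ocr_backup_age_ok() {
  local dir=$1 max_age_min=${2:-480}
  local recent
  # -mmin -N matches files modified less than N minutes ago
  recent=$(find "$dir" -maxdepth 1 -type f -name '*.ocr' -mmin "-$max_age_min" | head -n 1)
  if [ -n "$recent" ]; then
    echo "recent OCR backup: $recent"
  else
    echo "no OCR backup newer than $max_age_min minutes in $dir" >&2
    return 1
  fi
}
```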

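Root Cause

Based on the analysis above, all disk groups are mounted normally, yet CRSD reports errors when accessing the OCR, so the problem is most likely a corrupted OCR file. Restoring the OCR from a backup can be attempted as a fix.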

Solution

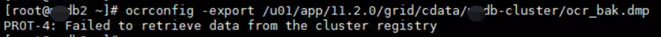

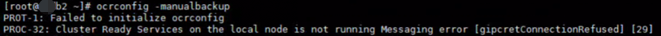

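(1) Back up the OCR

Before restoring, we tried several methods to back up the current OCR, and all of them failed. After confirming with the customer that no recent changes had been made to the cluster, we skipped the backup step.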

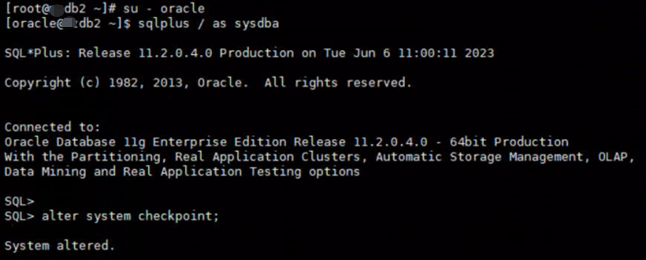

(2) Restore the OCR

Start the cluster in exclusive mode on one node, then restore the OCR.

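```shell
# Switch to root (assumes the Grid home's bin directory is on root's PATH)
su - root

# Start the stack in exclusive mode without starting CRSD
crsctl start crs -excl -nocrs

# Restore the OCR from an automatic backup
ocrconfig -restore /u01/app/11.2.0/grid/cdata/mzdb-cluster/backup00.ocr
```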
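The whole sequence, including the restart in step (3), can be kept in a script that prints each command by default and only executes it when `DRY_RUN=0`. This is a sketch under stated assumptions: `GRID_HOME` and the backup path default to the values used in this article, `run` and `restore_ocr` are hypothetical names, and the initial `crsctl stop crs -f` reflects the usual requirement that the stack be down (on every node) before starting in exclusive mode. Check it against the MOS note before running it for real.

```shell
#!/usr/bin/env bash
# Defaults taken from this article; override GRID_HOME for other systems.
GRID_HOME=${GRID_HOME:-/u01/app/11.2.0/grid}

# Print the command in dry-run mode (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical wrapper for steps (2) and (3); must be run as root.
restore_ocr() {
  local backup=${1:-$GRID_HOME/cdata/mzdb-cluster/backup00.ocr}
  # Make sure the stack is down on this node; ignore failure if already stopped.
  run "$GRID_HOME/bin/crsctl" stop crs -f || true
  # Exclusive mode without CRSD, as in step (2).
  run "$GRID_HOME/bin/crsctl" start crs -excl -nocrs || return 1
  run "$GRID_HOME/bin/ocrconfig" -restore "$backup" || return 1
  # Verify the restored OCR before leaving exclusive mode.
  run "$GRID_HOME/bin/ocrcheck" || return 1
  # Step (3): restart the stack normally.
  run "$GRID_HOME/bin/crsctl" stop crs -f || return 1
  run "$GRID_HOME/bin/crsctl" start crs
}
```

Calling `restore_ocr` with no arguments only prints the six commands; `DRY_RUN=0 restore_ocr` executes them.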

(3) Restart CRS

After CRS was restarted, CRSD started normally and the database returned to normal operation.
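To confirm the result from a script, `crsctl check crs` prints one `CRS-NNNN:` line per stack component. A minimal sketch, assuming the 11.2 format in which healthy components end with `is online`; `crs_all_online` is a hypothetical helper, and `crsctl stat res -t` is still the place to confirm that individual resources such as the database are back ONLINE.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `crsctl check crs` output on stdin and succeed only
# if at least one CRS-NNNN line is present and every such line ends "is online".
# Usage: crsctl check crs | crs_all_online
crs_all_online() {
  awk '
    /^CRS-[0-9]+:/ {
      n++
      if ($0 !~ / is online$/) { bad++; print "not online: " $0 > "/dev/stderr" }
    }
    END { if (n == 0 || bad > 0) exit 1 }
  '
}
```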

References

How to Restore ASM Based OCR when OCR backup is located in ASM diskgroup? (Doc ID 2569847.1)


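[Copyright Notice] This article is original content by a 墨天轮 (modb.pro) user. Any reprint must credit the source (墨天轮), the article link, the author, and other basic information; otherwise the author and 墨天轮 reserve the right to pursue liability. If you find content on 墨天轮 suspected of plagiarism or infringement, please report it by email to contact@modb.pro with supporting evidence; once verified, 墨天轮 will remove the content immediately.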