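Preface

I got careless today and broke one node of an etcd cluster. It turned out to be a good chance to test etcd disaster recovery, so here is a record of the whole process.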

How I broke it

First, how the carelessness happened.

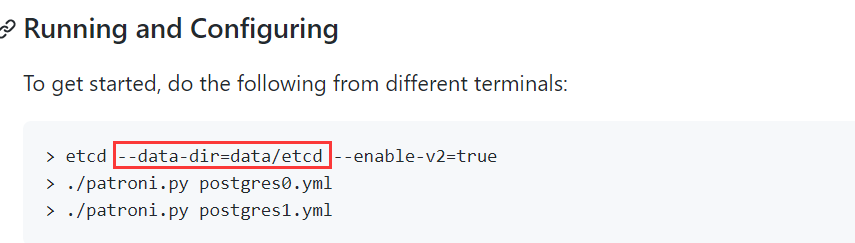

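I had a healthy three-node cluster. I stopped one of the nodes, intending to add the data-dir parameter to customize the storage path. I added the parameter, but when I started the node again I forgot to change initial-cluster-state from new to existing. As covered earlier, this parameter is new when bootstrapping a new cluster and existing when the node joins a cluster that already exists.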

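Every restart after that failed with the error "tocommit(563911) is out of range [lastIndex(0)]. Was the raft log corrupted, truncated, or lost?". The lastIndex(0) suggests the node came up with an empty raft log, which is consistent with it starting from the new, empty data-dir while the rest of the cluster had already committed up to index 563911.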

    2021-05-17 15:22:37.433035 I | rafthttp: started HTTP pipelining with peer 2bf12de0ba471171
    2021-05-17 15:22:37.433048 E | rafthttp: failed to find member 2bf12de0ba471171 in cluster 9285a17724e8e389
    2021-05-17 15:22:37.433065 E | rafthttp: failed to find member 2bf12de0ba471171 in cluster 9285a17724e8e389
    raft2021/05/17 15:22:37 INFO: 146e82bb772bb629 [term: 0] received a MsgHeartbeat message with higher term from e1de69976edadc68 [term: 165]
    raft2021/05/17 15:22:37 INFO: 146e82bb772bb629 became follower at term 165
    raft2021/05/17 15:22:37 tocommit(563911) is out of range [lastIndex(0)]. Was the raft log corrupted, truncated, or lost?
    panic: tocommit(563911) is out of range [lastIndex(0)]. Was the raft log corrupted, truncated, or lost?
    goroutine 63 [running]:
    log.(*Logger).Panicf(0xc0000d6a00, 0x10e525e, 0x5d, 0xc000568340, 0x2, 0x2)
        /usr/local/go/src/log/log.go:219 +0xc1

The node simply would not start.

How to fix it

Here I followed the Disaster recovery section of the official documentation.

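Our cluster has three nodes and the other two are healthy, so the cluster is still operating normally. According to the documentation, a cluster of n members can tolerate at most (n-1)/2 permanent failures, rounded down. A three-node cluster can therefore survive the loss of one node, but not two. Once more than (n-1)/2 members have failed, quorum is inevitably lost; without quorum the cluster cannot reach consensus and so cannot accept any further updates. That is why I think it is better to run etcd with five nodes. Our distributed MySQL setup, for example, also uses a five-node ZooKeeper ensemble.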
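The arithmetic behind that rule can be sketched in a few lines of shell. The function names quorum and fault_tolerance are my own, purely for illustration; the formulas are just the majority rule and the (n-1)/2 bound quoted above, using integer division.

```shell
# quorum N: number of members needed for a majority in an N-member cluster.
quorum() { echo $(( $1 / 2 + 1 )); }

# fault_tolerance N: members that can fail permanently while quorum survives,
# i.e. (N-1)/2 rounded down (shell arithmetic is integer division).
fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 3 4 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
# members=3 quorum=2 tolerated_failures=1
# members=4 quorum=3 tolerated_failures=1
# members=5 quorum=3 tolerated_failures=2
```

Note that going from three to four members raises the quorum without raising the number of tolerated failures, which is why odd cluster sizes are the usual choice.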

1. Fetch a snapshot of the current cluster from one of the other members.

    [etcd@133e0e204e203 ~]$ ETCDCTL_API=3 etcdctl --endpoints=http://133.0.204.204:2380 snapshot save snapshot.db
    {"level":"info","ts":1621239833.8479724,"caller":"snapshot/v3_snapshot.go:119","msg":"created temporary db file","path":"snapshot.db.part"}
    {"level":"info","ts":"2021-05-17T16:23:53.850+0800","caller":"clientv3/maintenance.go:200","msg":"opened snapshot stream; downloading"}
    {"level":"info","ts":1621239833.8501642,"caller":"snapshot/v3_snapshot.go:127","msg":"fetching snapshot","endpoint":"http://133.0.204.204:2380"}
    {"level":"info","ts":"2021-05-17T16:23:53.855+0800","caller":"clientv3/maintenance.go:208","msg":"completed snapshot read; closing"}
    {"level":"info","ts":1621239833.916909,"caller":"snapshot/v3_snapshot.go:142","msg":"fetched snapshot","endpoint":"http://133.0.204.204:2380","size":"20 kB","took":0.068859986}
    {"level":"info","ts":1621239833.9169798,"caller":"snapshot/v3_snapshot.go:152","msg":"saved","path":"snapshot.db"}
    Snapshot saved at snapshot.db

Note that --endpoints must be given exactly one address here; passing several is rejected:

    [etcd@133e0e204e203 ~]$ ETCDCTL_API=3 etcdctl --endpoints=http://133.0.204.204:2380,http://133.0.204.205:2380 snapshot save snapshot.db
    Error: snapshot must be requested to one selected node, not multiple [http://133.0.204.204:2380 http://133.0.204.205:2380]
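If the snapshot is taken from a script, the single-endpoint rule can be checked up front. This is only a sketch: require_single_endpoint is a hypothetical helper of mine, not part of etcdctl, and it merely looks for a comma in the endpoint string.

```shell
# require_single_endpoint ENDPOINTS: succeed only if exactly one endpoint
# is given (non-empty and without a comma-separated list).
require_single_endpoint() {
  case "$1" in
    "")
      echo "error: no endpoint given" >&2
      return 1 ;;
    *,*)
      echo "error: snapshot save needs exactly one endpoint, got: $1" >&2
      return 1 ;;
  esac
  return 0
}

# Usage (commented out so the sketch has no side effects):
#   ep=http://133.0.204.204:2380
#   require_single_endpoint "$ep" &&
#     ETCDCTL_API=3 etcdctl --endpoints="$ep" snapshot save snapshot.db
```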

2. Restore the snapshot

With the snapshot file in hand, we can restore from it.

    [etcd@133e0e204e203 ~]$ ETCDCTL_API=3 etcdctl snapshot restore snapshot.db \
    > --name infra0 \
    > --initial-cluster infra0=http://133.0.204.203:2380,infra1=http://133.0.204.204:2380,infra2=http://133.0.204.205:2380 \
    > --initial-cluster-token etcd-cluster-1 \
    > --initial-advertise-peer-urls http://133.0.204.203:2380
    Error: data-dir "infra0.etcd" exists

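The restore fails because the original data directory already exists. At this point the old directory has to be deleted or moved somewhere else.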
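Moving the directory aside is the more cautious of the two options, since the old data stays available if something goes wrong. A minimal sketch, assuming a timestamped .bak suffix is acceptable; move_aside is a hypothetical helper name, not an etcd tool.

```shell
# move_aside DIR: rename DIR to DIR.bak.<timestamp> and print the new path.
# Does nothing (and prints nothing) if DIR does not exist.
move_aside() {
  ma_dir=${1%/}                      # drop one trailing slash, e.g. "infra0.etcd/"
  [ -d "$ma_dir" ] || return 0
  ma_backup="$ma_dir.bak.$(date +%Y%m%d%H%M%S)"
  if [ -e "$ma_backup" ]; then       # refuse to overwrite or nest into an old backup
    echo "error: $ma_backup already exists" >&2
    return 1
  fi
  mv -- "$ma_dir" "$ma_backup" && echo "$ma_backup"
}

# Usage (commented out so the sketch has no side effects):
#   move_aside infra0.etcd/
```

Once the restored node has rejoined and the cluster looks healthy, the backup directory can be removed by hand.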

    [etcd@133e0e204e203 ~]$ rm -rf infra0.etcd/
    [etcd@133e0e204e203 ~]$ ETCDCTL_API=3 etcdctl snapshot restore snapshot.db \
      --name infra0 \
      --initial-cluster infra0=http://133.0.204.203:2380,infra1=http://133.0.204.204:2380,infra2=http://133.0.204.205:2380 \
      --initial-cluster-token etcd-cluster-1 \
      --initial-advertise-peer-urls http://133.0.204.203:2380
    {"level":"info","ts":1621239883.873639,"caller":"snapshot/v3_snapshot.go:296","msg":"restoring snapshot","path":"snapshot.db","wal-dir":"infra0.etcd/member/wal","data-dir":"infra0.etcd","snap-dir":"infra0.etcd/member/snap"}
    {"level":"info","ts":1621239883.95011,"caller":"mvcc/kvstore.go:380","msg":"restored last compact revision","meta-bucket-name":"meta","meta-bucket-name-key":"finishedCompactRev","restored-compact-revision":10023}
    {"level":"info","ts":1621239883.9542909,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"9285a17724e8e389","local-member-id":"0","added-peer-id":"146e82bb772bb629","added-peer-peer-urls":["http://133.0.204.203:2380"]}
    {"level":"info","ts":1621239883.9544687,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"9285a17724e8e389","local-member-id":"0","added-peer-id":"2bf12de0ba471171","added-peer-peer-urls":["http://133.0.204.204:2380"]}
    {"level":"info","ts":1621239883.9544845,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"9285a17724e8e389","local-member-id":"0","added-peer-id":"e1de69976edadc68","added-peer-peer-urls":["http://133.0.204.205:2380"]}
    {"level":"info","ts":1621239883.9815514,"caller":"snapshot/v3_snapshot.go:309","msg":"restored snapshot","path":"snapshot.db","wal-dir":"infra0.etcd/member/wal","data-dir":"infra0.etcd","snap-dir":"infra0.etcd/member/snap"}

Running restore again now rebuilds the entire data directory. This step is much like a restore database operation in a relational database.

3. Restart the failed node

The last step is to start the failed node, this time with initial-cluster-state set to existing.

    [etcd@133e0e204e203 ~]$ nohup etcd --name infra0 --initial-advertise-peer-urls http://133.0.204.203:2380 \
      --listen-peer-urls http://133.0.204.203:2380 \
      --listen-client-urls http://133.0.204.203:2379,http://127.0.0.1:2379 \
      --advertise-client-urls http://133.0.204.203:2379 \
      --initial-cluster-token etcd-cluster-1 \
      --initial-cluster infra0=http://133.0.204.203:2380,infra1=http://133.0.204.204:2380,infra2=http://133.0.204.205:2380 \
      --initial-cluster-state existing --enable-v2=true &
    [etcd@133e0e204e203 ~]$ etcdctl endpoint --endpoints=http://133.0.204.203:2380,http://133.0.204.204:2380,http://133.0.204.205:2380 status --write-out=table
    +---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    |         ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | http://133.0.204.203:2380 | 146e82bb772bb629 | 3.4.15  | 20 kB   | false     | false      |       166 |     581706 |             581706 |        |
    | http://133.0.204.204:2380 | 2bf12de0ba471171 | 3.4.15  | 20 kB   | true      | false      |       166 |     581706 |             581706 |        |
    | http://133.0.204.205:2380 | e1de69976edadc68 | 3.4.15  | 20 kB   | false     | false      |       166 |     581706 |             581706 |        |
    +---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
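A quick sanity check on that table is that exactly one member reports itself as leader. The sketch below counts the rows whose IS LEADER column is true. It assumes the column order shown in the output above (etcd 3.4.15, IS LEADER as the fifth column); count_leaders is a hypothetical helper name, and other etcdctl versions may lay the table out differently.

```shell
# count_leaders: read `etcdctl endpoint status --write-out=table` output on
# stdin and print how many rows have IS LEADER == true.
# With '|' as the separator, field 1 is empty and IS LEADER is field 6.
count_leaders() {
  awk -F'|' '{ v = $6; gsub(/[ \t]/, "", v); if (v == "true") n++ } END { print n + 0 }'
}

# Usage (commented out so the sketch does not need a running cluster):
#   etcdctl endpoint --endpoints=http://133.0.204.203:2380,http://133.0.204.204:2380,http://133.0.204.205:2380 \
#     status --write-out=table | count_leaders
```

For the table above this would print 1, since only 133.0.204.204 has IS LEADER set to true.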

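The failed node restarted successfully and rejoined the cluster. This is of course the recovery procedure for etcd v3, which is fairly convenient. With v2 you would need to use the etcdctl backup command for backup and recovery instead.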

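Afterword

The recovery process itself is fairly simple, although I do not know how fast it would be with a large data set. I still recommend running etcd with five nodes; three is a bit risky. If a three-node cluster suffers two unrecoverable machine failures at the same time, it cannot regain quorum and continue operating.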