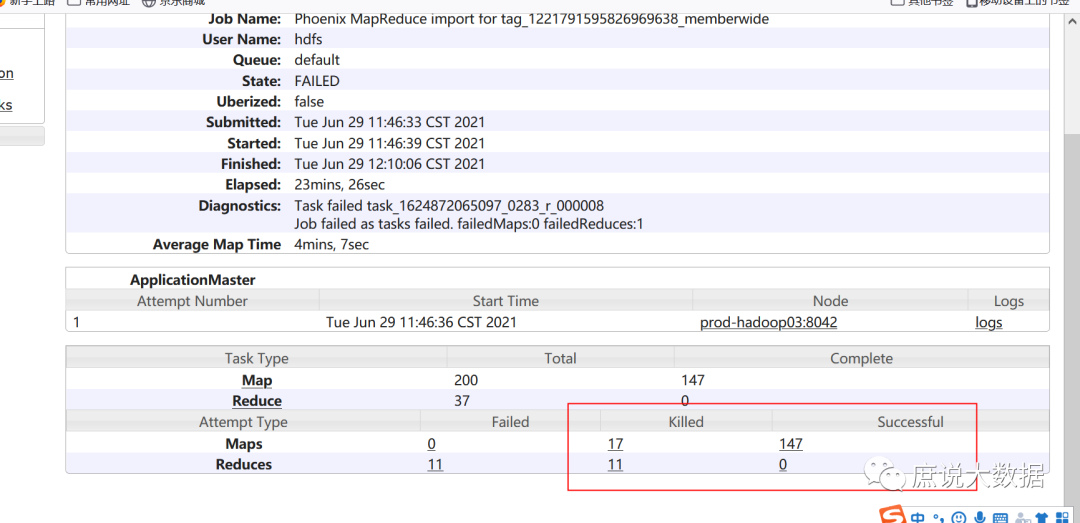

Log analysis and diagnosis

Solutions

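1. Disable the NodeManager memory checks (yarn.nodemanager.pmem-check-enabled / yarn.nodemanager.vmem-check-enabled). This is not recommended.
2. Increase mapreduce.map.memory.mb or mapreduce.reduce.memory.mb. When you do, also adjust mapreduce.map.java.opts and mapreduce.reduce.java.opts.
3. Moderately increase yarn.nodemanager.vmem-pmem-ratio.

Application log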

```text
[pid=27948,containerID=container_e195_1624872065097_0283_01_000087] is running beyond physical memory limits. Current usage: 4.1 GB of 4 GB physical memory used; 9.5 GB of 8.4 GB virtual memory used. Killing container.
Dump of the process-tree for container_e195_1624872065097_0283_01_000087 :
|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
|- 27948 27947 27948 27948 (bash) 0 0 116006912 75 /bin/bash -c /usr/java/jdk1.8.0_131/bin/java -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Dhdp.version=2.6.5.0-292 -Xmx7577m -Djava.io.tmpdir=/data/hadoop/yarn/local/usercache/hdfs/appcache/application_1624872065097_0283/container_e195_1624872065097_0283_01_000087/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/data/hadoop/yarn/log/application_1624872065097_0283/container_e195_1624872065097_0283_01_000087 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 172.19.51.13 39739 attempt_1624872065097_0283_r_000005_0 214404767416407 1>/data/hadoop/yarn/log/application_1624872065097_0283/container_e195_1624872065097_0283_01_000087/stdout 2>/data/hadoop/yarn/log/application_1624872065097_0283/container_e195_1624872065097_0283_01_000087/stderr
|- 27964 27948 27948 27948 (java) 2384 573 10085986304 1084906 /usr/java/jdk1.8.0_131/bin/java -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Dhdp.version=2.6.5.0-292 -Xmx7577m -Djava.io.tmpdir=/data/hadoop/yarn/local/usercache/hdfs/appcache/application_1624872065097_0283/container_e195_1624872065097_0283_01_000087/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/data/hadoop/yarn/log/application_1624872065097_0283/container_e195_1624872065097_0283_01_000087 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 172.19.51.13 39739 attempt_1624872065097_0283_r_000005_0 214404767416407

Container killed on request. Exit code is 143
Container exited with a non-zero exit code 143.
```
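You can reproduce the usage figures in this message from the process-tree dump. VMEM_USAGE is already in bytes, while RSSMEM_USAGE is in pages. The sketch below sums both columns over the rows above and also extracts the -Xmx flag. The command lines are abridged with "...". The 4 KiB page size is an assumption about the host; the log does not state it.

```python
import re
from typing import Optional, Tuple

PAGE_SIZE = 4096  # assumption: 4 KiB pages (typical on x86-64 Linux; not stated in the log)
GIB = 1024 ** 3   # the "GB" in YARN's message is 1024-based

# Process-tree dump from the first log; command lines abridged with "...".
DUMP = """\
|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
|- 27948 27947 27948 27948 (bash) 0 0 116006912 75 /bin/bash -c /usr/java/jdk1.8.0_131/bin/java -server ... -Xmx7577m ...
|- 27964 27948 27948 27948 (java) 2384 573 10085986304 1084906 /usr/java/jdk1.8.0_131/bin/java -server ... -Xmx7577m ...
"""

# Data row: PID PPID PGRPID SESSID (NAME) UTIME STIME VMEM_BYTES RSS_PAGES CMD...
ROW = re.compile(
    r"^\|- \d+ \d+ \d+ \d+ \([^)]+\) \d+ \d+ (?P<vmem>\d+) (?P<rss_pages>\d+) "
)


def tree_usage(dump: str) -> Tuple[float, float]:
    """Return (physical GiB, virtual GiB) summed over every process row."""
    rss_bytes = vmem_bytes = 0
    for line in dump.splitlines():
        m = ROW.match(line)
        if m:  # the header row has no numeric PID, so it never matches
            rss_bytes += int(m["rss_pages"]) * PAGE_SIZE
            vmem_bytes += int(m["vmem"])
    return rss_bytes / GIB, vmem_bytes / GIB


def max_heap_mb(dump: str) -> Optional[int]:
    """Return the first -Xmx<N>m value found in the command lines, in MB."""
    m = re.search(r"-Xmx(\d+)m", dump)
    return int(m.group(1)) if m else None


if __name__ == "__main__":
    physical, virtual = tree_usage(DUMP)
    print(f"physical {physical:.1f} GB, virtual {virtual:.1f} GB, -Xmx {max_heap_mb(DUMP)} MB")
```

Under the 4 KiB assumption this gives 4.1 GB physical and 9.5 GB virtual, which matches the log. It also shows that the JVM heap ceiling (7577 MB) is almost twice the 4 GB container.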

Verification steps

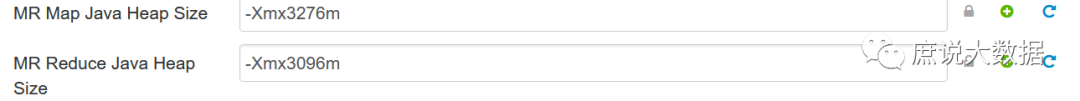

1. Check the cluster's mapreduce.map.memory.mb and mapreduce.reduce.memory.mb. Both basically meet the current requirements.

2. Change yarn.nodemanager.vmem-pmem-ratio to 2.5 (the default is 2.1) and rerun the job. The container is killed again:

```text
Container [pid=5893,containerID=container_e196_1624946421771_0010_01_000105] is running beyond physical memory limits. Current usage: 4.0 GB of 4 GB physical memory used; 9.5 GB of 10 GB virtual memory used. Killing container.
Dump of the process-tree for container_e196_1624946421771_0010_01_000105 :
|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE
|- 5908 5893 5893 5893 (java) 3027 645 10076332032 1049242 /usr/java/jdk1.8.0_131/bin/java -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Dhdp.version=2.6.5.0-292 -Xmx7577m -Djava.io.tmpdir=/data/hadoop/yarn/local/usercache/hdfs/appcache/application_1624946421771_0010/container_e196_1624946421771_0010_01_000105/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/data/hadoop/yarn/log/application_1624946421771_0010/container_e196_1624946421771_0010_01_000105 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 172.19.51.14 34299 attempt_1624946421771_0010_r_000008_0 215504279044201
|- 5893 5891 5893 5893 (bash) 0 0 116006912 51 /bin/bash -c /usr/java/jdk1.8.0_131/bin/java -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Dhdp.version=2.6.5.0-292 -Xmx7577m -Djava.io.tmpdir=/data/hadoop/yarn/local/usercache/hdfs/appcache/application_1624946421771_0010/container_e196_1624946421771_0010_01_000105/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/data/hadoop/yarn/log/application_1624946421771_0010/container_e196_1624946421771_0010_01_000105 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Dyarn.app.mapreduce.shuffle.logger=INFO,shuffleCLA -Dyarn.app.mapreduce.shuffle.logfile=syslog.shuffle -Dyarn.app.mapreduce.shuffle.log.filesize=0 -Dyarn.app.mapreduce.shuffle.log.backups=0 org.apache.hadoop.mapred.YarnChild 172.19.51.14 34299 attempt_1624946421771_0010_r_000008_0 215504279044201 1>/data/hadoop/yarn/log/application_1624946421771_0010/container_e196_1624946421771_0010_01_000105/stdout 2>/data/hadoop/yarn/log/application_1624946421771_0010/container_e196_1624946421771_0010_01_000105/stderr

Container killed on request. Exit code is 143
Container exited with a non-zero exit code 143.
```
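Two figures in this second log can be checked by hand. The virtual-memory limit is the container's physical limit multiplied by yarn.nodemanager.vmem-pmem-ratio, which is why it rose from 8.4 GB to 10 GB. The physical figure is the RSSMEM_USAGE(PAGES) column summed over the process tree and converted to bytes. Assuming 4 KiB pages (typical on x86-64 Linux, but not stated in the log), the rounded "4.0 GB" is actually slightly above the 4 GB limit:

$$
\begin{aligned}
\text{vmem limit}_{\text{run 1}} &= 4\ \text{GB} \times 2.1 = 8.4\ \text{GB} \\
\text{vmem limit}_{\text{run 2}} &= 4\ \text{GB} \times 2.5 = 10\ \text{GB} \\
\text{RSS} &= (1\,049\,242 + 51)\ \text{pages} \times 4096\ \text{B} = 4\,297\,904\,128\ \text{B} \\
\text{pmem limit} &= 4 \times 1024^{3}\ \text{B} = 4\,294\,967\,296\ \text{B}
\end{aligned}
$$

The process tree is therefore about 2.8 MiB (2,936,832 B) over its physical limit. Raising vmem-pmem-ratio only moves the virtual limit, so it cannot prevent this kill.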

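The job still failed. The virtual memory was now within its limit (9.5 GB of 10 GB), and the physical usage appeared to sit right at its limit (4.0 GB of 4 GB). Yet the message still says the container is running beyond its physical memory limit. I was stuck on this at first, since everything seemed to meet the requirements, until I found the explanation on Stack Overflow. Each map or reduce task runs in its own JVM inside a container, so the JVM heap (-Xmx) must be set lower than the map/reduce memory (mapreduce.map.memory.mb / mapreduce.reduce.memory.mb). That keeps the JVM within the container memory YARN allocated. In the logs above, the reducer JVM is started with -Xmx7577m inside a 4 GB container, so its heap is allowed to grow well past the container limit.

3. Set mapreduce.map.java.opts and mapreduce.reduce.java.opts so that each -Xmx is below the corresponding *.memory.mb value.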
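Step 3 amounts to keeping each task's heap smaller than its container. The sketch below checks a *.java.opts string against the matching *.memory.mb value and suggests a smaller -Xmx. The 0.8 fraction is a commonly cited rule of thumb and an assumption here, not a value from the article or a Hadoop default. The helper names are hypothetical.

```python
import re
from typing import Optional

# Assumption: keep -Xmx at roughly 80% of the container; not taken from the article.
HEAP_FRACTION = 0.8


def suggested_xmx_mb(container_mb: int, fraction: float = HEAP_FRACTION) -> int:
    """-Xmx (MB) that leaves headroom for non-heap JVM memory (metaspace, threads, buffers)."""
    return int(container_mb * fraction)


def parse_xmx_mb(java_opts: str) -> Optional[int]:
    """Extract -Xmx from a java.opts string in MB; only m/g suffixes are handled."""
    m = re.search(r"-Xmx(\d+)([mMgG])", java_opts)
    if m is None:
        return None
    value = int(m.group(1))
    return value * 1024 if m.group(2).lower() == "g" else value


def check_task_memory(memory_mb: int, java_opts: str) -> str:
    """Compare a task's *.java.opts heap with its *.memory.mb container size."""
    xmx = parse_xmx_mb(java_opts)
    if xmx is None:
        return "no -Xmx found"
    limit = suggested_xmx_mb(memory_mb)
    if xmx > limit:
        return f"-Xmx{xmx}m is too large for a {memory_mb} MB container; try -Xmx{limit}m"
    return "ok"


if __name__ == "__main__":
    # Values from the logs: 4 GB reducer containers started with -Xmx7577m.
    print(check_task_memory(4096, "-server -XX:NewRatio=8 -Xmx7577m"))
```

For the reducer in the logs this suggests -Xmx3276m. That value would go into mapreduce.reduce.java.opts, and the same check applies to mapreduce.map.java.opts for mappers. The rest of the container covers JVM memory outside the heap, which also counts toward the physical (RSS) limit that YARN enforces.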

References

- https://blog.csdn.net/MrZhangBaby/article/details/101292617
- https://stackoverflow.com/questions/21005643/container-is-running-beyond-memory-limits

This article was reposted from 庶说大数据.