《LLM As DBA》论文_清华大学_Xuanhe Zhou.pdf

免费下载

LLM As DBA

Xuanhe Zhou

Tsinghua University

Beijing, China

zhouxuan19@mails.tsinghua.edu.cn

Guoliang Li

Tsinghua University

Beijing, China

liguoliang@tsinghua.edu.cn

Zhiyuan Liu

Tsinghua University

Beijing, China

liuzy@tsinghua.edu.cn

ABSTRACT

Database administrators (DBAs) play a crucial role in managing,

maintaining and optimizing a database system to ensure data avail-

ability, performance, and reliability. However, it is hard and tedious

for DBAs to manage a large number of database instances (e.g., mil-

lions of instances on the cloud databases). Recently large language

models (LLMs) have shown great potential to understand valuable

documents and accordingly generate reasonable answers. Thus, we

propose D-Bot, a LLM-based database administrator that can con-

tinuously acquire database maintenance experience from textual

sources, and provide reasonable, well-founded, in-time diagnosis

and optimization advice for target databases. This paper presents a

revolutionary LLM-centric framework for database maintenance,

including

(𝑖)

database maintenance knowledge detection from doc-

uments and tools,

(𝑖𝑖)

tree of thought reasoning for root cause

analysis, and

(𝑖𝑖𝑖)

collaborative diagnosis among multiple LLMs.

Our preliminary experimental results that D-Bot can eciently

and eectively diagnose the root causes and our code is available

at github.com/TsinghuaDatabaseGroup/DB-GPT.

1 INTRODUCTION

Limitations of DBAs. Currently, most companies still rely on

DBAs for database maintenance (DM, e.g., tuning, conguring,

diagnosing, optimizing) to ensure high performance, availability

and reliability of the databases. However, there is a signicant gap

between DBAs and DM tasks. First, it takes a long time to train a DBA.

There are numerous relevant documents (e.g., administrator guides),

which can span over 10,000 pages for just one database product

and consumes DBAs several years to partially grasp the skills by

applying in real practice. Second, it is hard to obtain enough DBAs

to manage a large number of database instances, e.g. millions of

instance on cloud databases. Third, a DBA may not provide in-time

response in emergent cases (especially for correlated issues across

multiple database modules) and cause great nancial losses.

Limitations of Database Tools. Many database products are

equipped with semi-automatic maintenance tools to relieve the

pressure of human DBAs [

5

,

6

,

10

–

12

]. However, they have sev-

eral limitations. First, they are built by empirical rules [

4

,

24

] or

small-scale ML models (e.g., classiers [

13

]), which have poor text

processing capability and cannot utilize available documents to

answer basic questions. Second, they cannot exibly generalize

to scenario changes. For empirical methods, it is tedious to man-

ually update rules by newest versions of documents. And learned

methods require costly model retraining and are not suitable for

online maintenance. Third, they cannot reason the root cause of an

anomaly like DBAs, such as looking up more system views based

xxxx

Query

Rewriter

Query

Planner

Query

Executor

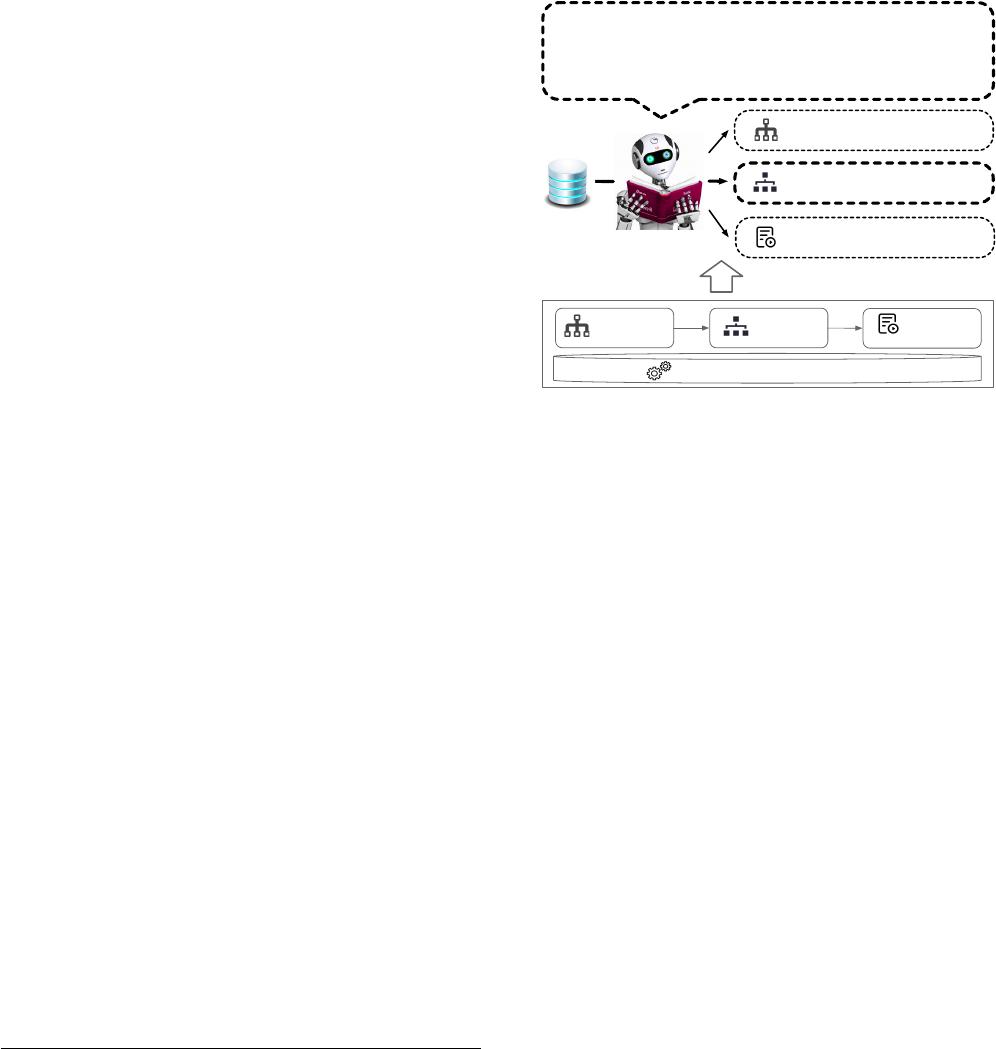

D-Bot

Rewrite logical query …

Add lacking indexes …

Documents

Optimize query plan …

System Configuration

Thought: High memory usage seems to be caused by

poor join performance and much inactive memory

Reasoning: Poor joins can be solved by plan optimization

Action: optimize_query_plan

Figure 1: LLM As DBA

on the initial analysis results. This capability is vital to detect useful

information in complex cases.

Our Vision: A Human-Beyond Database Adminstrator. To this

end, we aim to build a human-beyond “DBA” that can tirelessly learn

from documents (see Figure 1), which, given a set of documents,

automatically (1) learns experience from documents, (2) obtains

status metrics by interacting with the database, (3) reasons about

possible root causes with the abnormal metrics, and (4) accordingly

gives optimization advice by calling proper tools.

Challenges. Recent advances in Large Language Models (LLMs)

have demonstrated superiority in understanding natural language,

generating basic codes, and using external tools. However, leverag-

ing LLM to design a “human-beyond DBA” is still challenging.

(1) Experience learning from documents. Just like human learners

taking notes in classes, although LLMs have undergone training on

vast corpus, important knowledge points (e.g., diagnosis experience)

cannot be easily utilized without careful attention. However, most

texts are of long documents (with varying input lengths and section

correlations) and dierent formats of the extracted experience can

greatly aect the utilization capability of the LLM.

(2) Reasoning by interacting with database. With the extracted

experience, we need to inspire LLM to reason about the given

anomalies. Dierent from basic prompt design in machine learning,

database diagnosis is an interactive procedure with the database

(e.g., looking up system views or metrics). However, LLM responses

are often untrustworthy (“hallucination” problem), and it is critical

to design strategies that guide LLM to utilize proper interfaces of

the database and derive reasonable analysis.

(3) Mechanism for communication across multiple LLMs. Similar

to human beings, one LLM alone may be stuck in sub-optimal

arXiv:2308.05481v2 [cs.DB] 11 Aug 2023

solutions, and it is vital to derive a framework where multiple

LLMs collaborate to tackle complex database problems. By pooling

their collective intelligence, these LLMs can provide comprehensive

and smart solutions that a single LLM or even skilled human DBA

would struggle to think out.

Idea of LLM as DBA. Based on above observations, we introduce

D-Bot, an LLM based database administrator. First, D-Bot trans-

forms documents into experiential knowledge by dividing them into

manageable chunks and summarizing them for further extraction

of maintenance insights with LLM. Second, it iteratively generates

and assesses dierent formats of task descriptions to assist LLM in

understanding the maintenance tasks better. Third, D-Bot utilizes

external tools by employing matching algorithms to select appro-

priate tools and providing LLM with instructions on how to use the

APIs of selected tools. Once equipped with the experience, tools,

and input prompt, LLM can detect anomalies, analyze root causes,

and provide suggestions, following a tree of thought strategy to re-

vert to previous steps if a failure occurs. Moreover, D-Bot promotes

collaborative diagnosis by allowing multiple LLMs to communicate

based on predened environmental settings, inspiring more robust

solutions via debate-like communications.

Contributions. We make the following contributions.

(1) We design a LLM-centric database maintenance framework, and

explore potential to overcome limitations of traditional strategies.

(2) We propose an eective data collection mechanism by

(𝑖)

de-

tecting experiential knowledge from documents and

(𝑖𝑖)

leveraging

external tools with matching algorithms.

(3) We propose a root cause analysis method that utilizes LLM and

tree search algorithm for accurate diagnosis.

(4) We propose an innovative concept of collaborative diagnosis

among LLMs, thereby oering more comprehensive and robust

solutions to complex database problems.

(5) Our preliminary experimental results that D-Bot can eciently

and eectively diagnose the root causes.

2 PRELIMINARIES

Database Anomalies. In databases, there are ve common prob-

lems that can negatively aect the normal execution status. (1)

Running Slow. The database exhibits longer response time than

expectancy, leading to bad execution performance. (2) Full Disk

Capacity. The database’s disk space is exhausted, preventing it from

storing new data. (3) Execution Errors. The database experiences

errors, potentially due to improper error handling in the application

(e.g., leaking sensitive data or system details) or issues within data-

base (e.g., improper data types). (4) Hanging. The database becomes

unresponsive, which is usually caused by long-running queries,

deadlocks, or resource contention. (5) Crashing. The database un-

expectedly shuts down, causing data inaccessible. For a mature

database product, each anomaly type is explained in the do cumenta-

tion and suitable to be learned by LLMs.

Observation Tools for Anomaly Detection. “Observability of

the database” is vital to detect above anomalies, including logs, met-

rics, and traces. (1) Logs are records of database events. For example,

PostgresSQL supports slow query logs (with error messages that

can help debug and solve execution issues), but these logs may

record a large scale of data and are generally not enabled in on-

line stage. (2) Metrics capture the aggregated database and system

statistics. For example, views like pg_stat_statements record the

templates and statistics of slow queries; tools like Prometheus [

20

]

provide numerous monitoring metrics, making it possible to cap-

ture the real time system status. (3) Traces provide visibility into

how requests behave during executing in the database. Dierent

from logs that help to identify the database problem, traces help to

locate the specic abnormal workload or application.

Optimization Tools for Anomaly Solving. Users mainly con-

cern how to restore to the normal status after an anomaly occurs.

Here we showcase some optimization tools. (1) For slow queries,

since most open-source databases are weak in logical transfor-

mation, there are external engines (e.g., Calcite with

∼

120 query

rewrite rules) and tuning guides (e.g., Oracle with over 34 trans-

formation suggestions) that help to optimize slow queries. (2) For

knob tuning, many failures (e.g., max_connections in Postgres)

or bad performance (e.g., memory management knobs) are cor-

related with database knobs (e.g., for a slow workload, incresae

innodb_buer_pool_size in MySQL by 5% if the memory usage

is lower than 60%). Similarly, there are index tuning rules that

generate potentially useful indexes (e.g., taking columns within

the same predicate as a composite index). Besides, we can utilize

more advanced methods, such as selecting among heuristic meth-

ods [

3

,

21

,

22

] and learned methods [

7

–

9

,

15

,

23

,

25

,

26

] for problems

like index lacking, which is not within the scope of this paper.

We aim to design D-Bot, an LLM-based DBA, for automatically

diagnosing the database anomalies and use LLM to directly (or call

appropriate tools to indirectly) provide the root causes.

3 THE VISON OF D-BOT

Existing LLMs are criticized for problems like “Brain in a Vat” [

14

].

Thus, it is essential to establish close connections between LLMs

and the target database, allowing us to guide LLMs in eectively

maintaining the database’s health and functionality. Hence, we

propose D-Bot, which is composed of two stages.

First, in preparation stage, D-Bot generates experience (from

documents) and prompt template (from diagnosis samples), which

are vital to guide online maintenance.

•

Documents

→

Experience. Given a large volume of diverse,

long, unstructured database documents (e.g., database man-

ual, white paper, blogs), we rst split each document into

chunks that can be processed by the LLM. To aggregate

correlated chunks together (e.g., chunk

𝑣

𝑖

that explains the

meaning of “bloat-table” and chunk

𝑣

𝑗

that utilizes “bloat-

table” in root cause analysis), we generate a summary for

each chunk based on both its content and its subsections.

Finally, we utilize LLM to extract maintenance experience

from chunks with similar summaries (Section 4).

•

Prompt Template Generation. To help LLM better under-

stand the DM tasks, we iteratively generate and score dif-

ferent formats of task descriptions using DM samples (i.e.,

given the anomaly and solutions, ask LLM to describe the

task), and adopt task description that both scores high perfor-

mance and is sensible to human DBAs (in cases of learning

bias) for LLM diagnosis (Section 5).

2

of 11

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论