VLDB2022_ConnectorX:Accelerating Data Loading From Databases to Dataframes_腾讯云数据库.pdf

免费下载

ConnectorX: Accelerating Data Loading From Databases to

Dataframes [Technical Report]

Xiaoying Wang

†∗

, Weiyuan Wu

†∗

, Jinze Wu

†

, Yizhou Chen

†

, Nick Zrymiak

†

, Changbo Qu

†

, Lampros

Flokas

‡

, George Chow

†

, Jiannan Wang

†

, Tianzheng Wang

†

, Eugene Wu

‡

, Qingqing Zhou

♦

Simon Fraser University

†

Columbia University

‡

Tencent Inc.

♦

{xiaoying_wang, youngw, jinze_wu, yizhou_chen_3, nzrymiak, changboq, kai_yee_chow, jnwang, tzwang}@sfu.ca

†

{lamokas, ewu}@cs.columbia.edu

‡

hewanzhou@tencent.com

♦

ABSTRACT

Data is often stored in a database management system (DBMS) but

dataframe libraries are widely used among data scientists. An im-

portant but challenging problem is how to bridge the gap between

databases and dataframes. To solve this problem, we present Con-

nectorX, a client library that enables fast and memory-ecient data

loading from various databases (e.g.,PostgreSQL, MySQL, SQLite,

SQLServer, Oracle) to dierent dataframes (e.g., Pandas, PyArrow,

Modin, Dask, and Polars). We rst investigate why the loading pro-

cess is slow and why it consumes large memory. We surprisingly

nd that the main overhead comes from the client-side rather than

query execution and data transfer. We integrate several existing

and new techniques to reduce the overhead and carefully design

the system architecture and interface to make ConnectorX easy to

extend to various databases and dataframes. Moreover, we propose

server-side result partitioning that can be adopted by DBMSs in

order to better support exporting data to data science tools. We

conduct extensive experiments to evaluate ConnectorX and com-

pare it with popular libraries. The results show that ConnectorX

signicantly outperforms existing solutions. ConnectorX is open

sourced at: https://github.com/sfu-db/connector-x.

1 INTRODUCTION

Dataframe libraries such as Pandas [

63

], Dask [

72

], and Modin [

66

]

are widely used among data scientists for data manipulation and

analysis. In contrast, enterprise environments often store their data

in database management systems (DBMSs). Thus, the rst step

in most data science applications is to load data from the DBMS.

Unfortunately, this data loading process is not only notoriously

slow but also consumes inordinate amounts of client memory [

4

,

5

,

7

,

8

,

57

], which easily leads to out-of-memory errors or performance

degradation.

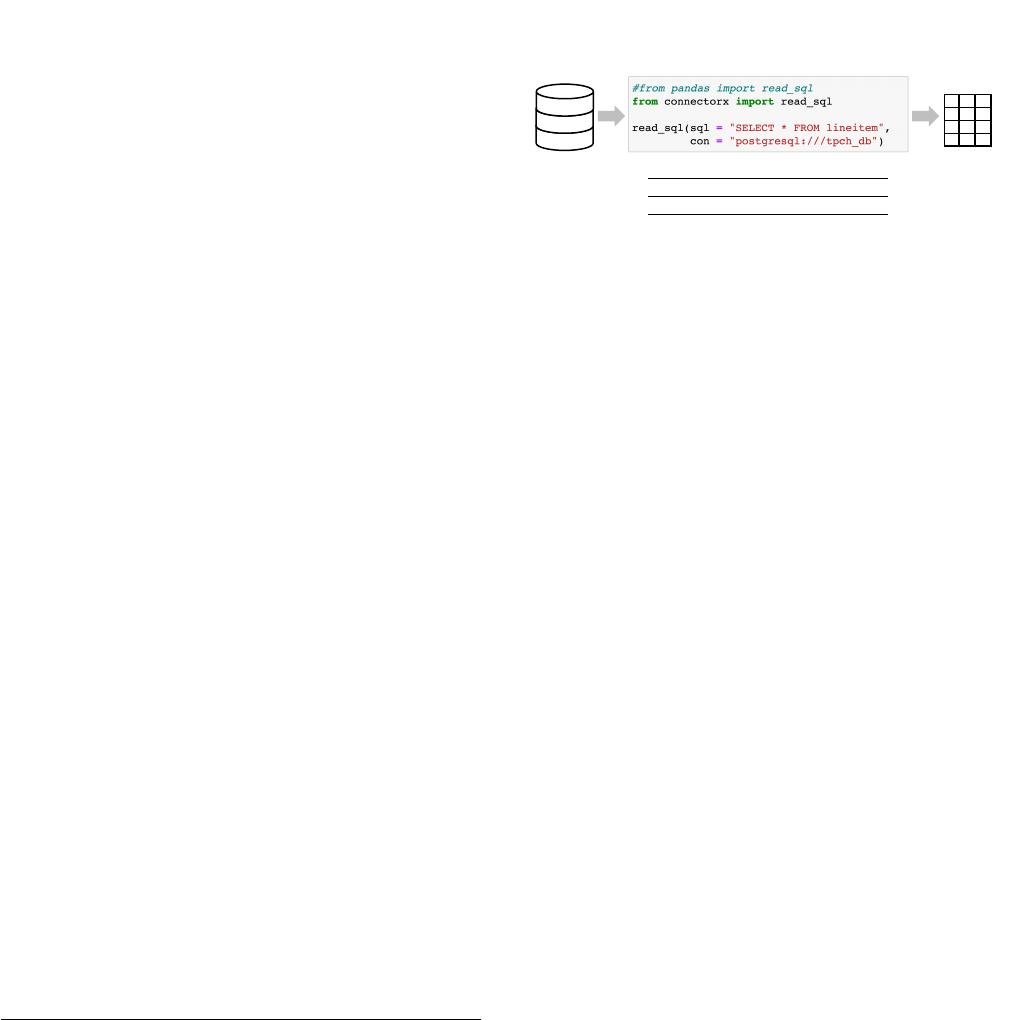

Example 1.1. Pandas is the most widely used dataframe library in

Python, with a total 1.2B downloads on PyPI as of Jan 2022. Suppose

that a data scientist loads the TPC-H ‘lineitem’ table (7.2 GB) from

PostgreSQL into a Pandas.DataFrame using the Pandas

read_sql

call in Figure 1. The function species a query string and database

connection (e.g.,

conn

), retrieves the query results, and loads them

into a DataFrame object. We conducted an experiment using two

AWS instances, where PostgreSQL was deployed on one instance and

the code was run on another instance (see Section 3 for details). The

whole data loading process is highly inecient—it takes 12.5 mins

and consumes over 95.6 GB of memory. In fact, the actual time spent

on query execution is less than 1 min (13

×

time overhead) and the

nal Pandas.DataFrame is only 24.4 GB (4× memory overhead) .

* Both authors contributed equally to this research.

Dataframe

Database

Tot al

Time

Peak Memory

Pandas

12.46

mins

95.6

GB

ConnectorX

0.97

min

31.4

GB

Bridging the gap between Databases and Dataframes

Figure 1: Speed up loading the lineitem table (7.2 GB in CSV)

from database to dataframe with less memory usage.

On the other hand, ConnectorX is on the critical path of many

data science tasks. For example, ELT and ETLT processes can benet

from

read_sql

for data extraction, of which the dataframe result

enables exible light weight transformations before loading to data

warehouses. And since the heavy data transformation happens

in the data warehouses, data extraction becomes a bottleneck in

these scenarios. Another example is exploratory data analysis, in

which data scientists may interactively fetch and analyze the data

in order to get insights. If the whole data is too large, it might

not be possible to fetch all the data at once and process locally.

Instead, one needs to load data from databases multiple times on

demand by adding dierent lters. In this case, a faster

read_sql

function could save the human waiting time and help data scientists

work more eciently. Furthermore,

read_sql

can also become a

bottleneck in a ML pipeline.

Example 1.2. We construct an end-to-end ML pip eline on a real-

world DDoS [

15

] dataset. The pipeline consists of three steps: data

loading (through

read_sql

), data pre-processing and model training.

In particular, data pre-processing includes procedures such as featuriz-

ing the IP address columns, encoding the label and splitting the entire

data into training and validation dataset. The ML model follows the

SGB DDoS trac classication model proposed by paper [

68

], and

implemented using XGBoost [

41

] library. We set the early stop round

to 20 and report the averaged result in running the pipeline ve times

with dierent random seeds. The time breakdown is shown in Figure 2

(DDoS resides in PostgreSQL, setup is the same with previous exam-

ple). We can see that

Pandas.read_sql

takes more than 54% of the

total pipeline running time.

These two examples indicate the importance of speeding up

read_sql

for bridging the gap between databases and dataframes.

In this paper, we focus on developing an ecient data loader for

dataframes (i.e.,

read_sql

) that is easily compatible with both ex-

isting client data science libraries and legacy DBMSs. Note that

Figure 2: Time break down of a ML pipeline built on DDoS

dataset.

data scientists need only change a single line of client code to en-

joy the benets (see Figure 1). We propose ConnectorX, a fast and

memory-ecient data loading library that supports many DBMSs

(e.g., PostgreSQL, SQLite, MySQL, SQLServer, Oracle) to client

dataframes (e.g., Pandas, PyArrow, Modin, Dask, and Polars). We

made our contributions while sought to address four major ques-

tions.

First, where are the actual data loading bottlenecks? We pro-

le the

Pandas.read_sql

implementation in Section 3 (due to its

popularity). We nd that the runtime can be split into two parts:

the server side runtime includes query execution, serialization, and

network transfer, and the client side includes deserialization and

conversion into a dataframe. We were surprised to nd that >85%

of time is spent in the client, and that the conversion materializes

all intermediate transformation results in memory. These ndings

suggest that client-side optimizations are sucient to dramatically

reduce data loading costs.

Second, how do we both reduce the runtime and memory,

while also making the system extensible to new DBMSs? In

Section 4, we design a succinct domain-specic language (DSL)

for mapping DBMS result representations into dataframes—this

reduces the lines of code by 1-2 orders of magnitude as compared

to not using our DSL. Under the covers, ConnectorX compiles the

DSL to execute over a streaming workow system that eciently

translates bytes received from the network into objects in memory.

The workow executes in a pipelined fashion, and seamlessly com-

bines optimization techniques including parallel execution, string

allocation optimizations, and an ecient data representation.

Third, are widely used client query partitioning scheme good

enough? Parallelization via query partitioning is the dominant way

to reduce execution and loading time. Existing libraries [

60

,

66

,

72

]

including ConnectorX partition the query on the client, which is

popular since it does not require modication to the server. Unfortu-

nately, we nd that it introduces extra user burden, load imbalances,

wasted server resources, and data inconsistencies. Thus, we study

server-side result partitioning in Section 5, where the DBMS di-

rectly partitions the query result and sends back in parallel without

changing the protocol and access method. We prototype and demon-

strate the ecacy using PostgreSQL, and advocate DBMS vendors

to add this support to benet data science applications.

Fourth, does a new data loading library matter? Since its rst

release in April 2021, ConnectorX has been widely adopted by real

users, with a total of 300K+ downloads and 640+ Github stars in a

year. It has been applied to extracting data in ETL [

11

] and loading

ML data from DBMS [

12

]. It is also integrated into popular open

source projects such as Polars [

17

], Modin [

66

] and DataPrep [

14

].

For example, Polars is the most popular dataframe library in Rust,

and it uses ConnectorX as the default way to read data from various

databases [

13

]. Our experiments in Section 6 show that ConnectorX

signicantly outperforms existing libraries (Pandas, Dask, Modin,

Turbodbc) when loading large query results. Compared to Pandas,

it reduces runtime by 13× and memory utilization by 3×.

In summary, our paper makes the following contributions:

(1)

We perform an in-depth empirical analysis of the

read_sql

function in Pandas. We surprisingly nd that the main over-

head for

read_sql

comes from the client-side instead of

query execution and data transfer.

(2)

We design and implement ConnectorX that greatly reduces

the overhead of

read_sql

with no requirement to modify

existing database servers and client protocols.

(3)

We propose a carefully designed architecture and interface

to make ConnectorX easy to extend, and design a DSL to

simplify the type mapping from databases to dataframes.

(4)

We identify the issues of client-side query partitioning, and

propose server-side result partitioning and implement pro-

totype systems to address these issues.

(5)

We conduct extensive experiments to evaluate ConnectorX

and compare it with popular libraries. The results show that

ConnectorX signicantly outperforms existing solutions.

The remainder of this paper is organized as follows. We review

related work in Section 2. We perform an in-depth empirical analy-

sis of

read_sql

in Section 3, and propose ConnectorX in Section 4.

Section 5 dives into the topic of query partitioning. We present

evaluation results in Section 6, and conclude our paper in Section 7.

2 RELATED WORK

Bridging the gap between DBMS and ML has become a hot topic in

the database community. ConnectorX ts into the big picture by

supporting ecient data loading from DBMSs to dataframes.

Accelerating Data Access From DBMS. There has been abundant

works in speeding up moving data out of database systems.

(1) Server-Side Enhancement.

Accessing data from database sys-

tems through tuple-level protocol is notoriously slow [

57

,

70

]. Previ-

ous work [

70

] shows that existing wire protocols suer from redun-

dant information and expensive (de)serialization, and propose a new

protocol to tackle these issues. More approaches tend to leverage

existing open data formats (e.g. Parquet [

25

], ORC [

24

], Avro [

23

],

Arrow [

6

], Dermel [

59

], Pickle [

1

]) to speed up the process by avoid-

ing tuple-level accessing. Li et. al [

57

] adopts Flight [

46

] to enable

zero-copy on data export in Arrow IPC format. Data warehouses

such as Redshift [

20

], BigQuery [

16

] and Snowake [

43

] support

unloading data into cloud stores (e.g. Amazon S3 [

22

], Azure Blob

Storage [

26

], Google Cloud Storage [

30

]) in the format like CSV

and Parquet directly [

21

,

29

,

35

]. Data lake and Lakehouse solu-

tions [

27

,

74

,

77

] also advocate direct accessibility of open formats.

Parallelism is another eective way to speed up data transfer,

supported by many tools [

2

,

10

,

28

] to move data between two

le systems (e.g. HDFS, S3) or between le system and DBMSs.

Databricks points out the single SQL endpoint bottleneck and pro-

poses to tackle it with cloud fetch architecture [

39

], in which a

query’s result is exported to a cloud storage in multiple partitions,

enabling parallel downloading from the client. Similar support is

also provided by other cloud-native data warehouses [21, 29, 35].

However, all these server-side attempts require users to modify

the source code of a database server or switch to a new one, which

of 14

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论