NeuralField-LDM Scene Generation with Hierarchical Latent Diffusion Models.pdf

100墨值下载

NeuralField-LDM: Scene Generation with Hierarchical Latent Diffusion Models

Seung Wook Kim

1,2,3*

Bradley Brown

1,5*†

Kangxue Yin

1

Karsten Kreis

1

Katja Schwarz

6†

Daiqing Li

1

Robin Rombach

7†

Antonio Torralba

4

Sanja Fidler

1,2,3

1

NVIDIA

2

University of Toronto

3

Vector Institute

4

CSAIL, MIT

5

University of Waterloo

6

University of T

¨

ubingen, T

¨

ubingen AI Center

7

LMU Munich

https://research.nvidia.com/labs/toronto-ai/NFLDM

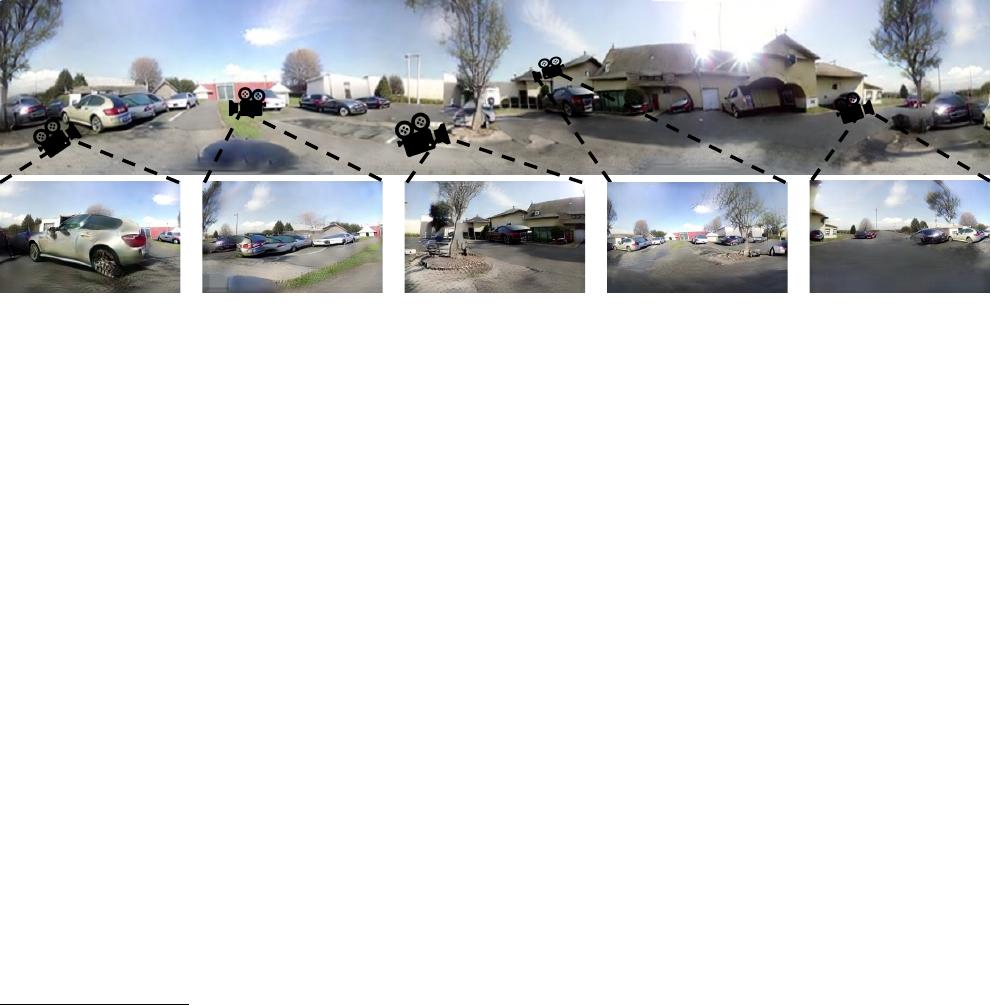

Figure 1. We introduce NeuralField-LDM, a generative model for complex open-world 3D scenes. This figure contains a panorama

constructed from NeuralField-LDM’s generated scene. We visualize different parts of the scene by placing cameras on them.

Abstract

Automatically generating high-quality real world 3D

scenes is of enormous interest for applications such as vir-

tual reality and robotics simulation. Towards this goal, we

introduce NeuralField-LDM, a generative model capable of

synthesizing complex 3D environments. We leverage Latent

Diffusion Models that have been successfully utilized for ef-

ficient high-quality 2D content creation. We first train a

scene auto-encoder to express a set of image and pose pairs

as a neural field, represented as density and feature voxel

grids that can be projected to produce novel views of the

scene. To further compress this representation, we train a

latent-autoencoder that maps the voxel grids to a set of la-

tent representations. A hierarchical diffusion model is then

fit to the latents to complete the scene generation pipeline.

We achieve a substantial improvement over existing state-

of-the-art scene generation models. Additionally, we show

how NeuralField-LDM can be used for a variety of 3D con-

tent creation applications, including conditional scene gen-

eration, scene inpainting and scene style manipulation.

1. Introduction

There has been increasing interest in modelling 3D real-

world scenes for use in virtual reality, game design, digi-

*

Equal contribution.

†

Work done during an internship at NVIDIA.

tal twin creation and more. However, designing 3D worlds

by hand is a challenging and time-consuming process, re-

quiring 3D modeling expertise and artistic talent. Recently,

we have seen success in automating 3D content creation

via 3D generative models that output individual object as-

sets [17, 53, 85]. Although a great step forward, automating

the generation of real-world scenes remains an important

open problem and would unlock many applications ranging

from scalably generating a diverse array of environments

for training AI agents (e.g. autonomous vehicles) to the de-

sign of realistic open-world video games. In this work, we

take a step towards this goal with NeuralField-LDM (NF-

LDM), a generative model capable of synthesizing complex

real-world 3D scenes. NF-LDM is trained on a collection

of posed camera images and depth measurements which are

easier to obtain than explicit ground-truth 3D data, offering

a scalable way to synthesize 3D scenes.

Recent approaches [3, 7, 9] tackle the same problem of

generating 3D scenes, albeit on less complex data. In [7,9],

a latent distribution is mapped to a set of scenes using ad-

versarial training, and in GAUDI [3], a denoising diffusion

model is fit to a set of scene latents learned using an auto-

decoder. These models all have an inherent weakness of

attempting to capture the entire scene into a single vector

that conditions a neural radiance field. In practice, we find

that this limits the ability to fit complex scene distributions.

Recently, diffusion models have emerged as a very pow-

erful class of generative models, capable of generating high-

arXiv:2304.09787v1 [cs.CV] 19 Apr 2023

quality images, point clouds and videos [20, 27, 45, 55, 60,

85,91]. Yet, due to the nature of our task, where image data

must be mapped to a shared 3D scene without an explicit

ground truth 3D representation, straightforward approaches

fitting a diffusion model directly to data are infeasible.

In NeuralField-LDM, we learn to model scenes using a

three-stage pipeline. First, we learn an auto-encoder that en-

codes scenes into a neural field, represented as density and

feature voxel grids. Inspired by the success of latent diffu-

sion models for images [60], we learn to model the distribu-

tion of our scene voxels in latent space to focus the genera-

tive capacity on core parts of the scene and not the extrane-

ous details captured by our voxel auto-encoders. Specif-

ically, a latent-autoencoder decomposes the scene voxels

into a 3D coarse, 2D fine and 1D global latent. Hierar-

chichal diffusion models are then trained on the tri-latent

representation to generate novel 3D scenes. We show how

NF-LDM enables applications such as scene editing, birds-

eye view conditional generation and style adaptation. Fi-

nally, we demonstrate how score distillation [53] can be

used to optimize the quality of generated neural fields, al-

lowing us to leverage the representations learned from state-

of-the-art image diffusion models that have been exposed to

orders of magnitude more data.

Our contributions are: 1) We introduce NF-LDM, a hi-

erarchical diffusion model capable of generating complex

open-world 3D scenes and achieving state of the art scene

generation results on four challenging datasets. 2) We ex-

tend NF-LDM to semantic birds-eye view conditional scene

generation, style modification and 3D scene editing.

2. Related Work

2D Generative Models In past years, generative adver-

sarial networks (GANs) [4, 19, 31, 48, 65] and likelihood-

based approaches [38, 56, 58, 78] enabled high-resolution

photorealistic image synthesis. Due to their quality, GANs

are used in a multitude of downstream applications rang-

ing from steerable content creation [34,39, 41, 42, 68, 89] to

data driven simulation [30,35,36,39]. Recently, autoregres-

sive models and score-based models, e.g. diffusion models,

demonstrate better distribution coverage while preserving

high sample quality [11, 12, 15, 23, 25, 50, 55, 60, 61, 79].

Since evaluation and optimization of these approaches in

pixel space is computationally expensive, [60, 79] apply

them to latent space, achieving state-of-the-art image syn-

thesis at megapixel resolution. As our approach operates on

3D scenes, computational efficiency is crucial. Hence, we

build upon [60] and train our model in latent space.

Novel View Synthesis In their seminal work [49],

Mildenhall et al. introduce Neural Radiance Fields (NeRF)

as a powerful 3D representation. PixelNeRF [84] and IBR-

Net [82] propose to condition NeRF on aggregated features

from multiple views to enable novel view synthesis from

a sparse set of views. Another line of works scale NeRF

to large-scale indoor and outdoor scenes [46, 57, 86, 88].

Recently, Nerfusion [88] predicts local radiance fields and

fuses them into a scene representation using a recurrent neu-

ral network. Similarly, we construct a latent scene represen-

tation by aggregating features across multiple views. Dif-

ferent from the aforementioned methods, our approach is a

generative model capable of synthesizing novel scenes.

3D Diffusion Models A few recent works propose to ap-

ply denoising diffusion models (DDM) [23,25,72] on point

clouds for 3D shape generation [45,85,91]. While PVD [91]

trains on point clouds directly, DPM [45] and LION [85]

use a shape latent variable. Similar to LION, we design a

hierarchical model by training separate conditional DDMs.

However, our approach generates both texture and geometry

of a scene without needing 3D ground truth as supervision.

3D-Aware Generative Models 3D-aware generative

models synthesize images while providing explicit control

over the camera pose and potentially other scene proper-

ties, like object shape and appearance. SGAM [69] gener-

ates a 3D scene by autoregressively generating sensor data

and building a 3D map. Several previous approaches gen-

erate NeRFs of single objects with conditional coordinate-

based MLPs [8, 51, 66]. GSN [9] conditions a coordinate-

based MLP on a “floor plan”, i.e. a 2D feature map, to

model more complex indoor scenes. EG3D [7] and Vox-

GRAF [67] use convolutional backbones to generate 3D

representations. All of these approaches rely on adversarial

training. Instead, we train a DDM on voxels in latent space.

The work closest to ours is GAUDI [3], which first trains an

auto-decoder and subsequently trains a DDM on the learned

latent codes. Instead of using a global latent code, we en-

code scenes onto voxel grids and train a hierarchical DDM

to optimally combine global and local features.

3. NeuralField-LDM

Our objective is to train a generative model to synthe-

size 3D scenes that can be rendered to any viewpoint. We

assume access to a dataset {(i, κ, ρ)}

1..N

which consists of

N RGB images i and their camera poses κ, along with a

depth measurement ρ that can be either sparse (e.g. Lidar

points) or dense. The generative model must learn to model

both the texture and geometry distributions of the dataset in

3D by learning solely from the sensor observations, which

is a highly non-trivial problem.

Past work typically tackles this problem with a gener-

ative adversarial network (GAN) framework [7, 9, 66, 67].

They produce an intermediate 3D representation and ren-

der images for a given viewpoint with volume render-

ing [29, 49]. Discriminator losses then ensure that the 3D

representation produces a valid image from any viewpoint.

However, GANs come with notorious training instability

of 37

100墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论