TAPS3D.pdf

100墨值下载

TAPS3D: Text-Guided 3D Textured Shape Generation from Pseudo Supervision

Jiacheng Wei

1*

Hao Wang

1*

Jiashi Feng

2

Guosheng Lin

1†

Kim-Hui Yap

1

1

Nanyang Technological University, Singapore

2

ByteDance

{jiacheng002@e., hao005@e., gslin@, ekhyap@}ntu.edu.sg, jshfeng@bytedance.com

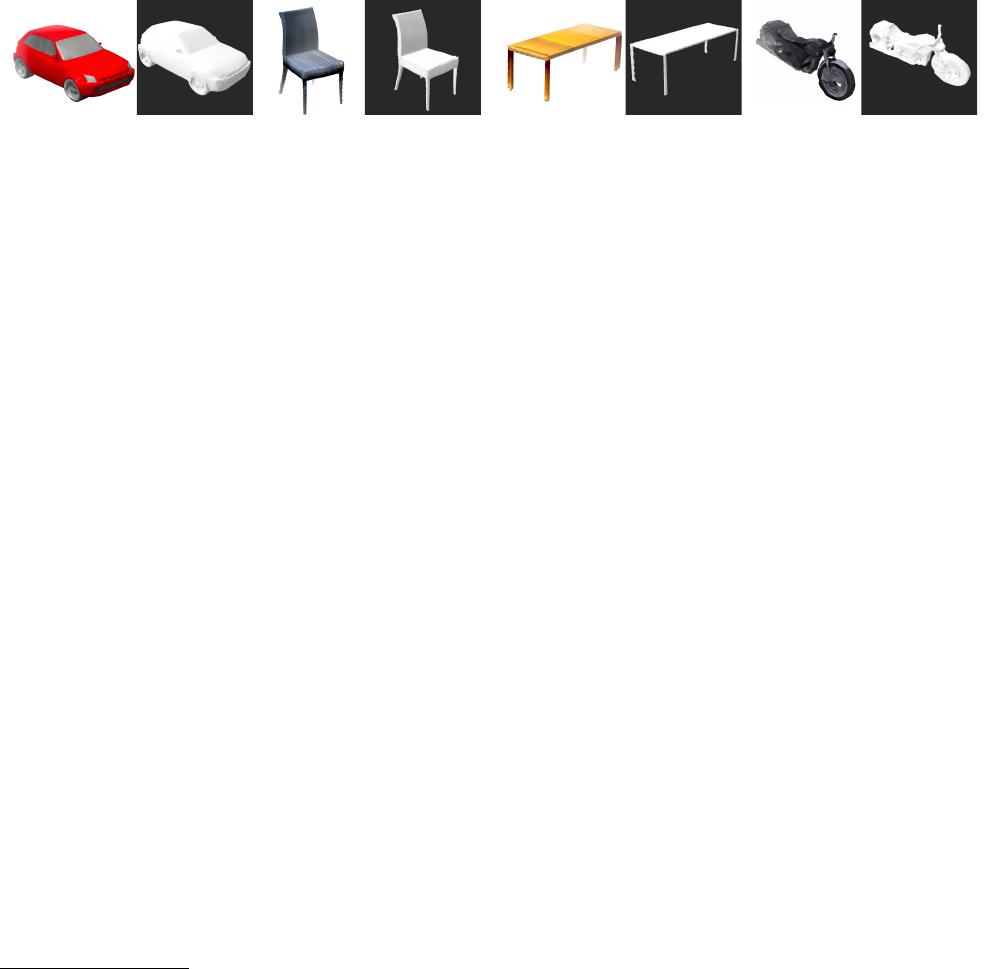

“a red hatchback car” “a gray armless chair” “a wooden office table” “a black motorbike”

Figure 1. Our proposed model is capable of generating detailed textured shapes based on given text prompts. We demonstrate the effective-

ness of our approach by showcasing generated results for four distinct object classes: Car, Chair, Table, and Motorbike. Both the textured

meshes and geometries are presented, with visualizations rendered using ChimeraX [10].

Abstract

In this paper, we investigate an open research task

of generating controllable 3D textured shapes from the

given textual descriptions. Previous works either require

ground truth caption labeling or extensive optimization

time. To resolve these issues, we present a novel frame-

work, TAPS3D, to train a text-guided 3D shape generator

with pseudo captions. Specifically, based on rendered 2D

images, we retrieve relevant words from the CLIP vocab-

ulary and construct pseudo captions using templates. Our

constructed captions provide high-level semantic supervi-

sion for generated 3D shapes. Further, in order to pro-

duce fine-grained textures and increase geometry diversity,

we propose to adopt low-level image regularization to en-

able fake-rendered images to align with the real ones. Dur-

ing the inference phase, our proposed model can generate

3D textured shapes from the given text without any addi-

tional optimization. We conduct extensive experiments to

analyze each of our proposed components and show the

efficacy of our framework in generating high-fidelity 3D

textured and text-relevant shapes. Code is available at

https://github.com/plusmultiply/TAPS3D

1. Introduction

3D objects are essential in various applications [21–24],

such as video games, film special effects, and virtual real-

∗

Equal contribution. Work done during an internship at Bytedance.

†

Corresponding author.

ity. However, realistic and detailed 3D object models are

usually hand-crafted by well-trained artists and engineers

slowly and tediously. To expedite this process, many re-

search works [3,9,13,34,48,49] use deep generative models

to achieve automatic 3D object generation. However, these

models are primarily unconditioned, which can hardly gen-

erate objects as humans will.

In order to control the generated 3D objects from text,

prior text-to-3D generation works [43, 44] leverage the pre-

trained vision-language alignment model CLIP [37], such

that they can only use 3D shape data to achieve zero-shot

learning. For example, Dream Fields [13] combines the ad-

vantages of CLIP and NeRF [27], which can produce both

3D representations and renderings. However, Dream Fields

costs about 70 minutes on 8 TPU cores to produce a sin-

gle result. This means the optimization time during the

inference phase is too slow to use in practice. Later on,

GET3D [9] is proposed with faster inference time, which

incorporates StyleGAN [15] and Deep Marching Tetrahe-

dral (DMTet) [46] as the texture and geometry generators

respectively. Since GET3D adopts a pretrained model to do

text-guided synthesis, they can finish optimization in less

time than Dream Fields. But the requirement of test-time

optimization still limits its application scenarios. CLIP-

NeRF [50] utilizes conditional radiance fields [45] to avoid

test-time optimization, but it requires ground truth text data

for the training purpose. Therefore, CLIP-NeRF is only ap-

plicable to a few object classes that have labeled text data

for training, and its generation quality is restricted by the

NeRF capacity.

To address the aforementioned limitations, we propose

1

arXiv:2303.13273v1 [cs.CV] 23 Mar 2023

to generate pseudo captions for 3D shape data based on

their rendered 2D images and construct a large amount of

⟨3D shape, pseudo captions⟩ as training data, such that

the text-guided 3D generation model can be trained over

them. To this end, we propose a novel framework for

Text-guided 3D textured shApe generation from Pseudo

Supervision (TAPS3D), in which we can generate high-

quality 3D shapes without requiring annotated text training

data or test-time optimization.

Specifically, our proposed framework is composed of

two modules, where the first generates pseudo captions for

3D shapes and feeds them into a 3D generator to con-

duct text-guided training within the second module. In the

pseudo caption generation module, we follow the language-

free text-to-image learning scheme [20, 54]. We first adopt

the CLIP model to retrieve relevant words from given ren-

dered images. Then we construct multiple candidate sen-

tences based on the retrieved words and pick sentences hav-

ing the highest CLIP similarity scores with the given im-

ages. The selected sentences are used as our pseudo cap-

tions for each 3D shape sample.

Following the notable progress of text-to-image gener-

ation models [29, 38, 39, 42, 52], we use text-conditioned

GAN architecture in the text-guided 3D generator training

part. We adopt the pretrained GET3D [9] model as our

backbone network since it has been demonstrated to gen-

erate high-fidelity 3D textured shapes across various object

classes. We input the pseudo captions as the generator con-

ditions and supervise the training process with high-level

CLIP supervision in an attempt to control the generated 3D

shapes. Moreover, we introduce a low-level image regu-

larization loss to produce fine-grained textures and increase

geometry diversity. We empirically train the mapping net-

works only of a pretrained GET3D model so that the train-

ing is stable and fast, and also, the generation quality of the

pretrained model can be preserved.

Our proposed model TAPS3D can produce high-quality

3D textured shapes with strong text control as shown in

Fig. 1, without any per prompt test-time optimization. Our

contribution can be summarized as:

• We introduce a new 3D textured shape generative

framework, which can generate high-quality and fi-

delity 3D shapes without requiring paired text and 3D

shape training data.

• We propose a simple pseudo caption generation

method that enables text-conditioned 3D generator

training, such that the model can generate text-

controlled 3D textured shapes without test time opti-

mization, and significantly reduce the time cost.

• We introduce a low-level image regularization loss on

top of the high-level CLIP loss in an attempt to produce

fine-grained textures and increase geometry diversity.

2. Related Work

2.1. Text-Guided 3D Shape Generation

Text-guided 3D shape generation aims to generate 3D

shapes from the textual descriptions so that the genera-

tion process can be controlled. There are mainly two cat-

egories of methods, i.e., fully-supervised and optimization-

based methods. The fully-supervised method [6, 8, 25] uses

ground truth text and the paired 3D objects with explicit

3D representations as training data. Specifically, CLIP-

Forge [43] uses a two-stage training scheme, which con-

sists of shape autoencoder training, and conditional nor-

malizing flow training. VQ-VAE [44] performs zero-shot

training with 3D voxel data by utilizing the pretrained CLIP

model [37].

Regarding the optimization-based methods [13, 18, 26,

34], Neural Radiance Fields (NeRF) are usually adopted as

the 3D generator. To generate 3D shapes for each input text

prompt, they use the CLIP model to supervise the seman-

tic alignment between rendered images and text prompts.

Since NeRF suffers from extensive generation time, 3D-

aware image synthesis [3, 3, 4, 11, 30, 31] has become pop-

ular, which generates multi-view consistent images by in-

tegrating neural rendering in the Generative Adversarial

Networks (GANs). Specifically, there are no explicit 3D

shapes generated during the process, while the 3D shapes

can be extracted from the implicit representations, such as

the occupancy field or signed distance function (SDF), us-

ing the marching cube algorithm. These optimization-based

methods provide a solution to generate 3D shapes, but their

generation speed was compromised. Although [50] is free

from test-time optimization, it requires text data for train-

ing, which limits its applicable 3D object classes. Our pro-

posed method attempts to alleviate both the paired text data

shortage issues and the long optimization time in the pre-

vious work. We finally produce high-quality 3D shapes to

bring our method to practical applications.

2.2. Text-to-Image Synthesis

We may draw inspiration from text-to-image generation

methods. Typically, many research works [36, 40,53] adopt

the conditional GAN architecture, where they directly take

the text features and concatenate them with the random

noise as the input. Recently, autoregressive models [7, 39]

and diffusion models [29, 38, 41, 42] made great improve-

ment on text to image synthesis while demanding huge

computational resources and massive training data.

With the introduction of StyleGAN [14–16] mapping

networks, the input random noise can be first mapped to an-

other latent space that has disentangled semantics, then the

model can generate images with better quality. Further, ex-

ploring the latent space of StyleGAN has been proved use-

ful by several works [1, 17,33] in text-driven image synthe-

2

of 14

100墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论