Graph Transformer GANs for Graph-Constrained House Generation

Hao Tang¹, Zhenyu Zhang², Humphrey Shi³, Bo Li², Ling Shao⁴, Nicu Sebe⁵, Radu Timofte¹,⁶, Luc Van Gool¹,⁷

¹CVL, ETH Zurich  ²Tencent Youtu Lab  ³U of Oregon & UIUC & Picsart AI Research  ⁴UCAS-Terminus AI Lab, UCAS  ⁵University of Trento  ⁶University of Wurzburg  ⁷KU Leuven

Abstract

We present a novel graph Transformer generative adversarial network (GTGAN) to learn effective graph node relations in an end-to-end fashion for the challenging graph-constrained house generation task. The proposed graph-Transformer-based generator includes a novel graph Transformer encoder that combines graph convolutions and self-attentions in a Transformer to model both local and global interactions across connected and non-connected graph nodes. Specifically, the proposed connected node attention (CNA) and non-connected node attention (NNA) aim to capture the global relations across connected nodes and non-connected nodes in the input graph, respectively. The proposed graph modeling block (GMB) aims to exploit local vertex interactions based on a house layout topology. Moreover, we propose a new node classification-based discriminator to preserve the high-level semantic and discriminative node features for different house components. Finally, we propose a novel graph-based cycle-consistency loss that aims at maintaining the relative spatial relationships between ground truth and predicted graphs. Experiments on two challenging graph-constrained house generation tasks (i.e., house layout and roof generation) with two public datasets demonstrate the effectiveness of GTGAN in terms of objective quantitative scores and subjective visual realism. New state-of-the-art results are established by large margins on both tasks.

1. Introduction

This paper focuses on converting an input graph to a realistic house footprint, as depicted in Figure 1. Existing house generation methods such as [2, 15, 18, 26, 30, 43, 45] typically rely on convolutional layers. However, because of their inherent inductive biases, convolutional architectures lack an understanding of long-range dependencies in the input graph. Several Transformer architectures [3, 6, 11, 16, 17, 22, 41, 42, 44, 52, 53] based on the self-attention mechanism have recently been proposed to encode long-range or global relations and thus learn highly expressive feature representations. On the other hand, graph convolution networks are good at exploiting local and neighborhood vertex correlations based on a graph topology. Therefore, it stands to reason to combine graph convolution networks and Transformers to model local as well as global interactions for solving graph-constrained house generation.

To this end, we propose a novel graph Transformer generative adversarial network (GTGAN), which consists of two main novel components, i.e., a graph Transformer-based generator and a node classification-based discriminator (see Figure 1). The proposed generator aims to generate a realistic house from the input graph and consists of three components, i.e., a convolutional message passing neural network (Conv-MPN), a graph Transformer encoder (GTE), and a generation head. Specifically, Conv-MPN first receives graph nodes as inputs and extracts discriminative node features. Next, the embedded nodes are fed to GTE, in which long-range and global relation reasoning is performed by the connected node attention (CNA) and non-connected node attention (NNA) modules. Then, the output from both attention modules is fed to the proposed graph modeling block (GMB) to capture local and neighborhood relationships based on a house layout topology. Finally, the output of GTE is fed to the generation head to produce the corresponding house layout or roof. To the best of our knowledge, we are the first to use a graph Transformer to model local and global relations across graph nodes for solving graph-constrained house generation.

In addition, the proposed discriminator aims to distinguish real and fake house layouts, which ensures that our generated house layouts or roofs look realistic. At the same time, the discriminator classifies the generated rooms to their corresponding real labels, preserving the discriminative and semantic features (e.g., size and position) for different house components. To maintain the graph-level layout, we also propose a novel graph-based cycle-consistency loss to preserve the relative spatial relationships between ground truth and predicted graphs.
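The encoder described above (CNA and NNA for global relations, followed by GMB for local ones) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the single attention head, the exclusion of self-attention, the residual fusion of the two branches, and the GCN-style normalization inside the graph modeling block are all assumptions made for illustration. The only idea taken from the text is that attention is restricted by the adjacency matrix to connected pairs (CNA) or to non-connected pairs (NNA), with no position embeddings.

```python
import numpy as np

def softmax_masked(scores, mask):
    """Row-wise softmax over entries where mask is True; all-False rows give zeros."""
    scores = np.where(mask, scores, -np.inf)
    row_max = scores.max(axis=-1, keepdims=True)
    row_max = np.where(np.isfinite(row_max), row_max, 0.0)
    exp = np.exp(scores - row_max)  # masked entries become exp(-inf) = 0
    denom = exp.sum(axis=-1, keepdims=True)
    return np.divide(exp, denom, out=np.zeros_like(exp), where=denom > 0)

def node_attention(x, adj, w_q, w_k, w_v, connected=True):
    """Single-head attention limited to connected (CNA) or non-connected (NNA) pairs."""
    n = x.shape[0]
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    off_diag = ~np.eye(n, dtype=bool)  # assumption: a node does not attend to itself
    linked = adj.astype(bool)
    mask = (linked if connected else ~linked) & off_diag
    return softmax_masked(scores, mask) @ v

def graph_modeling_block(x, adj, w):
    """Assumed GCN-style layer: normalized neighbourhood aggregation plus ReLU."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ x @ w, 0.0)

def encoder_layer(x, adj, params):
    """One hypothetical GTE layer: CNA + NNA (global), then GMB (local)."""
    cna = node_attention(x, adj, *params["cna"], connected=True)
    nna = node_attention(x, adj, *params["nna"], connected=False)
    h = x + cna + nna  # assumption: residual sum of both attention branches
    return h + graph_modeling_block(h, adj, params["gmb"])

rng = np.random.default_rng(0)
d = 8
# Hypothetical bubble diagram: four rooms connected in a chain 0-1-2-3.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, d))  # node embeddings, e.g. from Conv-MPN
params = {
    "cna": [rng.normal(size=(d, d)) * 0.1 for _ in range(3)],
    "nna": [rng.normal(size=(d, d)) * 0.1 for _ in range(3)],
    "gmb": rng.normal(size=(d, d)) * 0.1,
}
out = encoder_layer(x, adj, params)
print(out.shape)  # (4, 8)
```

Splitting the mask this way means each node still sees every other node across the two branches, while the model can learn separate projections for adjacent and non-adjacent rooms.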

Overall, our contributions are summarized as follows:

arXiv:2303.08225v1 [cs.CV] 14 Mar 2023

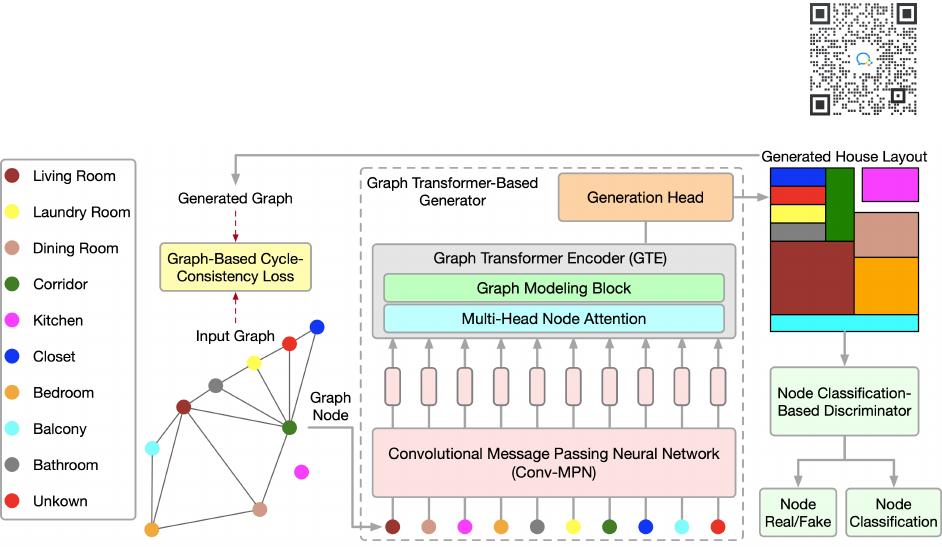

Figure 1. Overview of the proposed GTGAN on house layout generation. It consists of a novel graph Transformer-based generator G and

a novel node classification-based discriminator D. The generator takes graph nodes as input and aims to capture local and global relations

across connected and non-connected nodes using the proposed graph modeling block and multi-head node attention, respectively. Note that

we do not use position embeddings since our goal is to predict positional node information in the generated house layout. The discriminator

D aims to distinguish real and generated layouts and simultaneously classify the generated house layouts to their corresponding room

types. The graph-based cycle-consistency loss aligns the relative spatial relationships between ground truth and predicted nodes. The

whole framework is trained in an end-to-end fashion so that all components benefit from each other.

• We propose a novel Transformer-based network (i.e., GTGAN) for the challenging graph-constrained house generation task. To the best of our knowledge, GTGAN is the first Transformer-based framework for this task, enabling more effective relation reasoning for composing house layouts and validating adjacency constraints.

• We propose a novel graph Transformer generator that combines graph convolutional networks and Transformers to explicitly model global and local correlations across both connected and non-connected nodes simultaneously. We also propose a new node classification-based discriminator to preserve high-level semantic and discriminative features for different types of rooms.

• We propose a novel graph-based cycle-consistency loss to guide the learning process toward accurate relative spatial distances between graph nodes.

• Qualitative and quantitative experiments on two challenging graph-constrained house generation tasks (i.e., house layout generation and house roof generation) with two datasets demonstrate that GTGAN can generate better house structures than state-of-the-art methods, such as HouseGAN [26] and RoofGAN [30].
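The two training signals named in the contributions above can be sketched in NumPy as follows. This section of the paper does not give the exact loss formulas, so everything here is a hypothetical reading: the discriminator term is assumed to be a standard real/fake binary cross-entropy plus a per-room cross-entropy over room types, and the relative-spatial term is assumed to be an L1 penalty on pairwise room-center offsets, standing in for the graph-based cycle-consistency loss. All function names and the weight `lam` are illustrative.

```python
import numpy as np

def bce_with_logits(logits, targets):
    """Numerically stable binary cross-entropy on raw logits."""
    return np.mean(np.maximum(logits, 0) - logits * targets
                   + np.log1p(np.exp(-np.abs(logits))))

def cross_entropy(logits, labels):
    """Mean softmax cross-entropy; logits is (rooms, classes), labels is (rooms,)."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -np.mean(log_probs[np.arange(len(labels)), labels])

def discriminator_loss(real_logit, fake_logit, room_logits, room_labels, lam=1.0):
    """Assumed form: adversarial real/fake term plus room-type classification term."""
    adv = (bce_with_logits(real_logit, np.ones_like(real_logit))
           + bce_with_logits(fake_logit, np.zeros_like(fake_logit)))
    return adv + lam * cross_entropy(room_logits, room_labels)

def pairwise_offsets(centers):
    """Offset vector between every ordered pair of room centers, shape (n, n, 2)."""
    return centers[:, None, :] - centers[None, :, :]

def relative_position_loss(pred_centers, gt_centers):
    """Assumed L1 penalty on pairwise offsets between predicted and true layouts."""
    return np.mean(np.abs(pairwise_offsets(pred_centers)
                          - pairwise_offsets(gt_centers)))

# Hypothetical example: three rooms, three room types.
gt = np.array([[0.0, 0.0], [4.0, 0.0], [4.0, 3.0]])
shifted_layout = gt + np.array([5.0, -2.0])  # whole layout translated
swapped_layout = gt[[1, 0, 2]]               # two rooms exchanged

print(relative_position_loss(shifted_layout, gt))  # near 0: offsets are unchanged
print(relative_position_loss(swapped_layout, gt))  # positive: relations changed

d_loss = discriminator_loss(
    real_logit=np.array([2.0]), fake_logit=np.array([-1.0]),
    room_logits=np.array([[3.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 3.0]]),
    room_labels=np.array([0, 1, 2]),
)
print(d_loss)
```

A pairwise formulation is translation-invariant, which matches the stated goal of preserving relative rather than absolute positions; the paper's actual loss may differ.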

2. Related Work

Generative Adversarial Networks [12] have been widely used for image generation [19, 32, 35, 36]. The vanilla GAN consists of a generator and a discriminator. The generator aims to synthesize photorealistic images from a noise vector, while the discriminator aims to distinguish between real and generated samples. To create user-specific images, the conditional GAN (CGAN) [25] was proposed. A CGAN combines a vanilla GAN and external information, such as class labels [7], text descriptions [39, 40, 51], object keypoints [31], human skeletons [33], semantic maps [29, 37, 38], edge maps [34], or attention maps [24]. This paper mainly focuses on the challenging graph-constrained generation task, which aims to transfer an input graph to a realistic house.
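For reference, the generator/discriminator game described above is usually written as the following minimax objectives, first for the vanilla GAN and then for the conditional GAN. The notation is the standard one from the GAN literature rather than this paper's: $p_{\text{data}}$ is the data distribution, $p_z$ the noise prior, and $c$ the conditioning information, which in the graph-constrained setting would be the input graph.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$$
\min_G \max_D \; \mathbb{E}_{(x, c) \sim p_{\text{data}}}\big[\log D(x \mid c)\big] + \mathbb{E}_{z \sim p_z,\, c \sim p_c}\big[\log\big(1 - D(G(z \mid c) \mid c)\big)\big]
$$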

Graph-Constrained Layout Generation has been a focus of research recently [10, 15, 23, 43]. For example, Wang et al. [43] presented a layout generation framework that plans an indoor scene as a relation graph and iteratively inserts a 3D model at each node. Hu et al. [15] converted a layout graph along with a building boundary into a floorplan that fulfills both the layout and boundary constraints. Ashual et al. [2] and Johnson et al. [18] generated image layouts and synthesized realistic images from input scene graphs via GCNs. Nauata et al. [26] proposed a graph-constrained generative adversarial network, whose generator and discriminator are built upon a relational architecture. Our innovation is a novel graph Transformer GAN, where the input constraint is encoded into the graph structure of the proposed graph Transformer-based generator and node classification-based discriminator. Experimental results show that GTGAN outperforms the leading methods.

Transformers in Computer Vision. The Transformer was first proposed in [41] for machine translation and has established state-of-the-art results in many natural language
