Super-Resolution Neural Operator.pdf

50墨值下载

Super-Resolution Neural Operator

Min Wei

*

Xuesong Zhang

∗ †

Beijing University of Posts and Telecommunications, China

{mw, xuesong zhang}@bupt.edu.cn

Abstract

We propose Super-resolution Neural Operator (SRNO),

a deep operator learning framework that can resolve high-

resolution (HR) images at arbitrary scales from the low-

resolution (LR) counterparts. Treating the LR-HR image

pairs as continuous functions approximated with different

grid sizes, SRNO learns the mapping between the corre-

sponding function spaces. From the perspective of ap-

proximation theory, SRNO first embeds the LR input into

a higher-dimensional latent representation space, trying to

capture sufficient basis functions, and then iteratively ap-

proximates the implicit image function with a kernel inte-

gral mechanism, followed by a final dimensionality reduc-

tion step to generate the RGB representation at the target

coordinates. The key characteristics distinguishing SRNO

from prior continuous SR works are: 1) the kernel integral

in each layer is efficiently implemented via the Galerkin-

type attention, which possesses non-local properties in the

spatial domain and therefore benefits the grid-free contin-

uum; and 2) the multilayer attention architecture allows for

the dynamic latent basis update, which is crucial for SR

problems to “hallucinate” high-frequency information from

the LR image. Experiments show that SRNO outperforms

existing continuous SR methods in terms of both accuracy

and running time. Our code is at https://github.com/

2y7c3/Super-Resolution-Neural-Operator

1. Introduction

Single image super-resolution (SR) addresses the in-

verse problem of reconstructing high-resolution (HR) im-

ages from their low-resolution (LR) counterparts. In a data-

driven way, deep neural networks (DNNs) learn the inver-

sion map from many LR-HR sample pairs and have demon-

strated appealing performances [4, 20, 21, 24, 29, 40, 41].

Nevertheless, most DNNs are developed in the configura-

tion of single scaling factors, which cannot be used in sce-

*

Equal contributions. This work is supported by the National Natural

Science Foundation of China (61871055).

†

Corresponding author.

DIV2K( ) Urban100(

SRNO

)

𝑓

ℎ

Ω(𝒱

⋅⋅⋅

⋅⋅⋅

⋅⋅⋅

)

𝑓

ℎ

Ω(ℋ

)

𝑐

ℎ

Ω(ℋ

))Set5(

𝒫𝒦ℒ

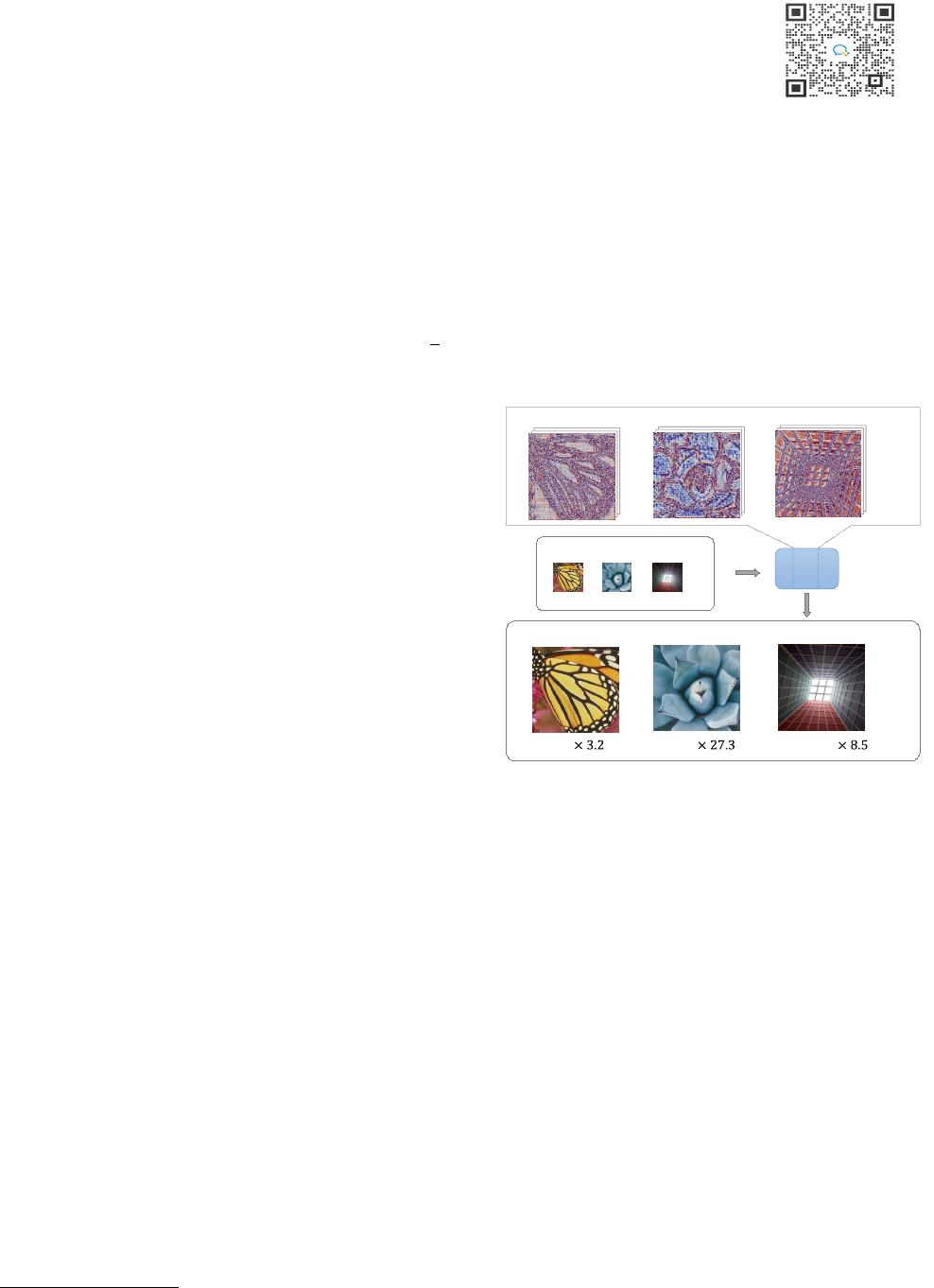

Figure 1. Overview of Super-Resolution Neural operator

(SRNO). SRNO is composed of three parts, L (Lifting), K (ker-

nel integrals) and P (Projection), which perform consecutively

to learn mappings between approximation spaces H(Ω

h

c

) and

H(Ω

h

f

) associated with grid sizes h

c

and h

f

, respectively. The

key component, K, uses test functions in the latent Hilbert space

V(Ω

h

f

) to seek instance-specific basis functions.

narios requiring arbitrary SR factors [37, 38]. Recently, im-

plicit neural functions (INF) [5, 18] have been proposed to

represent images in arbitrary resolution, and paving a feasi-

ble way for continuous SR. These networks, as opposed to

storing discrete signals in grid-based formats, represent sig-

nals with evaluations of continuous functions at specified

coordinates, where the functions are generally parameter-

ized by a multi-layer perceptron (MLP). To share knowl-

edge across instances instead of fitting individual functions

for each signal, encoder-based methods [5, 15, 26] are pro-

posed to retrieve latent codes for each signal, and then a de-

coding MLP is shared by all the instances to generate the

required output, where both the coordinates and the cor-

responding latent codes are taken as input. However, the

point-wise behavior of MLP in the spatial dimensions re-

sults in limited performance when decoding various objects,

particularly for high-frequency components [30, 32].

1

arXiv:2303.02584v1 [cs.CV] 5 Mar 2023

Neural operator is a newly proposed neural network ar-

chitecture in the field of computational physics [17, 19, 23]

for numerically efficient solvers of partial differential equa-

tions (PDE). Stemming from the operator theory, nueral op-

erators learn mappings between infinite-dimensional func-

tion spaces, which is inherently capable of continuous func-

tion evaluations and has shown promising potentials in var-

ious applications [9,13,27]. Typically, neural operator con-

sists of three components: 1) lifting, 2) iterative kernel in-

tegral, and 3) projection. The kernel integrals operate in the

spatial domain, and thus can explicitly capture the global

relationship constraining the underlying solution function

of the PDE. The attention mechanism in transformers [36]

is a special case of kernel integral where linear transforms

are first exerted to the feature maps prior to the inner prod-

uct operations [17]. Tremendous successes of transformers

in various tasks [6, 22, 34] have shown the importance of

capturing global correlations, and this is also true for SR to

improve performance. [8].

In this paper, we propose the super-resolution neural op-

erator (SRNO), a deep operator learning framework that

can resolve HR images from their LR counterparts at ar-

bitrary scales. As shown in Fig.1, SRNO learns the map-

ping between the corresponding function spaces by treating

the LR-HR image pairs as continuous functions approxi-

mated with different grid sizes. The key characteristics dis-

tinguishing SRNO from prior continuous SR works are: 1)

the kernel integral in each layer is efficiently implemented

via the Galerkin-type attention, which possesses non-local

properties in the spatial dimensions and have been proved

to be comparable to a Petrov-Galerkin projection [3]; and

2) the multilayer attention architecture allows for the dy-

namic latent basis update, which is crucial for SR problems

to “hallucinate” high-frequency information from the LR

image. When employing same encoders to capture features,

our method outperforms previous continuous SR methods

in terms of both reconstruction accuracy and running time.

In summary, our main contributions are as follows:

• We propose the methodology of super-resolution neu-

ral operator that maps between finite-dimensional

function spaces, allowing for continuous and zero-shot

super-resolution irrespective the discretization used on

the input and output spaces.

• We develop an architecture for SRNO that first ex-

plores the common latent basis for the whole training

set and subsequently refines an instance-specific basis

by the Galerkin-type attention mechanism.

• Numerically, we show that the proposed SRNO outper-

forms existing continuous SR methods with less run-

ning time, and even generates better results on the res-

olutions for which the fixed scale SR networks were

trained.

2. Related Work

Deep learning based SR methods. [4, 20, 21, 24, 29,

40, 41] have achieved impressive performances, in multi-

scale scenarios one has to train and store several models

for each scale factor, which is unfeasible when consider-

ing time and memory budgets. In recent years, several

methods [11, 31, 37] are proposed to achieve arbitrary-scale

SR with a single model, but their performances are limited

when dealing with out-of-distribution scaling factors. In-

spired by INF, LIIF [5] takes continuous coordinates and

latent variables as inputs, and employs an MLP to achieve

outstanding performances for both in-distribution and out-

of-distribution factors. In contrast, LTE [18] transforms in-

put coordinates into the Fourier domain and uses the dom-

inant frequencies extracted from latent variables to address

the spectral bias problem [30, 32]. In a nutshell, treat-

ing images as RGB-valued functions and sharing the im-

plicit function space are the keys to the success of LIIF-like

works [5, 18]. Nevertheless, a purely local decoder, like

MLP, is not able to accurately approximate arbitrary im-

ages, although it is rather sensitive to the input coordinates.

Neural Operators. Recently, a novel neural net-

work architecture, Neural Operator (NO), was proposed

for discretization invariant solutions of PDEs via infinite-

dimensional operator learning [10,17,19,23]. Neural opera-

tors only need to be trained once and are capable of transfer-

ring solutions between differently discretized meshes while

keeping a fixed approximation error. A valuable merit of

NO is that it does not require knowledge of the underlying

PDE, which allows us to introduce it by the following ab-

stract form,

(L

a

u)(x) = f(x), x ∈ D,

u(x) = 0, x ∈ ∂D,

(1)

where u : D → R

d

u

is the solution function residing in the

Banach space U, and L : A → L(U; U

∗

) is an operator-

valued functional that maps the coefficient function a ∈ A

of the PDE to f ∈ U

∗

, the dual space of U. As in many

cases the inverse operator of L even does not exist, NO

seeks a feasible operator G : A → U, a 7→ u, directly

mapping the coefficient to the solution within an acceptable

tolerance.

The operator G is numerically approximated by training

a neural network G

θ

: A → U, where θ are the train-

able parameters. Suppose we have N pairs of observa-

tions {a

j

, u

j

}

N

j=1

where the input functions a

j

are sam-

pled from probability measure µ compactly supported on

A, and u

j

= G(a

j

) are used as the supervisory output func-

tions. The infinitely dimensional operator learning problem

G ← G

θ

thus is associated with the empirical-risk mini-

mization problem [35]. In practice, we actually measure

the approximation loss using the sampled observations u

(j)

o

2

of 16

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论