CelebV-Text A Large-Scale Facial Text-Video Dataset.pdf

50墨值下载

CelebV-Text: A Large-Scale Facial Text-Video Dataset

Jianhui Yu

1∗

Hao Zhu

2∗

Liming Jiang

3

Chen Change Loy

3

Weidong Cai

1

Wayne Wu

4

1

University of Sydney

2

SenseTime Research

3

S-Lab, Nanyang Technological University

4

Shanghai AI Laboratory

jianhui.yu@sydney.edu.au haozhu96@gmail.com {liming002,ccloy}@ntu.edu.sg

tom.cai@sydney.edu.au wuwenyan0503@gmail.com

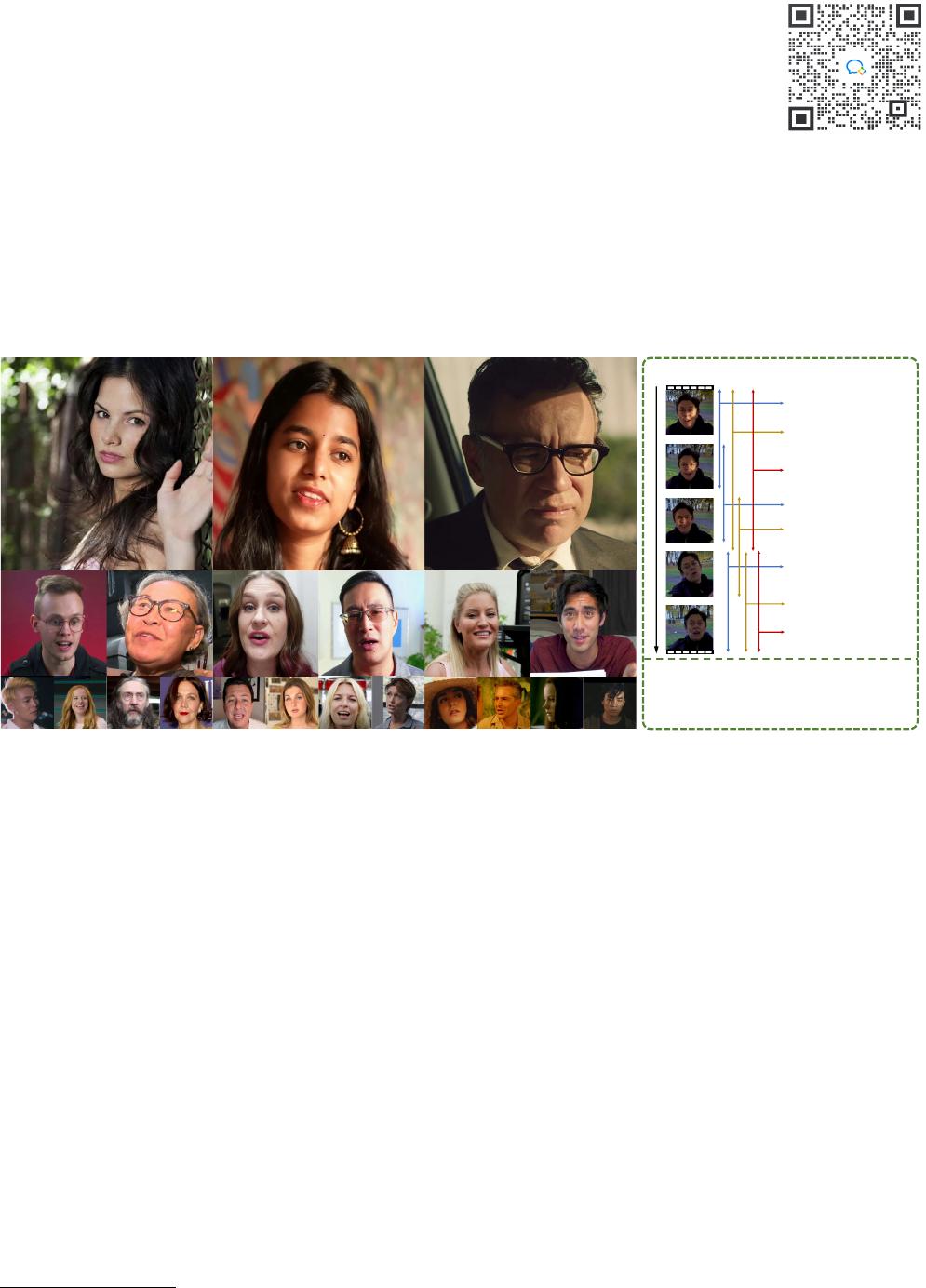

(a) Video Samples (left: general appearance, middle: detailed appearance, right:

changes to back lighting.

The light direction then

to the left of the face.

side lighting with 45 degrees

The light direction is firstly

surprised.isFinally, this man

Then, he turns to be contempt.

seems happy.

At the beginning, the man

and frowns at the same time.

In the end, he wags his head

He then smiles for a bit.

shot time.

This man begins to gaze for a

..

....

..

time

)Light directions/ Emotion/ Action(

Dynamic description:

(b) Text Descriptions

light conditions)

.temperaturecolorlightdayawithlight intensity of this video is darkThe

.freckle on the left cheekgets aHe and bags under eyes. eyebrows

archedThe man has a short black hair with a receding hairline. He has

)Light conditions/ Detailed Appearance / General Appearance(

Static description:

Figure 1. Overview of CelebV-Text. CelebV-Text contains (a) 70, 000 video samples and (b) 1, 400, 000 text descriptions. Each video

sample is annotated with general appearance, detailed appearance, light conditions, action, emotion, and light directions.

Abstract

Text-driven generation models are flourishing in video

generation and editing. However, face-centric text-to-video

generation remains a challenge due to the lack of a suitable

dataset containing high-quality videos and highly relevant

texts. This paper presents CelebV-Text, a large-scale, di-

verse, and high-quality dataset of facial text-video pairs, to

facilitate research on facial text-to-video generation tasks.

CelebV-Text comprises 70,000 in-the-wild face video clips

with diverse visual content, each paired with 20 texts gen-

erated using the proposed semi-automatic text generation

strategy. The provided texts are of high quality, describ-

ing both static and dynamic attributes precisely. The supe-

riority of CelebV-Text over other datasets is demonstrated

via comprehensive statistical analysis of the videos, texts,

and text-video relevance. The effectiveness and potential

of CelebV-Text are further shown through extensive self-

evaluation. A benchmark is constructed with representa-

tive methods to standardize the evaluation of the facial text-

to-video generation task. All data and models are publicly

available

1

.

*

Equal contribution.

1

Project page: https://celebv-text.github.io

1. Introduction

Text-driven video generation has recently garnered sig-

nificant attention in the fields of computer vision and com-

puter graphics. By using text as input, video content can be

generated and controlled, inspiring numerous applications

in both academia and industry [5,35,45,49]. However, text-

to-video generation still faces many challenges, particularly

in the face-centric scenario where generated video frames

often lack quality [19, 35, 39] or have weak relevance to in-

put texts [2,4,41,74]. We believe that one of the main issues

is the absence of a well-suited facial text-video dataset con-

taining high-quality video samples and text descriptions of

various attributes highly relevant to videos.

Constructing a high-quality facial text-video dataset

poses several challenges, mainly in three aspects. 1) Data

collection. The quality and quantity of video samples

largely determine the quality of generated videos [11, 47,

50, 66]. However, obtaining such a large-scale dataset with

high-quality samples while maintaining a natural distribu-

tion and smooth video motion is challenging. 2) Data an-

notation. The relevance of text-video pairs needs to be en-

sured. This requires a comprehensive coverage of text for

describing the content and motion appearing in the video,

1

arXiv:2303.14717v1 [cs.CV] 26 Mar 2023

such as light conditions and head movements. 3) Text gen-

eration. Producing diverse and natural texts are non-trivial.

Manual text generation is expensive and not scalable. While

auto-text generation is easily extensible, it is limited in nat-

uralness.

To overcome the challenges mentioned above, we care-

fully design a comprehensive data construction pipeline that

includes data collection and processing, data annotation,

and semi-auto text generation. First, to obtain raw videos,

we follow the data collection steps of CelebV-HQ, which

has proven to be effective in [73]. We introduce a minor

modification to the video processing step to improve the

video’s smoothness further. Next, to ensure highly relevant

text-video pairs, we analyze videos from both temporal dy-

namics and static content and establish a set of attributes

that may or may not change over time. Finally, we propose

a semi-auto template-based method to generate texts that

are diverse and natural. Our approach leverages the advan-

tages of both auto- and manual-text methods. Specifically,

we design a rich variety of grammar templates as [10,57] to

parse annotation and manual texts, which are flexibly com-

bined and modified to achieve high diversity, complexity,

and naturalness.

With the proposed pipeline, we create CelebV-Text,

a Large-Scale Facial Text-Video Dataset, which includes

70, 000 in-the-wild video clips with a resolution of at least

512× 512 and 1, 400, 000 text descriptions with 20 for each

clip. As depicted in Figure 1, CelebV-Text consists of high-

quality video samples and text descriptions for realistic face

video generation. Each video is annotated with three types

of static attributes (40 general appearances, 5 detailed ap-

pearances, and 6 light conditions) and three types of dy-

namic attributes (37 actions, 8 emotions, and 6 light direc-

tions). All dynamic attributes are densely annotated with

start and end timestamps, while manual-texts are provided

for labels that cannot be discretized. Furthermore, we have

designed three templates for each attribute type, resulting in

a total of 18 templates that can be flexibly combined. All

attributes and manual-texts are naturally described in our

generated texts.

CelebV-Text surpasses existing face video datasets [11]

in terms of resolution (over 2 times higher), number of sam-

ples, and more diverse distribution. In addition, the texts in

CelebV-Text exhibit higher diversity, complexity, and natu-

ralness than those in text-video datasets [20, 73]. CelebV-

Text also shows high relevance of text-video pairs, validated

by our text-video retrieval experiments [18]. To further ex-

amine the effectiveness and potential of CelebV-Text, we

evaluate it on a representative baseline [20] for facial text-

to-video generation. Our results show better relevance be-

tween generated face videos and texts when compared to

a state-of-the-art large-scale pretrained model [27]. Fur-

thermore, we show that a simple modification of [20] with

text interpolation can significantly improve temporal coher-

ence. Finally, we present a new benchmark for text-to-video

generation to standardize the facial text-to-video generation

task, which includes representative models [5, 20] on three

text-video datasets.

The main contributions of this work are summarized as

follows: 1) We propose CelebV-Text, the first large-scale

facial text-video dataset with high-quality videos, as well

as rich and highly-relevant texts, to facilitate research in fa-

cial text-to-video generation. 2) Comprehensive statistical

analyses are conducted to examine video/text quality and

diversity, as well as text-video relevance, demonstrating the

superiority of CelebV-Text. 3) A series of self-evaluations

are performed to demonstrate the effectiveness and poten-

tial of CelebV-Text. 4) A new benchmark for text-to-video

generation is constructed to promote the standardization of

the facial text-to-video generation task.

2. Related Work

Text-to-Video Generation. Text-driven video generation,

which involves generating videos from text descriptions,

has recently gained significant interest as a challenging task.

Mittal et al. [45] first introduced this task to generate se-

mantically consistent videos conditioned on encoded cap-

tions. Other studies, such as [5, 16, 48], attempt to generate

video samples conditioned on encoded text inputs. How-

ever, due to the low richness of text descriptions and the

small number of data samples, the generated video samples

are often at low resolution or lack relevance with the input

texts. More recently, several works [20, 27, 28, 61, 63–65]

have employed discrete latent codes [17, 60] for more re-

alistic video generation. Some of these works treat videos

as a sequence of independent images [20, 27, 64, 65], while

Phenaki [61] considers temporal relations between each

frame for a more robust video decoding process. An-

other branch of studies leverage diffusion models for text-

to-video generation [21, 25, 26, 55], which require mil-

lions or billions of samples to achieve high-quality gener-

ation. While text-to-video generation methods are rapidly

evolving, they are generally designed for generating gen-

eral videos. Among these methods, only MMVID [20] has

conducted specific experiments with face-centric descrip-

tions. One possible reason for this is that facial text-to-

video generation requires more accurate and detailed text

descriptions than general tasks. However, there is currently

no suitable dataset available that provides such properties

for face-centric text-to-video generation.

Multimodal Datasets. Existing multimodal datasets can be

categorized into two classes: open-world and closed-world.

Open-world datasets [3,9,13,33,36,37,42,45,53,54,68,72]

are widely used for text-to-image/video generation tasks.

Some of them have manual annotations [13, 33, 36, 54, 68]

and part of them are directly collected from the Internet,

such as subtitles [42, 53]. Closed-world datasets are mostly

composed of images or videos collected in constrained en-

vironment with corresponding information such as text.

CLEVR [29] is a synthetic text-image dataset produced by

arranging 3D objects with different shapes under a con-

trolled background. While MUGEN [22] is a video-audio-

text dataset that was collected using CoinRun [12] by intro-

ducing audio and new interactions. The corresponding text

is produced by human annotators and grammar templates.

2

of 21

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论