VideoFusion.pdf

50墨值下载

VideoFusion: Decomposed Diffusion Models for High-Quality Video Generation

*

Zhengxiong Luo

1,2,4,5

Dayou Chen

2

Yingya Zhang

2

†

Yan Huang

4,5

Liang Wang

4,5

Yujun Shen

3

Deli Zhao

2

Jingren Zhou

2

Tieniu Tan

4,5,6

1

University of Chinese Academy of Sciences (UCAS)

2

Alibaba Group

3

Ant Group

4

Center for Research on Intelligent Perception and Computing (CRIPAC)

5

Institute of Automation, Chinese Academy of Sciences (CASIA)

6

Nanjing University

zhengxiong.luo@cripac.ia.ac.cn {dayou.cdy, yingya.zyy, jingren.zhou}@alibaba-inc.com

{shenyujun0302, zhaodeli}@gmail.com {yhuang, wangliang, tnt}@nlpr.ia.ac.cn

Shared Base Noise

Shared Residual Noise

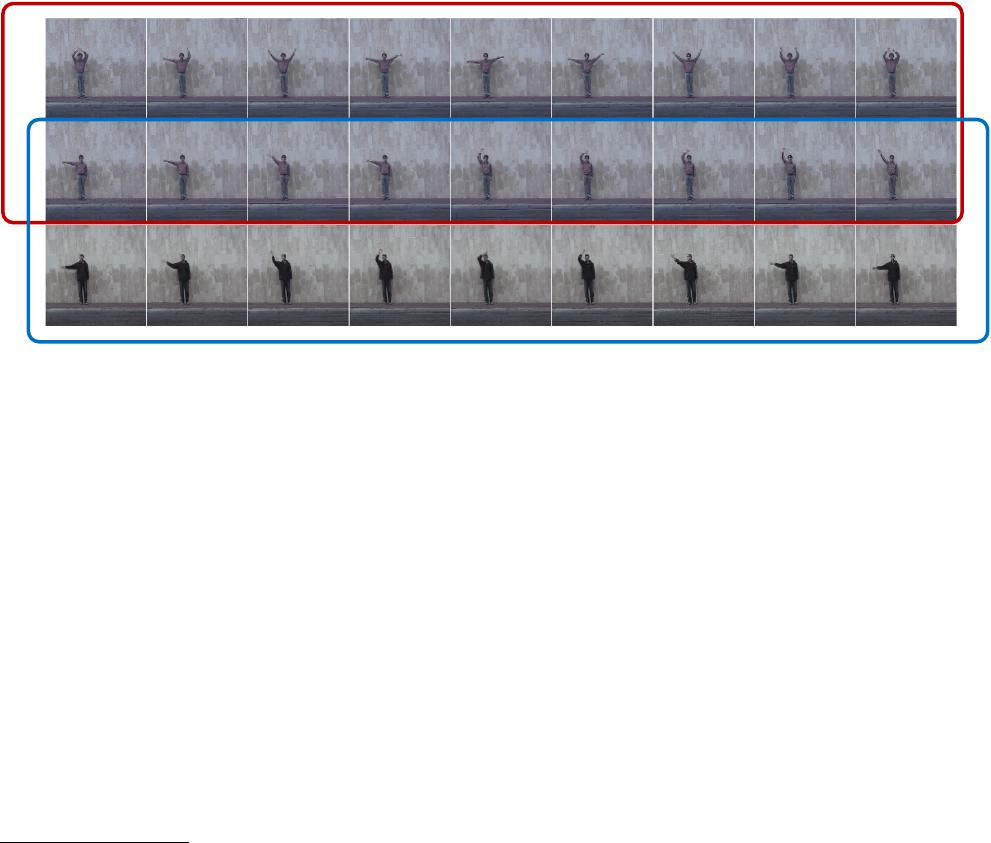

Figure 1. Unconditional generation results on the Weizmann Action dataset [11]. Videos of the top-two rows share the same base noise but

have different residual noises. Videos of the bottom two rows share the same residual noise but have different base noises.

Abstract

A diffusion probabilistic model (DPM), which constructs

a forward diffusion process by gradually adding noise to

data points and learns the reverse denoising process to gen-

erate new samples, has been shown to handle complex data

distribution. Despite its recent success in image synthesis,

applying DPMs to video generation is still challenging due

to high-dimensional data spaces. Previous methods usually

adopt a standard diffusion process, where frames in the

same video clip are destroyed with independent noises,

ignoring the content redundancy and temporal correlation.

This work presents a decomposed diffusion process via

resolving the per-frame noise into a base noise that is

shared among all frames and a residual noise that varies

along the time axis. The denoising pipeline employs two

*

Work done at Alibaba DAMO Academy.

†

Corresponding author.

jointly-learned networks to match the noise decomposition

accordingly. Experiments on various datasets confirm

that our approach, termed as VideoFusion, surpasses both

GAN-based and diffusion-based alternatives in high-quality

video generation. We further show that our decomposed

formulation can benefit from pre-trained image diffusion

models and well-support text-conditioned video creation.

1. Introduction

Diffusion probabilistic models (DPMs) are a class of

deep generative models, which consist of : i) a diffusion

process that gradually adds noise to data points, and ii) a

denoising process that generates new samples via iterative

denoising [14, 18]. Recently, DPMs have made awesome

achievements in generating high-quality and diverse im-

ages [20–22, 25, 27, 36].

Inspired by the success of DPMs on image generation,

many researchers are trying to apply a similar idea to video

arXiv:2303.08320v3 [cs.CV] 22 Mar 2023

prediction/interpolation [13, 44, 48]. While study about

DPMs for video generation is still at an early stage [16] and

faces challenges since video data are of higher dimensions

and involve complex spatial-temporal correlations.

Previous DPM-based video-generation methods usually

adopt a standard diffusion process, where frames in the

same video are added with independent noises and the

temporal correlations are also gradually destroyed in noised

latent variables. Consequently, the video-generation DPM

is required to reconstruct coherent frames from independent

noise samples in the denoising process. However, it is quite

challenging for the denoising network to simultaneously

model spatial and temporal correlations.

Inspired by the idea that consecutive frames share most

of the content, we are motivated to think: would it be

easier to generate video frames from noises that also

have some parts in common? To this end, we modify

the standard diffusion process and propose a decomposed

diffusion probabilistic model, termed as VideoFusion, for

video generation. During the diffusion process, we resolve

the per-frame noise into two parts, namely base noise

and residual noise, where the base noise is shared by

consecutive frames. In this way, the noised latent variables

of different frames will always share a common part,

which makes the denoising network easier to reconstruct

a coherent video. For intuitive illustration, we use the

decoder of DALL-E 2 [25] to generate images conditioned

on the same latent embedding. As shown in Fig. 2a, if

the images are generated from independent noises, their

content varies a lot even if they share the same condition.

But if the noised latent variables share the same base noise,

even an image generator can synthesize roughly correlated

sequences (shown in Fig. 2b). Therefore, the burden of the

denoising network of video-generation DPM can be largely

alleviated.

Furthermore, this decomposed formulation brings addi-

tional benefits. Firstly, as the base noise is shared by all

frames, we can predict it by feeding one frame to a large

pretrained image-generation DPM with only one forward

pass. In this way, the image priors of the pretrained

model could be efficiently shared by all frames and thereby

facilitate the learning of video data. Secondly, the base

noise is shared by all video frames and is likely to be related

to the video content. This property makes it possible for

us to better control the content or motions of generated

videos. Experiments in Sec. 4.7 show that, with adequate

training, VideoFusion tends to relate the base noise with

video content and the residual noise to motions (Fig. 1).

Extensive experiments show that VideoFusion can achieve

state-of-the-art results on different datasets and also well

support text-conditioned video creation.

(a)

(b)

Figure 2. Comparison between images generated from (a) inde-

pendent noises; (b) noises with a shared base noise. Images of the

same row are generated by the decoder of DALL-E 2 [25] with the

same condition.

2. Related Works

2.1. Diffusion Probabilistic Models

DPM is first introduced in [35], which consists of a

diffusion (encoding) process and a denoising (decoding)

process. In the diffusion process, it gradually adds random

noises to the data x via a T -step Markov chain [18]. The

noised latent variable at step t can be expressed as:

z

t

=

p

ˆα

t

x +

p

1 − ˆα

t

t

(1)

with

ˆα

t

=

t

Y

k=1

α

k

t

∼ N(0, 1), (2)

where α

t

∈ (0, 1) is the corresponding diffusion coefficient.

For a T that is large enough, e.g. T = 1000, we have

√

ˆα

T

≈ 0 and

√

1 − ˆα

T

≈ 1. And z

T

approximates a

random gaussian noise. Then the generation of x can be

modeled as iterative denoising.

In [14], Ho et al. connect DPM with denoising score

matching [37] and propose a -prediction form for the

denoising process:

L

t

= k

t

− z

θ

(z

t

, t)k

2

, (3)

where z

θ

is a denoising neural network parameterized by

θ, and L

t

is the loss function. Based on this formulation,

DPM has been applied to various generative tasks, such as

image-generation [15, 25], super-resolution [19, 28], image

translation [31], etc., and become an important class of deep

generative models. Compared with generative adversarial

networks (GANs) [10], DPMs are easier to be trained and

able to generate more diverse samples [5, 26].

2.2. Video Generation

Video generation is one of the most challenging tasks in

the generative research field. It not only needs to generate

high-quality frames but also the generated frames need to be

temporally correlated. Previous video-generation methods

are mainly GAN-based. In VGAN [45] and TGAN [29],

of 10

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论