VLDB2024_CoroGraph:Bridging Cache Efficiency and Work Efficiency for Graph Algorithm Execution_华为.pdf

免费下载

CoroGraph: Bridging Cache Eiciency and Work Eiciency for

Graph Algorithm Execution

Xiangyu Zhi

Xiao Yan*

Department of Computer Science and

Engineering, Southern University of

Science and Technology

zhixy2021@mail.sustech.edu.cn

yanx@sustech.edu.cn

Bo Tang

Ziyao Yin

Department of Computer Science and

Engineering, Southern University of

Science and Technology

tangb3@sustech.edu.cn

yinzy2020@mail.sustech.edu.cn

Yanchao Zhu

Minqi Zhou

Gauss Department, Huawei Company

zhuyanchao2@huawei.com

zhouminqi@huawei.com

ABSTRACT

Many systems are designed to run graph algorithms eciently in

memory but they achieve only cache eciency or work eciency.

We tackle this fundamental trade-o in existing systems by design-

ing CoroGraph, a system that attains both cache eciency and work

eciency for in-memory graph processing. CoroGraph adopts a

novel hybrid execution model, which generates update messages

at vertex granularity to prioritize promising vertices for work e-

ciency, and commits updates at partition granularity to share data

access for cache eciency. To overlap the random memory access

of graph algorithms with computation, CoroGraph extensively uses

coroutine, i.e., a lightweight function in C++ that can yield and

resume with low overhead, to prefetch the required data. A suite

of designs are incorporated to reap the full benets of coroutine,

which include prefetch pipeline, cache-friendly graph format, and

stop-free synchronization. We compare CoroGraph with ve state-

of-the-art graph algorithm systems via extensive experiments. The

results show that CoroGraph yields shorter algorithm execution

time than all baselines in 18 out of 20 cases, and its speedup over the

best-performing baseline can be over 2x. Detailed proling suggests

that CoroGraph achieves both cache eciency and work eciency

with a low memory stall and a small number of processed edges.

PVLDB Reference Format:

Xiangyu Zhi, Xiao Yan, Bo Tang, Ziyao Yin, Yanchao Zhu, Minqi Zhou.

CoroGraph: Bridging Cache Eciency and Work Eciency for Graph

Algorithm Execution. PVLDB, 17(4): 891 - 903, 2023.

doi:10.14778/3636218.3636240

PVLDB Artifact Availability:

The source code, data, and/or other artifacts have been made available at

https://github.com/DBGroup-SUSTech/corograph.

1 INTRODUCTION

Graphs are ubiquitous in many domains such as social media [

13

,

15

], e-commerce [

7

], nance [

1

,

14

], and biology [

22

]. Algorithms

are executed on these graphs to support various applications, e.g.,

*Xiao Yan is the corresponding author.

This work is licensed under the Creative Commons BY-NC-ND 4.0 International

License. Visit https://creativecommons.org/licenses/by-nc-nd/4.0/ to view a copy of

this license. For any use beyond those covered by this license, obtain permission by

emailing info@vldb.org. Copyright is held by the owner/author(s). Publication rights

licensed to the VLDB Endowment.

Proceedings of the VLDB Endowment, Vol. 17, No. 4 ISSN 2150-8097.

doi:10.14778/3636218.3636240

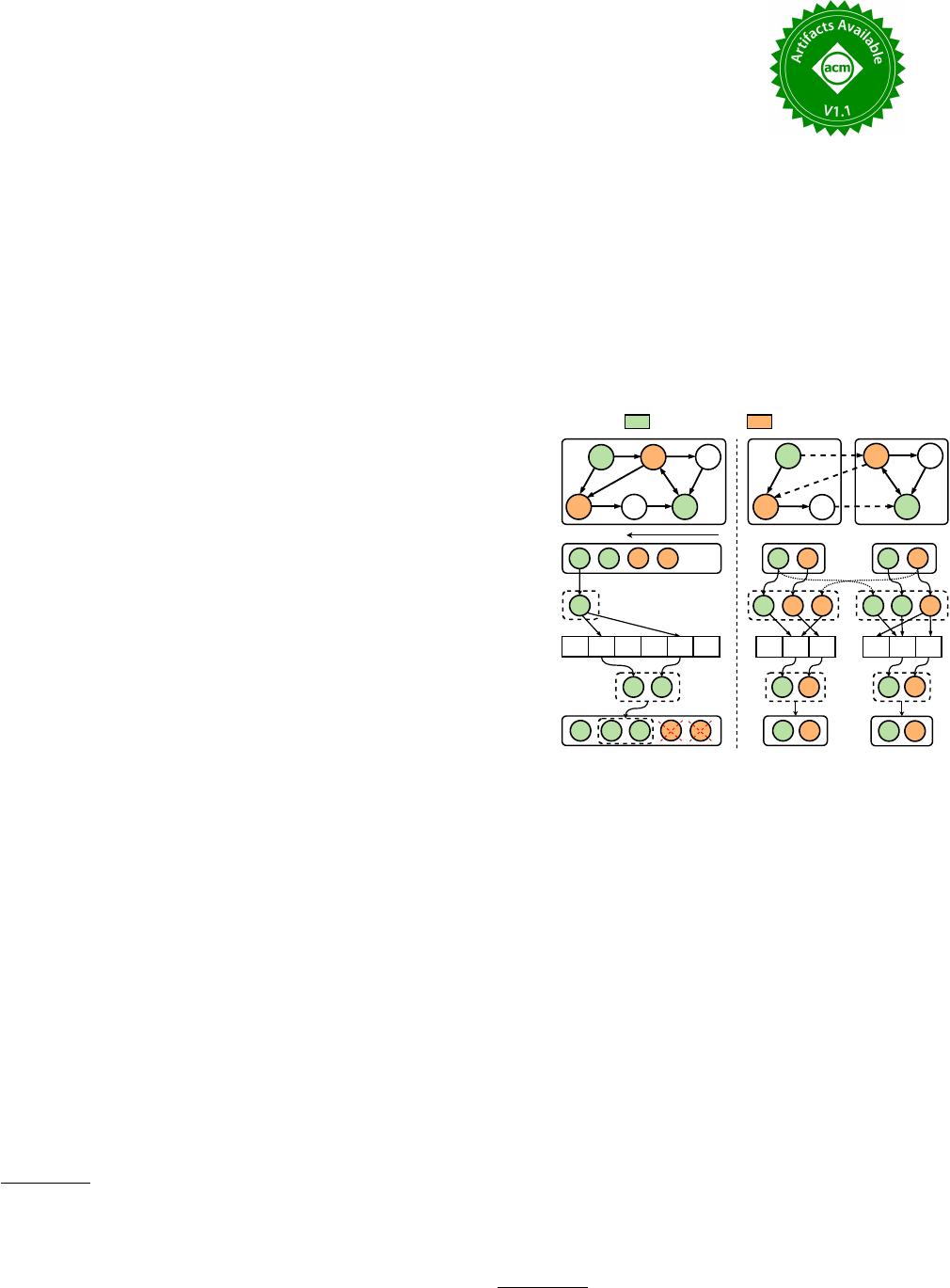

scatter

gather

B C D

E FA

Priority

Graph

Frontiers

...

A

Partition 1 Partition 2

BA A DE E

(a) Vertex-centric Model

(b) Partition-centric Model

A B C D E F

A B C D E F

B E B C

A D B E BA D E

Frontier

State

Next Frontiers

update

B C D

E FA

E F

Frontiers

D B EB E B C

E F

High priority Low priority

Figure 1: The partition-centric and vertex-centric execution

models in existing systems (best viewed in color).

community identication [

2

,

45

], recommendation [

26

], fraud de-

tection [

4

], and drug discovery [

20

]. Examples of popular graph al-

gorithms include PageRank (PR), single source shortest path (SSSP),

weakly connected component (WCC), and k-core [

43

]. Many frame-

works are designed to execute graph algorithms eciently, e.g.,

Ligra [

46

], Galois [

40

], Gemini [

50

], GraphIt [

49

], and GPOP [

31

].

In this paper, we consider in-memory graph algorithm frameworks

that work on a single machine with multiple cores and focus on

reducing algorithm execution time.

1.1 Motivation and Problem

Graph algorithms generally adopt the following pattern: each vertex

𝑣

in the graph is associated with a vertex state

𝑠 [𝑣]

; there are some

active vertices called frontiers; algorithm updates the neighbors

1

of each frontier, and these neighbors may become new frontiers.

Existing graph algorithm frameworks optimize either the work

eciency or cache eciency of graph algorithm execution.

Work eciency is measured by the number of vertex state update

operations conducted during graph algorithm execution [

33

,

40

].

1

To be precise, we mean the vertex states of the neighbors for a frontier. For conciseness,

we use vertex to denote vertex state when the context is clear.

891

Since each update is conducted along an edge (i.e., from frontier to

target vertex), work eciency is also quantied by the number of

processed edges [

33

]. Existing systems (e.g., Galois) achieve work

eciency with a vertex-centric execution model. The observation is

that frontiers make dierent contributions to algorithm progress,

and thus work-ecient graph algorithms (e.g., SSSP, k-core, and

WCC) determine a priority value for each frontier to prioritize

promising frontiers that accelerate algorithm progress. As shown

in Figure 1(a), the vertex-centric model uses a queue to manage the

frontiers, and the highest-priority frontier (e.g.,

𝐴

) is obtained from

the queue each time. The neighbors of the dequeued frontier (e.g.,

𝐵

and

𝐸

) are updated, and if a neighbor meets the condition specied

by the algorithm after update, it is inserted into the frontier queue,

and its lower-priority instances will be pruned.

Cache eciency is quantied by the ratio of the CPU stall time

caused by cache misses over the algorithm execution time (called

memory bound [

10

]) [

46

,

47

]. Since the neighbors of a frontier are

usually not consecutive in memory, the neighbor update operation

of graph algorithms essentially conducts random memory access.

Random access causes both read amplication and cache miss

2

,

which result in high memory bound. Existing systems (e.g., GPOP)

improve cache eciency with a partition-centric execution model.

In particular, the vertex states are organized into partitions that t in

L2 or L3 cache, and processing is conducted at partition granularity.

As shown in Figure 1(b), the partition-centric model involves a

scatter phase and a gather phase. In the scatter phase, the frontiers

of each partition is copied to the partitions where its neighboring

vertices reside. For instance, the neighbors of frontier

𝐴

span two

partitions, so

𝐴

is copied to both partitions. In the gather phase,

each partition collects the states from the scatter phase, updates

the vertices within the partition, and gets the frontier for the next

iteration. By ensuring that the working partition resides in cache,

the partition-centric model avoids memory stall and achieves high

cache eciency.

Table 1 shows the trade-o between cache eciency and work

eciency in existing graph frameworks. In particular, GPOP has low

memory stall but poor work eciency. This is because its partition-

centric model batches computation by partition to share data access

and cannot follow the priority order to process individual frontiers.

Galois conducts less work but has high memory stall. This is because

its vertex-centric model processes each frontier individually and

cannot share data access among the frontiers as in the partition-

centric model. This fundamental trade-o leads us to the following

question–is it possible to architect a graph algorithm framework that

achieves both cache and work eciency?

1.2 Our Solution: CoroGraph

Our initial design is to reduce memory stall for the vertex-centric

model with a coroutine-based prefetch pipeline. In particular, soft-

ware prefetch allows programs to specify the data to load [

9

], and

coroutines are lightweight functions that can yield and resume with

low overhead [

19

]. In our pipeline, processing tasks are conducted

2

Read amplication means that a oat or integer vertex state is fetched via 64-byte

cache line load while cache miss happens because the automatic hardware prefetch of

CPU only loads consecutive data.

Table 1: Execution statistics of representative graph frame-

works and our CoroGraph. The algorithm is SSSP, and the

graph is Livejournal. GPOP targets cache eciency while

Galois targets work eciency. For both memory bound and

processed edges (i.e., # of edges), smaller is better.

System

SSSP

Memory bound # of edges (M) Time (ms)

Ligra 65.3% 569 1270

Gemini 55.2% 573 954

GraphIt 60.5% 145 782

GPOP 32.9% 604 594

Galois 70.2% 89 513

CoroGraph 28.3% 68 262

by coroutines, which issue prefetch instructions, switch to the com-

putation tasks of other coroutines, and resume for computation

after the required data are fetched to cache. This design overlaps

memory access and computation but the performance is unsatis-

factory for two reasons. First, memory stall dominates execution

time for vertex-centric model (over 70% for Galois in Table 1), and

thus the gain is limited even if memory access and computation are

perfectly overlapped (e.g., max speedup for Galois is 100

/

70

≈

1

.

43).

Second, the threads have data conicts when processing dierent

frontiers in parallel because the frontiers share common neighbors,

and these data conicts invalidate prefetched data.

To tackle the problems above, CoroGraph adopts a novel hybrid

execution model that combines the benets of partition-centric and

vertex-centric execution. In particular, like vertex-centric execution,

the frontiers are managed by a priority queue and processed in the

order of their contributions to algorithm progress, resulting in

good work eciency. Meanwhile, like partition-centric execution,

updates to vertex states are committed at partition granularity.

To accommodate the two mismatching patterns, the frontiers are

processed in a scatter phase that generates update messages while

the actual updates are conduct in a gather phase. A single thread is

assigned to process all updates to a partition, which results in good

cache eciency by sharing data access among update operations.

The thread-to-partition update pattern also benets prefetch by

eliminating the data conicts that invalidate prefetched data.

Beside the hybrid execution model, we tailor a suite of optimiza-

tions in CoroGraph. First, we use the aforementioned coroutine-

based prefetch pipeline to overlap memory access with computation.

Second, we propose a lightweight but eective strategy to switch

between prefetch and direct data access because prefetch becomes

slower than direct access as a thread gradually warms the cache by

processing a partition. Third, since the threads are blocked when

waiting to access shared data structures, we adopt a exible syn-

chronization strategy, which allows the threads to proceed with

other tasks instead of blocking. Besides, we also adjust the graph

storage format to reduce cache miss and consider the NUMA ar-

chitecture of modern processors when scheduling the tasks and

conguring the prefetch pipeline.

We experiment with 4 popular graph algorithms (i.e., SSSP, k-

core, PR, and WCC) and compare our CoroGraph with 5 sate-of-the-

art graph algorithm frameworks (i.e., Ligra, Gemini, GPOP, Galois,

892

of 13

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

文档被以下合辑收录

评论