
AutoTQA: Towards Autonomous Tabular Question Answering through Multi-Agent Large Language Models

Jun-Peng Zhu

East China Normal University

& PingCAP, China

zjp.dase@stu.ecnu.edu.cn

Peng Cai

East China Normal University

pcai@dase.ecnu.edu.cn

Kai Xu

PingCAP, China

xukai@pingcap.com

Li Li

PingCAP, China

lili@pingcap.com

Yishen Sun

PingCAP, China

sunyishen@pingcap.com

Shuai Zhou

PingCAP, China

zhoushuai@pingcap.com

Haihuang Su

PingCAP, China

suhaihuang@pingcap.com

Liu Tang

PingCAP, China

tl@pingcap.com

Qi Liu

PingCAP, China

liuqi@pingcap.com

ABSTRACT

With the growing significance of data analysis, several studies aim to provide precise answers to users' natural language questions from tables, a task referred to as tabular question answering (TQA). State-of-the-art TQA approaches can handle only single-table questions. However, real-world TQA problems are inherently complex and frequently involve multiple tables. Directly extending single-table TQA designs to multiple tables is difficult, primarily because most single-table TQA methods have limited extensibility.

This paper proposes AutoTQA, a novel Autonomous Tabular Question Answering framework that employs multi-agent large language models (LLMs) across multiple tables from various systems (e.g., TiDB, BigQuery). AutoTQA comprises five agents: the User, responsible for receiving the user's natural language inquiry; the Planner, tasked with creating an execution plan for the inquiry; the Engineer, responsible for executing the plan step by step; the Executor, which provides various execution environments (e.g., text-to-SQL) to fulfill specific tasks assigned by the Engineer; and the Critic, responsible for judging whether the user's inquiry has been answered and for identifying gaps between the current results and the initial tasks. To facilitate interaction between the agents, we have also devised agent scheduling algorithms. Furthermore, we have developed LinguFlow, an open-source, low-code visual programming tool for quickly building and debugging LLM-based applications and for accelerating the creation of external tools and execution environments. We also implemented a series of data connectors that allow AutoTQA to access tables from multiple systems. Extensive experiments show that AutoTQA delivers outstanding performance on four representative datasets.

This work is licensed under the Creative Commons BY-NC-ND 4.0 International License. Visit https://creativecommons.org/licenses/by-nc-nd/4.0/ to view a copy of this license. For any use beyond those covered by this license, obtain permission by emailing info@vldb.org. Copyright is held by the owner/author(s). Publication rights licensed to the VLDB Endowment.

Proceedings of the VLDB Endowment, Vol. 17, No. 12 ISSN 2150-8097.

doi:10.14778/3685800.3685816

PVLDB Reference Format:

Jun-Peng Zhu, Peng Cai, Kai Xu, Li Li, Yishen Sun, Shuai Zhou, Haihuang Su, Liu Tang, Qi Liu. AutoTQA: Towards Autonomous Tabular Question Answering through Multi-Agent Large Language Models. PVLDB, 17(12): 3920-3933, 2024.

doi:10.14778/3685800.3685816

1 INTRODUCTION

Tabular Question Answering (TQA) [4, 7, 8, 12, 16, 20, 24, 27, 52–54, 59] is a crucial task in data analysis that focuses on answering a user's natural language (NL) inquiry from tables. Data are commonly presented in tabular form in scenarios such as financial reports and statistical reports, and users often need expertise to handle questions in such scenarios effectively. TQA approaches address these issues without demanding extensive expertise in natural language processing and data analysis. In real scenarios, however, tables take complex forms, such as relational database (RDB) tables (i.e., structured tables) and web tables. Furthermore, TQA tasks can involve manipulating multiple tables with operations such as joins and set operations. Developing a unified TQA framework applicable to these varied scenarios remains a prominent research focus in data analysis and natural language processing.

State-of-the-art large language models (LLMs) such as ChatGPT [25], BLOOM [36], and LLaMA [39] have developed rapidly in pursuit of general artificial intelligence, showcasing remarkable zero-shot [44] capabilities in a variety of linguistic applications. However, while these LLMs excel at general knowledge, they lack domain-specific training and may therefore produce erroneous answers in specific domains such as data analysis, so they should not be relied upon entirely [60]. The emergence of LLMs thus presents new opportunities and challenges for TQA tasks.

Limitations of Prior Art. To the best of our knowledge, state-of-the-art TQA approaches have the following limitations:

(1) Lack of eective solutions in multi-table TQA. Current

TQA approaches [

8

,

16

,

24

,

52

,

54

] are typically limited to address-

ing single-table TQA tasks. In this context, users send a natural

language inquiry, and these approaches can only analyze data from

3920

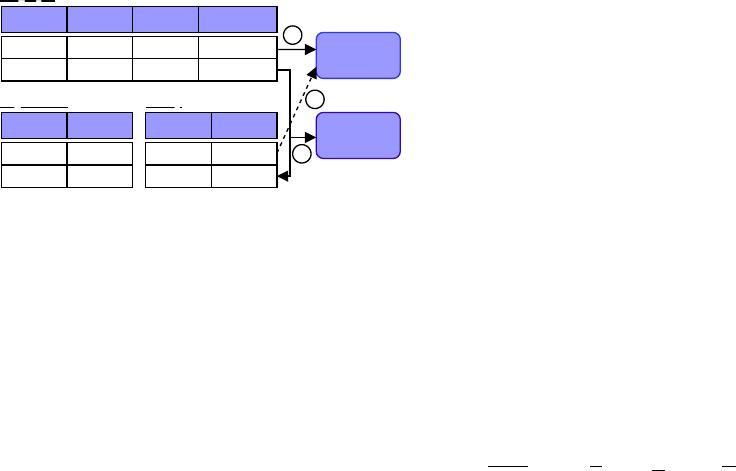

ID Name Email Depno

001 Lucy lucy@com 1

002 Tom tom@com 2

Employee

ID Bonus

001 $2100

002 $1569

Salary

What is the year-end bonus for Tom?

Single-table

QA

Depno DepName

1 R&D

2 FINANCE

Department

Multi-table

QA

1

2

3

Figure 1: A Motivating Example for Multiple Tables TQA.

a single table specied by a user to provide answers. Typically, this

process involves employing Python or SQL for data manipulation

on a single table. However, a common scenario arises where, for

example, for convenience of management, the company stores basic

employee information in the Employee table. Simultaneously, the

nance department establishes a Salary table for eective salary

management and security, housing employee IDs and the corre-

sponding salary details. When sending an inquiry such as “What

is the year-end bonus for Tom?”, it is required to perform a table

join operation, joining the Employee and Salary tables using the

employee ID as the join key. As depicted in Figure 1, in this instance,

the Employee table and the Salary table individually (➀ or ➁) can-

not answer the user’s inquiry. An operation of join (

➂

) between the

two tables is required. In addition to executing a table join, the task

may require complex analyses, such as set operations. It may even

be necessary to perform multiple complex operations in a specic

order (or steps) on multiple tables. MultiTabQA [

27

] is a method

that is able to address TQA tasks in multiple tables. Nevertheless,

it requires elaborate pre-training and ne-tuning processes, pos-

ing signicant complexity for the PingCAP. The task of multiple

tables TQA has not yet received exhaustive research attention, and

it continues to exhibit the following two limitations.
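The Figure 1 scenario can be reproduced in a few lines. The following is a minimal sketch, assuming SQLite as a stand-in query engine and storing the bonuses as whole-dollar integers; the names `build_db` and `year_end_bonus` are illustrative and not part of AutoTQA. It shows why the question is a multi-table one: the answer only exists after joining Employee and Salary on `ID`.

```python
import sqlite3
from typing import Optional

# Rows transcribed from Figure 1; bonuses are stored as whole dollars.
EMPLOYEE_ROWS = [("001", "Lucy", "lucy@com", 1), ("002", "Tom", "tom@com", 2)]
SALARY_ROWS = [("001", 2100), ("002", 1569)]


def build_db() -> sqlite3.Connection:
    """Load the Employee and Salary tables from Figure 1 into in-memory SQLite."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE Employee (ID TEXT PRIMARY KEY, Name TEXT, Email TEXT, Depno INTEGER)"
    )
    conn.execute("CREATE TABLE Salary (ID TEXT PRIMARY KEY, Bonus INTEGER)")
    conn.executemany("INSERT INTO Employee VALUES (?, ?, ?, ?)", EMPLOYEE_ROWS)
    conn.executemany("INSERT INTO Salary VALUES (?, ?)", SALARY_ROWS)
    return conn


def year_end_bonus(conn: sqlite3.Connection, name: str) -> Optional[int]:
    """Answer 'What is the year-end bonus for <name>?' by joining on employee ID.

    Employee alone has no Bonus column and Salary alone has no Name column,
    so neither single-table query can produce the answer.
    """
    row = conn.execute(
        "SELECT s.Bonus FROM Employee AS e "
        "JOIN Salary AS s ON e.ID = s.ID "
        "WHERE e.Name = ?",
        (name,),
    ).fetchone()
    return row[0] if row is not None else None


if __name__ == "__main__":
    print(year_end_bonus(build_db(), "Tom"))  # 1569
```

A name with no matching employee yields `None` rather than an error, which is exactly the kind of empty intermediate result discussed next.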

(2) Lack of adequate exploration of LLM capabilities. The state-of-the-art ReAcTable [54] combines the Chain of Thought (CoT) [45] and ReAct [51] paradigms to answer users' questions. However, relying solely on these two in-context learning approaches does not fully exploit the capabilities of LLMs. For example, when ReAcTable encounters an empty answer at an intermediate step, it has difficulty distinguishing an incorrect response from a genuinely empty one. Through extensive experiments, we have identified numerous factors that contribute to empty answers. Furthermore, encoding the table data directly into the ReAcTable prompt [54] is impractical, especially for the large tables found in business operations. Another crucial capability of LLMs is their ability to learn from conversations: as a form of generative artificial intelligence, LLMs can refine their outputs through dialogue, yet existing LLM-based TQA methods do not fully exploit this potential. Providing appropriate guidance to LLMs is likely to yield more accurate responses and improve overall efficiency.
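One way to make an empty intermediate result less ambiguous is to probe it before accepting it. The sketch below is a hypothetical heuristic, not ReAcTable's or AutoTQA's method: it separates a failed query from an empty one, and flags an empty result as suspect when a case- and whitespace-normalized version of the same filter does match rows, which suggests a wrong predicate (for example, `tom ` instead of `Tom`) rather than a genuinely empty answer. SQLite, the `Outcome` labels, and the `diagnose` and `demo_conn` helpers are all assumptions for illustration.

```python
import sqlite3
from enum import Enum


class Outcome(Enum):
    ERROR = "error"          # the query failed to execute at all
    NON_EMPTY = "non_empty"  # the query returned rows
    SUSPECT = "suspect"      # empty, but a near-match exists: the filter is probably wrong
    EMPTY = "empty"          # empty, and no near-match was found


def diagnose(conn: sqlite3.Connection, table: str, column: str, value: str) -> Outcome:
    """Classify the result of `SELECT * FROM <table> WHERE <column> = value`.

    `table` and `column` are interpolated into SQL, so they must come from a
    trusted schema; `value` is passed as a bound parameter.
    """
    try:
        rows = conn.execute(
            f"SELECT * FROM {table} WHERE {column} = ?", (value,)
        ).fetchall()
    except sqlite3.Error:
        return Outcome.ERROR
    if rows:
        return Outcome.NON_EMPTY
    # Heuristic probe: retry with case and surrounding whitespace normalized.
    (near_matches,) = conn.execute(
        f"SELECT COUNT(*) FROM {table} WHERE LOWER(TRIM({column})) = LOWER(TRIM(?))",
        (value,),
    ).fetchone()
    return Outcome.SUSPECT if near_matches > 0 else Outcome.EMPTY


def demo_conn() -> sqlite3.Connection:
    """Employee rows from Figure 1 (ID and Name columns only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Employee (ID TEXT, Name TEXT)")
    conn.executemany("INSERT INTO Employee VALUES (?, ?)", [("001", "Lucy"), ("002", "Tom")])
    return conn


if __name__ == "__main__":
    conn = demo_conn()
    for name in ["Tom", "tom ", "Alice"]:
        print(repr(name), diagnose(conn, "Employee", "Name", name).value)
```

This probes only one possible cause of empty answers; the other factors mentioned above would need their own checks.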

(3) Lack of solutions for manipulating multiple tables from multiple systems. State-of-the-art TQA approaches [7, 12, 16, 20, 24, 54, 59] struggle to handle structured database tables and semi-structured tables (such as web tables) simultaneously. These tables may be stored in MySQL or S3 object storage, or obtained in real time through APIs. Existing approaches require users to perform intricate preprocessing and an extract, transform, and load (ETL) process, which significantly increases the complexity of the TQA task, and large-scale ETL processes executed in batches typically consume significant resources. Users of TQA should be freed from these complexities.
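Querying data where it lives, instead of copying it through an ETL pipeline first, can be illustrated locally with SQLite's `ATTACH DATABASE`, which lets one connection join tables held in separate databases. This is only an analogy under that assumption: the two in-memory databases stand in for separately managed systems, and `open_federated` and `bonus_for` are hypothetical helpers. AutoTQA itself relies on Trino-based connectors, where a federated query addresses each table as `catalog.schema.table`.

```python
import sqlite3
from typing import Optional


def open_federated() -> sqlite3.Connection:
    """Create two independent databases and expose them through one connection.

    'main' plays the role of an HR system that owns Employee; 'finance' plays the
    role of a separately managed store that owns Salary. No rows are copied between them.
    """
    conn = sqlite3.connect(":memory:")
    # Attach first, before any INSERT opens an implicit transaction.
    # Each ':memory:' attachment is a brand-new, separate database.
    conn.execute("ATTACH DATABASE ':memory:' AS finance")

    conn.execute("CREATE TABLE main.Employee (ID TEXT PRIMARY KEY, Name TEXT)")
    conn.execute("CREATE TABLE finance.Salary (ID TEXT PRIMARY KEY, Bonus INTEGER)")
    conn.executemany(
        "INSERT INTO main.Employee VALUES (?, ?)", [("001", "Lucy"), ("002", "Tom")]
    )
    conn.executemany(
        "INSERT INTO finance.Salary VALUES (?, ?)", [("001", 2100), ("002", 1569)]
    )
    return conn


def bonus_for(conn: sqlite3.Connection, name: str) -> Optional[int]:
    """A single query that joins tables living in two different databases."""
    row = conn.execute(
        "SELECT s.Bonus FROM main.Employee AS e "
        "JOIN finance.Salary AS s ON e.ID = s.ID WHERE e.Name = ?",
        (name,),
    ).fetchone()
    return row[0] if row is not None else None


if __name__ == "__main__":
    print(bonus_for(open_federated(), "Tom"))  # 1569
```

The Salary table never appears in `main`; the join is resolved at query time across both databases.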

Key Technical Challenges. Based on the limitations above, we summarize the challenges in designing TQA approaches for these different scenarios:

• The first technical challenge is how to design and implement a unified TQA framework capable of addressing both single-table and multi-table TQA tasks.

• The second technical challenge is how to make the most of the LLM's capabilities to provide answers while concurrently verifying the correctness of those answers.

• The third technical challenge is how to efficiently manage various tables from multiple systems.

Proposed Approach. To this end, this paper proposes AutoTQA, an Autonomous Tabular Question Answering framework employing multi-agent large language models. To overcome the first two challenges, AutoTQA is designed to efficiently support both single-table and multi-table TQA tasks. AutoTQA builds on multi-agent LLMs with five distinct roles: User, Planner, Engineer, Executor, and Critic. These agents collaborate through conversations to respond to user inquiries. The User formulates the question in natural language; the Planner generates a detailed execution plan that incorporates the standard operating procedures (SOPs) of business operations; the Engineer executes the plan step by step; and the Executor invokes suitable LLM-based applications for data processing using Python or SQL. Throughout the task, the Critic intervenes to evaluate task completion and to identify gaps between the plan and the original task. Upon detecting gaps, the Critic instructs the Planner to revise the plan. To schedule the agents precisely as they collaborate on tasks, we also propose agent scheduling algorithms. To address the third challenge, AutoTQA integrates a series of data connectors built on Trino [37, 40] that streamline access to data from multiple systems within a single query.
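The interaction among the agents can be sketched as a plan, execute, and critique loop. The code below is a simplified, hypothetical illustration and not the paper's agent scheduling algorithms: each agent is a plain function (real agents would wrap LLM calls), the User is reduced to the inquiry string, and the toy Planner, Executor, and Critic return canned answers based on Figure 1. The key behavior is that the Critic's gap description is fed back to the Planner, which revises the plan for the next round.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Verdict:
    done: bool     # has the inquiry been answered?
    gap: str = ""  # what is still missing, fed back to the Planner


# Hypothetical agent interfaces; in a real system each would wrap an LLM call.
PlannerFn = Callable[[str, str], List[str]]     # (inquiry, critic feedback) -> plan steps
ExecutorFn = Callable[[str], str]               # step description -> result
CriticFn = Callable[[str, List[str]], Verdict]  # (inquiry, results) -> verdict


def engineer(plan: List[str], executor: ExecutorFn) -> List[str]:
    """Walk the plan step by step, delegating every step to the Executor."""
    return [executor(step) for step in plan]


def answer(inquiry: str, planner: PlannerFn, executor: ExecutorFn,
           critic: CriticFn, max_rounds: int = 3) -> Tuple[bool, List[str], int]:
    """Plan, execute, and critique; re-plan with the Critic's gap until accepted."""
    feedback = ""
    results: List[str] = []
    for round_no in range(1, max_rounds + 1):
        plan = planner(inquiry, feedback)
        results = engineer(plan, executor)
        verdict = critic(inquiry, results)
        if verdict.done:
            return True, results, round_no
        feedback = verdict.gap  # the Critic tells the Planner what to revise
    return False, results, max_rounds


# Toy stand-ins for the LLM-backed agents, using the Figure 1 example.
def toy_planner(inquiry: str, feedback: str) -> List[str]:
    plan = ["find Tom's ID in Employee"]
    if feedback:  # revise the plan once the Critic reports a gap
        plan.append("join Employee and Salary on ID and read Bonus")
    return plan


def toy_executor(step: str) -> str:
    canned = {
        "find Tom's ID in Employee": "ID=002",
        "join Employee and Salary on ID and read Bonus": "Bonus=$1569",
    }
    return canned.get(step, "unsupported step")


def toy_critic(inquiry: str, results: List[str]) -> Verdict:
    if any(r.startswith("Bonus=") for r in results):
        return Verdict(done=True)
    return Verdict(done=False, gap="bonus not found; Salary was never consulted")


if __name__ == "__main__":
    print(answer("What is the year-end bonus for Tom?", toy_planner, toy_executor, toy_critic))
    # (True, ['ID=002', 'Bonus=$1569'], 2)
```

Bounding the loop with `max_rounds` (an assumed parameter) keeps a Critic that is never satisfied from looping forever; the returned flag tells the caller whether the Critic accepted the result.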

Note that when the Engineer is assigned a specific sub-task, it does not execute the task directly, for example by writing Python or SQL code. The Engineer only needs to describe the task and delegate it to the Executor. The Executor initializes the LLM-based execution environment that matches the requirements of each task. By decoupling the Engineer from the specific execution process, AutoTQA makes it easier to locate problems and to improve accuracy within the LLM-based execution environments.
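The decoupling described above resembles a registry of interchangeable execution environments. In the hypothetical sketch below, the Engineer only produces a `Task` holding an environment name and a natural-language description, and the `Executor` routes it to whichever handler is registered under that name. The environment name `text-to-sql` follows the abstract's example, but the `Task` and `Executor` classes and the `fake_text_to_sql` stub (standing in for an LLM-backed application) are assumptions, not AutoTQA's interfaces. Tagging each failure with the environment's name is one simple way to make problems easy to localize.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Task:
    environment: str  # which execution environment should handle the task
    description: str  # natural-language task description written by the Engineer


class Executor:
    """Routes Engineer-written task descriptions to pluggable execution environments."""

    def __init__(self) -> None:
        self._environments: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, handler: Callable[[str], str]) -> None:
        self._environments[name] = handler

    def run(self, task: Task) -> str:
        handler = self._environments.get(task.environment)
        if handler is None:
            raise KeyError(f"no execution environment named {task.environment!r}")
        try:
            return handler(task.description)
        except Exception as exc:
            # Prefix failures with the environment name so problems are easy to localize.
            raise RuntimeError(f"[{task.environment}] {exc}") from exc


def fake_text_to_sql(description: str) -> str:
    """Hypothetical stand-in for an LLM-backed text-to-SQL application."""
    if "bonus" in description.lower():
        return ("SELECT s.Bonus FROM Employee e JOIN Salary s ON e.ID = s.ID "
                "WHERE e.Name = 'Tom'")
    raise ValueError("could not translate the description into SQL")


executor = Executor()
executor.register("text-to-sql", fake_text_to_sql)

if __name__ == "__main__":
    print(executor.run(Task("text-to-sql", "Get Tom's year-end bonus")))
```

Adding a new environment (for example, a Python sandbox) would only require another `register` call; the Engineer's task descriptions stay unchanged.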

Building and debugging LLM-based applications rapidly and efficiently is itself challenging. To address this challenge, we developed and implemented LinguFlow, an open-source, low-code visual programming tool for developing and deploying LLM-based applications. As a low-code programming tool, LinguFlow helps application developers rapidly construct, debug, and deploy LLM-based applications. Developers need only
