HIndex-FLSM_Fragmented_Log-Structured_Merge_Trees_Integrated_with_Heat_and_IndexHIndex-FLSM:分段日志结构合并.pdf

免费下载

HIndex-FLSM: Fragmented Log-Structured Merge

Trees Integrated with Heat and Index

1

st

Xiaopeng Wang

Shenzhen Graduate School

Peking University

Shenzhen, China

wangxp@stu.pku.edu.cn

4

th

Xiyu Wang

ZTE Corporation

Shenzhen, China

wang.xiyu@zte.com.cn

2

nd

Hui Li*

Shenzhen Graduate School

Peking University

Shenzhen, China

lih64@pkusz.edu.cn

5

th

Ping Lu

ZTE Corporation

Shenzhen, China

lu.ping@zte.com.cn

3

rd

Hong Tan

Shenzhen Graduate School

Peking University

Shenzhen, China

hongtan@stu.pku.edu.cn

6

th

Han Wang

Shenzhen Graduate School

Peking University

Shenzhen, China

wanghan2017@pku.edu.cn

Abstract—Log-Structured Merge Tree (LSM-tree) is

commonly used for building high-performance persistent key-

value stores. However, they are known to suffer from severe I/O

amplification. To mitigate this issue, structures like Fragmented

Log-Structured Merge Trees (FLSM-tree) have been developed,

which leverage tiering merge policies to reduce write

amplification. Nevertheless, FLSM-tree fails to consider data

access frequency, access times, and other heat-related

information. Additionally, the Sorted String Table(SSTable) in

each level may not necessarily be ordered, leading to diminished

read and range query performance. To address these issues, we

have proposed a high-performance key-value storage structure,

tailored specifically for read/write-intensive workloads, called

HIndex-FLSM. This structure employs a heat calculation

mechanism for cold and hot data segregation and enhances read

performance by indexing Guard. The compaction process has

also been optimized by combining heat and ordered indexing.

To evaluate the performance of HIndex-FLSM, we have

implemented the prototype system of HIndex-FLSM, called

HIndex-PebblesDB, based on the prototype system PebblesDB

of FLSM-tree. Through benchmark testing of HIndex-

PebblesDB, we observed an approximately 20% improvement

in hot spot read and 57% in range query compared to

PebblesDB.

Keywords—key-value database,log-structured merge tree,

index, tiering merge policy

I. INTRODUCTION

According to the International Data Corporation (IDC)

report, China's data volume is expected to reach a staggering

30.02ZB in 2023, and an estimated 76.6ZB by 2027, owing to

the rise of cloud computing and the artificial intelligence

industry. Due to the significant portion of unstructured data in

the current data landscape, traditional relational databases

exhibit inflexibility in handling such data. As a result, various

non-relational storage systems have emerged, with key-value

storage systems[3,4] simplifying the data processing model by

employing key-value pairs for data access. This simplistic data

processing model provides high system scalability, facilitating

the construction of distributed storage systems to meet the

demands of handling large data volumes.

Within data-intensive systems, LSM-tree[1] is frequently

used as the core indexing structure[5,6] for persistent key-

value databases and is utilized in popular storage engines such

as LevelDB[7], RocksDB[8], HBase[9], and Cassandra[10].

The LSM-tree leverages the deferred persistence of write

requests in memory, which are then batch-processed into

sequential writes on persistent storage. This takes advantage

of the random read-write speeds in memory and the high

sequential write bandwidth in persistent storage. Therefore,

the LSM-tree is an effective solution for persistent key-value

storage in data-intensive systems.

LSM-tree continues to bear persistent issues that arise

from the differences in characteristics and speed between

persistent storage and memory, as well as inherent design

trade-offs. The most prominent of these issues is write

amplification. Write amplification refers to the volume of data

written exceeding the data intended for application writing. It

results in reduced lifespan of Solid State Drive (SSD), a

popular storage medium, and subsequent increase in

application cost overhead.

It's fascinating to see the recent developments in

partitioned tiering merge policy[11] that aim to improve write

performance. The Lightweight Compaction Tree (LWC-tree)

[12] adopts a similar partitioned tiering design and vertical

grouping. It further introduces a method to achieve workload

balancing for SSTable groups. PebblesDB proposes a data

layout structure based on a log-structured merge tree, called

FLSM-tree[2]. The FLSM-tree avoids SSTable rewriting

during compaction, significantly reducing write amplification.

dCompaction [13] introduces the concepts of virtual SSTables

and virtual merges to reduce the frequency of merges.

However, the lack of order among SSTable files within

each Guard adversely affects the system's read performance.

Therefore, using FLSM-tree to build a system necessitates

additional read optimization measures to minimize its impact

on system read performance.To mitigate the impact of FLSM-

tree on system read performance, we propose a high-

performance key-value storage structure suitable for read-

write intensive workloads, called HIndex-FLSM. HIndex-

FLSM is built upon the FLSM-tree structure and employs a

heat calculation mechanism for data segregation. It enhances

read performance by establishing indexes for Guards and

further optimizes the overall system performance by

integrating ordered indexing into the FLSM-tree's compaction

process. We have implemented a prototype of HIndex-FLSM,

named HIndex-PebblesDB, on PebblesDB. Test results

indicate a significant improvement in read performance under

read-intensive workloads when compared to PebblesDB.

The main structure of our paper is as follows: In Section

II, we introduced LSM-tree and FLSM-tree. Following that,

in Section III, we described the design and implementation of

71

2024 3rd International Joint Conference on Information and Communication Engineering (JCICE)

979-8-3503-8872-5/24/$31.00 ©2024 IEEE

DOI 10.1109/JCICE61382.2024.00024

2024 3rd International Joint Conference on Information and Communication Engineering (JCICE) | 979-8-3503-8872-5/24/$31.00 ©2024 IEEE | DOI: 10.1109/JCICE61382.2024.00024

Authorized licensed use limited to: ZTE CORPORATION. Downloaded on November 26,2024 at 04:23:11 UTC from IEEE Xplore. Restrictions apply.

our proposed HIndex-FLSM structure. In Section IV, we

experimentally validated the performance of the prototype

implementation HIndex-PebblesDB for HIndex-FLSM.

II. RELATED WORK

As data accumulates, the query performance of the LSM-

tree can decline due to the growing number of components in

the disk. To address this issue, the components in the disk

gradually merge to reduce the overall count. Two primary

merging strategies are typically used in practice, namely the

horizontal merge policy and the tiering merge policy. Most

LSM-tree implementations use a well-known optimization

technique called Partitioning. Partitioning involves dividing

the disk components of the LSM-tree into multiple small

partitions, usually fixed in size. Both leveling merge and

tiering merge strategies can be employed to support

partitioning. However, the use of the horizontal partitioning

merge strategy in LSM-tree structures leads to severe write

amplification. This issue is illustrated using LevelDB as an

example of the horizontal merge strategy, while the FLSM-

tree demonstrates how the tiered partition merge strategy

reduces write amplification.

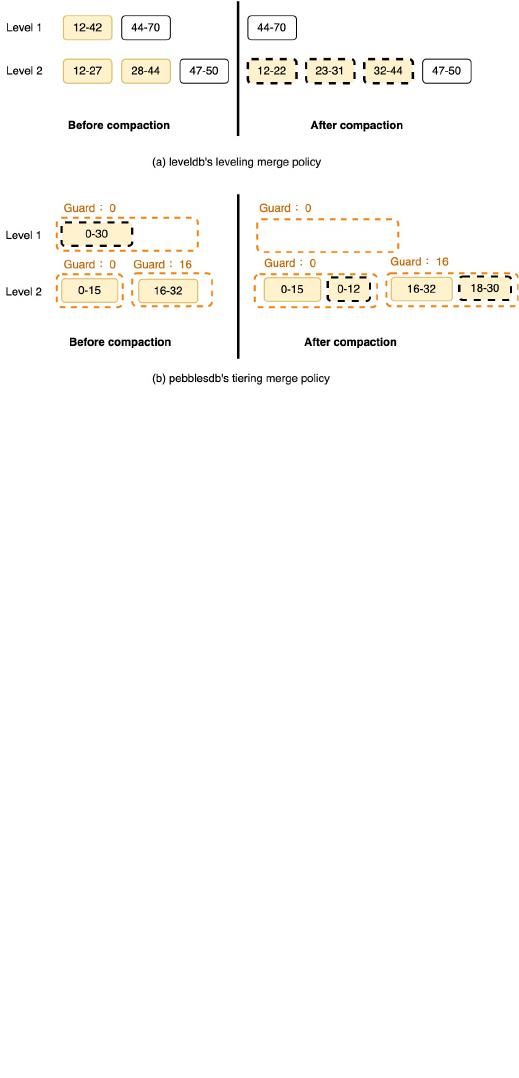

In the partitioning leveling merge policy pioneered by

LevelDB, each level's disk components are divided into

multiple fixed-sized SSTables, and each SSTable has key

range markings. To merge an SSTable from Level L into

Level L+1, all overlapping SSTables at Level L+1 are selected

and merged with the corresponding SSTable from Level L,

generating new SSTables that remain in Level L+1. As shown

in Figure 1a, the SSTable marked as 12-42 at Level 1 is

merged with the SSTables marked as 12-27 and 28-44 at Level

2, resulting in new SSTables marked as 12-22, 23-31, and 32-

44 at Level 2. In this horizontal partitioning merge strategy,

merging from lower to upper levels may impact multiple

SSTables in the upper level, necessitating read and write

operations for all these SSTables, leading to significant write

amplification.

The FLSM-tree is an improvement over LSM-tree and

effectively reduces the write amplification effect. It exhibits

low write amplification, high write throughput, and excellent

read performance under write-intensive workloads compared

to the original LSM-tree structure.

Fig. 1. The compaction process of LevelDB and PebblesDB.

The FLSM-tree is a combination of LSM-tree and skip-list

structures[14], with each level being divided into multiple

Guards. SSTables are enclosed within Guards at different

levels, and Guards within the same level are ordered by

attribute values in an ascending sequence, with no intersection

of key values between different Guards. Interestingly, queries

in an FLSM-tree are directed to a Guard through binary search,

unlike LSM-tree, which locates an SSTable within each level.

The compression process of SSTables in the FLSM-tree

is different from LSM-tree, as SSTables are compressed on a

per-Guard basis. As shown in Figure 1b, when the number of

SSTables within a Guard reaches a certain threshold, the

system triggers data compression on that Guard. All SSTables

within the Guard are merged, and the new SSTable created

directly enters the corresponding Guard in the next level. This

optimization ensures that data in the lower-level table files are

no longer rewritten, and data within the same level is rewritten

only once during the merge, avoiding multiple rewrites caused

by higher-level table file compression. This optimization

significantly reduces write amplification in FLSM-tree under

typical workloads compared to LSM-tree.

Upon studying the FLSM-tree structure, it was noted that

although it effectively reduces write amplification and

exhibits high write throughput, it suffers from relatively poor

read performance. Additionally, considerations related to data

access frequency and access time were overlooked during read

and write processes and the compaction process. Based on this,

t this paper proposes a series of optimization strategies:

• Introduce a heat calculation method[15] based on the

FLSM-tree structure. By incorporating heat values,

partitioning Guards in the FLSM-tree based on heat

levels can enhance read performance without

compromising the high write throughput and low write

amplification of the FLSM-tree.

• Add ordered indexes[16,17] to Guards with higher

heat levels. This mechanism offers benefits such as

significantly improving single-point query

72

Authorized licensed use limited to: ZTE CORPORATION. Downloaded on November 26,2024 at 04:23:11 UTC from IEEE Xplore. Restrictions apply.

of 6

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

文档被以下合辑收录

评论