【李国良论文】Vector Database Management Techniques and Systems.pdf

免费下载

Vector Database Management Techniques and Systems

James Jie Pan

jamesjpan@tsinghua.edu.cn

Tsinghua University

Beijing, China

Jianguo Wang

csjgwang@purdue.edu

Purdue University

West Lafayette, Indiana, USA

Guoliang Li

liguoliang@tsinghua.edu.cn

Tsinghua University

Beijing, China

ABSTRACT

Feature vectors are now mission-critical for many applications, in-

cluding retrieval-based large language models (LLMs). Traditional

database management systems are not equipped to deal with the

unique characteristics of feature vectors, such as the vague notion

of semantic similarity, large size of vectors, expensive similarity

comparisons, lack of indexable structure, and diculty of answering

“hybrid” queries that combine structured attributes with feature vec-

tors. A number of vector database management systems (VDBMSs)

have been developed to address these challenges, combining novel

techniques for query processing, storage and indexing, and query

optimization and execution and culminating in a spectrum of perfor-

mance and accuracy characteristics and capabilities. In this tutorial,

we review the existing vector database management techniques and

systems. For query processing, we review similarity score design

and selection, vector query types, and vector query interfaces. For

storage and indexing, we review various indexes and discuss com-

pression as well as disk-resident indexes. For query optimization

and execution, we review hybrid query processing, hardware ac-

celeration, and distibuted search. We then review existing systems,

search engines and libraries, and benchmarks. Finally, we present

research challenges and open problems.

CCS CONCEPTS

• Information systems → Data management systems.

KEYWORDS

Vector Database, Vector Similarity Search, Dense Retrieval, 𝑘-NN

ACM Reference Format:

James Jie Pan, Jianguo Wang, and Guoliang Li. 2024. Vector Database

Management Techniques and Systems. In Companion of the 2024 Inter-

national Conference on Management of Data (SIGMOD-Companion ’24),

June 9–15, 2024, Santiago, AA, Chile. ACM, New York, NY, USA, 8 pages.

https://doi.org/10.1145/3626246.3654691

1 INTRODUCTION

High-dimensional feature vectors are now used in a variety of

dense retrieval search applications, including retrieval-based large

language models (LLMs) [

28

,

31

,

51

], e-commerce [

54

], recommen-

dation [

82

], document retrieval [

76

], and so on [

45

,

51

,

79

,

84

]. These

applications may involve billions of vectors and require millisecond

This work is licensed under a Creative Commons Attribution

International 4.0 License.

SIGMOD-Companion ’24, June 9–15, 2024, Santiago, AA, Chile

© 2024 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-0422-2/24/06.

https://doi.org/10.1145/3626246.3654691

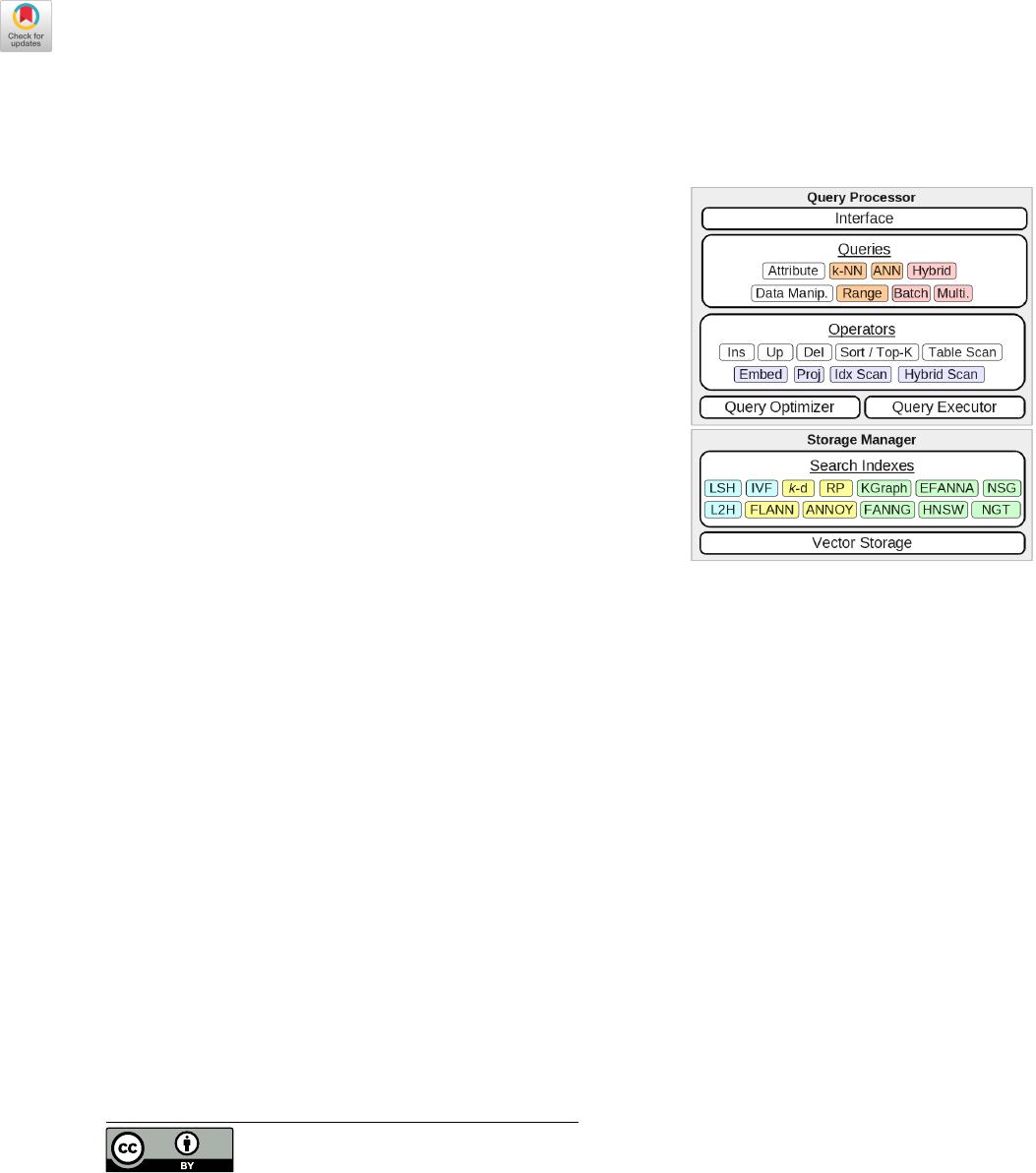

Figure 1: Overview of a VDBMS (Vector Database Manage-

ment System).

query latencies, all while needing to scale to increasing workloads

without sacricing performance or response quality.

But existing traditional database management systems, includ-

ing NoSQL and relational databases, are not designed for these

datasets and workloads. First, vector queries rely on the concept of

similarity which can be vague for dierent applications, requiring

a dierent query specication. Second, similarity computation is

more expensive than other types of comparisons seen in relational

predicates, requiring ecient techniques. Third, processing a vector

query often requires retrieving full vectors from the collection. But

each vector may be large, possibly spanning multiple disk pages,

and the cost of retrieval is more expensive compared to simple

attributes while also straining memory. Fourth, vector collections

lack obvious properties that can be used for indexing, such as being

sortable or ordinal, preventing the use of traditional techniques. Fi-

nally, “hybrid” queries require accessing both attributes and vectors

together, but it remains unclear how to do this eciently.

These challenges have led to the rise of vector database man-

agement systems (VDBMSs) specially adapted for these applica-

tions, and there are now over 20 commercial VDBMSs developed

within the past ve years. A typical VDBMS is composed of a query

processor and a storage manager (Figure 1). For the query proces-

sor, VDBMSs introduce new approaches to query interfaces, query

types such as

𝑘

-nearest-neighbor search, hybrid, and multi-vector

queries, and data operators such as similarity projection and hybrid

index scan. New techniques have also been proposed for query

597

SIGMOD-Companion ’24, June 9–15, 2024, Santiago, AA, Chile James Jie Pan, Jianguo Wang, and Guoliang Li

optimization and execution, including plan enumeration and se-

lection for hybrid query plans and hardware accelerated search.

For the storage manager, several techniques for large-scale vector

indexing and vector storage are now available, including hashing

and quantization-based approaches like

PQ

[

1

] and IVFADC [

49

]

that tend to be easy to update; tree-based indexes like FLANN [

62

]

and ANNOY [

2

] that tend to support logarithmic search complex-

ity; and graph-based indexes like HNSW [

58

] and others that are

ecient in practice but with less theoretical understanding. To-

gether, these techniques culminate into a variety of native systems,

extended systems, and search engines and libraries over a spectrum

of performance and accuracy characteristics and capabilities.

In this tutorial, we review techniques and systems for vector

data management along with benchmarks, followed by remaining

challenges and open problems.

Tutorial Overview. This tutorial contains three parts and the

intended length is 1.5 hours. The rst part discusses specic tech-

niques and will last 50 minutes.

(1) Query Processing (10 min.). Query processing begins with a search

specication that denes the search parameters. These include

considerations for similarity score design, score selection and the

curse of dimensionality [

22

,

30

,

61

], the query type such as basic,

multi-vector, and hybrid queries [79, 84], and the query interface.

(2) Storage and Indexing (30 min.). Vector indexes tend to rely on

novel partitioning techniques such as randomization, learned par-

titioning, and navigable partitioning. The performance, accuracy,

and storage characteristics of an index depend on the techniques

used and the index structure. We classify indexes into table, tree, or

graph-based, and then describe the techniques for each index type.

To deal with large size, we also discuss vector compression using

quantization [49, 59] and disk-resident indexes [32, 74].

(3) Optimization and Execution (10 min.). For processing hybrid

queries, several plan enumeration and plan selection techniques

have been proposed, including rule-based [

3

,

4

] and cost-based

selection [

79

,

84

], and which also introduce new hybrid operators

including block-rst scan [

43

,

79

,

84

,

87

] and visit-rst scan [

43

,

87

]. Several techniques also speed up vector search via hardware

acceleration using SIMD [

26

,

27

], GPUs [

50

], and distributed search.

To address slow index updates, some VDBMSs also rely on out-of-

place updates.

The second part discusses vector database management systems

and will last 30 minutes.

(1) Native Systems (10 min). Native systems such as Pinecone [

5

],

Miluvs [

6

,

79

], and Manu [

45

] aim at high performance vector

search applications by oering a narrower range of capabilities.

(2) Extended Systems (10 min). For applications that require more so-

phisticated capabilities, several extended systems such as AnalyticDB-

V [

84

], PASE [

90

],

pgvector

[

7

], and Vespa [

4

] have been developed

based on NoSQL or relational systems.

(3) Search Engines and Libraries (5 min). Several search engines such

as Apache Lucene [

8

] and Elasticsearch [

9

] now also incorporate

vector search capability via integrated vector indexes. Several li-

braries are also available such as Meta Faiss [

1

] that provide vector

search functionality.

(4) Benchmarks (5 min). We describe two notable benchmarks that

evaluate a wide variety of search algorithms and systems over a

range of workloads [29, 91].

The nal part discusses challenges and open problems (10 min.).

We describe several fundamental challenges, including how to per-

form similarity score selection, design more ecient hybrid oper-

ators and indexes, and estimate the cost of hybrid plans. We also

describe future applications, including index-supported incremental

search, multi-vector search, and enhancing security and privacy.

Target Audience. This tutorial is intended for database researchers

interested in understanding and advancing the state-of-art tech-

niques for large-scale vector database management and modern

applications beyond similarity search. This tutorial may also ben-

et industry practitioners interested in learning about the latest

commercial systems. There are no prerequisites beyond a basic

understanding of database concepts.

Related Tutorials. A recent tutorial [

28

] discusses how vector

search can be used for retrieval-based LLMs. There are also separate

tutorials on similarity search techniques [37, 67, 68].

Our tutorial aims to complement these tutorials by focusing

on vector database management systems as a whole, and most of

this tutorial has not been covered elsewhere. Specically, most of

the overlap is conned to Section 2.2, and the extent is not large.

In [

37

], a broad taxonomy of search techniques is given, along

with representative examples. Similarly in [

67

,

68

], an overview of

various exact and approximate search techniques is given. Some

of the material in Section 2.2, mainly locality-sensitive hashing,

learning-to-hash, ANNOY, and HNSW, overlaps with these past

tutorials. But Section 2.2 also discusses key indexing trends in

VDBMSs that have not been discussed in past tutorials, including

disk-based indexes, quantization-based compression approaches for

handling large vector collections, and the diversity of graph-based

indexes. Aside from this section, all other sections in this tutorial

have not been covered elsewhere as they pertain to the VDBMS

as a whole, including query processing, hybrid operators, plan

enumeration, and plan selection, and a survey of existing VDBMSs.

2 TUTORIAL

2.1 Query Processing

Similarity Scores. Dense retrieval works based on similarity. A

similarity score can be used to quantify the degree of similarity be-

tween two feature vectors. While many scores have been proposed,

dierent scores may lead to dierent query results, and so how to

perform score selection is an important problem for VDBMSs, in

addition to score design.

(1) Score Design. A similarity score is designed to accurately capture

similarity relationships between feature vectors. Existing similarity

scores can be classied as basic scores, aggregate scores, and learned

scores. Basic scores are derived directly from the vector space and

include Hamming distance, inner product, cosine angle, Minkowski

distance, and Mahalanobis distance. For certain workloads involv-

ing multiple query or feature vectors per entity, aggregate scores

such as mean, weighted sum, and others [

79

] combine multiple

scores into a single scalar score that can be more easily compared.

598

of 8

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论