【卡内基梅隆 北大】Demystifying Data Management for Large Language Models.pdf

免费下载

Demystifying Data Management for Large Language Models

Xupeng Miao

Carnegie Mellon University

Pittsburgh, PA, USA

xupeng@cmu.edu

Zhihao Jia

Carnegie Mellon University

Pittsburgh, PA, USA

zhihao@cmu.edu

Bin Cui

Peking University

Beijing, China

bin.cui@pku.edu.cn

ABSTRACT

Navigating the intricacies of data management in the era of Large

Language Models (LLMs) presents both challenges and opportuni-

ties for database and data management communities. In this tutorial,

we oer a comprehensive exploration into the vital role of data

management across the development and deployment phases of

advanced LLMs. We provide an in-depth survey of existing tech-

niques of managing knowledge and parameter data during the

whole LLM lifecycle, emphasizing the balance between eciency

and eectiveness. This tutorial stands to oer participants valuable

insights into the best practices and contemporary challenges in

data management for LLMs, equipping them with the knowledge

to navigate and contribute to this rapidly evolving eld.

CCS CONCEPTS

• Information systems

→

Data management systems; Infor-

mation systems applications; • Computing methodologies

→

Machine learning; Articial intelligence; Distributed com-

puting methodologies.

KEYWORDS

Large Language Model, Pre-training, Fine-tuning, Inference, Data

Management, Database, Distributed Computing, Knowledge Data

ACM Reference Format:

Xupeng Miao, Zhihao Jia, and Bin Cui. 2024. Demystifying Data Man-

agement for Large Language Models. In Companion of the 2024 Interna-

tional Conference on Management of Data (SIGMOD-Companion ’24), June

9–15, 2024, Santiago, AA, Chile. ACM, New York, NY, USA, 9 pages. https:

//doi.org/10.1145/3626246.3654683

1 INTRODUCTION

In the rapidly evolving landscape of articial intelligence, Large

Language Models (LLMs) like GPT-3 [

9

] and GPT-4 [

114

] have

become pivotal in advancing our understanding and capabilities

in data intelligence. These models, thriving on extensive datasets

and sophisticated algorithms, are not just about understanding and

generating human-like data contents. They also represent a signi-

cant stride in data management research [

37

,

82

,

108

,

132

,

145

,

168

]

and provide new research problems for database communities [

5

,

123

,

135

,

138

,

139

]. LLMs have introduced new complexities and

paradigms in processing, analyzing and leveraging data, marking a

This work is licensed under a Creative Commons Attribution

International 4.0 License.

SIGMOD-Companion ’24, June 9–15, 2024, Santiago, AA, Chile

© 2024 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-0422-2/24/06

https://doi.org/10.1145/3626246.3654683

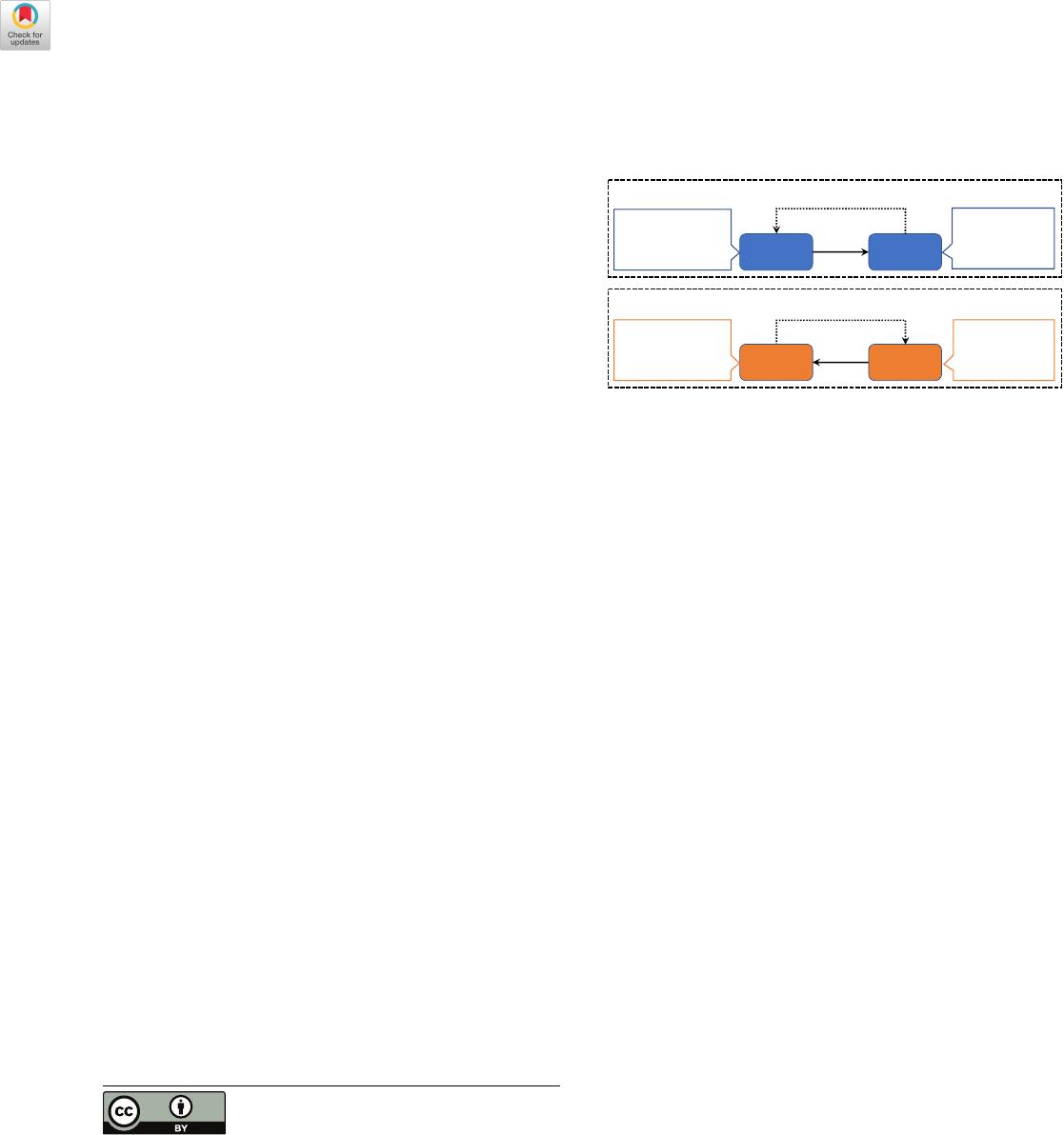

Knowledge

Data (§2.2)

Parameter

Data (§2.3)

Knowledge

Data (§2.4)

Parameter

Data (§2.5)

iterative

training

Iterative

decoding

LLM Development (e.g., Pre-training, Fine-tuning)

LLM Deployment (e.g., Inference)

• Data Collection

• Data Governance

• ...

• Parameter

Placement

• Parameter Sync

• ...

• Parameter

Storage

• ...

• Data Compression

• Data Organization

• ...

25 mins

15 mins

20 mins 10 mins

Figure 1: Tutorial overview

transformative phase in the eld. However, alongside these break-

throughs on LLMs for data management, the development [

101

] and

deployment [

159

] of these models introduce substantial challenges,

particularly in the realm of data management.

As shown in gure 1, this tutorial is designed to delve into the

intricacies of data management in both the development and de-

ployment phases of LLMs. We will explore the dual roles of data

in these phases: as knowledge data (e.g., input data like training

datasets) and as parameter data (model parameters). In the context

of this tutorial, we treat the development of LLMs as a transfor-

mation process where human knowledge is encoded into model

parameters. Conversely, during LLM deployment, these learned

parameters are utilized to produce human-like knowledge outputs

under given user queries like a knowledge database. These two

primary scenarios together make up a holistic LLM lifecycle, but

suer from unique and distinct data management problems.

Our journey will begin with a clear and generalized denition

of ’knowledge data’ as it applies to LLMs. We consider not only the

input data samples for training but also their latent decoding states

for inference. For LLM development, knowledge data serves as the

foundation upon which these advanced LLMs are constructed. The

data richness and diversity determine the breadth and depth of the

model’s capabilities. By examining various formats of knowledge

data, we gain insights into how LLMs assimilate and interpret

information from the world around us.

Following the discussion on knowledge data for LLM develop-

ment, we will also delve into the LLM inference process and explore

the management of the Key-Value (KV) cache for LLM deployment.

This component represents a latent knowledge base, encompassing

the current context and playing a pivotal role in the model’s itera-

tive decoding process. Understanding the KV cache’s functionality

and its management is essential for optimizing LLM deployment,

and ensuring ecient and accurate information retrieval.

Furthermore, another critical aspect of LLMs is parameter data

management. The vast number of model parameters in LLMs (i.e.,

547

SIGMOD-Companion ’24, June 9–15, 2024, Santiago, AA, Chile Xupeng Miao, Zhihao Jia, and Bin Cui

billions of) necessitates ecient management strategies for more

eective execution. This includes optimizing for parallelization and

other techniques that enhance both the development and deploy-

ment stages. Ecient management of parameter data is not only

crucial for the operational functionality of the model but also for

scaling up to handle increasingly complex tasks and larger data

volumes.

1.1 TARGET AUDIENCE AND LENGTH

Target Audience. Our tutorial aims to cater to a diverse audience,

including SIGMOD attendees from both research and industry back-

grounds. We intend to make this session accessible and engaging for

all, irrespective of their prior experience in the elds of databases

or machine learning. The tutorial will be comprehensive and self-

contained, featuring a broad introduction and motivational exam-

ples to ensure that even non-specialists can follow along easily.

Length. The intended length of this tutorial is 1.5 hours. There are

mainly six components in our outline, and they will take 10 mins

for background, 25 mins for §2.2, 15 mins for §2.3, 20 mins for §2.4,

10 mins for §2.5, and 10 mins for §2.6 respectively.

2 TUTORIAL OUTLINE

2.1 Background

2.1.1 LLM Development. The development of LLM is a complex

and multifaceted process, involving a series of sophisticated steps

that transform vast datasets into intelligent, responsive models. We

will start by introducing two basic concepts of: pre-training and ne-

tuning. These stages are fundamental in shaping the capabilities

and applications of these sophisticated models.

Pre-training. The foundation of any LLM is its pre-training

phase. This involves feeding the model a large corpus of data, al-

lowing it to learn and understand patterns, contexts, and nuances

in human language. The pre-training process is not just about data

ingestion but also about the model learning to make predictions

and generate responses that are coherent, contextually relevant,

and as human-like as possible. Key challenges in this stage include

improving data quality for better LLM capacity and managing the

vast model parameters for ecient model training.

Fine-tuning. After the initial pre-training process, LLMs often

undergo a ne-tuning process. This stage is crucial for adapting

the model to specic tasks or domains, such as legal language pro-

cessing, creative writing, or technical documentation. Fine-tuning

involves training the model (or partial model) on a smaller, more

specialized dataset, enabling it to hone its capabilities in particular

areas. The challenge here mainly lies in selecting the right datasets

that eectively represent the target domain without introducing

new biases or overtting the model.

Target

: audience will be familiar with the development of LLMs,

nuances and challenges associated with pre-training and ne-tuning.

2.1.2 LLM Deployment. We will then introduce the model deploy-

ment step, that generates human-like data using the pre-trained or

ne-tuned LLM. based on new input data.

Inference. LLM inference or serving requires the model to

quickly access its learned parameters and apply them to the input

queries, maintaining accuracy and coherence in its outputs. When

serving each input query, LLMs often employ iterative decoding

techniques, which also require to access all previous generated

contents as the context knowledge. KV cache temporarily saves

these contexts for lower inference latency. The challenge lies in

optimizing the data management to ensure fast and accurate re-

sponse, especially when dealing with large-scale data workloads

(e.g., multiple simultaneous requests or long sequences).

Target

: audience will be familiar with the deployment of LLMs,

such as the challenges during LLM inference.

2.2 Knowledge Data Management for LLM

Development

2.2.1 Data Collection. The rst step in knowledge data manage-

ment is the collection of a vast and diverse array of datasets. For

pre-training, this typically involves aggregating textual data from

books, articles, websites, code repositories and more, aimed at pro-

viding the model with a broad understanding of language patterns,

styles, and contexts. The challenge lies in not only gathering vast

amounts of data but also ensuring that it covers a wide spectrum of

topics and linguistic styles [

176

]. Multimodal models demand data

from a multitude of sources beyond text, such as image, audio and

video. Other formats including structured data like tables, metadata,

annotated or labeled data, and domain-specic data [

22

,

131

,

157

]

are also involved for unique model usage. Some empirical studies

have observed scaling laws [

49

,

50

,

68

,

106

,

146

], which describe

the phenomenon that larger data scales correlate with stronger

model performance. Recent LLMs like GPT-3 are typically trained

on hundreds of gigabytes to over a terabyte of text data.

Fine-tuning LLMs requires more specialized data, often gener-

ated with human intervention. Specialized datasets typically range

from a few megabytes to several gigabytes. User-generated data

includes creating specic prompts and corresponding responses or

using human-written instructions [

169

] and human feedback mech-

anisms [

116

] in reinforcement learning (RL) settings. The objective

is to rene the model’s capabilities for specic tasks or domains,

making it more adept at handling particular types of queries or

content. We will revisit the development of GPT-family [

9

] and

LLaMA-family [

137

] as examples to introduce the process of data

collection in practice.

2.2.2 Data Governance. Data governance encompasses a suite of

processes and practices aimed at ensuring the quality, consistency,

and appropriateness of the data used for training. This stage is piv-

otal in shaping the model’s learning path and eventual performance.

It involves a series of meticulous steps:

•

Data Deduplication. This process involves meticulously

identifying and removing duplicate contents within the datasets.

Such data redundancy can skew the model’s learning, lead-

ing to overemphasis on repeated patterns [

1

,

48

,

75

,

130

,

136

].

Eective deduplication ensures a more balanced and diverse

training regimen for the model.

•

Data Cleaning. Data cleaning in the context of LLM de-

velopment extends beyond mere error correction [

55

,

60

,

156

,

173

] and involves addressing critical aspects such as

548

of 9

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论