ICDE 2025_Artemis A Customizable Workload Generation Toolkit for Benchmarking Cardinality Estimation_OceanBase.pdf

免费下载

Artemis: A Customizable Workload Generation

Toolkit for Benchmarking Cardinality Estimation

Zirui Hu

1,2

, Rong Zhang

1,2

*, Chengcheng Yang

1,2

, Xuan Zhou

1,2

, Quanqing Xu

3

, Chuanhui Yang

3

1

School of Data Science and Engineering, East China Normal University,

2

Engineering Research Center of Blockchain Data Management, Ministry of Education,

3

OceanBase, Ant Group

zrhu@stu.ecnu.edu.cn, {rzhang, ccyang, xzhou}@dase.ecnu.edu.cn, {xuquanqing.xqq, rizhao.ych}@oceanbase.com

Abstract—Cardinality Estimation (CardEst) is crucial for

query optimization. Despite the remarkable achievement in

DBMS, there is a pressing need to test or tune the work of

CardEst. To satisfy the need, we introduce ARTEMIS, a customiz-

able workload generator, which can be used to generate various

scenarios with the sensitive features for CardEst, including

various data dependencies, complex SQL structures, and diverse

cardinalities. It designs a PK-oriented deterministic data gener-

ation mechanism to plot various data characteristics; a search-

based workload generation is proposed for composing queries

with various complexities; it takes a constraint optimization-

guided way to achieve a cost-effective cardinality calculation.

In this demonstration, users can explore the core features of

ARTEMIS in generating workloads.

Index Terms—cardinality estimation, benchmarking.

I. INTRODUCTION

Cardinality estimation (CardEst), renowned for the Achilles

Heel of query optimization, is one of the critical tasks in

query optimizer for estimating the intermediate result size of

each operator, and deciding an optimal query plan. However,

the intricate data dependencies and complicated selection/join

operators still make CardEst an unsolved hard issue [1].

Traditionally, DBMSs use histograms to collect data statistics

with low construction and maintenance costs. Due to high

statistics storage cost, the histogram-based method is gen-

erally adopted based on some assumptions, e.g., uniformity

within a histogram bucket and independence of attributes.

To move beyond these assumptions, learning-based methods

are leveraged to sketch the underlying data distribution and

attribute correlations. Learning-based methods are mainly cat-

egorized into data-driven and query-driven. The data-driven

method employs generative models for unsupervised learning

of the proportion of rows in the joint domain represented as

P (D

M

) = P (D

1

, D

2

, ..., D

n

) [2], where D

M

is the joint

domain from the full outer join of all tables, and D

i

is the

sub-domain of the i

th

attribute column. Supposing the size of

table T as |T |, the new query as Q, the joint domain covered

by Q as D

Q

, the cardinality of query Q is P (D

Q

) ∗ |T |.

The query-driven method uses discriminative models trained

on annotated query-cardinality pairs (Q, Card(Q)) to predict

the Card(

¯

Q) for new query

¯

Q. Though learning-based meth-

ods have demonstrated supreme capability on some classical

benchmarks, e.g., TPC-H, JOB-light [3], and STATS-CEB [4],

they can not be easily generalized to obtain high accuracy

across diversified scenarios, which makes it impossible for the

*Rong Zhang is the corresponding author.

practical industry use. However, providing diverse workloads

for CardEst has always been a tough work. The core challenges

come from the requirements of:

C1: Insufficient Data Dependency and Distribution. Both

data-driven and traditional methods fundamentally try to iden-

tify joint domain with strong correlations and plot their joint

distribution, which requires enormous datasets with various

data dependencies and distributions. Traditional OLAP bench-

marks, e.g., TPC-H, are meticulously designed for evaluating

execution engines with uniform distribution and independence

between attributes, which is too simple for the CardEst task.

The other CardEst benchmarks [4] typically rely on a fixed

schema using publicly available datasets, which have the

defect of representativeness and generalizability; additionally,

maintaining the established dependency relationships and data

distributions when scaling data is still a great challenge.

C2: Demanding Training Label Generation. For query-

driven methods, their effectiveness is fundamentally decided

by the size of diverse annotated queries and cardinality label

pairs for training, which can be generally obtained in two

ways. One is to extract training data from real-life industry

logs, but it is barely possible to collect such kinds of training

data due to the concerns of privacy [5]. The other one is to

construct self-defined training data. However, it may consume

a significant amount of CPU and memory resources, along

with tedious and time-consuming manual effort to construct

and run queries in a full-fledged system to gather labels. For

example, collecting cardinality labels for 500 queries with

1TB of input data could take nearly 10 days [6]. Therefore,

the exhaustive resource consumption and burdensome manual

effort for label generation challenge the model training.

C3: Limited Complexity of Workloads. The diversity of

training workloads greatly influences the model robustness

of learning-based methods, while the diversity of testing

workloads verifies the effectiveness of methods across various

scenarios. Workload diversity can be represented by three

dimensions [4], i.e., 1) query templates composed of operators,

2) access distribution of the predicates, and 3) query cardinal-

ities. On the one hand, existing benchmarks have fundamental

shortcomings in their template design. For instance, the state-

of-the-art CardEst benchmark STATS-CEB [4] loses sup-

port to some join types, including cycle/cyclic-joins, non-key

joins, and outer/semi/anti-joins, and selection predicates like

disjunctive logical predicates (∨) and arithmetic predicates.

On the other hand, existing benchmarks do not guarantee

4628

2025 IEEE 41st International Conference on Data Engineering (ICDE)

2375-026X/25/$31.00 ©2025 IEEE

DOI 10.1109/ICDE65448.2025.00369

BBAAD9C20180234D78A0072836F0B930E2B9B2091C41FBA0A4D98436B1942B0C6B4CB93861533B082259240898463FEBCAE921BA61D05B811BBFC2157ABE32D6241290ADFF21A9A794B72A276F474945CAF7ED3875D043744886019CE8BDACD8D7C6299B9E3

the generation diversity in predicate parameters or operator

cardinalities, since they only take a randomization and manual

inspection way to guarantee valid queries (cardinality > 0).

Therefore, generating diverse query templates and instantiating

parameters to fulfill arbitrary cardinality requirements remains

a challenging and time-consuming task.

To tame these challenges, we propose a benchmark toolkit

called ARTEMIS for CardEst with the following features.

First, we design a PK-oriented deterministic data generation

method by using a set of distribution functions to map from

PKs to common attribute columns, with PKs playing as

the seeds. Second, we employ a lightweight automatic label

generation method by modeling the cardinality calculation of

predicates as a Constraint Optimization Problem (COP) with

the predicates and the predefined data generation functions

as constraints, which can be solved precisely by COP solver.

Third, to accommodate diverse query generation, we first

utilize a dynamic weighted random walk over a well-defined

finite-state machine (FSM) to generate SQLs with various

predicates; we then vectorize the generated query template,

and compare it with the historical query template vectors to

adapt the weight on FSM; finally, for each generated query

template, we mutate or crossover each parameter with the dual

objectives of minimizing both the distance from the specified

cardinality requirement and the overlap in the accessed data

ranges between the new query and historical queries.

In summary, this paper makes the following contributions:

• We propose a computation-driven method for generating

realistic data dependencies and distributions.

• We introduce a constraint optimization-guided calculation

approach for efficient cardinality label generation.

• We design a guided search-based strategy to generate

diverse workloads.

• We provide a vivid demonstration to showcase the effec-

tiveness of ARTEMIS.

In this demonstration, we first provide an overview of

ARTEMIS in § II, including its workflow and key techniques.

The demonstration details are finally presented in § III.

II. DESIGN OVERVIEW

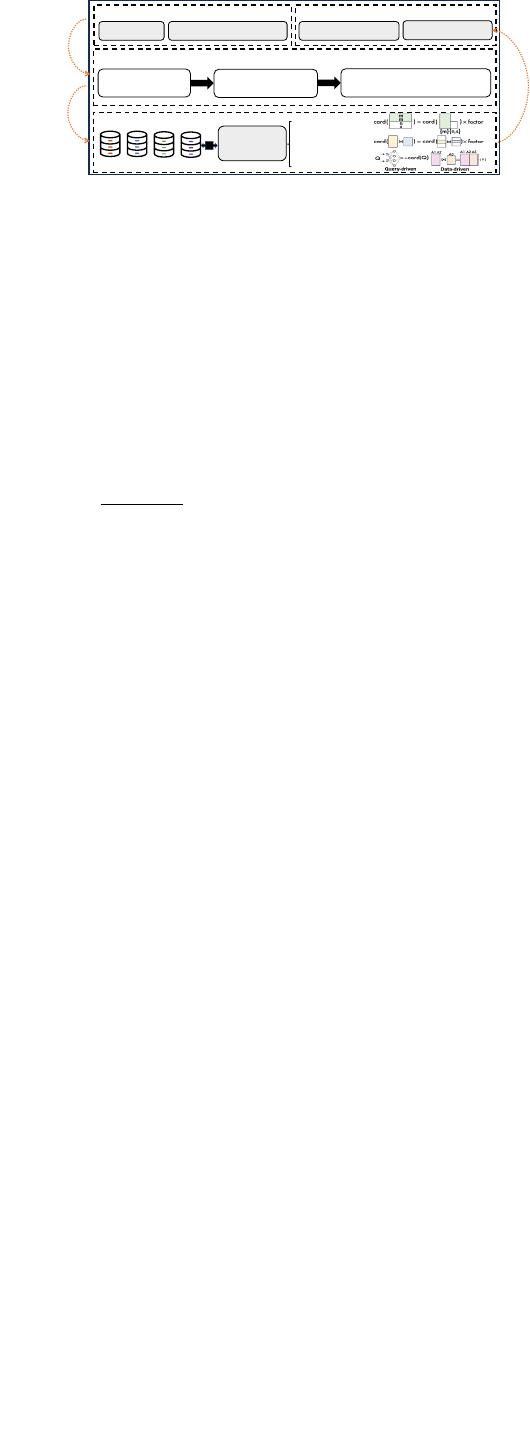

The architecture of ARTEMIS is shown in Fig. 1. Specifi-

cally, users configure their requirements about benchmarking

scenarios, including schema (e.g., table number and table

reference relationship); data characteristics (e.g., intra-column

distribution and inter-column dependency); query type dis-

tribution (e.g., chain/cycle joins, the joined table numbers),

and query cardinality. The generation has three phases: 1) it

generates schema and data following the requirements of the

data dependencies and distributions; 2) it constructs query tem-

plates that have various query types and diverse predicates; 3)

it instantiates predicate parameters for generating valid queries

with different cardinalities across various access ranges.

A. Data Characteristic Sketch

To scalably generate data with various distributions and

dependencies, we employ a PK-oriented deterministic gener-

ation strategy, where each non-key value is deduced from its

Application

Database Systems

User Interface

Schema Data/Workload Settings

Visualization

Cardinality Result

Generation Process

Step1: Generate

Schema and Data

Workflow of Artemis

Step2: Generate

Workload Templates

Step3: Instantiate Parameters

& Cardinality Calculation

Input

Test/Tune

Return Result

Cardinality

Estimation

Component

Histogram-based

Sampling-based

Learned-based

…

Fig. 1. ARTEMIS Architecture

corresponding PK under any intra-column distributions and

inter-column dependencies. Each table T has an automatic

incremental PK column, and each non-key column, e.g., col

i

,

follows an intra-column distribution ϕ

col

i

and has d

col

i

distinct

values from a specified domain (d

col

i

≤ |T |). We map PKs se-

quentially into d

col

i

groups with auto-incremented group IDs,

each corresponding to a distinct value x in col

i

. Let g

k

[P K]

(abbr. g

k

) represent the group ID for an individual PK w.r.t

x

k

in col

i

, where group sizes follow the frequency of x

k

from

ϕ

col

i

. Since for a single column, a linear function effectively

guarantees the intra-column distribution by controlling distinct

value frequency, we define the generation function from PK to

a non-key column col

i

as F unc

col

i

(g

k

) = α ∗ g

k

+ β, where

α =

x

max

−x

min

d

col

i

−1

and β = x

min

− α, with x

max/min

as the

column bounds. Given a value x

k

w.r.t g

k

, we can derive

its covered PK range. Specifically, from ϕ

col

i

, we obtain its

cumulative distribution function (CDF) F

col

i

. The PK range

for group g

k

is represented by start PK (P K

s

k

) and end

PK (P K

e

k

), calculated as P K

s

k

= ⌊|T | × F

col

i

(x

k−1

)⌋ + 1

and P K

e

k

= ⌊|T | × F

col

i

(x

k

)⌋, where x

k−1

and x

k

are

derived from the generation function of col

i

for g

k−1

and

g

k

, respectively. We efficiently map g

k

(i.e., x

k

) back to its

continuous PK range. Note that a column can be of any type,

achieved via a type transformer, and data is shuffled during

database population. Based on these generation functions,

we introduce the generation of four typical inter-column

dependencies for CardEst [7]: unique column combination

(UCC), order dependency (OD), functional dependency (FD),

and inclusion dependency (ID). We take ID as an example,

and the generation method of others can be found in our

GitHub repository [8]. ID indicates that any value in column

col

i

will also be found in col

j

(i ̸= j). Thus, ID dependency

exists between included column col

i

with d

col

i

and inclusive

column col

j

with d

col

j

. Since d

col

i

≤ d

col

j

, applying the same

generation function to both ensures values in col

i

exist in col

j

.

B. Workload Template Generation

To create a query template

b

Q, we have two generation steps:

1) we determine its query type and the number of involved

tables, then select a subset of table T

Q

to construct the query

template; 2) we compose the join predicates J and selection

predicates S in the query. The basic form is like SELECT

COUNT(*) FROM T

Q

WHERE J AND S. If there is a join,

we first initialize an empty join graph and then iteratively

update the graph by randomly selecting tables (nodes) to the

graph. The nodes selected are decided by the join type and join

number. For instance, to generate a cycle query, by searching

cycles within the schema that satisfy the required number of

4629

BBAAD9C20180234D78A0072836F0B930E2B9B2091C41FBA0A4D98436B1942B0C6B4CB93861533B082259240898463FEBCAE921BA61D05B811BBFC2157ABE32D6241290ADFF21A9A794B72A276F474945CAF7ED3875D043744886019CE8BDACD8D7C6299B9E3

of 4

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

文档被以下合辑收录

评论