ICDE 2025 BBS: Batch-Based Snapshot for the Cloud Database Backup (Alibaba Cloud)

BBS: Batch-Based Snapshot for the Cloud Database Backup

Xiaoshuang Peng¹, Xiaopeng Fan¹, Shi Cheng², Lingbin Meng², Cuiyun Fu², Wenchao Zhou², Chuliang Weng¹

¹East China Normal University, ²Alibaba Group

{xspeng, xpfan}@stu.ecnu.edu.cn, {chengshi.cs, lingbin.mlb, cuiyun.fcy, zwc231487}@alibaba-inc.com, clweng@dase.ecnu.edu.cn

Abstract—Many cloud databases take fine-grained regular snapshots, sparsely delete snapshots according to their importance, and dynamically maintain large numbers of snapshots to ensure data security and mine the value of cold data. However, among existing snapshot technologies, the write amplification of Copy-on-Write (CoW) introduces additional expensive I/O operations in a cloud environment. In Redirect-on-Write (RoW), modified data blocks are scattered among snapshots, creating dependencies between snapshots that seriously degrade recovery performance.

In this paper, we observe that snapshot accesses exhibit locality and continuity. We therefore propose an efficient Batch-Based Snapshot index, called BBS, which batches snapshot indexes according to the database workload and the access behavior of snapshots. Specifically, we use two key techniques, Shared-Subtrees Indexing and Batch-Based Dividing, to split the dependencies of the snapshot index. The snapshot index dependency chain is divided into batches, and no snapshot index depends on an index in another batch. Snapshot indexes within a batch reduce memory overhead by sharing subtrees. The index can locate data blocks directly instead of through iterative traversal.

In addition, the snapshot index deletion method is designed to fit the sparse snapshot deletion model. We have implemented a working system in Ceph. Evaluation results on datasets demonstrate that, compared with existing techniques, BBS effectively balances index memory overhead against recovery time.

Index Terms—Snapshot recovery, Index, Block device

I. INTRODUCTION

Cloud-native databases routinely take snapshots to ensure data reliability and durability, and they offer point-in-time recovery from snapshots to protect against accidental deletions or writes. A snapshot is a physical backup that copies raw data to a storage system. Cloud Block Storage (CBS) is an important data storage technique, with examples including Amazon Elastic Block Store (EBS) [1], OpenStack Cinder [2], Sheepdog [3], and Ceph RBD [4], [5]. CBS usually partitions a block device into logical data blocks, which are ultimately stored as objects in the storage cluster.
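
To make the block-to-object layout concrete, the following Python sketch maps a byte offset on a virtual block device to a logical block number and the object that would hold it. The 4 MiB object size and the `rbd_data.<image_id>.<block number in 16 hex digits>` naming are assumptions modeled on Ceph RBD defaults; `locate` is a hypothetical helper, not an RBD API.

```python
from typing import Tuple

OBJECT_SIZE = 4 * 1024 * 1024  # assumed 4 MiB per logical data block/object


def locate(offset: int, image_id: str,
           object_size: int = OBJECT_SIZE) -> Tuple[int, int, str]:
    """Hypothetical helper: return (block_no, offset_in_block, object_name)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    # Fixed-size partitioning: integer division gives the logical block.
    block_no, offset_in_block = divmod(offset, object_size)
    # Naming pattern modeled on RBD format-2 data objects (an assumption).
    object_name = f"rbd_data.{image_id}.{block_no:016x}"
    return block_no, offset_in_block, object_name


if __name__ == "__main__":
    print(locate(10 * 1024 * 1024, "abc123"))
```

For example, an offset of 10 MiB falls in block 2, 2 MiB into the object `rbd_data.abc123.0000000000000002`.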

Thanks to the abundant storage and computing resources in the cloud, a database can take hundreds of thousands of snapshots to record data states at a finer granularity. This makes it possible to separate out read-intensive services, such as security auditing and historical queries. Storage frameworks such as Snowflake [6] and Delta Lake [7] tend to keep snapshots together with online data and query continuous snapshots to provide time travel, a pattern also confirmed by the snapshot set queries of Alibaba Cloud [8]. In addition, applications [9]–[11] develop declarative extensions to SQL that make it convenient to specify and run multi-snapshot computations for auditing and other forms of fact checking. This new trend accesses runs of consecutive snapshots rather than a single snapshot and is sensitive to instant recovery latency.

However, existing snapshot techniques do not meet these new recovery requirements. Redirect-on-Write (RoW) is widely adopted in practical systems because of its high write performance and support for concurrent reads. Its principle is that RoW writes new data blocks only to the snapshot rather than to the source volume, so the dispersion of data blocks allows them to be read concurrently. However, recovery requires accessing multiple snapshots because each snapshot saves only part of the data blocks, and this overhead becomes significant when snapshots are taken at a large scale. Index recovery consists of loading the index from the remote node and locating the address of each data block locally. In RoW, index recovery must load more indexes because of the dependencies, and the number of hops needed to locate a block is proportional to the number of snapshots. A large index increases recovery latency and memory overhead, which strains the network bandwidth and computing resources of the storage system.
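
The hop-count argument can be illustrated with a minimal model of a RoW chain, in which each snapshot's index (a plain dict here, an assumption) records only the blocks written while that snapshot was current. Locating a block walks backward from the requested snapshot until some index contains it, so a rarely updated block costs one hop per snapshot in the chain. `row_lookup` is a hypothetical name.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical model: snapshot i's index maps only the blocks written while
# snapshot i was current (block number -> physical address).
SnapshotIndex = Dict[int, int]


def row_lookup(chain: List[SnapshotIndex], snap: int,
               block: int) -> Tuple[Optional[int], int]:
    """Locate `block` as of snapshot `snap`; return (address, hops)."""
    hops = 0
    # Walk from the requested snapshot back toward the oldest one.
    for i in range(snap, -1, -1):
        hops += 1
        if block in chain[i]:
            return chain[i][block], hops
    return None, hops  # the block was never written


if __name__ == "__main__":
    # Block 7 is written only in snapshot 0; snapshots 1..99 write blocks 101..199.
    chain = [{7: 1000}] + [{100 + i: 2000 + i} for i in range(1, 100)]
    print(row_lookup(chain, 99, 7))    # (1000, 100)
    print(row_lookup(chain, 99, 199))  # (2099, 1)
```

Block 7 was written only in the first snapshot, so reading it as of snapshot 99 visits all 100 indexes, whereas a block written in snapshot 99 is found in one hop.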

Many works build data block indexes [12]–[17] to accelerate recovery. They cache the mapping from data block numbers to physical block addresses, which speeds up locating data blocks. However, fragmentation inevitably worsens as database transactions run, so recovery is still affected by the number of snapshots.

Amazon EBS snapshots [18] copy the complete index of the previous snapshot before updating data blocks, which cuts off the dependency. In continuous access scenarios, this reduces the number of indexes to be loaded, but the redundancy between indexes increases local memory overhead. In addition, Amazon EBS offers Fast Snapshot Restore (FSR), which pre-stores fully initialized snapshots and thereby eliminates the I/O latency incurred when a block is accessed for the first time. However, prefetching full snapshots is resource-intensive.
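
The memory side of this trade-off can be sketched by counting index entries. The model below (an assumption, with entry counts standing in for memory) builds one full index per snapshot by copying the previous index and applying that snapshot's updates, as described above for EBS, and compares the total with storing only per-snapshot deltas as in plain RoW. `build_full_copy` and `entry_counts` are hypothetical helpers.

```python
from typing import Dict, List, Tuple

Index = Dict[int, int]  # block number -> physical address


def build_full_copy(updates: List[Index]) -> List[Index]:
    """One self-contained index per snapshot: copy the previous index, then apply updates."""
    indexes: List[Index] = []
    current: Index = {}
    for delta in updates:
        current = {**current, **delta}  # a fresh dict, so older indexes stay unchanged
        indexes.append(current)
    return indexes


def entry_counts(updates: List[Index]) -> Tuple[int, int]:
    """Return (entries with full copies, entries with per-snapshot deltas only)."""
    full = sum(len(index) for index in build_full_copy(updates))
    delta = sum(len(d) for d in updates)
    return full, delta


if __name__ == "__main__":
    base = {b: b for b in range(1000)}
    later = [{b: 5000 + s * 10 + b for b in range(s * 10, s * 10 + 10)}
             for s in range(1, 10)]
    print(entry_counts([base] + later))  # (10000, 1090)
```

With a 1,000-block base snapshot followed by nine snapshots that each modify 10 blocks, the full-copy scheme stores 10,000 entries while the deltas total 1,090; in exchange, any block in a full-copy index is found with a single lookup.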

Based on the database workload and snapshot access characteristics, we propose a new and effective index structure that reduces index size and balances recovery cost against memory capacity. Specifically, the snapshot dependency chain is divided into batches, with each index relying on the previous indexes in its batch rather than on snapshots outside the batch. By sharing subtrees within a batch, the address of a data block can be obtained directly while index memory overhead is reduced. In addition, prefetching only the indexes in a batch instead of all dependent snapshot indexes can significantly reduce the number of snapshot indexes to load and the recovery time.
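
The prefetching argument reduces to counting which indexes must be loaded to read snapshot `snap`. In the sketch below, a RoW chain may need every older index, while a batched layout needs only the indexes from the start of `snap`'s batch. The batch boundaries are hypothetical inputs rather than the batching policy of BBS, and both helper names are illustrative.

```python
import bisect
from typing import List


def indexes_to_load_row(snap: int) -> List[int]:
    """RoW: in the worst case, every index from snapshot 0 to `snap` is needed."""
    return list(range(0, snap + 1))


def indexes_to_load_batched(snap: int, batch_starts: List[int]) -> List[int]:
    """Batched: only the indexes from the first snapshot of `snap`'s batch.

    `batch_starts` is a sorted list of batch-leading snapshot ids beginning with 0.
    """
    pos = bisect.bisect_right(batch_starts, snap) - 1  # last batch start <= snap
    return list(range(batch_starts[pos], snap + 1))


if __name__ == "__main__":
    starts = [0, 32, 64, 96]  # hypothetical batches of 32 snapshots
    print(len(indexes_to_load_row(70)))              # 71
    print(len(indexes_to_load_batched(70, starts)))  # 7
```

With batches of 32 snapshots, reading snapshot 70 touches 7 indexes instead of 71.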

Consequently, we present a Batch-Based Snapshot index called BBS, which is built on two key techniques: Shared-Subtrees Indexing and Batch-Based Dividing. The former defines two types of indexes in a batch: the first snapshot index is a complete B+ tree, and the indexes of subsequent snapshots are partial B+ trees. The complete tree does not depend on earlier indexes, while the nodes of a partial tree point to previous indexes in the batch, effectively reducing redundancy. The latter forms batches according to the snapshot update ratio and the recent average continuous access length. We integrate BBS into Ceph RBD, where it supports snapshots and continuous recovery with low latency, and we use workloads to evaluate the performance of the working system.
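
The two techniques can be sketched in Python under simplifying assumptions. A two-level tree (a root list of leaf dicts) stands in for the B+ tree; the first index of a batch copies every leaf so that it shares nothing with earlier batches, and each later index copies only the root and the leaves it modifies, sharing the rest with the previous index in the batch. A lookup takes one root step and one leaf step regardless of how many snapshots precede it. `should_start_batch` is a hypothetical threshold rule that merely combines the two signals named above (update ratio and recent average continuous access length); it is not the dividing policy of BBS. All names and the `FANOUT` and `max_update_ratio` values are illustrative, and the volume is assumed to have a fixed number of blocks.

```python
from typing import Dict, List, Optional

FANOUT = 4  # slots per leaf node; tiny so the sharing is easy to see


class SnapshotIndex:
    """Two-level stand-in for a B+ tree: a root list of leaf dicts (slot -> address)."""

    def __init__(self, leaves: List[Dict[int, int]], complete: bool):
        self.leaves = leaves
        self.complete = complete

    def lookup(self, block: int) -> Optional[int]:
        # Direct lookup: root step + leaf step, no walk over earlier snapshots.
        leaf_no, slot = divmod(block, FANOUT)
        return self.leaves[leaf_no].get(slot)


def complete_index(prev: Optional[SnapshotIndex], updates: Dict[int, int],
                   num_blocks: int) -> SnapshotIndex:
    """First index of a batch: copy every leaf so nothing is shared with older batches."""
    if prev is None:
        leaves: List[Dict[int, int]] = [{} for _ in range(-(-num_blocks // FANOUT))]
    else:
        leaves = [dict(leaf) for leaf in prev.leaves]
    for block, addr in updates.items():
        leaf_no, slot = divmod(block, FANOUT)
        leaves[leaf_no][slot] = addr
    return SnapshotIndex(leaves, complete=True)


def partial_index(prev: SnapshotIndex, updates: Dict[int, int]) -> SnapshotIndex:
    """Later index in a batch: a new root that shares unmodified leaves with `prev`."""
    leaves = list(prev.leaves)  # copies the root only; leaf objects are shared
    copied = set()
    for block, addr in updates.items():
        leaf_no, slot = divmod(block, FANOUT)
        if leaf_no not in copied:  # copy a leaf the first time it is modified
            leaves[leaf_no] = dict(leaves[leaf_no])
            copied.add(leaf_no)
        leaves[leaf_no][slot] = addr
    return SnapshotIndex(leaves, complete=False)


def should_start_batch(batch_len: int, updated_in_batch: int, num_blocks: int,
                       avg_access_len: int, max_update_ratio: float = 0.25) -> bool:
    """Hypothetical dividing rule combining the two signals named in the text."""
    return (batch_len >= avg_access_len
            or updated_in_batch / num_blocks > max_update_ratio)


if __name__ == "__main__":
    s0 = complete_index(None, {b: 100 + b for b in range(16)}, num_blocks=16)
    s1 = partial_index(s0, {5: 900})      # modifies one slot in leaf 1
    print(s0.lookup(5), s1.lookup(5))     # 105 900
    print(s1.leaves[0] is s0.leaves[0])   # True: leaf 0 is shared
    print(should_start_batch(3, 5, 16, avg_access_len=8))  # True: 5/16 > 0.25
```

Only the modified leaf is duplicated in the partial index, which is where the memory saving over full index copies comes from; starting a new batch with a complete copy is what bounds the dependency chain.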

To sum up, we make the following contributions:

• We propose BBS, a high-performance snapshot index that locates data blocks directly and balances recovery time against index memory consumption.

• We design a batch-based snapshot deletion method that fits the sparse snapshot deletion mechanism and effectively speeds up snapshot deletion.

• We implement BBS on Ceph RBD. The evaluation shows that BBS achieves significant performance improvements over advanced block storage systems.

The rest of this paper is organized as follows. Section II presents the background. Section III introduces the motivation. Section IV describes the design and implementation of BBS. Section V shows the performance evaluation. Section VII discusses related work, and Section VIII concludes the paper.

II. BACKGROUND

A. Snapshot Implementations

Snapshot technologies mainly include CoW, RoW, and their variants, which have been extensively compared and analyzed [19]–[22]. Briefly, the double-write mechanism of CoW adds overhead to regular I/O and dramatically increases sync operations [22], [23]. The advantage of CoW is faster snapshot recovery, since only the original and current snapshots are needed. Volume snapshots in Rackspace Cloud Block Storage [24], Google Cloud Compute Engine Persistent Disk [25], and virtual disks in Microsoft Azure [26] are CoW snapshots. However, under intensive snapshotting, the double-write mechanism of CoW introduces huge overhead and reduces the throughput of the primary database system, so we do not consider CoW.
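
The double-write cost can be illustrated with toy I/O accounting. In the sketch below (an assumption that ignores metadata updates and caching), the first overwrite of a block after each snapshot under CoW reads the old data, writes it to a snapshot area, and then writes the new data in place, whereas RoW simply writes the new data to a fresh location. The helper names and the `"snap"` operation marker are hypothetical.

```python
from typing import List, Set, Tuple, Union

Op = Union[str, int]  # "snap" takes a snapshot; an int writes that block


def cow_io(ops: List[Op]) -> Tuple[int, int]:
    """Return (reads, writes) under copy-on-write."""
    reads = writes = 0
    has_snapshot = False
    preserved: Set[int] = set()  # blocks already copied out since the last snapshot
    for op in ops:
        if op == "snap":
            has_snapshot = True
            preserved.clear()  # every block must be preserved again
            continue
        if has_snapshot and op not in preserved:
            reads += 1   # read the old block
            writes += 1  # copy it to the snapshot area (the extra write)
            preserved.add(op)
        writes += 1      # overwrite the block in place
    return reads, writes


def row_io(ops: List[Op]) -> Tuple[int, int]:
    """Return (reads, writes) under redirect-on-write: new data goes to a new location."""
    writes = sum(1 for op in ops if op != "snap")
    return 0, writes


if __name__ == "__main__":
    ops = ["snap"] + list(range(100)) * 2  # one snapshot, then each block written twice
    print(cow_io(ops), row_io(ops))        # (100, 300) (0, 200)
```

Taking a snapshot before every write is the worst case for CoW: each write then triggers a copy, which is why intensive snapshotting amplifies its overhead.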

In RoW-based snapshots, new data blocks are written to blocks belonging to the current snapshot. RoW sacrifices the contiguity of the original copy in exchange for lower-overhead updates. As the system captures many snapshots, data block fragmentation becomes increasingly severe. To locate a data block, the system first checks whether the block exists in the current snapshot; if not, it searches earlier snapshots through their root nodes until the block is found. This process inevitably increases the number of hops. Iterative search degrades performance because data blocks are updated infrequently and snapshots are numerous, so a lookup may traverse many snapshots. Amazon EBS [1] has an optimized RoW snapshot index that cuts off index dependencies: it copies the entire previous snapshot index before updating data blocks. In addition, the index uses a uniform region to represent contiguous unmodified data blocks, which reduces the number of nodes. However, this index is not suited to batch access because the indexes are loaded iteratively. Moreover, as the number of snapshots grows, each region shrinks and the number of regions increases until the index degenerates into a complete index, which increases memory consumption.
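
Region degeneration can be shown by counting regions. The sketch below assumes a region is a maximal run of consecutive blocks last written by the same snapshot; scattered writes split regions until there is one region per block, the size of a complete index. The ownership model and helper names are illustrative assumptions, not the EBS index format.

```python
from typing import List


def count_regions(owner: List[int]) -> int:
    """owner[b] is the snapshot that last wrote block b; count maximal same-owner runs."""
    if not owner:
        return 0
    # Each boundary between differently owned neighbors starts a new region.
    return 1 + sum(1 for a, b in zip(owner, owner[1:]) if a != b)


def regions_over_time(num_blocks: int, writes_per_snapshot: List[List[int]]) -> List[int]:
    """Apply each snapshot's writes in order and record the region count after each."""
    owner = [0] * num_blocks  # snapshot 0 initially owns every block
    counts = []
    for snap, blocks in enumerate(writes_per_snapshot, start=1):
        for b in blocks:
            owner[b] = snap
        counts.append(count_regions(owner))
    return counts


if __name__ == "__main__":
    writes = [[1, 5, 9, 13], [2, 6, 10, 14], [3, 7, 11, 15]]  # strided updates
    print(regions_over_time(16, writes))  # [9, 13, 16]
```

After three strided snapshots, the 16-block volume needs 16 regions, so the region encoding saves nothing over a per-block index.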

B. Snapshot Support at Different Layers

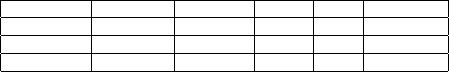

TABLE I: Summary of existing methods for database backup and recovery (FS: file system, DB: database)

| Methods | Mysqldump | Xtrabackup | BTRFS | LVM | CoW&RoW |
|---|---|---|---|---|---|
| Layer | DB | DB | FS | Block | Cloud |
| Backup speed | slow | slow | fast | fast | fast |
| Restore speed | slow | medium | fast | fast | slow |

Snapshot technologies can be implemented at various layers, as shown in Table I. At the database layer, an administrator can choose Mysqldump [27] for logical backup or Percona Xtrabackup [28] for physical backup. A logical backup stores the queries executed by transactions, while a physical backup copies the raw data to storage. During recovery, the stored queries are re-executed, or the backup data is copied to the database directory. However, re-executing database queries makes recovery I/O-intensive. Xtrabackup does not replay transactions and only copies the original data, so it restores faster than Mysqldump. The trade-off is that this backup approach incurs significant storage overhead.

Snapshot technologies can also be implemented at the file system or block layer. Each snapshot is a separate tree, but it may share subtrees with other snapshots. When the user takes a snapshot of a volume, the system simply duplicates the root node of the original volume as the root of the snapshot's tree, and this new root points to the same children as the original volume. Although creation overhead is light, the first write to a block is expensive because the entire tree of metadata nodes needs to be copied and linked to the root node of the snapshot.

LVM [29] operates between the file system and the storage device and provides fast snapshot creation and restoration using CoW. However, CoW hurts run-time performance because copying data results in redundant writes.
