DuckDB an Embeddable Analytical Database.pdf

免费下载

DuckDB: an Embeddable Analytical Database

Mark Raasveldt

m.raasveldt@cwi.nl

CWI, Amsterdam

Hannes Mühleisen

hannes@cwi.nl

CWI, Amsterdam

ABSTRACT

The immense popularity of SQLite shows that there is a need

for unobtrusive in-process data management solutions. How-

ever, there is no such system yet geared towards analytical

workloads. We demonstrate DuckDB, a novel data manage-

ment system designed to execute analytical SQL queries

while embedded in another process. In our demonstration,

we pit DuckDB against other data management solutions

to showcase its performance in the embedded analytics sce-

nario. DuckDB is available as Open Source software under a

permissive license.

ACM Reference Format:

Mark Raasveldt and Hannes Mühleisen. 2019. DuckDB: an Em-

beddable Analytical Database. In 2019 International Conference on

Management of Data (SIGMOD ’19), June 30-July 5, 2019, Ams-

terdam, Netherlands. ACM, New York, NY, USA, 4 pages. https:

//doi.org/10.1145/3299869.3320212

1 INTRODUCTION

Data management systems have evolved into large mono-

lithic database servers running as stand-alone processes. This

is partly a result of the need to serve requests from many

clients simultaneously and partly due to data integrity re-

quirements. While powerful, stand-alone systems require

considerable eort to set up properly and data access is con-

stricted by their client protocols [

12

]. There exists a com-

pletely separate use case for data management systems, those

that are embedded into other processes where the database

system is a linked library that runs completely within a “host”

process. The most well-known representative of this group

is SQLite, the most widely deployed SQL database engine

with more than a trillion databases in active use [

4

]. SQLite

strongly focuses on transactional (OLTP) workloads, and con-

tains a row-major execution engine operating on a B-Tree

storage format [

3

]. As a consequence, SQLite’s performance

on analytical (OLAP) workloads is very poor.

Permission to make digital or hard copies of part or all of this work for

personal or classroom use is granted without fee provided that copies are

not made or distributed for prot or commercial advantage and that copies

bear this notice and the full citation on the rst page. Copyrights for third-

party components of this work must be honored. For all other uses, contact

the owner/author(s).

SIGMOD ’19, June 30-July 5, 2019, Amsterdam, Netherlands

© 2019 Copyright held by the owner/author(s).

ACM ISBN 978-1-4503-5643-5/19/06.

https://doi.org/10.1145/3299869.3320212

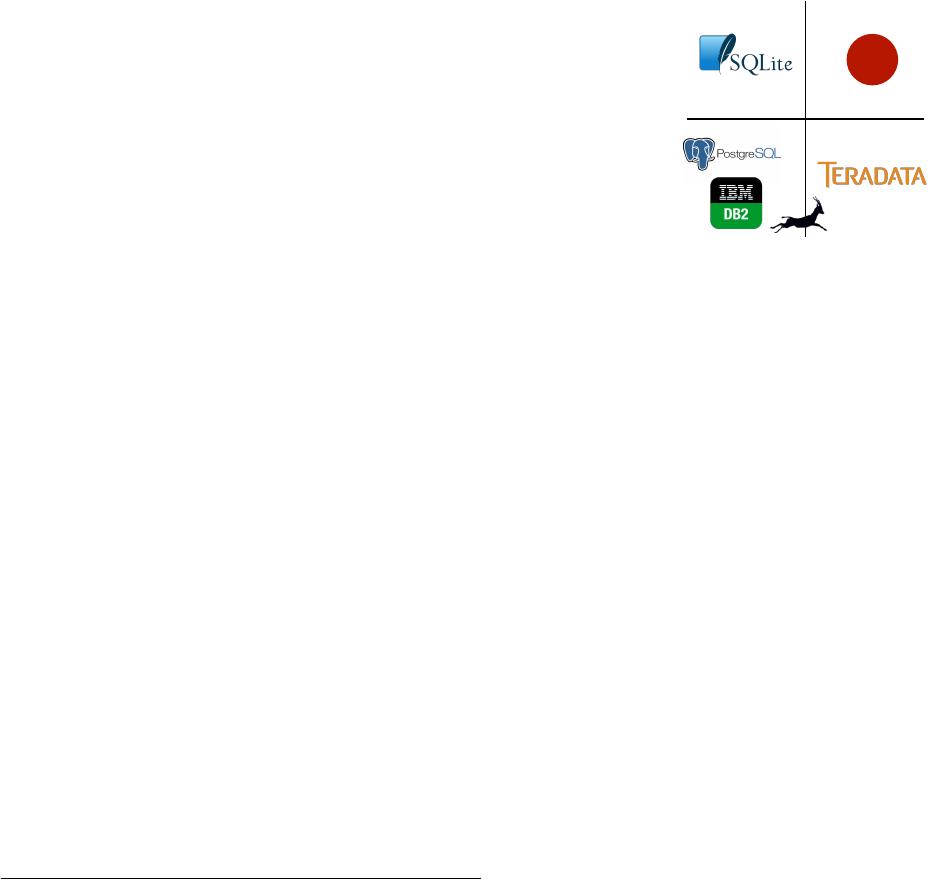

OLTP

OLAP

Embedded

Stand-Alone

?

Figure 1: Systems Landscape

There is a clear need for embeddable analytical data man-

agement. This needs stems from two main sources: Inter-

active data analysis and “edge” computing. Interactive data

analysis is performed using tools such as R or Python. The

basic data management operators available in these envi-

ronments through extensions (dplyr [

14

], Pandas [

6

], etc.)

closely resemble stacked relational operators, much like in

SQL queries, but lack full-query optimization and transac-

tional storage. Embedded analytical data management is also

desirable for edge computing scenarios. For example, con-

nected power meters currently forward data to a central

location for analysis. This is problematic due to bandwidth

limitations especially on radio interfaces, and also raises pri-

vacy concerns. An embeddable analytical database is very

well-equipped to support this use case, with data analyzed

on the edge node. The two use cases of interactive analysis

and edge computing appear orthogonal. But surprisingly, the

dierent use cases yield similar requirements. For example,

in both use cases portability and resource requirements are

critical, and systems that are careful with both will do well

in both usage scenarios.

In our previous research, we have developed MonetDBLite,

an embedded analytical system that was derived from the

MonetDB system [

13

]. MonetDBLite proved successfully

that there is a real interest in embedded analytics, it enjoys

thousands of downloads per month and is used all around

the world from the Dutch central bank to the New Zealand

police. However, its success also uncovered several issues

that proved very complex to address in a non-purpose-built

SIGMOD ’19, June 30-July 5, 2019, Amsterdam, Netherlands Mark Raasveldt and Hannes Mühleisen

system. The following requirements for embedded analytical

databases were identied:

•

High eciency for OLAP workloads, but without com-

pletely sacricing OLTP performance. For example,

concurrent data modication is a common use case in

dashboard-scenarios where multiple threads update

the data using OLTP queries and other threads run the

OLAP queries that drive visualizations simultaneously.

•

Ecient transfer of tables to and from the database

is essential. Since both database and application run

in the same process and thus address space, there is

a unique opportunity for ecient data sharing which

needs to be exploited.

•

High degree of stability, if the embedded database

crashes, for example due to an out-of-memory situ-

ation, it takes the host down with it. This can never

happen. Queries need to be able to be aborted cleanly

if they run out of resources, and the system needs to

gracefully adapt to resource contention.

•

Practical “embeddability” and portability, the database

needs to run in whatever environment the host does.

Dependencies on external libraries (e.g.

openssh

)

for either compile- or runtime have been found to

be problematic. Signal handling, calls to

exit()

and

modication of singular process state (locale, working

directory etc.) are forbidden.

In this demonstration, we present the capabilities of our

new system, DuckDB. DuckDB is a new purpose-built em-

beddable relational database management system. DuckDB

is available as Open-Source software under the permissive

MIT license

1

. To the best of our knowledge, there currently

exists no purpose-built embeddable analytical database de-

spite the clear need outlined above. DuckDB is no research

prototype but built to be widely used, with millions of test

queries run on each commit to ensure correct operation and

completeness of the SQL interface. Our rst-ever demonstra-

tion of DuckDB will pit it against other systems on a small

device. We will allow viewers to increase the size of the

dataset processed, and observe various metrics such as CPU

load and memory pressure as the dataset size changes. This

will demonstrate the performance of DuckDB for analytical

embedded data analysis.

2 DESIGN AND IMPLEMENTATION

DuckDB’s design decisions are informed by its intended use

case: embedded analytics. Overall, we follow the “textbook”

separation of components: Parser, logical planner, optimizer,

physical planner, execution engine. Orthogonal components

are the transaction and storage managers. While DuckDB

is rst in a new class of data management systems, none of

1

https://github.com/cwida/duckdb

API C/C++/SQLite

SQL Parser libpg_query [2]

Optimizer Cost-Based [7, 9]

Execution Engine Vectorized [1]

Concurrency Control Serializable MVCC [10]

Storage DataBlocks [5]

Table 1: DuckDB: Component Overview

DuckDB’s components is revolutionary in its own regard.

Instead, we combined methods and algorithms from the state

of the art that were best suited for our use cases.

Being an embedded database, DuckDB does not have a

client protocol interface or a server process, but instead is

accessed using a C/C++ API. In addition, DuckDB provides

a SQLite compatibility layer, allowing applications that pre-

viously used SQLite to use DuckDB through re-linking or

library overloading. As with MonetDBLite, we have also im-

plemented the database APIs for R (DBI) and Python (PEP

249).

The SQL parser is derived from Postgres’ SQL parser that

has been stripped down as much as possible [

2

]. This has

the advantage of providing DuckDB with a full-featured and

stable parser to handle one of the most volatile form of its

input, SQL queries. The parser takes a SQL query string as

input and returns a parse tree of C structures. This parse tree

is then immediately transformed into our own parse tree of

C++ classes to limit the reach of Postgres’ data structures.

This parse tree consists of statements (e.g.

SELECT

,

INSERT

etc.) and expressions (e.g. SUM(a)+1).

The logical planner consists of two parts, the binder and

the plan generator. The binder resolves all expressions re-

ferring to schema objects such as tables or views with their

column names and types. The logical plan generator then

transforms the parse tree into a tree of basic logical query

operators such as scan, lter, project, etc. After the plan-

ning phase, we have a fully type-resolved logical query plan.

DuckDB keeps statistics on the stored data, and these are

propagated through the dierent expression trees as part of

the planning process. These statistics are used in the opti-

mizer itself, and are also used for integer overow prevention

by upgrading types when required.

DuckDB’s optimizer performs join order optimization us-

ing dynamic programming [

7

] with a greedy fallback for

complex join graphs [

11

]. It performs attening of arbitrary

subqueries as described in Neumann et al. [

9

]. In addition,

there are a set of rewrite rules that simplify the expression

tree, by performing e.g. common subexpression elimination

of 4

免费下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

文档被以下合辑收录

评论