Planecell Representing Structural Space with Plane Elements.pdf

50墨值下载

Planecell: Representing Structural Space with Plane Elements

Lei Fan

1,2

, Long Chen

2

, Kai Huang

2

and Dongpu Cao

3

Abstract— Reconstruction based on the stereo camera has

received considerable attention recently, but two particular

challenges still remain. The first concerns the need to present

and compress data in an effective way, and the second is to

maintain as much of the available information as possible

while ensuring sufficient accuracy. To overcome these issues,

we propose a new 3D representation method, namely, planecell,

that extracts planarity from the depth-assisted image segmen-

tation and then directly projects these depth planes into the

3D world. The proposed method demonstrates its advancement

especially dealing with large-scale structural environment, such

as autonomous driving scene. The reconstruction result of our

method achieves equal accuracy compared to dense point clouds

and compresses the output file 200 times. To further obtain

global surfaces, an energy function formulated from Condi-

tional Random Field that generalizes the planar relationships

is maximized. We evaluate our method with reconstruction

baselines on the KITTI outdoor scene dataset, and the results

indicate the superiorities compared to other 3D space repre-

sentation methods in accuracy, memory requirements and the

scope of applications.

I. INTRODUCTION

3D reconstruction has been an active research area in

the computer vision community, which can be used in

numerous tasks, such as perception and navigation of intelli-

gent robotics, high precision mapping, and online modeling.

Among various sensors that can be used for reconstruc-

tion, stereos cameras are popular for offering advantages in

terms of being low-cost and supplying color information.

Many researchers have improved the precision and speed of

self-positioning and depth calculation algorithms to enable

better reconstruction. However, the basic map representa-

tion method determines the upper bound of reconstruction

performance to some extent. Current approaches including

point clouds, voxel-based or piece-wise planar methods are

confronted with problems dealing massive stereo image

sequences, such as significant redundancy, ambiguities and

high memory requirements. To overcome these limitations,

we propose a new representation method named planecell,

which models planes to deliver geometric information in the

3D space.

It is a classical approach to representing the 3D space

with a preliminary point-level map. The point-based repre-

This work was supported in part by the National Natural Science

Foundation of China under Grant 61773414.

1

Lei Fan is with the Vehicle Intelligence Pioneers Inc., Qingdao Shan-

dong 266109, P.R.China chenl46@mail.sysu.edu.cn

2

Lei Fan, Long Chen and Huang kai are with School of Data and Com-

puter Science, Sun Yat-sen University, Guangzhou, Guangdong, P.R.China.

chenl46@mail.sysu.edu.cn

3

Dongpu Cao is with Department of Mechanical and Mechatronics

Engineering, University of Waterloo, 200 university avenue west, Waterloo,

Ontario N2L3G1, Canada. dongpu@uwaterloo.ca

sentations usually suffer a tradeoff of density and efficiency.

Many approaches [17], [1], [14] have been developed to

address this issue, i.e., to merge similar points in the 3D

reconstruction results for both indoor and outdoor scenes.

The current leading representation method, called the voxel

map [2], [22], [17], [20], is designed to give each voxel

grid an occupancy probability, and then aggregates all points

within a fixed range. However, dense reconstructions using

regular voxel grids are limited to reach small volumes

because of their memory requirements.

Previous studies have adopted the plane prior both in

stereo matching [23] and reconstruction [17], [18], [10],

[3]. Deriving primitives in the model raises the complex-

ity, which restricts further applications. The structure-from-

motion method [3] presented the urban scene with planes

underlying sparse data. Superpixels or image segmentation

methods have been applied in the representation [4] as basic

components. Combined with meshes and smoothing terms, it

achieves good results on large-scale scenes. Although these

methods can reconstruct the scene in a dense and light-

weight approach, the accuracy and time-consumption are still

unsatisfactory.

In this paper, we propose a novel approach that differs

by mapping the 3D space with basic plane units directly

extracting from 2D images, which is called planecell for

it resembles cells to a living being. The proposed method

utilizes a general function to represent a group of points with

similar geometric information, i.e., belong to the same plane

by a depth-aware superpixel segmentation, and these planes

are projected into the real-world coordinates after plane-

fitting with depth values. The standardized representation

promotes memory efficiency and provides convenience for

following computations, such as large surface segmentation

and distance calculation. Our method extracts planecells from

images by superpixelizing the input image following the

hierarchical strategy of SEEDS [19] and converts them into

a 3D map. Further aggregation of planecells to a larger

surface is modeled by a Conditional Random Field (CRF)

formulation. The proposed representation is motivated by the

planar nature of the environment. The input to our method

is stereo pairs, and the output is a plane-based 3D map with

decent pixel-wise precision and high compression rate. Note

the depth acquirement is not specified, e.g. stereo matching

algorithms, LiDAR or RGB-D sensors can also be used.

The detailed contributions of this paper are as follows:

(a) We propose a novel plane-based 3D map representa-

tion method that demonstrates remarkable accuracy and has

enhanced the space perception abilities. (b) The proposed

method reduces the required memory of presenting large-

2018 IEEE Intelligent Vehicles Symposium (IV)

Changshu, Suzhou, China, June 26-30, 2018

978-1-5386-4451-5/18/$31.00 ©2018 IEEE 978

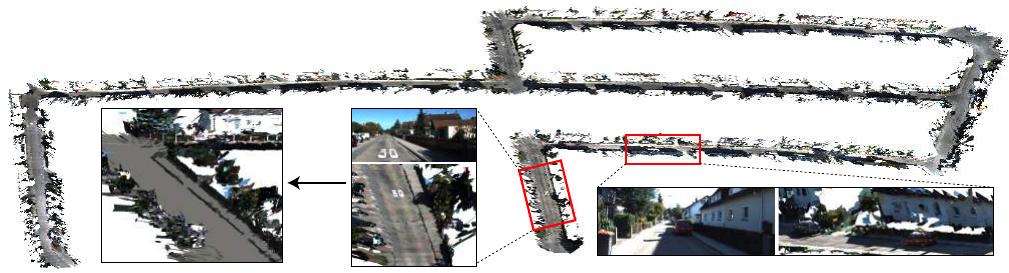

Road extraction after coplanar planecell aggregation

Frames: 2000 continuous stereo pairs from the KITTI odometry dataset

Time: 411s

Map Size: 89.1MB

Map details

Input image

Input image

Fig. 1. The map reconstructed by proposed method. The result shows three advantages: (a) the ability of dealing with large-scale reconstruction; (b) the

low-loss of accuracy (detailed quantitative evaluation is processed on KITTI stereo dataset compared to ground truth); (c) the low requirements of both

time and memory.

scale maps with innovative superpixel segmentation. (c) The

expansibility and efficiency of our representation method

are studied in applications include but not limited to road

extraction and obstacle avoidance in the experiment. In

practice, this objective can be optimized on a single core in

as little as 0.2 seconds for about 700 planecells. To further

aggregate coplanar planes requires only 0.1s per frame. The

remainder of this paper is organized as follows: Section II

briefly reviews the related literature. The overview of the

proposed method is introduced in Section III. The detail of

our method is explained in Section IV. In Section V, we

demonstrate the 3D representation. The experimental results

are conducted in Section VI. In Section VII, we conclude

this paper.

II. RELATED WORK

Basic 3D map representation methods using an image pair

are inheritors of various stereo matching algorithms [23],

[15], [24]. Point-based 3D reconstruction methods directly

transforming stereo matching results lack structural repre-

sentations. Recent point-level online scanning [25] produces

a high-quality 3D model of small objects with the geometric

surface prior, which is simpler to operate than strong shape

assumptions. For large-scale reconstructions, sparse point-

based representations are mainly used for their quality and

speed. The point-based maps embedded in the system [8]

is designed for real-time applications, such as localization.

Adopting denser point clouds in the mapping is challenging

because it involves managing millions of discrete values.

The heightmap is a representation adopting 2.5D contin-

uous surface representations, which shows its advancement

modeling large buildings and floors. Gallup et al. proposed

an n-layer heightmap [11] to support more complex 3D

reconstruction of urban scenes. The proposed heightmap

enforced vertical surfaces and avoided major limitations

when reconstructing overhanging structures. The basic unit

of heightmap is the probability occupancy grid computed by

the bayesian inference, which could compress surface data

efficiently but is also lossy of point-level precision.

Recent studies on voxelized 3D reconstruction focus on

infusing primitives into the reconstructions [16], [17], [9],

[5] or utilizing scalable data structures to meet CPU require-

ments [20]. Dame et al. proposed a formulation [6] which

combines shape priors-based tracking and reconstruction.

The map was represented as voxels with two parameters in-

cluding the distance to the closest surface and the confidence

value. Nonetheless, the accuracy of volumetric reconstruction

is always limited to itself, and re-estimating object surfaces

from voxels or 3D grids leads to ambiguities.

Planar nature assumptions have been applied to both the

reconstruction [10], [4] and depth recovery from a stereo

pair [23], [21]. For surface reconstruction or segmentation,

Liu et al. [16] partitioned the large-scale environment into

structural surfaces including planes, cylinders, and spheres

using a higher-order CRF. A bottom-up progressive approach

is adopted alternately on the input mesh with high geomet-

rical and topological noises. Adopting this assumption, we

present a new representation method of 3D space, which is

composed of planes with pixel-level accuracy.

III. SYSTEM OVERVIEW

As shown in Fig. 2, the input to the system is a com-

bination of the stereo image. The disparity map is pre-

calculated with stereo matching algorithms. We use a depth-

aware superpixel segmentation method with an additional

depth term. A hill-climbing [19] superpixel segmentation

method is applied to the color image with a regularization

term to reduce the complexity. Sparse depth results produced

by fast matching algorithms can still be the input, as we

utilize random sampling to omit the effect of outliers during

plane-fitting. The boundaries of the segmentation are further

updated after plane functions have been assigned to each

segment. The superpixels are the basic elements of the

mapping process. We extract the vertices of each plane

and then convert them into the camera coordinate system.

For existing 3D planes, we aggregate those whose spatial

relationship are planarity while minimizing the total energy

function.

979

of 8

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论