
Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition

Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun

Abstract—Existing deep convolutional neural networks (CNNs) require a fixed-size (e.g., 224×224) input image. This requirement is “artificial” and may reduce the recognition accuracy for images or sub-images of arbitrary size/scale. In this work, we equip the networks with a more principled pooling strategy, “spatial pyramid pooling”, to eliminate the above requirement. The new network structure, called SPP-net, can generate a fixed-length representation regardless of image size/scale. Pyramid pooling is also robust to object deformations. With these advantages, SPP-net should in general improve all CNN-based image classification methods. On the ImageNet 2012 dataset, we demonstrate that SPP-net boosts the accuracy of a variety of published CNN architectures despite their different designs. On the Pascal VOC 2007 and Caltech101 datasets, SPP-net achieves state-of-the-art classification results using a single full-image representation and no fine-tuning.

The power of SPP-net is also significant in object detection. Using SPP-net, we compute the feature maps from the entire image only once, and then pool features in arbitrary regions (sub-images) to generate fixed-length representations for training the detectors. This method avoids repeatedly computing the convolutional features. In processing test images, our method computes convolutional features 30-170× faster than the recent and most accurate method R-CNN (and 24-64× faster overall), while achieving better or comparable accuracy on Pascal VOC 2007.

In the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2014, our methods rank #2 in object detection and #3 in image classification among all 38 teams. This manuscript also introduces the improvements made for this competition.

Index Terms—Convolutional Neural Networks, Spatial Pyramid Pooling, Image Classification, Object Detection


1 INTRODUCTION

We are witnessing a rapid, revolutionary change in our vision community, mainly caused by deep convolutional neural networks (CNNs) [1] and the availability of large scale training data [2]. Deep-networks-based approaches have recently been substantially improving upon the state of the art in image classification [3], [4], [5], [6], object detection [7], [8], [5], many other recognition tasks [9], [10], [11], [12], and even non-recognition tasks.

However, there is a technical issue in the training

and testing of the CNNs: the prevalent CNNs require

a fixed input image size (e.g., 224×224), which limits

both the aspect ratio and the scale of the input image.

When applied to images of arbitrary sizes, current

methods mostly fit the input image to the fixed size,

either via cropping [3], [4] or via warping [13], [7],

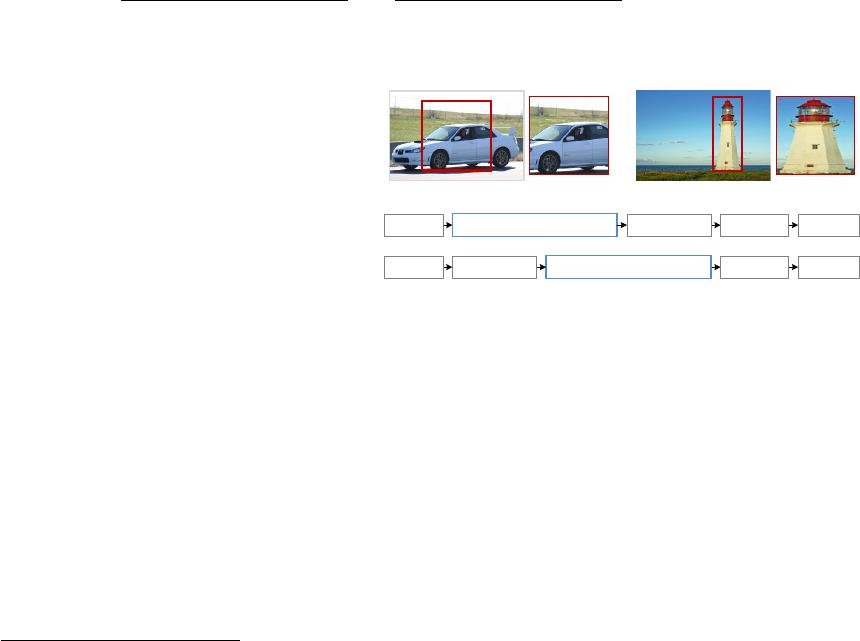

as shown in Figure 1 (top). But the cropped region

may not contain the entire object, while the warped

content may result in unwanted geometric distortion.

Recognition accuracy can be compromised due to the

content loss or distortion. Besides, a pre-defined scale

• K. He and J. Sun are with Microsoft Research, Beijing, China. E-mail:

{kahe,jiansun}@microsoft.com

• X. Zhang is with Xi’an Jiaotong University, Xi’an, China. Email:

xyz.clx@stu.xjtu.edu.cn

• S. Ren is with University of Science and Technology of China, Hefei,

China. Email: sqren@mail.ustc.edu.cn

This work was done when X. Zhang and S. Ren were interns at Microsoft

Research.

crop warp

spatial pyramid pooling

crop / warp

conv layersimage fc layers output

image conv layers fc layers output

Figure 1: Top: cropping or warping to fit a fixed size.

Middle: a conventional deep convolutional network

structure. Bottom: our spatial pyramid pooling net-

work structure.

(e.g., 224) may not be suitable when object scales vary.

Fixing the input size overlooks the issues involving

scales.
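To make the crop/warp trade-off concrete, the Python sketch below measures both effects for a single image. It assumes the common recipe of resizing the shorter side to the target and cutting a centered square for cropping, and a direct anisotropic resize for warping; the function names are hypothetical, and the 224-pixel target is the example size from the text.

```python
def center_crop_coverage(width, height, target=224):
    """Fraction of the image area kept by a centered target x target crop,
    after resizing the shorter side to `target` (aspect ratio preserved)."""
    scale = target / min(width, height)
    resized_area = (width * scale) * (height * scale)
    return (target * target) / resized_area


def warp_aspect_change(width, height, target=224):
    """Ratio of horizontal to vertical scale factors when the whole image is
    warped to target x target; 1.0 means shapes are not distorted."""
    return (target / width) / (target / height)


if __name__ == "__main__":
    # A 500 x 375 (4:3) image.
    print(round(center_crop_coverage(500, 375), 3))  # 0.75: a quarter of the area is cut away
    print(round(warp_aspect_change(500, 375), 3))    # 0.75: width squeezed 25% relative to height
```

Cropping keeps the geometry but discards content; warping keeps all content but rescales the two axes differently, and neither problem disappears unless the input is square.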

So why do CNNs require a fixed input size? A CNN mainly consists of two parts: convolutional layers, and fully-connected layers that follow. The convolutional layers operate in a sliding-window manner and output feature maps which represent the spatial arrangement of the activations (Figure 2). In fact, convolutional layers do not require a fixed image size and can generate feature maps of any size. On the other hand, fully-connected layers by definition require an input of fixed size/length. Hence, the fixed-size constraint comes only from the fully-connected layers, which exist at a deeper stage of the network.
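The argument that only the fully-connected layers impose a fixed size can be checked with shape arithmetic. The Python sketch below pushes two input sizes through a hypothetical stack of convolution/pooling layers (the kernel, stride, padding, and 256-channel values are illustrative assumptions, not the networks evaluated in the paper) and then tries to feed the flattened maps to a fully-connected layer whose weight matrix was sized for 224×224 inputs.

```python
import numpy as np

# Hypothetical conv/pool stack, one (kernel, stride, padding) tuple per layer.
LAYERS = [(7, 2, 3), (3, 2, 1), (3, 2, 1), (3, 2, 1)]
CHANNELS = 256


def out_side(n, layers=LAYERS):
    """Side length of the last feature map for an n x n input."""
    for kernel, stride, pad in layers:
        n = (n + 2 * pad - kernel) // stride + 1
    return n


def flat_length(n):
    """Length of the flattened last feature map (what an fc layer would see)."""
    side = out_side(n)
    return CHANNELS * side * side


# The fc weight matrix is sized once, for 224 x 224 inputs (8 outputs keep it small).
fc_weight = np.zeros((8, flat_length(224)))


def fc_accepts(n):
    """True if the flattened conv output for an n x n input fits the fc layer."""
    x = np.zeros(flat_length(n))
    try:
        fc_weight @ x  # raises ValueError when the inner dimensions disagree
        return True
    except ValueError:
        return False


for n in (224, 180):
    print(n, out_side(n), flat_length(n), fc_accepts(n))
# 224 14 50176 True
# 180 12 36864 False
```

The convolutional part runs on both sizes without complaint; only the matrix product of the fully-connected layer fails.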

arXiv:1406.4729v2 [cs.CV] 29 Aug 2014


[Figure 2 panels: (a) image, (b) feature maps, (c) strongest activations; shown for conv5 filters #175, #55, #66, and #118.]

Figure 2: Visualization of the feature maps. (a) Two images in Pascal VOC 2007. (b) The feature maps of some conv5 filters. The arrows indicate the strongest responses and their corresponding positions in the images. (c) The ImageNet images that have the strongest responses of the corresponding filters. The green rectangles mark the receptive fields of the strongest responses.

In this paper, we introduce a spatial pyramid pooling (SPP) [14], [15] layer to remove the fixed-size constraint of the network. Specifically, we add an SPP layer on top of the last convolutional layer. The SPP layer pools the features and generates fixed-length outputs, which are then fed into the fully-connected layers (or other classifiers). In other words, we perform some information “aggregation” at a deeper stage of the network hierarchy (between convolutional layers and fully-connected layers) to avoid the need for cropping or warping at the beginning. Figure 1 (bottom) shows the change of the network architecture by introducing the SPP layer. We call the new network structure SPP-net.
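A minimal NumPy sketch of such a layer follows. It assumes max pooling within each bin and a three-level pyramid of 4×4, 2×2, and 1×1 bins; the pyramid levels and the floor/ceil rule for bin edges (the rule used by adaptive max pooling in frameworks such as PyTorch's `AdaptiveMaxPool2d`) are assumptions for illustration and may differ from the exact configuration used in the paper's experiments.

```python
import numpy as np


def _ceil_div(a, b):
    """Integer ceiling of a / b for non-negative a and positive b."""
    return -(-a // b)


def spatial_pyramid_pool(fmap, levels=(4, 2, 1)):
    """Max-pool a (C, H, W) feature map into a vector of length C * sum(n*n).

    At each pyramid level n the map is divided into an n x n grid of bins.
    Bin edges use floor for the start and ceil for the end, so every bin is
    non-empty and the bins cover the whole map for any H, W >= 1.
    """
    channels, height, width = fmap.shape
    pooled = []
    for n in levels:
        for i in range(n):
            y0, y1 = (i * height) // n, _ceil_div((i + 1) * height, n)
            for j in range(n):
                x0, x1 = (j * width) // n, _ceil_div((j + 1) * width, n)
                # One value per channel: the maximum activation inside the bin.
                pooled.append(fmap[:, y0:y1, x0:x1].max(axis=(1, 2)))
    return np.concatenate(pooled)


rng = np.random.default_rng(0)
for h, w in [(13, 13), (10, 17), (6, 9)]:
    vec = spatial_pyramid_pool(rng.standard_normal((256, h, w)))
    print((h, w), vec.shape)  # always (5376,) = 256 channels x 21 bins
```

Whatever the spatial size of the last convolutional map, the output length is fixed by the channel count and the number of bins, so a fully-connected layer sized for that length can follow it.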

We believe that aggregation at a deeper stage is more physiologically sound and more compatible with the hierarchical information processing in our brains. When an object comes into our field of view, it is more reasonable that our brains consider it as a whole instead of cropping it into several “views” at the beginning. Similarly, it is unlikely that our brains distort all object candidates into fixed-size regions for detecting/locating them. It is more likely that our brains handle arbitrarily-shaped objects at some deeper layers, by aggregating the already deeply processed information from the previous layers.

Spatial pyramid pooling [14], [15] (popularly known as spatial pyramid matching or SPM [15]), as an extension of the Bag-of-Words (BoW) model [16], is one of the most successful methods in computer vision. It partitions the image into divisions from finer to coarser levels, and aggregates local features in them. SPP has long been a key component in the leading and competition-winning systems for classification (e.g., [17], [18], [19]) and detection (e.g., [20]) before the recent prevalence of CNNs. Nevertheless, SPP has not been considered in the context of CNNs.
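For contrast with the CNN setting, here is a sketch of the classic spatial-pyramid aggregation over hand-crafted local features. Each feature is assumed to be already quantized to a visual-word index, and every grid cell at every level gets its own word histogram. The function name, the levels (1, 2, 4), and the plain unweighted concatenation are simplifying assumptions; the SPM formulation in [15] also weights the levels, which is omitted here.

```python
import numpy as np


def spm_histogram(points, words, width, height, vocab_size, levels=(1, 2, 4)):
    """Concatenate bag-of-words histograms over a spatial pyramid.

    points: (N, 2) array of (x, y) feature positions, 0 <= x <= width, 0 <= y <= height.
    words:  (N,) array of visual-word indices in [0, vocab_size).
    Returns a vector of length vocab_size * sum(n*n for n in levels).
    """
    points = np.asarray(points, dtype=float)
    words = np.asarray(words, dtype=int)
    hists = []
    for n in levels:
        # Grid cell of each feature; points on the right/bottom border go to the last cell.
        cx = np.minimum((points[:, 0] * n / width).astype(int), n - 1)
        cy = np.minimum((points[:, 1] * n / height).astype(int), n - 1)
        cell = cy * n + cx
        hist = np.zeros((n * n, vocab_size))
        np.add.at(hist, (cell, words), 1)  # count each (cell, word) occurrence
        hists.append(hist.ravel())
    return np.concatenate(hists)


pts = [(0, 0), (99, 99), (50, 10)]
print(spm_histogram(pts, [0, 1, 1], width=100, height=100, vocab_size=2, levels=(1, 2)))
```

The SPP layer applies the same partition-and-aggregate idea, but to convolutional feature maps and with max pooling in place of histogram counting.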

We note that SPP has several remarkable properties for deep CNNs: 1) SPP is able to generate a fixed-length output regardless of the input size, while the sliding window pooling used in the previous deep networks [3] cannot; 2) SPP uses multi-level spatial bins, while the sliding window pooling uses only a single window size. Multi-level pooling has been shown to be robust to object deformations [15]; 3) SPP can pool features extracted at variable scales thanks to the flexibility of input scales. Through experiments we show that all these factors elevate the recognition accuracy of deep networks.
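Properties 1) and 2) can be seen side by side in a small sketch: a conventional single-size sliding-window max pool (a 3×3 window with stride 2 is assumed here, a common choice but not one taken from the text) produces an output grid that tracks the input size, whereas the number of SPP outputs depends only on the channel count and the pyramid levels. The helper names and the (4, 2, 1) levels are hypothetical.

```python
import numpy as np


def sliding_max_pool(fmap, size=3, stride=2):
    """Single-window-size max pooling over a (C, H, W) map (no padding)."""
    c, h, w = fmap.shape
    out_h, out_w = (h - size) // stride + 1, (w - size) // stride + 1
    out = np.empty((c, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            window = fmap[:, i * stride:i * stride + size, j * stride:j * stride + size]
            out[:, i, j] = window.max(axis=(1, 2))
    return out


def spp_output_length(channels, levels=(4, 2, 1)):
    """Number of values an SPP layer emits: one per channel per bin, at every level."""
    return channels * sum(n * n for n in levels)


rng = np.random.default_rng(0)
for h, w in [(13, 13), (10, 17)]:
    fmap = rng.standard_normal((256, h, w))
    print((h, w), sliding_max_pool(fmap).size, spp_output_length(256))
# (13, 13) 9216 5376
# (10, 17) 8192 5376
```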

SPP-net not only makes it possible to generate representations from arbitrarily sized images/windows for testing, but also allows us to feed images with varying sizes or scales during training. Training with variable-size images increases scale-invariance and reduces over-fitting. We develop a simple multi-size training method. For a single network to accept variable input sizes, we approximate it by multiple networks that share all parameters, while each of these networks is trained using a fixed input size. In each epoch we train the network with a given input size, and switch to another input size for the next epoch. Experiments show that this multi-size training converges just as well as the traditional single-size training, and leads to better testing accuracy.
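The epoch-wise alternation can be written as a small training driver. In this Python sketch, `train_one_epoch` is a hypothetical callback standing in for one pass of ordinary fixed-size training, and the sizes 224 and 180 are illustrative assumptions. The point it shows is that every fixed-size "network" updates the same parameter object, which is possible only because the SPP layer makes the fully-connected input length independent of the input size.

```python
from itertools import cycle


def multi_size_schedule(num_epochs, sizes=(224, 180)):
    """Input size used in each epoch, switching to the next size every epoch."""
    size_iter = cycle(sizes)
    return [next(size_iter) for _ in range(num_epochs)]


def train_multi_size(params, num_epochs, train_one_epoch, sizes=(224, 180)):
    """Train one shared parameter set with a different fixed input size per epoch.

    Within an epoch the network behaves like a conventional fixed-size network;
    across epochs, all sizes read and update the same `params`.
    """
    for size in multi_size_schedule(num_epochs, sizes):
        train_one_epoch(params, size)
    return params


# Toy usage: a stand-in epoch function that only records which sizes were used.
shared = {"sizes_seen": []}
train_multi_size(shared, 4, lambda p, size: p["sizes_seen"].append(size))
print(shared["sizes_seen"])  # [224, 180, 224, 180]
```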

The advantages of SPP are orthogonal to the specific CNN designs. In a series of controlled experiments on the ImageNet 2012 dataset, we demonstrate that SPP improves four different CNN architectures in existing publications [3], [4], [5] (or their modifications), over the no-SPP counterparts. These architectures have various filter numbers/sizes, strides, depths, or other designs. It is thus reasonable for us to conjecture that SPP should improve more sophisticated (deeper and larger) convolutional architectures. SPP-net also shows state-of-the-art classification results on Caltech101 [21] and Pascal VOC 2007 [22] using only a single full-image representation and no fine-tuning.

SPP-net also shows great strength in object detection. In the leading object detection method R-CNN [7], the features from candidate windows are extracted via deep convolutional networks. This method shows remarkable detection accuracy on both the VOC and
