Synthetically Trained Neural Networks for Learning Human-Readable Plans from Real-World Demonstrations.pdf

50墨值下载

Synthetically Trained Neural Networks for Learning

Human-Readable Plans from Real-World Demonstrations

Jonathan Tremblay Thang To Artem Molchanov

†

Stephen Tyree Jan Kautz Stan Birchfield

Abstract— We present a system to infer and execute a human-

readable program from a real-world demonstration. The system

consists of a series of neural networks to perform percep-

tion, program generation, and program execution. Leveraging

convolutional pose machines, the perception network reliably

detects the bounding cuboids of objects in real images even

when severely occluded, after training only on synthetic images

using domain randomization. To increase the applicability of the

perception network to new scenarios, the network is formulated

to predict in image space rather than in world space. Additional

networks detect relationships between objects, generate plans,

and determine actions to reproduce a real-world demonstration.

The networks are trained entirely in simulation, and the system

is tested in the real world on the pick-and-place problem of

stacking colored cubes using a Baxter robot.

I. INTRODUCTION

In order for robots to perform useful tasks in real-world

settings, it must be easy to communicate the task to the robot;

this includes both the desired end result and any hints as to

the best means to achieve that result. In addition, the robot

must be able to perform the task robustly with respect to

changes in the state of the world, uncertainty in sensory

input, and imprecision in control output.

Teaching a robot by demonstration is a powerful approach

to solve these problems. With demonstrations, a user can

communicate a task to the robot and provide clues as to how

to best perform the task. Ideally, only a single demonstration

should be needed to show the robot how to do a new task.

Unfortunately, a fundamental limitation of demonstrations

is that they are concrete. If someone pours water into a glass,

the intent of the demonstration remains ambiguous. Should

the robot also pour water? If so, then into which glass?

Should it also pour water into an adjacent mug? When should

it do so? How much water should it pour? What should

it do if there is no water? And so forth. Concrete actions

themselves are insufficient to answer such questions. Rather,

abstract concepts must be inferred from the actions.

We believe that language, with its ability to capture ab-

stract universal concepts, is a natural solution to this problem

of ambiguity. By inferring a human-readable description of

the task from the demonstration, such a system allows the

user to debug the output and verify whether the demon-

stration was interpreted correctly by the system. A human-

readable description of the task can then be edited by the user

to fix any errors in the interpretation. Such a description also

The authors are affiliated with NVIDIA. Email: {jtremblay,

thangt, styree, jkautz, sbirchfield}@nvidia.com

†

Work was performed as an intern with NVIDIA. Also affiliated with the

University of Southern California. Email: molchano@usc.edu

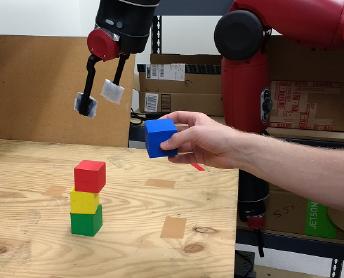

Fig. 1. In this work, a human stacks colored cubes either vertically or in a

pyramid. A sequence of neural networks learns a human-readable program

to be executed by a robot to recreate the demonstration.

provides qualitative insight into the expected ability of the

system to leverage previous experience on novel tasks and

scenarios.

In this paper we take a step in this direction by proposing a

system that learns a human-readable program from a single

demonstration in the real world. The learned program can

then be executed in the environment with different initial

conditions. The program is learned by a series of neural

networks that are trained entirely in simulation, thus yielding

inexpensive training data. To make the problem tractable, we

focus in this work on stacking colored cubes either vertically

or in pyramids, as illustrated in Fig. 1. Our system contains

the following contributions:

• The system learns from a single demonstration in the

real world. We believe that real-world demonstrations

are more natural, being applicable to a wider set of sce-

narios due to the reduced system complexity required,

as compared to AR/VR systems (e.g., [7]).

• The system generates human-readable plans. As demon-

strated in [8], this enables the resulting plan to be

verified by a human user before execution.

• The paper introduces image-centric domain randomiza-

tion for training the perception network. In contrast with

a world-centric approach (e.g., [27]), an image-centric

network makes fewer assumptions about the camera’s

position within the environment or the presence and

visibility of fixed objects (such as a table), and is

therefore portable to new situations without retraining.

1

1

Recalibrating to determine a camera’s exterior orientation is arguably

easier than creating a virtual environment to match a new actual environ-

ment, generating new training images, and retraining a network.

II. PREVIOUS WORK

Our work draws inspiration from recent work on one-

shot imitation learning. Duan et al. [7], for example, use

simulation to train a network by watching a user demonstra-

tion and replicating it with a robot. The method leverages a

special neural network architecture that extensively uses soft-

attention in combination with memory. During an extensive

training phase in a simulated environment, the network learns

to correctly repeat a demonstrated block stacking task. The

complexity of the architecture, in particular the attention and

memory mechanisms, support robustness when repeating the

demonstration, e.g., allowing the robot to repeat a failed step.

However, the intermediate representations are not designed

for interpretability. As argued in [11], the ability to generate

human interpretable representations is crucial for modularity

and stronger generalization, and thus it is a main focus of

our work.

Another closely related work by Denil et al. [5] learns

programmable agents capable of executing readable pro-

grams. They consider reinforcement learning in the context

of a simulated manipulator reaching task. This work draws

parallels to the third component of our architecture (the

execution network), which translates a human-readable plan

into a closed-loop sequence of robotic actions. Further, our

approach of decomposing the system is similar in spirit to

the modular networks of [6].

These prior approaches operate on a low-dimensional

representation of the objects in the environment and train

in simulation. Like Duan et al. [7], we acquire a label-free

low-dimensional representation of the world by leveraging

simulation-to-reality transfer. We use the simple but effective

principle of domain randomization [27] for transferring a

representation learned entirely in simulation. This approach

has been successfully applied in several robotic learning

applications, including the aforementioned work in demon-

stration learning [7], as well as grasp prediction [27] and

visuomotor control [16]. Building on prior work, we acquire

a more detailed description of the objects in a scene using

object part inference inspired by Cao et al. [3], allowing the

extraction of interpretable intermediate representations and

inference of additional object parameters, such as orientation.

Further, we make predictions in image space, so that robust

transfer to the real world requires only determining the

camera’s extrinsic parameters, rather than needing to develop

a simulated world to match the real environment for training.

Related work in imitation learning trains agents via

demonstrations. These methods typically focus on learning a

single complex task, e.g., steering a car based on human

demonstrations, instead of learning how to perform one-

shot replication in a multi-task scenario, e.g., repeating a

specific demonstrated block stacking sequence. Behavior

cloning [22], [23], [24] treats learning from demonstration as

a supervised learning problem, teaching an agent to exactly

replicate the behavior of an expert by learning a function

from the observed state to the next expert action. This ap-

proach may suffer as errors accumulate in the agent’s actions

leading eventually to states not encountered by the expert.

Inverse reinforcement learning [15], [19], [1] mitigates this

problem by estimating a reward function to explain the

behavior of the expert and training a policy with the learned

reward mapping. It typically requires running an expensive

reinforcement learning training step in the inner loop of

optimization or, alternatively, applying generative adversarial

networks [14], [13] or guided cost learning [10].

The conjunction of language and vision for environment

understanding has a long history. Early work by Wino-

grad [30] explored the use of language for a human to guide

and interpret interactions between a computerized agent and

a simulated 3D environment. Models can be learned to per-

form automatic image captioning [28], video captioning [25],

visual question answering [12], and understanding of and

reasoning about visual relationships [18], [21], [17]—all

interacting with a visual scene in natural language.

Recent work has studied the grounding of natural language

instructions in the context of robotics [20]. Natural language

utterances and the robot’s visual observations are grounded

in a symbolic space and the associated grounding graph,

allowing the robot to infer the specific actions required to

follow a subsequent verbal command.

Neural task programming (NTP) [31], a concurrent work

to ours, achieves one-shot imitation learning with an on-

line hierarchical task decomposition. An RNN-based NTP

model processes a demonstration to predict the next sub-

task (e.g., “pick-and-place”) and a relevant sub-sequence of

the demonstration, which are recursively input to the NTP

model. The base of the hierarchy is made up of primitive

sub-tasks (e.g., “grip”, “move”, or “release”), and recursive

sub-task prediction is made with the current observed state as

input, allowing closed loop control. Like our work, the NTP

model provides a human-readable program, but unlike our

approach the NTP program is produced during execution,

not before.

III. METHOD

An overview of our system is shown in Fig. 2. A camera

acquires a live video feed of a scene, and the positions and

relationships of objects in the scene are inferred in real time

by a pair of neural networks. The resulting percepts are fed

to another network that generates a plan to explain how to

recreate those percepts. Finally, an execution network reads

the plan and generates actions for the robot, taking into

account the current state of the world in order to ensure

robustness to external disturbances.

A. Perception networks

Given a single image, our perception networks infer the lo-

cations of objects in the scene and their relationships. These

networks perform object detection with pose estimation, as

well as relationship inference.

1) Image-centric domain randomization: Each object of

interest in this work is modeled by its bounding cuboid

consisting of up to seven visible vertices and one hidden

vertex. Rather than directly mapping from images to object

of 9

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论