MegaDepth:从互联网照片学习单视图深度预测.pdf

50墨值下载

MegaDepth: Learning Single-View Depth Prediction from Internet Photos

Zhengqi Li Noah Snavely

Department of Computer Science & Cornell Tech, Cornell University

Abstract

Single-view depth prediction is a fundamental problem

in computer vision. Recently, deep learning methods have

led to significant progress, but such methods are limited by

the available training data. Current datasets based on 3D

sensors have key limitations, including indoor-only images

(NYU), small numbers of training examples (Make3D), and

sparse sampling (KITTI). We propose to use multi-view In-

ternet photo collections, a virtually unlimited data source,

to generate training data via modern structure-from-motion

and multi-view stereo (MVS) methods, and present a large

depth dataset called MegaDepth based on this idea. Data

derived from MVS comes with its own challenges, includ-

ing noise and unreconstructable objects. We address these

challenges with new data cleaning methods, as well as auto-

matically augmenting our data with ordinal depth relations

generated using semantic segmentation. We validate the use

of large amounts of Internet data by showing that models

trained on MegaDepth exhibit strong generalization—not

only to novel scenes, but also to other diverse datasets in-

cluding Make3D, KITTI, and DIW, even when no images

from those datasets are seen during training.

1

1. Introduction

Predicting 3D shape from a single image is an important

capability of visual reasoning, with applications in robotics,

graphics, and other vision tasks such as intrinsic images.

While single-view depth estimation is a challenging, un-

derconstrained problem, deep learning methods have re-

cently driven significant progress. Such methods thrive when

trained with large amounts of data. Unfortunately, fully gen-

eral training data in the form of (RGB image, depth map)

pairs is difficult to collect. Commodity RGB-D sensors

such as Kinect have been widely used for this purpose [

34

],

but are limited to indoor use. Laser scanners have enabled

important datasets such as Make3D [

29

] and KITTI [

25

],

but such devices are cumbersome to operate (in the case

of industrial scanners), or produce sparse depth maps (in

1

Project website:

http://www.cs.cornell.edu/projects/

megadepth/

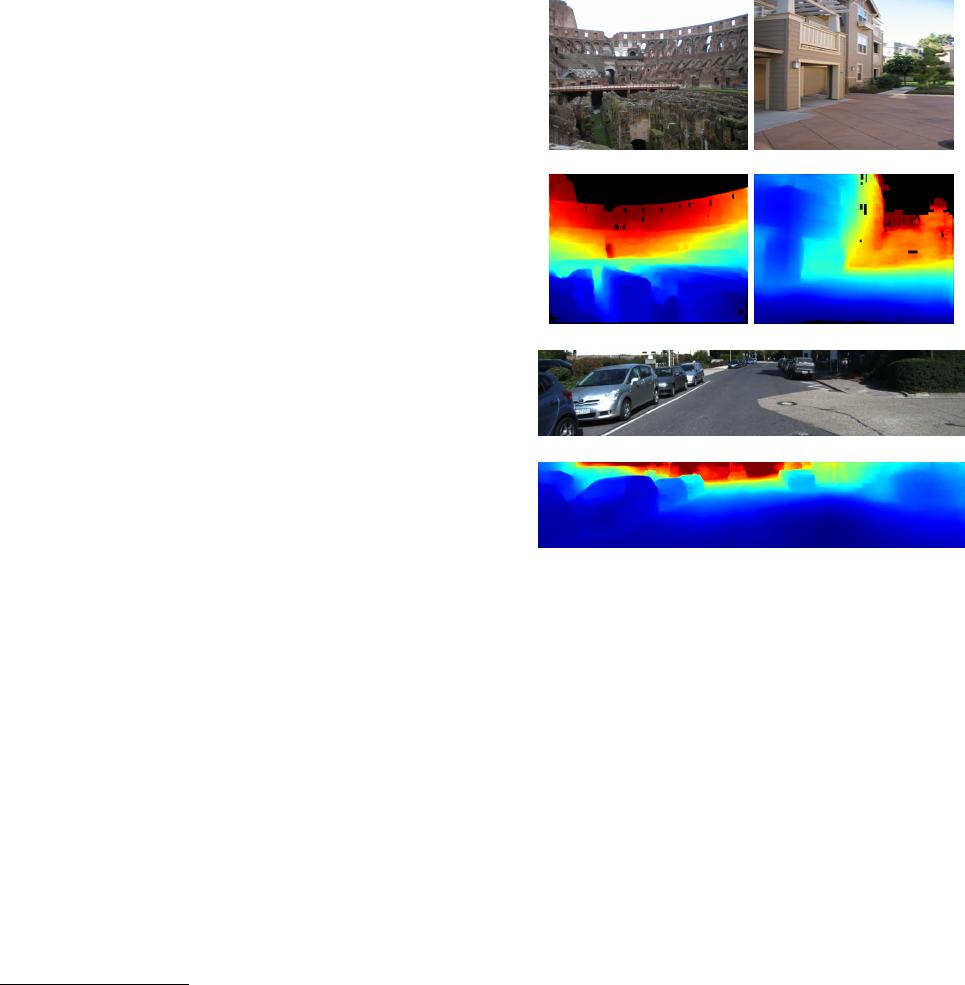

(a) Internet photo of Colosseum (b) Image from Make3D

(c) Our single-view depth prediction (d) Our single-view depth prediction

(e) Image from KITTI

(f) Our single-view depth prediction

Figure 1: We use large Internet image collections, combined

with 3D reconstruction and semantic labeling methods, to

generate large amounts of training data for single-view depth

prediction.

(a), (b), (e):

Example input RGB images.

(c),

(d), (f):

Depth maps predicted by our MegaDepth-trained

CNN (blue

=

near, red

=

far). For these results, the network

was not trained on Make3D and KITTI data.

the case of LIDAR). Moreover, both Make3D and KITTI

are collected in specific scenarios (a university campus, and

atop a car, respectively). Training data can also be generated

through crowdsourcing, but this approach has so far been

limited to gathering sparse ordinal relationships or surface

normals [12, 4, 5].

In this paper, we explore the use of a nearly unlimited

source of data for this problem: images from the Internet

from overlapping viewpoints, from which structure-from-

arXiv:1804.00607v4 [cs.CV] 28 Nov 2018

motion (SfM) and multi-view stereo (MVS) methods can

automatically produce dense depth. Such images have been

widely used in research on large-scale 3D reconstruction [

35

,

14

,

2

,

8

]. We propose to use the outputs of these systems

as the inputs to machine learning methods for single-view

depth prediction. By using large amounts of diverse training

data from photos taken around the world, we seek to learn

to predict depth with high accuracy and generalizability.

Based on this idea, we introduce MegaDepth (MD), a large-

scale depth dataset generated from Internet photo collections,

which we make fully available to the community.

To our knowledge, ours is the first use of Internet

SfM+MVS data for single-view depth prediction. Our main

contribution is the MD dataset itself. In addition, in creating

MD, we found that care must be taken in preparing a dataset

from noisy MVS data, and so we also propose new methods

for processing raw MVS output, and a corresponding new

loss function for training models with this data. Notably,

because MVS tends to not reconstruct dynamic objects (peo-

ple, cars, etc), we augment our dataset with ordinal depth

relationships automatically derived from semantic segmen-

tation, and train with a joint loss that includes an ordinal

term. In our experiments, we show that by training on MD,

we can learn a model that works well not only on images

of new scenes, but that also generalizes remarkably well to

completely different datasets, including Make3D, KITTI,

and DIW—achieving much better generalization than prior

datasets. Figure 1 shows example results spanning different

test sets from a network trained solely on our MD dataset.

2. Related work

Single-view depth prediction.

A variety of methods have

been proposed for single-view depth prediction, most re-

cently by utilizing machine learning [

15

,

28

]. A standard

approach is to collect RGB images with ground truth depth,

and then train a model (e.g., a CNN) to predict depth from

RGB [

7

,

22

,

23

,

27

,

3

,

19

]. Most such methods are trained on

a few standard datasets, such as NYU [

33

,

34

], Make3D [

29

],

and KITTI [

11

], which are captured using RGB-D sensors

(such as Kinect) or laser scanning. Such scanning methods

have important limitations, as discussed in the introduction.

Recently, Novotny et al. [

26

] trained a network on 3D mod-

els derived from SfM+MVS on videos to learn 3D shapes of

single objects. However, their method is limited to images

of objects, rather than scenes.

Multiple views of a scene can also be used as an im-

plicit source of training data for single-view depth pre-

diction, by utilizing view synthesis as a supervisory sig-

nal [

38

,

10

,

13

,

43

]. However, view synthesis is only a proxy

for depth, and may not always yield high-quality learned

depth. Ummenhofer et al. [

36

] trained from overlapping

image pairs taken with a single camera, and learned to pre-

dict image matches, camera poses, and depth. However, it

requires two input images at test time.

Ordinal depth prediction.

Another way to collect depth

data for training is to ask people to manually annotate depth

in images. While labeling absolute depth is challenging,

people are good at specifying relative (ordinal) depth rela-

tionships (e.g., closer-than, further-than) [

12

]. Zoran et al.

[

44

] used such relative depth judgments to predict ordinal

relationships between points using CNNs. Chen et al. lever-

aged crowdsourcing of ordinal depth labels to create a large

dataset called “Depth in the Wild” [

4

]. While useful for pre-

dicting depth ordering (and so we incorporate ordinal data

automatically generated from our imagery), the Euclidean

accuracy of depth learned solely from ordinal data is limited.

Depth estimation from Internet photos.

Estimating ge-

ometry from Internet photo collections has been an active

research area for a decade, with advances in both structure

from motion [

35

,

2

,

37

,

30

] and multi-view stereo [

14

,

9

,

32

].

These techniques generally operate on 10s to 1000s of im-

ages. Using such methods, past work has used retrieval and

SfM to build a 3D model seeded from a single image [

31

],

or registered a photo to an existing 3D model to transfer

depth [

40

]. However, this work requires either having a de-

tailed 3D model of each location in advance, or building one

at run-time. Instead, we use SfM+MVS to train a network

that generalizes to novel locations and scenarios.

3. The MegaDepth Dataset

In this section, we describe how we construct our dataset.

We first download Internet photos from Flickr for a set

of well-photographed landmarks from the Landmarks10K

dataset [

21

]. We then reconstruct each landmark in 3D using

state-of-the-art SfM and MVS methods. This yields an SfM

model as well as a dense depth map for each reconstructed

image. However, these depth maps have significant noise

and outliers, and training a deep network on this raw depth

data will not yield a useful predictor. Therefore, we propose

a series of processing steps that prepare these depth maps for

use in learning, and additionally use semantic segmentation

to automatically generate ordinal depth data.

3.1. Photo calibration and reconstruction

We build a 3D model from each photo collection using

COLMAP, a state-of-art SfM system [

30

] (for reconstructing

camera poses and sparse point clouds) and MVS system [

32

]

(for generating dense depth maps). We use COLMAP because

we found that it produces high-quality 3D models via its

careful incremental SfM procedure, but other such systems

could be used. COLMAP produces a depth map

D

for every

reconstructed photo

I

(where some pixels of

D

can be empty

if COLMAP was unable to recover a depth), as well as other

outputs, such as camera parameters and sparse SfM points

plus camera visibility.

of 11

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论