Pix3D:单图像3D形状建模的数据集和方法.pdf

50墨值下载

Pix3D: Dataset and Methods for Single-Image 3D Shape Modeling

Xingyuan Sun

∗1,2

Jiajun Wu

∗1

Xiuming Zhang

1

Zhoutong Zhang

1

Chengkai Zhang

1

Tianfan Xue

3

Joshua B. Tenenbaum

1

William T. Freeman

1,3

1

Massachusetts Institute of Technology

2

Shanghai Jiao Tong University

3

Google Research

Mismatched 3D shapes

Imprecise pose annotationsWell-aligned images and shapes

Our Dataset: Pix3D Existing Datasets

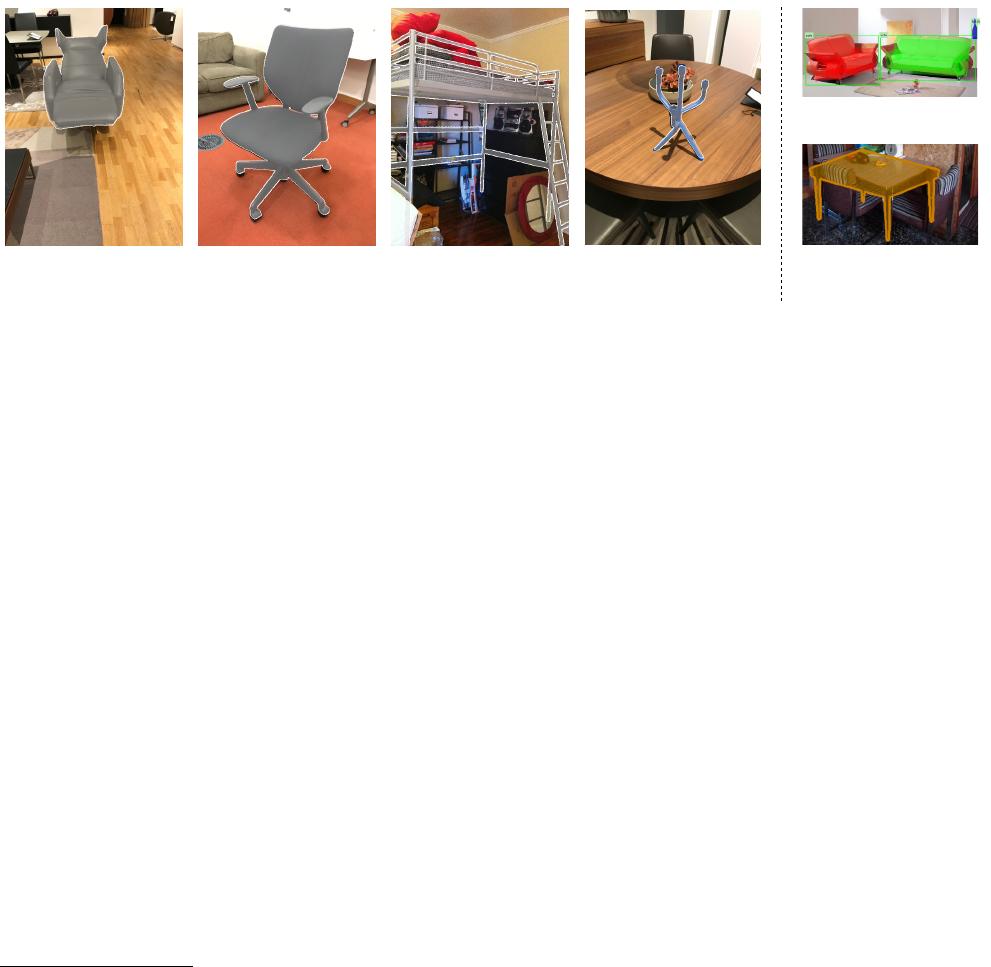

Figure 1: We present Pix3D, a new large-scale dataset of diverse image-shape pairs. Each 3D shape in Pix3D is associated with a rich and

diverse set of images, each with an accurate 3D pose annotation to ensure precise 2D-3D alignment. In comparison, existing datasets have

limitations: 3D models may not match the objects in images; pose annotations may be imprecise; or the dataset may be relatively small.

Abstract

We study 3D shape modeling from a single image and make

contributions to it in three aspects. First, we present Pix3D,

a large-scale benchmark of diverse image-shape pairs with

pixel-level 2D-3D alignment. Pix3D has wide applications in

shape-related tasks including reconstruction, retrieval, view-

point estimation, etc. Building such a large-scale dataset,

however, is highly challenging; existing datasets either con-

tain only synthetic data, or lack precise alignment between

2D images and 3D shapes, or only have a small number of

images. Second, we calibrate the evaluation criteria for 3D

shape reconstruction through behavioral studies, and use

them to objectively and systematically benchmark cutting-

edge reconstruction algorithms on Pix3D. Third, we design

a novel model that simultaneously performs 3D reconstruc-

tion and pose estimation; our multi-task learning approach

achieves state-of-the-art performance on both tasks.

1. Introduction

The computer vision community has put major efforts in

building datasets. In 3D vision, there are rich 3D CAD model

∗ indicates equal contributions.

repositories like ShapeNet [

7

] and the Princeton Shape Bench-

mark [

50

], large-scale datasets associating images and shapes

like Pascal 3D+ [

65

] and ObjectNet3D [

64

], and benchmarks

with fine-grained pose annotations for shapes in images like

IKEA [39]. Why do we need one more?

Looking into Figure 1, we realize existing datasets have

limitations for the task of modeling a 3D object from a single

image. ShapeNet is a large dataset for 3D models, but does

not come with real images; Pascal 3D+ and ObjectNet3D have

real images, but the image-shape alignment is rough because

the 3D models do not match the objects in images; IKEA has

high-quality image-3D alignment, but it only contains 90 3D

models and 759 images.

We desire a dataset that has all three merits—a large-scale

dataset of real images and ground-truth shapes with precise

2D-3D alignment. Our dataset, named Pix3D, has 395 3D

shapes of nine object categories. Each shape associates with

a set of real images, capturing the exact object in diverse

environments. Further, the 10,069 image-shape pairs have

precise 3D annotations, giving pixel-level alignment between

shapes and their silhouettes in the images.

Building such a dataset, however, is highly challenging.

For each object, it is difficult to simultaneously collect its

high-quality geometry and in-the-wild images. We can crawl

arXiv:1804.04610v1 [cs.CV] 12 Apr 2018

many images of real-world objects, but we do not have access

to their shapes; 3D CAD repositories offer object geometry,

but do not come with real images. Further, for each image-

shape pair, we need a precise pose annotation that aligns the

shape with its projection in the image.

We overcome these challenges by constructing Pix3D in

three steps. First, we collect a large number of image-shape

pairs by crawling the web and performing 3D scans ourselves.

Second, we collect 2D keypoint annotations of objects in the

images on Amazon Mechanical Turk, with which we optimize

for 3D poses that align shapes with image silhouettes. Third,

we filter out image-shape pairs with a poor alignment and, at

the same time, collect attributes (i.e., truncation, occlusion)

for each instance, again by crowdsourcing.

In addition to high-quality data, we need a proper metric

to objectively evaluate the reconstruction results. A well-

designed metric should reflect the visual appealingness of the

reconstructions. In this paper, we calibrate commonly used

metrics, including intersection over union, Chamfer distance,

and earth mover’s distance, on how well they capture human

perception of shape similarity. Based on this, we benchmark

state-of-the-art algorithms for 3D object modeling on Pix3D

to demonstrate their strengths and weaknesses.

With its high-quality alignment, Pix3D is also suitable for

object pose estimation and shape retrieval. To demonstrate

that, we propose a novel model that performs shape and pose

estimation simultaneously. Given a single RGB image, our

model first predicts its 2.5D sketches, and then regresses the

3D shape and the camera parameters from the estimated 2.5D

sketches. Experiments show that multi-task learning helps to

boost the model’s performance.

Our contributions are three-fold. First, we build a new

dataset for single-image 3D object modeling; Pix3D has a

diverse collection of image-shape pairs with precise 2D-3D

alignment. Second, we calibrate metrics for 3D shape recon-

struction based on their correlations with human perception,

and benchmark state-of-the-art algorithms on 3D reconstruc-

tion, pose estimation, and shape retrieval. Third, we present a

novel model that simultaneously estimates object shape and

pose, achieving state-of-the-art performance on both tasks.

2. Related Work

Datasets of 3D shapes and scenes.

For decades, re-

searchers have been building datasets of 3D objects, either

as a repository of 3D CAD models [

4

,

5

,

50

] or as images

of 3D shapes with pose annotations [

35

,

48

]. Both direc-

tions have witnessed the rapid development of web-scale

databases: ShapeNet [

7

] was proposed as a large repository of

more than 50K models covering 55 categories, and Xiang et

al. built Pascal 3D+ [

65

] and ObjectNet3D [

64

], two large-

scale datasets with alignment between 2D images and the 3D

shape inside. While these datasets have helped to advance the

field of 3D shape modeling, they have their respective limita-

tions: datasets like ShapeNet or Elastic2D3D [

33

] do not have

real images, and recent 3D reconstruction challenges using

ShapeNet have to be exclusively on synthetic images [

68

];

Pascal 3D+ and ObjectNet3D have only rough alignment be-

tween images and shapes, because objects in the images are

matched to a pre-defined set of CAD models, not their actual

shapes. This has limited their usage as a benchmark for 3D

shape reconstruction [60].

With depth sensors like Kinect [

24

,

27

], the community

has built various RGB-D or depth-only datasets of objects

and scenes. We refer readers to the review article from Fir-

man [

14

] for a comprehensive list. Among those, many ob-

ject datasets are designed for benchmarking robot manipula-

tion [

6

,

23

,

34

,

52

]. These datasets often contain a relatively

small set of hand-held objects in front of clean backgrounds.

Tanks and Temples [

31

] is an exciting new benchmark with 14

scenes, designed for high-quality, large-scale, multi-view 3D

reconstruction. In comparison, our dataset, Pix3D, focuses on

reconstructing a 3D object from a single image, and contains

much more real-world objects and images.

Probably the dataset closest to Pix3D is the large collec-

tion of object scans from Choi et al. [

8

], which contains a

rich and diverse set of shapes, each with an RGB-D video.

Their dataset, however, is not ideal for single-image 3D shape

modeling for two reasons. First, the object of interest may

be truncated throughout the video; this is especially the case

for large objects like sofas. Second, their dataset does not

explore the various contexts that an object may appear in, as

each shape is only associated with a single scan. In Pix3D,

we address both problems by leveraging powerful web search

engines and crowdsourcing.

Another closely related benchmark is IKEA [

39

], which

provides accurate alignment between images of IKEA objects

and 3D CAD models. This dataset is therefore particularly

suitable for fine pose estimation. However, it contains only

759 images and 90 shapes, relatively small for shape model-

ing

∗

. In contrast, Pix3D contains 10,069 images (13.3x) and

395 shapes (4.4x) of greater variations.

Researchers have also explored constructing scene datasets

with 3D annotations. Notable attempts include LabelMe-

3D [

47

], NYU-D [

51

], SUN RGB-D [

54

], KITTI [

16

], and

modern large-scale RGB-D scene datasets [

10

,

41

,

55

]. These

datasets are either synthetic or contain only 3D surfaces of

real scenes. Pix3D, in contrast, offers accurate alignment

between 3D object shape and 2D images in the wild.

Single-image 3D reconstruction.

The problem of recov-

ering object shape from a single image is challenging, as it

requires both powerful recognition systems and prior shape

knowledge. Using deep convolutional networks, researchers

have made significant progress in recent years [

9

,

17

,

21

,

29

,

42

,

44

,

57

,

60

,

61

,

63

,

67

,

53

,

62

]. While most of these ap-

proaches represent objects in voxels, there have also been

∗

Only 90 of the 219 shapes in the IKEA dataset have associated images.

of 17

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论