COCO-Stuff:语境中的事物和材料类别.pdf

50墨值下载

COCO-Stuff: Thing and Stuff Classes in Context

Holger Caesar

1

Jasper Uijlings

2

Vittorio Ferrari

1 2

University of Edinburgh

1

Google AI Perception

2

Abstract

Semantic classes can be either things (objects with a

well-defined shape, e.g. car, person) or stuff (amorphous

background regions, e.g. grass, sky). While lots of classifi-

cation and detection works focus on thing classes, less at-

tention has been given to stuff classes. Nonetheless, stuff

classes are important as they allow to explain important

aspects of an image, including (1) scene type; (2) which

thing classes are likely to be present and their location

(through contextual reasoning); (3) physical attributes, ma-

terial types and geometric properties of the scene. To un-

derstand stuff and things in context we introduce COCO-

Stuff

1

, which augments all 164K images of the COCO 2017

dataset with pixel-wise annotations for 91 stuff classes. We

introduce an efficient stuff annotation protocol based on su-

perpixels, which leverages the original thing annotations.

We quantify the speed versus quality trade-off of our pro-

tocol and explore the relation between annotation time and

boundary complexity. Furthermore, we use COCO-Stuff to

analyze: (a) the importance of stuff and thing classes in

terms of their surface cover and how frequently they are

mentioned in image captions; (b) the spatial relations be-

tween stuff and things, highlighting the rich contextual re-

lations that make our dataset unique; (c) the performance of

a modern semantic segmentation method on stuff and thing

classes, and whether stuff is easier to segment than things.

1. Introduction

Most of the recent object detection efforts have focused

on recognizing and localizing thing classes, such as cat and

car. Such classes have a specific size [21, 27] and shape [21,

51, 55, 39, 17, 14], and identifiable parts (e.g. a car has

wheels). Indeed, the main recognition challenges [18, 43,

35] are all about things. In contrast, much less attention has

been given to stuff classes, such as grass and sky, which are

amorphous and have no distinct parts (e.g. a piece of grass

is still grass). In this paper we ask: Is this strong focus on

things justified?

To appreciate the importance of stuff, consider that it

makes up the majority of our visual surroundings. For ex-

1

http://calvin.inf.ed.ac.uk/datasets/coco-stuff

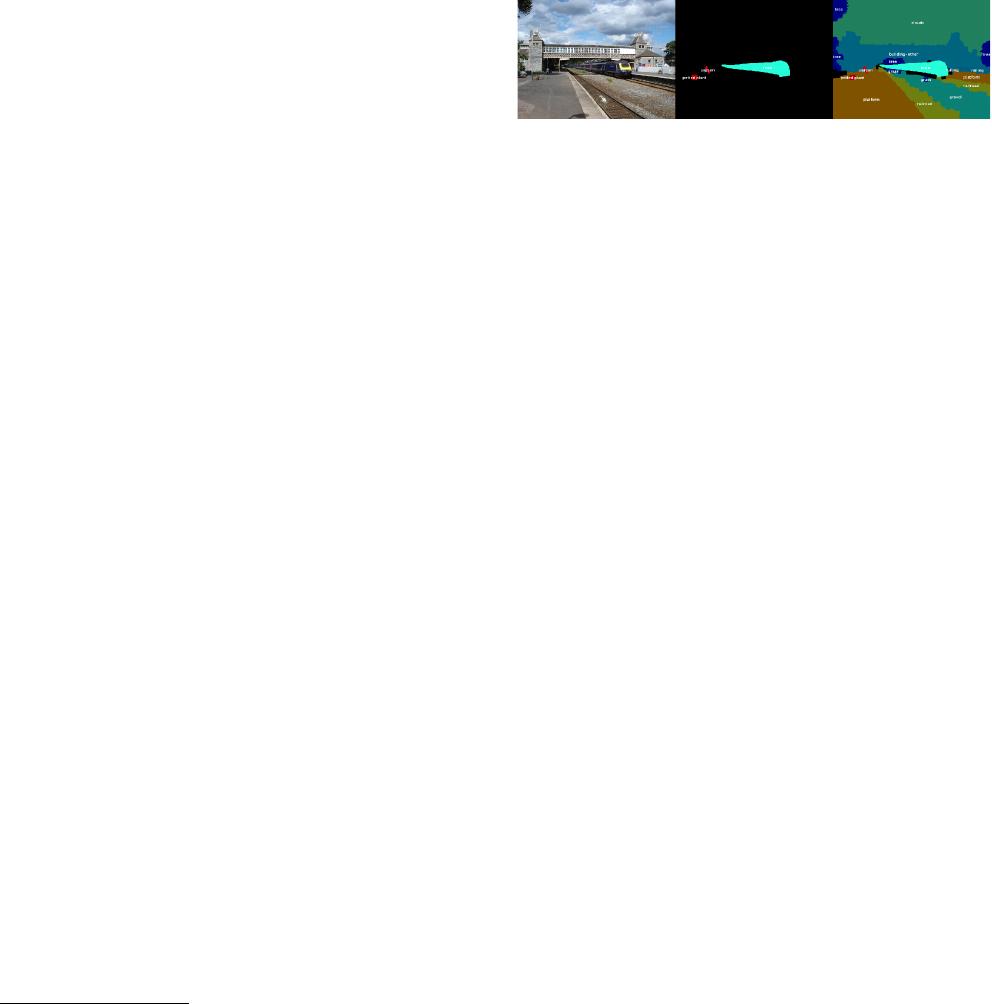

A large long train on a steel track.

A blue and yellow transit train leaving the station.

A train crossing beneath a city bridge with brick towers.

A train passing by an over bridge with a railway track (..).

A train is getting ready to leave the train station.

Figure 1: (left) An example image, (middle) its thing annotations

in COCO [35] and (right) enriched stuff and thing annotations

in COCO-Stuff. Just having the train, person, bench and potted

plant does not tell us much about the context of the scene, but with

stuff and thing labels we can infer the position and orientation of

the train, its stuff-thing interactions (train leaving the station) and

thing-thing interactions (person waiting for a different train). This

is also visible in the captions written by humans. Whereas the cap-

tions only mention one thing (train), they describe a multitude of

different stuff classes (track, station, bridge, tower, railway), stuff-

thing interactions (train leaving the station, train crossing beneath

a city bridge) and spatial arrangements (on, beneath).

ample, sky, walls and most ground types are stuff. Further-

more, stuff often determines the type of a scene, so it can

be very descriptive for an image (e.g. in a beach scene the

beach and water are the essential elements, more so than

people and volleyball). Stuff is also crucial for reasoning

about things: Stuff captures the 3D layout of the scene and

therefore heavily constrains the possible locations of things.

The contact points between stuff and things are critical for

determining depth ordering and relative positions of things,

which supports understanding the relations between them.

Finally, stuff provides context helping to recognize small or

uncommon things, e.g. a metal thing in the sky is likely an

aeroplane, while a metal thing in the water is likely a boat.

For all these reasons, stuff plays an important role in scene

understanding and we feel it deserves more attention.

In this paper we introduce the COCO-Stuff dataset,

which augments the popular COCO [35] with pixel-wise

annotations for a rich and diverse set of 91 stuff classes. The

original COCO dataset already provides outline-level anno-

tation for 80 thing classes. The additional stuff annotations

enable the study of stuff-thing interactions in the complex

COCO images. To illustrate the added value of our stuff an-

1

arXiv:1612.03716v4 [cs.CV] 28 Mar 2018

notations, Fig. 1 shows an example image, its annotations in

COCO and COCO-Stuff. The original COCO dataset offers

location annotations only for the train, potted plant, bench

and person, which are not sufficient to understand what the

scene is about. Indeed, the image captions written by hu-

mans (also provided by COCO) mention the train, its inter-

action with stuff (i.e. track), and the spatial arrangements

of the train and its surrounding stuff. All these elements are

necessary for scene understanding and show how COCO-

Stuff offers much more comprehensive annotations.

This paper makes the following contributions: (1) We in-

troduce COCO-Stuff, which augments the original COCO

dataset with stuff annotations. (2) We introduce an annota-

tion protocol for COCO-Stuff which leverages the existing

thing annotations and superpixels. We demonstrate both the

quality and efficiency of this protocol (Sec. 3). (3) Using

COCO-Stuff, we analyze the role of stuff from multiple an-

gles (Sec. 4): (a) the importance of stuff and thing classes

in terms of their surface cover and how frequently they are

mentioned in image captions; (b) the spatial relations be-

tween stuff and things, highlighting the rich contextual re-

lations that make COCO-Stuff unique; (c) we compare the

performance of a modern semantic segmentation method on

thing and stuff classes.

Hoping to further promote research on stuff and stuff-

thing contextual relations, we release COCO-Stuff and the

trained segmentation models online

1

.

2. Related Work

Defining things and stuff. The literature provides defi-

nitions for several aspects of stuff and things, including:

(1) Shape: Things have characteristic shapes (car, cat,

phone), whereas stuff is amorphous (sky, grass, water)

[21, 59, 28, 51, 55, 39, 17, 14]. (2) Size: Things occur

at characteristic sizes with little variance, whereas stuff re-

gions are highly variable in size [21, 2, 27]. (3) Parts:

Thing classes have identifiable parts [56, 19], whereas stuff

classes do not (e.g. a piece of grass is still grass, but a

wheel is not a car). (4) Instances: Stuff classes are typ-

ically not countable [2] and have no clearly defined in-

stances [14, 25, 53]. (5) Texture: Stuff classes are typically

highly textured [21, 27, 51, 14]. Finally, a few classes can

be interpreted as both stuff and things, depending on the im-

age conditions (e.g. a large number of people is sometimes

considered a crowd).

Several works have shown that different techniques are

required for the detection of stuff and things [51, 53, 31, 14].

Moreover, several works have shown that stuff is a useful

contextual cue to detect things and vice versa [41, 27, 31,

38, 45].

Stuff-only datasets. Early stuff datasets [6, 15, 34, 9] fo-

cused on texture classification and had simple images com-

pletely covered with a single textured patch. The more re-

Dataset Images Classes

Stuff

classes

Thing

classes

Year

MSRC 21 [46] 591 21 6 15 2006

KITTI [23] 203 14 9 4 2012

CamVid [7] 700 32 13 15 2008

Cityscapes [13] 25,000 30 13 14 2016

SIFT Flow [36] 2,688 33 15 18 2009

Barcelona [50] 15,150 170 31 139 2010

LM+SUN [52] 45,676 232 52 180 2010

PASCAL Context [38] 10,103 540 152 388 2014

NYUD [47] 1,449 894 190 695 2012

ADE20K [63] 25,210 2,693 1,242 1,451 2017

COCO-Stuff 163,957 172 91 80 2018

Table 1: An overview of datasets with pixel-level stuff and thing

annotations. COCO-Stuff is the largest existing dataset with dense

stuff and thing annotations. The number of stuff and thing classes

are estimated given the definitions in Sec. 2. Sec. 3.3 shows that

COCO-Stuff also has more usable classes than any other dataset.

cent Describable Textures Dataset [12] instead collects tex-

tured patches in the wild, described by human-centric at-

tributes. A related task is material recognition [44, 4, 5]. Al-

though the recent Materials in Context dataset [5] features

realistic and difficult images, they are mostly restricted to

indoor scenes with man-made materials. For the task of se-

mantic segmentation, the Stanford Background dataset [24]

offers pixel-level annotations for seven common stuff cat-

egories and a single foreground category (confounding all

thing classes). All stuff-only datasets above have no dis-

tinct thing classes, which make them inadequate to study

the relations between stuff an thing classes.

Thing-only datasets. These datasets have bounding box

or outline-level annotations of things, e.g. PASCAL

VOC [18], ILSVRC [43], COCO [35]. They have pushed

the state-of-the-art in Computer Vision, but the lack of stuff

annotations limits the ability to understand the whole scene.

Stuff and thing datasets. Some datasets have pixel-

wise stuff and thing annotations (Table 1). Early datasets

like MSRC 21 [46], NYUD [47], CamVid [7] and SIFT

Flow [36] annotate less than 50 classes on less than

5,000 images. More recent large-scale datasets like

Barcelona [50], LM+SUN [52], PASCAL Context [38],

Cityscapes [13] and ADE20K [63] annotate tens of thou-

sands of images with hundreds of classes. We compare

COCO-Stuff to these datasets in Sec. 3.3.

Annotating datasets. Dense pixel-wise annotation of im-

ages is extremely costly. Several works use interactive seg-

mentation methods [42, 57, 10] to speedup annotation; oth-

ers annotate superpixels [61, 22, 40]. Some works operate

in a weakly supervised scenario, deriving full image anno-

tations starting from manually annotated squiggles [3, 60]

or points [3, 30]. These approaches take less time, but typi-

cally lead to lower quality.

In this work we introduce a new annotation protocol to

obtain high quality pixel-wise stuff annotations at low hu-

man costs by using superpixels and by exploiting the ex-

isting detailed thing annotations of COCO [35] (Sec. 3.2).

of 11

50墨值下载

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文档的来源(墨天轮),文档链接,文档作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。

下载排行榜

评论