
Burst Denoising with Kernel Prediction Networks

Ben Mildenhall¹,²,∗  Jonathan T. Barron²  Jiawen Chen²  Dillon Sharlet²  Ren Ng¹  Robert Carroll²

¹UC Berkeley  ²Google Research

Abstract

We present a technique for jointly denoising bursts of images taken from a handheld camera. In particular, we propose a convolutional neural network architecture for predicting spatially varying kernels that can both align and denoise frames, a synthetic data generation approach based on a realistic noise formation model, and an optimization guided by an annealed loss function to avoid undesirable local minima. Our model matches or outperforms the state-of-the-art across a wide range of noise levels on both real and synthetic data.

1. Introduction

The task of image denoising is foundational to the study of imaging and computer vision. Traditionally, the problem of single-image denoising has been addressed as one of statistical inference using analytical priors [20, 22], but recent work has built on the success of deep learning by using convolutional neural networks that learn mappings from noisy images to noiseless images by training on millions of examples [29]. These networks appear to learn the likely appearance of “ground truth” noiseless images in addition to the statistical properties of the noise present in the input images.

∗ Work done while interning at Google.

Multiple-image denoising has also traditionally been approached through the lens of classical statistical inference, under the assumption that averaging multiple noisy and independent samples of a signal will result in a more accurate estimate of the true underlying signal. However, when denoising image bursts taken with handheld cameras, simple temporal averaging yields poor results because of scene and camera motion. Many techniques attempt to first align the burst or include some notion of translation-invariance within the denoising operator itself [8]. The idea of denoising by combining multiple aligned image patches is also key to many of the most successful single image techniques [3, 4], which rely on the self-similarity of a single image to allow some degree of denoising via averaging.
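The averaging argument above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the paper's experiment: the 1D step-edge scene, the Gaussian noise level `sigma`, the burst length, and the 3-pixel-per-frame translation are all hypothetical values chosen for illustration.

```python
import random

def average_frames(frames):
    """Per-pixel temporal mean of equally sized 1D frames."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]

def mean_squared_error(estimate, truth):
    """Mean squared difference between an estimate and the clean scene."""
    return sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth)

def shift_right(frame, offset):
    """Translate a 1D frame by `offset` pixels, repeating the left edge value."""
    return frame[:1] * offset + frame[:len(frame) - offset]

rng = random.Random(0)                 # fixed seed so the run is repeatable
scene = [0.2] * 100 + [0.8] * 100      # hypothetical 1D scene with one edge
sigma = 0.1                            # hypothetical noise standard deviation
num_frames = 8                         # hypothetical burst length

def add_noise(frame):
    """Add independent zero-mean Gaussian noise to every pixel."""
    return [value + rng.gauss(0.0, sigma) for value in frame]

# Static burst: every frame observes the same scene.
static_burst = [add_noise(scene) for _ in range(num_frames)]
# Moving burst: frame i is translated by 3 * i pixels before noise is added.
moving_burst = [add_noise(shift_right(scene, 3 * i)) for i in range(num_frames)]

mse_single = mean_squared_error(static_burst[0], scene)
mse_static = mean_squared_error(average_frames(static_burst), scene)
mse_moving = mean_squared_error(average_frames(moving_burst), scene)

print(f"single frame MSE:          {mse_single:.5f}")
print(f"averaged static burst MSE: {mse_static:.5f}")
print(f"averaged moving burst MSE: {mse_moving:.5f}")
```

For independent noise of variance σ², the mean of N frames has noise variance σ²/N, so the static burst should land near one eighth of the single-frame error here. The misaligned burst loses much of that gain because the edge is smeared across the frames being averaged.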

We propose a method for burst denoising with the signal-to-noise ratio benefits of multi-image denoising and the large capacity and generality of convolutional neural networks. Our model is capable of matching or outperforming the state-of-the-art at all noise levels on both synthetic and real data. Our contributions include:

1. A procedure for converting post-processed images taken from the internet into data with the characteristics of raw linear data captured by real cameras. This lets us train a model that generalizes to real images and circumvents the difficulties in acquiring ground truth data for our task from a camera.

2. A network architecture that outperforms the state-of-the-art on synthetic and real data by predicting a unique 3D denoising kernel to produce each pixel of the output image. This provides both a performance improvement over a network that synthesizes pixels directly, and a way to visually inspect how each burst image is being used.

3. A training procedure for our kernel prediction network that allows it to predict filter kernels that use information from multiple images even in the presence of small unknown misalignments.

4. A demonstration that a network that takes the noise level of the input imagery as input during training and testing generalizes to a much wider range of noise levels than a blind denoising network.
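To make the per-pixel 3D kernel of contribution 2 concrete, here is a minimal sketch of how such kernels could be applied to a burst. It is one plausible reading, not the authors' implementation: the function names, the `kernels[y][x][n][dy][dx]` layout, the clamped border handling, and the hand-built kernels (standing in for a network's predictions) are all assumptions made for illustration.

```python
def apply_per_pixel_kernels(burst, kernels, ksize):
    """Filter a burst with a separate ksize x ksize x N kernel at every pixel.

    burst[n][y][x] is frame n; kernels[y][x][n][dy][dx] is the weight that
    output pixel (y, x) gives to frame n at spatial offset (dy, dx).
    Border pixels are clamped (an assumption for this sketch).
    """
    num_frames = len(burst)
    height, width = len(burst[0]), len(burst[0][0])
    radius = ksize // 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for n in range(num_frames):
                for dy in range(ksize):
                    for dx in range(ksize):
                        yy = min(max(y + dy - radius, 0), height - 1)
                        xx = min(max(x + dx - radius, 0), width - 1)
                        acc += kernels[y][x][n][dy][dx] * burst[n][yy][xx]
            out[y][x] = acc
    return out

def make_tap_kernels(height, width, num_frames, ksize, taps):
    """Hand-built stand-in for predicted kernels (hypothetical helper).

    Every pixel gets the same kernel: frame n contributes weight
    1 / num_frames at the single offset taps[n] = (dy, dx).
    A real predictor would emit a different kernel per pixel.
    """
    radius = ksize // 2
    kernels = []
    for _ in range(height):
        row = []
        for _ in range(width):
            per_pixel = []
            for n in range(num_frames):
                k = [[0.0] * ksize for _ in range(ksize)]
                dy, dx = taps[n]
                k[radius + dy][radius + dx] = 1.0 / num_frames
                per_pixel.append(k)
            row.append(per_pixel)
        kernels.append(row)
    return kernels

# Reference frame and an alternate frame translated one pixel to the right.
frame0 = [[0.0, 1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0, 7.0],
          [8.0, 9.0, 10.0, 11.0]]
frame1 = [[row[0]] + row[:-1] for row in frame0]

# Reading frame 1 at offset dx = +1 undoes the shift while averaging.
kernels = make_tap_kernels(height=3, width=4, num_frames=2, ksize=3,
                           taps=[(0, 0), (0, 1)])
merged = apply_per_pixel_kernels([frame0, frame1], kernels, ksize=3)
print(merged)
```

In this toy case the off-center tap for the second frame compensates for its one-pixel translation, so the merged result matches the reference everywhere except the clamped right border. This is the sense in which a single predicted kernel can both align and average, and inspecting where the weights sit shows how each frame is used.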

2. Related work

Single-image denoising is a longstanding problem, origi-

nating with classical methods like anisotropic diffusion [20]

or total variation denoising [22], which used analytical pri-

ors and non-linear optimization to recover a signal from a

noisy image. These ideas were built upon to develop multi-

image or video denoising techniques such as VBM4D [17]

and non-local means [3, 14], which group similar patches

across time and jointly filter them under the assumption

that multiple noisy observations can be averaged to better

estimate the true underlying signal. Recently these ideas

arXiv:1712.02327v2 [cs.CV] 29 Mar 2018

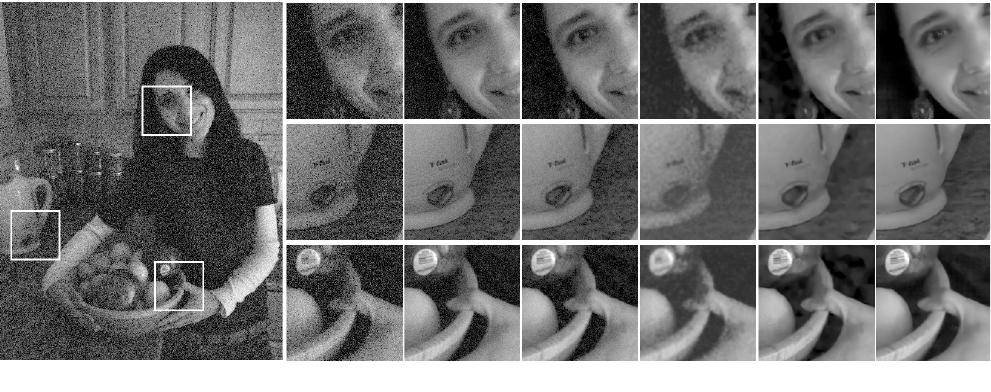

(a) Reference (b) Average (c) HDR+ (d) NLM (e) VBM4D (f) Ours (KPN)Reference frame

Figure 1: A qualitative evaluation of our model on real image bursts from a handheld camera in a low-light environment.

The reference frame from the input burst (a) is sharp, but noisy. Noise can be reduced by simply averaging a burst of similar

images (b), but this can fail in the presence of motion (see Figure 8). Our approach (f) learns to use the information present

in the entire burst to denoise a single frame, producing lower noise and avoiding artifacts compared to baseline techniques (c

– e). See the supplement for full resolution images and more examples.

have been retargeted towards the task of denoising a burst

of noisy images captured from commodity mobile phones,

with an emphasis on energy efficiency and speed [8, 16].

These approaches first align image patches to within a few

pixels and then perform joint denoising by robust averag-

ing (such as Wiener filtering). Another line of work has

focused on achieving high quality by combining multiple

image formation steps with a single linear operator and us-

ing modern optimization techniques to solve the associated

inverse problem [11, 10]. These approaches generalize to

multiple image denoising but require calculating alignment

as part of the forward model.

The success of deep learning has yielded a number of neural network approaches to multi-image denoising [29, 27], in addition to a wide range of similar tasks such as joint denoising and demosaicking [7], deblurring [24], and superresolution [25]. Similar in spirit to our method, Kernel-Predicting Networks [2] denoise Monte Carlo renderings with a network that generates a filter for every pixel in the desired output, which constrains the output space and thereby prevents artifacts. Similar ideas have been applied successfully to both video interpolation [18, 19] and video prediction [6, 15, 28, 5], where applying predicted optical flow vectors or filters to the input image data helps prevent the blurry outputs often produced by direct pixel synthesis networks.

3. Problem specification

Our goal is to produce a single clean image from a noisy burst of $N$ images captured by a handheld camera. Following the design of recent work [8], we select one image $X_1$ in the burst as the “reference” and denoise it with the help of “alternate” frames $X_2, \ldots, X_N$. It is not necessary for $X_1$ to be the first image acquired. All input images are in the raw linear domain to avoid losing signal due to the post-processing performed between capture and display (e.g., demosaicking, sharpening, tone mapping, and compression). Creating training examples for this task requires careful consideration of the characteristics of raw sensor data.

3.1. Characteristics of raw sensor data

Camera sensors output raw data in a linear color space, where pixel measurements are proportional to the number of photoelectrons collected. The primary sources of noise are shot noise, a Poisson process with variance equal to the signal level, and read noise, an approximately Gaussian process caused by a variety of sensor readout effects. These effects are well-modeled by a signal-dependent Gaussian distribution [9]:

$$x_p \sim \mathcal{N}\left(y_p,\ \sigma_r^2 + \sigma_s y_p\right) \tag{1}$$

where $x_p$ is a noisy measurement of the true intensity $y_p$ at pixel $p$. The noise parameters $\sigma_r$ and $\sigma_s$ are fixed for
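As an illustration of the signal-dependent Gaussian in Equation 1, the following minimal sketch draws noisy measurements from clean intensities. It is not the paper's data pipeline: the function name `add_sensor_noise`, the example values of `sigma_r` and `sigma_s`, the flat test patches, and the clamping of negative intensities to zero inside the variance are all assumptions made for this example.

```python
import math
import random
import statistics

def add_sensor_noise(clean, sigma_r, sigma_s, rng):
    """Sample x_p ~ N(y_p, sigma_r^2 + sigma_s * y_p) for each intensity y_p.

    sigma_r^2 models read noise; sigma_s * y_p models shot noise, whose
    variance grows linearly with the signal level.
    """
    noisy = []
    for y in clean:
        # Clamp at zero so the variance stays non-negative (an assumption).
        variance = sigma_r ** 2 + sigma_s * max(y, 0.0)
        noisy.append(rng.gauss(y, math.sqrt(variance)))
    return noisy

rng = random.Random(0)        # fixed seed so the run is repeatable
sigma_r = 0.02                # hypothetical read-noise parameter
sigma_s = 0.01                # hypothetical shot-noise parameter

dark_patch = [0.05] * 20000   # hypothetical flat dark region
bright_patch = [0.8] * 20000  # hypothetical flat bright region

var_dark = statistics.pvariance(
    add_sensor_noise(dark_patch, sigma_r, sigma_s, rng))
var_bright = statistics.pvariance(
    add_sensor_noise(bright_patch, sigma_r, sigma_s, rng))

# Variances predicted by Equation 1 for the two intensities.
expected_dark = sigma_r ** 2 + sigma_s * 0.05
expected_bright = sigma_r ** 2 + sigma_s * 0.8

print(f"dark:   measured {var_dark:.6f}, predicted {expected_dark:.6f}")
print(f"bright: measured {var_bright:.6f}, predicted {expected_bright:.6f}")
```

With these example parameters the bright patch is noticeably noisier than the dark one in absolute terms, which is the signal dependence that a single fixed noise variance would miss.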
